In a recent article, we described how we used Red Hat Data Grid, built from the Infinispan community project, to deliver a global leaderboard that tracked real-time scores for an online game.

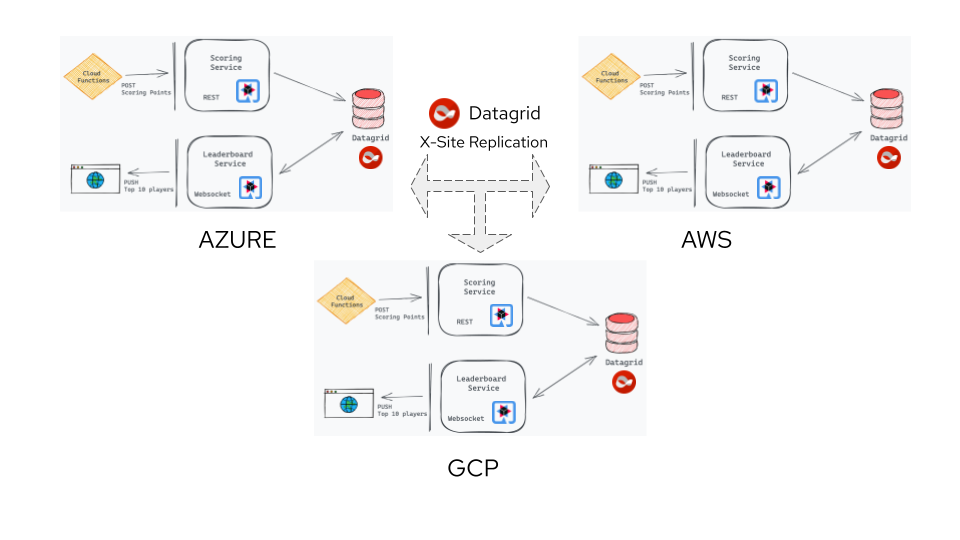

In this article, we’re back to demonstrate how we used the Red Hat Data Grid Operator on Red Hat OpenShift to create and manage services running on AWS (Amazon Web Services), GCP (Google Cloud Platform), and Microsoft Azure. In this case, we used the Data Grid Operator to create a global Data Grid cluster across multiple cloud platforms that appeared as a single Data Grid service to external consumers.

Note: The global leaderboard was featured during Burr Sutter's Red Hat Summit keynote in April 2021. Get a program schedule for the second half of this year's virtual summit, coming June 15 to 16, 2021.

Global leaderboard across the hybrid cloud

Figure 1 shows the global leaderboard that we introduced in our previous article.

As we explained in the previous article, the online game and all related services were hosted on different cloud providers in three separate geographical regions. To ensure the system performed well and was responsive enough to show real-time results for each match, we used Data Grid to provide low-latency access to in-memory data stored close to the player’s physical location.

The leaderboard represents a global ranking of players, so the system needs the scores from all three clusters to determine the overall winners. To achieve this, we devised a solution that brought together the following technologies:

- Indexing and querying caches using Protobuf encoding.

- Quarkus extensions for Infinispan, RESTEasy, WebSockets, and Scheduler.

- Data Grid cross-site replication.

- Data Grid Operator for OpenShift.
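The ranking logic that sits on top of these pieces can be sketched in plain Java. This is a minimal, hypothetical illustration, not the game's actual code. The real system relied on indexed Data Grid queries, and the schema shown later marks `score` and `timestamp` as `@SortableField`. The sketch assumes that each site provides a list of scores, represented by a hypothetical `Score` record that loosely mirrors the `PlayerScore` Protobuf message. It also assumes that a player's rank comes from their single best score and that an earlier timestamp wins a tie.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

public class GlobalLeaderboard {

    // Hypothetical score type, loosely modeled on the PlayerScore Protobuf message.
    public record Score(String userId, int score, long timestamp) {}

    // Assumed ordering: highest score first; on a tie, the earlier timestamp ranks higher.
    static final Comparator<Score> RANKING =
            Comparator.comparingInt(Score::score).reversed()
                      .thenComparingLong(Score::timestamp);

    // Merges the per-site score lists, keeps each player's best entry, and returns the top n.
    public static List<Score> topN(List<List<Score>> perSite, int n) {
        Map<String, Score> best = new HashMap<>();
        for (List<Score> site : perSite) {
            for (Score s : site) {
                // Keep whichever of the two entries ranks higher under RANKING.
                best.merge(s.userId(), s, (a, b) -> RANKING.compare(a, b) <= 0 ? a : b);
            }
        }
        List<Score> ranked = new ArrayList<>(best.values());
        ranked.sort(RANKING);
        return ranked.subList(0, Math.min(n, ranked.size()));
    }

    public static void main(String[] args) {
        List<Score> aws = List.of(new Score("alice", 120, 10L), new Score("bob", 90, 5L));
        List<Score> gcp = List.of(new Score("carol", 120, 7L), new Score("alice", 80, 3L));
        List<Score> azure = List.of(new Score("dave", 60, 1L));
        for (Score s : topN(List.of(aws, gcp, azure), 3)) {
            System.out.println(s.userId() + " " + s.score());
        }
    }
}
```

Running `main` prints carol, alice, and bob, in that order. Carol and alice both score 120, and carol's earlier timestamp wins the tie under the assumed rule.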

Data Grid Operator

To make our lives easier, we created a Data Grid Operator subscription on the OpenShift cluster in each data center. The Operator then automatically configured and managed the Data Grid server nodes and did the heavy lifting to establish cross-site network connections. As a result, we had less deployment complexity to deal with and could concentrate on our implementation. The following Infinispan custom resource contains the Kubernetes API URL for each site and a reference to a secret that holds each cluster's credentials. SITE_NAME is a placeholder for the local site (AWS, GCP, or AZURE). We referenced each site name in our caches' <backups/> configuration.

```yaml
apiVersion: infinispan.org/v1
kind: Infinispan
metadata:
  name: datagrid
spec:
  image: quay.io/infinispan/server:12.1.0.Final
  container:
    cpu: "1000m"
    memory: 4Gi
  expose:
    type: LoadBalancer
  replicas: 2
  security:
    endpointEncryption:
      type: None
    endpointAuthentication: false
  service:
    type: DataGrid
    sites:
      local:
        name: SITE_NAME
        expose:
          type: LoadBalancer
      locations:
        - name: AWS
          url: openshift://api.summit-aws.28ts.p1.openshiftapps.com:6443
          secretName: aws-token
        - name: GCP
          url: openshift://api.summit-gcp.eior.p2.openshiftapps.com:6443
          secretName: gcp-token
        - name: AZURE
          url: openshift://api.g9dkpkud.centralus.aroapp.io:6443
          secretName: azure-token
  logging:
    categories:
      org.jgroups.protocols.TCP: error
      org.jgroups.protocols.relay.RELAY2: error
```
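The same custom resource is applied at every data center, so only the local site name changes from one deployment to the next. Each site's backup locations are the other two entries in the `locations` list above. The following minimal sketch shows that bookkeeping. The class name and the `renderInfinispanCr` helper are hypothetical. The helper assumes plain text substitution of `SITE_NAME`, because the article does not say how the placeholders were actually filled in.

```java
import java.util.ArrayList;
import java.util.List;

public class SitePlaceholders {

    // Site names as declared under spec.service.sites.locations in the Infinispan CR.
    static final List<String> SITES = List.of("AWS", "GCP", "AZURE");

    private static void requireKnown(String site) {
        if (!SITES.contains(site)) {
            throw new IllegalArgumentException("Unknown site: " + site);
        }
    }

    // The remote sites for a given local site, in declaration order.
    // These are the names a cache's <backups/> section on that site would reference.
    public static List<String> backupSites(String local) {
        requireKnown(local);
        List<String> remotes = new ArrayList<>(SITES);
        remotes.remove(local);
        return remotes;
    }

    // Hypothetical helper: plain text substitution of the SITE_NAME placeholder.
    public static String renderInfinispanCr(String template, String local) {
        requireKnown(local);
        return template.replace("SITE_NAME", local);
    }

    public static void main(String[] args) {
        for (String site : SITES) {
            System.out.println(site + " -> backups " + backupSites(site));
        }
    }
}
```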

To create and initialize our caches, we used a combination of the Cache and Batch custom resources, which the Data Grid Operator provides. We'll look at those next.

The Cache custom resource

The caches that we needed to back up to other locations were game, players-scores, and players-shots. We created these caches with the following Cache custom resource, replacing the CACHE_NAME, BACKUP_SITE_1, and BACKUP_SITE_2 placeholders with the correct values for each site deployment:

```yaml
---
apiVersion: infinispan.org/v2alpha1
kind: Cache
metadata:
  name: CACHE_NAME
spec:
  clusterName: datagrid
  name: CACHE_NAME
  adminAuth:
    secretName: cache-credentials
  template: |
    <infinispan>
      <cache-container>
        <distributed-cache name="game" statistics="true">
          <encoding>
            <key media-type="text/plain" />
            <value media-type="text/plain" />
          </encoding>
          <backups>
            <backup site="BACKUP_SITE_1" strategy="ASYNC" enabled="true">
              <take-offline min-wait="60000" after-failures="3" />
            </backup>
            <backup site="BACKUP_SITE_2" strategy="ASYNC" enabled="true">
              <take-offline min-wait="60000" after-failures="3" />
            </backup>
          </backups>
        </distributed-cache>
      </cache-container>
    </infinispan>
```
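The `<take-offline>` element controls when a backup site that keeps failing is taken offline. As we understand the documented semantics, `after-failures` counts consecutive failed backup requests. `min-wait` is the minimum time, in milliseconds, that must pass after the first failure before the site goes offline, even if the failure count has already been reached. The class below is a simplified, hypothetical model of that rule for reasoning about the values used above (3 failures, 60 seconds). It is not Data Grid's implementation. It also assumes that a successful request resets the failure streak and that take-offline is disabled when neither threshold is positive.

```java
public class TakeOfflineModel {

    private final int afterFailures;   // mirrors after-failures="3"
    private final long minWaitMillis;  // mirrors min-wait="60000"
    private int consecutiveFailures = 0;
    private long firstFailureAt = -1;  // -1 means no failure streak is in progress

    public TakeOfflineModel(int afterFailures, long minWaitMillis) {
        this.afterFailures = afterFailures;
        this.minWaitMillis = minWaitMillis;
    }

    // Assumption: a successful backup request ends the current failure streak.
    public void onSuccess() {
        consecutiveFailures = 0;
        firstFailureAt = -1;
    }

    // Records a failed backup request at time 'now' (ms) and reports whether
    // the site would be taken offline under this simplified model.
    public boolean onFailure(long now) {
        if (afterFailures <= 0 && minWaitMillis <= 0) {
            return false; // assumption: no thresholds means take-offline is disabled
        }
        if (consecutiveFailures == 0) {
            firstFailureAt = now;
        }
        consecutiveFailures++;
        boolean enoughFailures = afterFailures <= 0 || consecutiveFailures >= afterFailures;
        boolean waitedLongEnough = minWaitMillis <= 0 || now - firstFailureAt >= minWaitMillis;
        return enoughFailures && waitedLongEnough;
    }

    public static void main(String[] args) {
        TakeOfflineModel model = new TakeOfflineModel(3, 60_000L);
        System.out.println(model.onFailure(0));      // 1 failure
        System.out.println(model.onFailure(1_000));  // 2 failures
        System.out.println(model.onFailure(2_000));  // 3 failures, only 2 s elapsed
        System.out.println(model.onFailure(61_000)); // 4 failures, 61 s since the first
    }
}
```

Under this model, three quick failures are not enough on their own with the configuration above. The site stays online until at least a minute has passed since the first failure in the streak, which tolerates brief network problems between clouds.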

The Batch custom resource

To create the other caches we needed for our system, and to upload all our *.proto (Protobuf) schemas to our Data Grid cluster, we used the Batch custom resource. The Batch custom resource uses the Data Grid command-line interface (CLI) to execute the batch file stored in a ConfigMap. This let us use the full capabilities of the CLI to manipulate caches without handling authentication and connection details ourselves. Here's the Batch custom resource we used to upload our schema, create the players and match-instances caches at each site, and then put an initial entry into the game cache:

```yaml
apiVersion: infinispan.org/v2alpha1
kind: Batch
metadata:
  name: datagrid
spec:
  cluster: datagrid
  configMap: datagrid-batch
---
apiVersion: v1
kind: ConfigMap
metadata:
  name: datagrid-batch
data:
  LeaderboardServiceContextInitializer.proto: |
    // File name: LeaderboardServiceContextInitializer.proto
    // Generated from : com.redhat.model.LeaderboardServiceContextInitializer
    syntax = "proto2";
    package com.redhat;

    enum GameStatus {
      PLAYING = 1;
      WIN = 2;
      LOSS = 3;
    }

    enum ShipType {
      CARRIER = 1;
      SUBMARINE = 2;
      BATTLESHIP = 3;
      DESTROYER = 4;
    }

    /**
     * @Indexed
     */
    message PlayerScore {
      /**
       * @Field(index=Index.YES, analyze = Analyze.NO, store = Store.YES)
       */
      optional string userId = 1;
      optional string matchId = 2;
      /**
       * @Field(index=Index.YES, analyze = Analyze.NO, store = Store.YES)
       */
      optional string gameId = 3;
      optional string username = 4;
      /**
       * @Field(index=Index.YES, analyze = Analyze.NO, store = Store.YES)
       */
      optional bool human = 5;
      /**
       * @Field(index=Index.YES, analyze = Analyze.NO, store = Store.YES)
       * @SortableField
       */
      optional int32 score = 6;
      /**
       * @Field(index=Index.YES, analyze = Analyze.NO, store = Store.YES)
       * @SortableField
       */
      optional int64 timestamp = 7;
      /**
       * @Field(index=Index.YES, analyze = Analyze.NO, store = Store.YES)
       */
      optional GameStatus gameStatus = 8;
      /**
       * @Field(index=Index.YES, analyze = Analyze.NO, store = Store.YES)
       */
      optional int32 bonus = 9;
    }

    /**
     * @Indexed
     */
    message Shot {
      /**
       * @Field(index=Index.YES, analyze = Analyze.NO, store = Store.YES)
       */
      required string userId = 1;
      required string matchId = 2;
      /**
       * @Field(index=Index.YES, analyze = Analyze.NO, store = Store.YES)
       */
      optional string gameId = 3;
      /**
       * @Field(index=Index.YES, analyze = Analyze.NO, store = Store.YES)
       */
      optional bool human = 4;
      /**
       * @Field(index=Index.YES, analyze = Analyze.NO, store = Store.YES)
       */
      optional int64 timestamp = 5;
      /**
       * @Field(index=Index.YES, analyze = Analyze.NO, store = Store.YES)
       */
      optional ShotType shotType = 6;
      /**
       * @Field(index=Index.YES, analyze = Analyze.NO, store = Store.YES)
       */
      optional ShipType shipType = 7;
    }

    enum ShotType {
      HIT = 1;
      MISS = 2;
      SUNK = 3;
    }
  batch: |
    schema --upload=/etc/batch/LeaderboardServiceContextInitializer.proto LeaderboardServiceContextInitializer.proto
    create cache --file=/etc/batch/match-instances.xml match-instances
    create cache --file=/etc/batch/players.xml players
    cd caches/game
    put --encoding=application/json --file=/etc/batch/game-config.json game
  game-config.json: "{\n\t\"id\": \"uuidv4\",\n\t\"state\": \"lobby\"\n}\n"
  game.xml: |
    <infinispan>
      <cache-container>
        <distributed-cache name="game" statistics="true">
          <encoding>
            <key media-type="text/plain" />
            <value media-type="text/plain" />
          </encoding>
        </distributed-cache>
      </cache-container>
    </infinispan>
  match-instances.xml: |
    <infinispan>
      <cache-container>
        <distributed-cache name="match-instances" statistics="true">
          <encoding>
            <key media-type="text/plain" />
            <value media-type="text/plain" />
          </encoding>
        </distributed-cache>
      </cache-container>
    </infinispan>
  players.xml: |
    <infinispan>
      <cache-container>
        <distributed-cache name="players" statistics="true">
          <encoding>
            <key media-type="text/plain" />
            <value media-type="text/plain" />
          </encoding>
        </distributed-cache>
      </cache-container>
    </infinispan>
```
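Every `/etc/batch/...` path in the batch script must match a key in the `datagrid-batch` ConfigMap. The paths in the script suggest that each ConfigMap entry appears as a file in that directory when the batch runs. The following hypothetical pre-flight check scans the script text for those paths and reports any that have no matching key, which can catch a typo before the Batch resource is applied. The class and method names are illustrative, and the check works on plain strings rather than on the Kubernetes API.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Set;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

public class BatchFileCheck {

    // Matches references such as --upload=/etc/batch/x.proto or --file=/etc/batch/players.xml
    private static final Pattern FILE_REF = Pattern.compile("/etc/batch/([A-Za-z0-9._-]+)");

    // Returns each referenced file name (once) that has no matching ConfigMap key.
    public static List<String> missingKeys(String batchScript, Set<String> configMapKeys) {
        List<String> missing = new ArrayList<>();
        Matcher m = FILE_REF.matcher(batchScript);
        while (m.find()) {
            String name = m.group(1);
            if (!configMapKeys.contains(name) && !missing.contains(name)) {
                missing.add(name);
            }
        }
        return missing;
    }

    public static void main(String[] args) {
        String batch = String.join("\n",
            "schema --upload=/etc/batch/LeaderboardServiceContextInitializer.proto LeaderboardServiceContextInitializer.proto",
            "create cache --file=/etc/batch/match-instances.xml match-instances",
            "create cache --file=/etc/batch/players.xml players",
            "cd caches/game",
            "put --encoding=application/json --file=/etc/batch/game-config.json game");
        Set<String> keys = Set.of("LeaderboardServiceContextInitializer.proto", "batch",
            "game-config.json", "game.xml", "match-instances.xml", "players.xml");
        System.out.println("Missing: " + missingKeys(batch, keys)); // Missing: []
    }
}
```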

Conclusion

In this article, we've shown you how we used the Data Grid Operator to deploy a system built with Red Hat Data Grid and Quarkus (with the RESTEasy, WebSockets, Scheduler, and infinispan-client extensions), along with Protobuf encoding. The resulting system creates a global ranking of game players across three different cloud providers in separate geographic regions. We hope these details will inspire you to start using Data Grid, or the Infinispan project, for other hybrid cloud use cases. If you're interested in finding out more, start with the Data Grid project page or visit our Infinispan community website.

Last updated: September 19, 2023