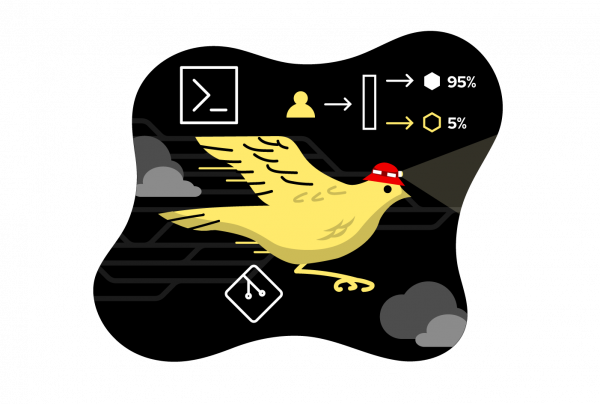

Overview: Run the Canary Deployment pattern on Kubernetes

One of the benefits of using containers to develop applications is the ease and speed with which you can deploy new versions. A rolling update can be performed in seconds, after which your new version will be available.

The downside is that it is just as easy to roll out a buggy version of your application. That speed cuts both ways, but some deployment patterns help reduce the risk. One of them is the Canary Deployment, in which a new version initially serves only a small share of traffic alongside the current one.

In this learning path, you will use basic Kubernetes skills to understand and implement the Canary Deployment.

Prerequisites

- A Developer Sandbox for Red Hat OpenShift (Developer Sandbox) account

- The OpenShift command-line interface (CLI)

- Comfort with working at the command line and using Bash or PowerShell

- Access to a web browser to obtain your Red Hat OpenShift login token

In this learning path, you will:

- Log in to your Developer Sandbox at the command line

- Spin up a host service

- Run a curl command loop

- Set a pod count

- Spin up a different version

- Patch both versions to use the same route

- Change the pod counts

- Observe the results
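
To make the idea behind "change the pod counts" concrete, here is a minimal Bash sketch, not the learning path's own script. It assumes that requests arriving at the shared route are spread roughly evenly across all pods, so the canary's share of traffic follows its share of the pod count. The function names `canary_share` and `curl_loop`, the pod counts, and the `ROUTE_URL` variable mentioned afterward are hypothetical placeholders chosen for illustration.

```bash
#!/usr/bin/env bash
# Sketch only: names and numbers here are illustrative assumptions.

# Print the expected percentage of requests served by the canary version,
# assuming traffic is spread evenly across every pod behind the route.
# Uses integer division, so the result is rounded down.
canary_share() {
  local stable_pods=$1 canary_pods=$2
  local total=$(( stable_pods + canary_pods ))
  if (( total == 0 )); then
    echo 0
    return
  fi
  echo $(( 100 * canary_pods / total ))
}

# Send a fixed number of requests to a URL, printing one response per line.
# Defined but not called here, so the sketch runs without network access.
curl_loop() {
  local url=$1 count=$2 i
  for (( i = 0; i < count; i++ )); do
    curl -s "$url"
    echo
  done
}

# Three pods of the current version and one canary pod.
canary_share 3 1   # prints 25
```

Once both versions answer on the same route, running something like `curl_loop "$ROUTE_URL" 20` (with `ROUTE_URL` set to your own route) should show the two versions responding in about the predicted proportion. Expect some variation over a small number of requests.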