Build here. Go anywhere.

Discover Red Hat’s latest solutions for building applications with more flexibility, scalability, security, and reliability.

Rapidly build, test, and deploy applications that make work better for your organization.

Using API Management for applications during modernization

Accurately labeled data is crucial for training AI models. Learn how to...

Learn how to configure Red Hat OpenShift AI to train a YOLO model using an...

Red Hat Enterprise Linux (RHEL) 9.4 is now generally available (GA). Learn...

A Red Hat Developer membership comes with many benefits, including no-cost access to products such as Red Hat Enterprise Linux (RHEL), Red Hat OpenShift, and Red Hat Ansible Automation Platform.

1 year of access to all Red Hat products

Developer learning resources

Virtual and in-person tech events

Red Hat Customer Portal access

Exclusive content

Access to the Developer Sandbox for Red Hat OpenShift, a shared OpenShift and Kubernetes cluster for practicing your skills

This year’s session catalog is now available, with 300+ keynotes, breakout sessions, hands-on labs, and more.

Boost your technical skills to expert level with our interactive learning tutorials.

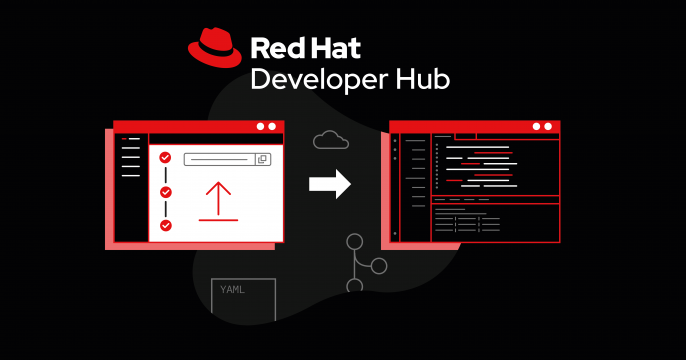

Explore developer resources and tools that help you build, deliver, and manage innovative cloud-native apps and services.

Explore insights, news, and tutorials on Red Hat developer tools, platforms, and more.