Free e-book: DevOps with OpenShift

Experts explain how to configure Docker application containers and the Kubernetes cluster manager with OpenShift’s developer- and operations-centric tools. Discover how this infrastructure-agnostic container management platform can help companies navigate the murky area where infrastructure-as-code ends and application automation begins.

The book covers the following topics:

- Why automation is important

- Patterns and practical examples for managing continuous deployment strategies such as rolling, A/B, blue-green, and canary

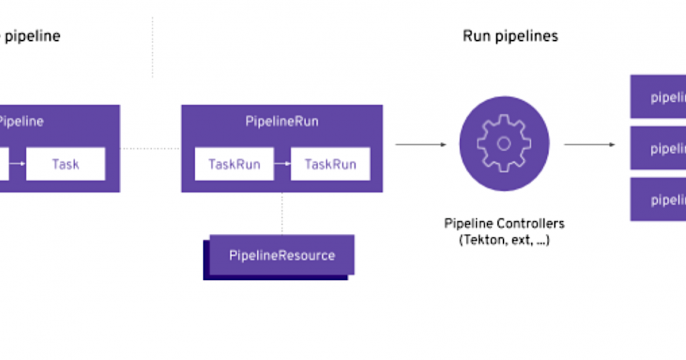

- Implementing continuous integration pipelines with OpenShift’s CI/CD capability

- Mechanisms for separating and managing configuration from static runtime software

- Customizing OpenShift’s source-to-image capability

- Considerations when working with OpenShift-based application workloads

- Self-contained local versions of the OpenShift environment on your computer