Apache Kafka is a rock-solid, super-fast, event streaming backbone that is not only for microservices. It's an enabler for many use cases, including activity tracking, log aggregation, stream processing, change-data capture, Internet of Things (IoT) telemetry, and more.

Red Hat AMQ Streams makes it easy to run and manage Kafka natively on Red Hat OpenShift. AMQ Streams' upstream project, Strimzi, does the same thing for Kubernetes.

Setting up a Kafka cluster on a developer's laptop is fast and easy, but in some environments, the client setup is harder. Kafka uses its own binary protocol over TCP and has clients available for many different programming languages. Only the Java (JVM) client is part of Kafka's main codebase, however.

In many scenarios, installing and setting up a Kafka client manually is difficult or impossible, or we simply don't want to put in the effort. A hidden gem in AMQ Streams can be a big help to developers who want to talk to Kafka without the bother of setting up a client. In this article, you will get started with Red Hat AMQ Streams Kafka Bridge, a RESTful interface for producing to and consuming from Kafka topics using HTTP/1.1.

Note: The Kafka HTTP bridge is available from AMQ Streams 1.3 and Strimzi 0.12 forward.

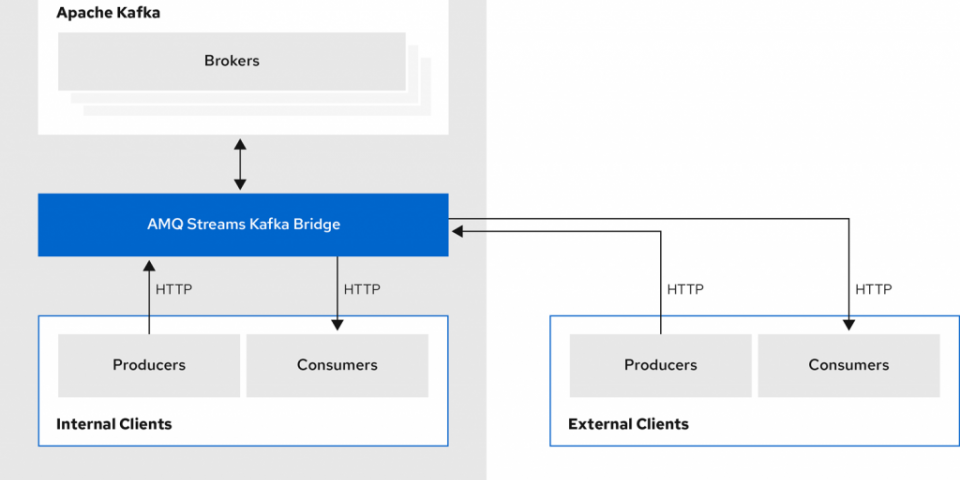

Figure 1 shows AMQ Streams Kafka Bridge in a typical Apache Kafka messaging system.

Getting started with AMQ Streams Kafka Bridge

To use AMQ Streams, you need an OpenShift cluster, 3.11 or newer, and a user with the cluster-admin role.

I tested the code for this article on a developer laptop with Red Hat Enterprise Linux (RHEL) 7.6 and Red Hat CodeReady Containers (CRC) 1.9 running OpenShift 4.3.1. I suggest running CRC with at least 16 GB of memory and eight cores, but it's up to you. (Just don't be too stingy; otherwise, you might have issues starting the Kafka cluster.)

The five-minute installation

First, we will install the Kafka custom resource definitions (CRDs) and role-based access control (RBAC) resources in a dedicated project named kafka. Then, we'll install a Kafka cluster named my-cluster in a second project, my-kafka-project.

- Download the AMQ Streams 1.4 OpenShift Container Platform (OCP) installation and examples. Unzip the file and change into the folder amq-streams-1.4.0-ocp-install-examples.

- Log in to your cluster as a user with the cluster-admin role. On CRC, use the command:

$ oc login -u kubeadmin [...]

- Install the Cluster Operator into the kafka project:

$ sed -i 's/namespace: .*/namespace: kafka/' install/cluster-operator/*RoleBinding*.yaml
$ oc new-project kafka
$ oc project kafka
$ oc apply -f install/cluster-operator/

- Install the Topic and Entity Operators in a Kafka cluster project:

$ oc new-project my-kafka-project
$ oc set env deploy/strimzi-cluster-operator STRIMZI_NAMESPACE=kafka,my-kafka-project -n kafka
$ oc apply -f install/cluster-operator/020-RoleBinding-strimzi-cluster-operator.yaml -n my-kafka-project
$ oc apply -f install/cluster-operator/032-RoleBinding-strimzi-cluster-operator-topic-operator-delegation.yaml -n my-kafka-project
$ oc apply -f install/cluster-operator/031-RoleBinding-strimzi-cluster-operator-entity-operator-delegation.yaml -n my-kafka-project
$ oc apply -f install/strimzi-admin

- Create the Kafka cluster:

$ oc project my-kafka-project
$ cat << EOF | oc create -f -
apiVersion: kafka.strimzi.io/v1beta1
kind: Kafka
metadata:
  name: my-cluster
spec:
  kafka:
    replicas: 3
    listeners:
      plain: {}
      tls: {}
      external:
        type: route
    storage:
      type: ephemeral
  zookeeper:
    replicas: 3
    storage:
      type: ephemeral
  entityOperator:
    topicOperator: {}
EOF

- Wait for the cluster to start:

$ oc wait kafka/my-cluster --for=condition=Ready --timeout=300s -n my-kafka-project

That's it! The Kafka cluster is up and running.
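To see what the sed command in the Cluster Operator step does, the following sketch runs the same substitution on a small, made-up RoleBinding fragment instead of the real installation files. The fragment and its starting namespace value (myproject) are assumptions for illustration; only the sed expression comes from the installation step.

```shell
# Hypothetical fragment of a RoleBinding file; the real files live in install/cluster-operator/.
sample='subjects:
  - kind: ServiceAccount
    name: strimzi-cluster-operator
    namespace: myproject'

# Same expression as in the installation step:
# replace whatever follows "namespace: " with "kafka".
rewritten=$(printf '%s\n' "$sample" | sed 's/namespace: .*/namespace: kafka/')
printf '%s\n' "$rewritten"
```

Without this rewrite, the RoleBindings would keep pointing at whatever namespace the shipped files name rather than at the kafka project.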

Install AMQ Streams Kafka Bridge

Installing the Kafka HTTP bridge for AMQ Streams requires just one YAML file:

$ oc apply -f examples/kafka-bridge/kafka-bridge.yaml

Once you have applied the file, the Cluster Operator will create a deployment, a service, and a pod for the bridge.

Expose the bridge outside of OCP

We've installed and configured the bridge, but we can only access it inside the cluster. Use the following command to expose it outside of OpenShift:

$ oc expose service my-bridge-bridge-service

The bridge itself doesn't provide any security, but we can secure it with other methods such as network policies, a reverse proxy (for example, with OAuth), or Transport Layer Security (TLS) termination. If we want a more full-featured solution, we can use the bridge with a 3scale API Gateway that includes TLS authentication and authorization as well as metrics, rate limits, and billing.

Note: The Kafka HTTP bridge supports TLS or Simple Authentication and Security Layer (SASL)-based authentication, and TLS-encrypted connections when connected to a Kafka cluster. It's also possible to install many bridges, choosing between internal or external implementations, each with different authentication mechanisms and different access control lists.

Verify the installation

Let's check to see whether the bridge is available:

$ BRIDGE=$(oc get routes my-bridge-bridge-service -o=jsonpath='{.status.ingress[0].host}{"\n"}')

$ curl -v $BRIDGE/healthy
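Right after the route is created, the bridge pod may still be starting, so a single request can fail. The following is a minimal sketch of a retry helper for that case. The function name retry, the 30 attempts, and the 2-second delay are illustrative choices and not part of AMQ Streams. The check runs only when the BRIDGE variable is set.

```shell
# retry ATTEMPTS DELAY CMD...: run CMD until it succeeds or ATTEMPTS is exhausted.
retry() {
  attempts=$1
  delay=$2
  shift 2
  n=1
  while :; do
    "$@" && return 0
    [ "$n" -ge "$attempts" ] && return 1
    n=$((n + 1))
    sleep "$delay"
  done
}

# Only contact the bridge when BRIDGE is set.
if [ -n "${BRIDGE:-}" ]; then
  # -f makes curl exit non-zero on HTTP errors; -s hides the progress output.
  if retry 30 2 curl -sf -o /dev/null "$BRIDGE/healthy"; then
    echo "bridge is healthy"
  else
    echo "bridge did not become healthy in time" >&2
  fi
fi
```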

The bridge also publishes an OpenAPI specification of its REST API:

$ curl -X GET $BRIDGE/openapi

Using AMQ Streams Kafka Bridge

At this point, everything is ready to produce and consume messages using the AMQ Streams Kafka Bridge. We'll go through a quick demonstration together.

Produce and consume system logs

Log ingestion is one of the common use cases for Kafka. We are going to fill a Kafka topic with our system logs, but they can come from any system that supports HTTP. Likewise, the logs can be consumed by other systems.

Start by creating a topic named machine-log-topic:

$ cat << EOF | oc create -f -
apiVersion: kafka.strimzi.io/v1beta1
kind: KafkaTopic
metadata:
  name: machine-log-topic
  labels:
    strimzi.io/cluster: "my-cluster"
spec:
  partitions: 3
  replicas: 3
EOF

Then, fill the topic with data using curl and jq:

$ journalctl --since "5 minutes ago" -p "emerg".."err" -o json-pretty | \
    jq --slurp '{records:[.[]|{"key":.__CURSOR,value: .}]}' - | \
    curl -X POST $BRIDGE/topics/machine-log-topic -H 'content-type: application/vnd.kafka.json.v2+json' -d @-

For JSON payloads, the content type is application/vnd.kafka.json.v2+json. For the binary data format, use application/vnd.kafka.binary.v2+json instead; in that case, the bridge expects Base64-encoded values.
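As a sketch of the binary format, the helper below Base64-encodes a raw value and wraps it in the same records envelope used for JSON. The function name encode_record and the topic my-binary-topic are hypothetical. A separate topic is used so that binary records do not mix with the JSON records that the consumer in this article expects, and the example assumes that this topic exists or that the cluster creates topics automatically.

```shell
# encode_record VALUE: print a binary-format payload holding one Base64-encoded record.
encode_record() {
  # printf avoids the trailing newline that echo would add;
  # tr removes any line wrapping that base64 inserts for long input.
  value_b64=$(printf '%s' "$1" | base64 | tr -d '\n')
  printf '{"records":[{"value":"%s"}]}\n' "$value_b64"
}

payload=$(encode_record 'hello kafka')
printf '%s\n' "$payload"

# Send the payload only when BRIDGE is set; my-binary-topic is a hypothetical topic name.
if [ -n "${BRIDGE:-}" ]; then
  curl -X POST "$BRIDGE/topics/my-binary-topic" \
    -H 'content-type: application/vnd.kafka.binary.v2+json' \
    -d "$payload"
fi
```

A consumer reading such a topic would need to be created with "format": "binary" rather than "json".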

Consuming messages

Now we have messages to consume. Before we can consume from a topic, we have to add our consumer to a consumer group. Then, we must subscribe the consumer to the topic. In this example, we include the consumer my-consumer in the consumer group my-group:

$ CONS_URL=$(curl -s -X POST $BRIDGE/consumers/my-group -H 'content-type: application/vnd.kafka.v2+json' \
    -d '{
      "name": "my-consumer",
      "format": "json",
      "auto.offset.reset": "earliest",
      "enable.auto.commit": true
    }' | \
    jq .base_uri | \
    sed 's/\"//g')

Next, we subscribe it to the topic machine-log-topic:

$ curl -v $CONS_URL/subscription -H 'content-type: application/vnd.kafka.v2+json' -d '{"topics": ["machine-log-topic"]}'

And now we are ready to consume:

$ curl -X GET $CONS_URL/records -H 'accept: application/vnd.kafka.json.v2+json' | jq
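The first request to the records endpoint can come back as an empty array, because the consumer may still be joining its group; repeating the request usually returns the messages. The sketch below polls a few times until records arrive. The function names fetch_records and poll_records and the attempt count are illustrative, and the check assumes that an empty result is returned exactly as [].

```shell
# fetch_records: one GET against the consumer's records endpoint.
fetch_records() {
  curl -s -X GET "$CONS_URL/records" -H 'accept: application/vnd.kafka.json.v2+json'
}

# poll_records ATTEMPTS: call fetch_records until the response is non-empty and not "[]".
poll_records() {
  left=$1
  while [ "$left" -gt 0 ]; do
    body=$(fetch_records)
    if [ -n "$body" ] && [ "$body" != "[]" ]; then
      printf '%s\n' "$body"
      return 0
    fi
    left=$((left - 1))
    sleep 1
  done
  return 1
}

# Only poll when CONS_URL is set by the consumer-creation step.
if [ -n "${CONS_URL:-}" ]; then
  poll_records 5 || echo "no records received" >&2
fi
```

This sketch does not distinguish records from an error response, so treat any unexpected output as a cue to inspect the request with curl -v.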

Conclusion

Integrating old but good services or devices into a bleeding-edge microservice architecture can be challenging. But if you can live without the hyperspeed messaging of a native Kafka client, the Apache Kafka HTTP bridge allows those services, with just a little bit of HTTP/1.1, to leverage the power of Apache Kafka.

The Apache Kafka HTTP bridge is easy to set up and integrate using its REST API, and it lets any system that can send an HTTP request act as a Kafka producer or consumer. In this article, I've shown you a quick installation procedure for deploying AMQ Streams Kafka Bridge on OCP, then demonstrated a producer-consumer messaging scenario using logging data over HTTP.

Last updated: October 28, 2020