Red Hat Summit provides an experience for every type of attendee: whether that means attending as many presentations as possible to glean best practices related to open source technology, visiting as many booths in the Partner Pavilion as possible to see how vendors are enabling open source solutions (or to snag as much swag as possible), or attending hands-on labs and training sessions to gain practical experience with guidance from experts. 2017 was my fourth Red Hat Summit, and in each of my prior appearances I had the opportunity to participate in both traditional breakout sessions and demonstrations at the Red Hat booth in the Partner Pavilion. One aspect of Red Hat Summit that I had yet to participate in was delivering one of the many hands-on labs available to attendees. For those unfamiliar with a hands-on lab at Red Hat Summit, each session is a two-hour, instructor-led course that allows attendees to test drive many popular open source tools and technologies, giving them firsthand experience with many of the concepts discussed during Summit. What I did not anticipate going in was the amount of coordination and hard work needed for the labs to flow seamlessly. Since attendees may not appreciate this effort either, this write-up provides insight into what it takes to put together and execute a successful hands-on lab at Red Hat Summit.

An Idea to Bring to the Masses

A good part of my job at Red Hat entails working with multiple teams across the Red Hat portfolio, ranging from the underlying platform (Red Hat Enterprise Linux) to middleware and the cloud. Since I have been working extensively with Red Hat OpenShift Container Platform, it would be the focal point of any lab that I produced for Summit. However, a lab that demonstrates only how to install OpenShift or deploy an application would not provide much value to attendees, since those steps are already covered by the OpenShift installation process and the core components of the product. In my work with customers around the world, I am frequently asked not only how to automate the installation of OpenShift but also how to perform ongoing maintenance and monitoring of the platform. OpenShift cannot perform all of these functions by itself, but fortunately, Red Hat has solutions for this problem set. The challenge was finding experts who could help address these requirements and tune the lab to fit the hardware provided for Summit.

Build the Right Team

Organizing and delivering a Red Hat Summit hands-on lab is not a task that a single individual can accomplish. Instead, a team of highly skilled experts must work together to develop and deliver a solution that benefits attendees. Given the complex set of goals for this lab, the team needed individuals with the expertise to pull off this feat. Fortunately, I was able to work with some of the best Red Hat has to offer:

- Andrew Block - Principal Consultant specializing in container-based application development and deployment.

- Scott Collier - Atomic OpenShift Group 4 Lead, driving projects such as OpenShift Container Platform provider integration as well as onboarding partner/ISV applications to OpenShift.

- Jason DeTiberus - One of the original creators of the OpenShift Ansible project and an overall expert on Ansible automation.

- Vinny Valdez - Infrastructure expert and automation enthusiast with a strong background in OpenStack as a former Consulting Architect, and one of the original writers of the OpenStack Architecture Design Guide.

Each member of the team came from a different group within Red Hat, which provided diverse perspectives on how the lab would be composed and delivered.

Devise a Solution

One of the first tasks the team needed to agree upon was a high-level overview of the solution to be delivered. Each of us had our own set of skills and ambitions for what we wanted to showcase, and narrowing down the scope was challenging since each of us wanted to contribute as much as possible. The team originally formed after we submitted similar topics to an internal document collecting Summit lab ideas and themes. After numerous conversations and deliberations, the initial team (Scott, Jason, and I) agreed upon several high-level patterns that would shape the structure of the lab.

Automate Everything using Ansible

Since Ansible is at the core of the OpenShift installation process, it was a logical choice not only to use the baseline functionality provided by the installer but also to manage the provisioning and configuration of the underlying instances. To add management and monitoring capabilities, Ansible Tower was brought in to centralize the overall execution. Ansible Tower references playbooks and roles stored in a Git repository, controls the execution of each job through role-based access control, and logs the results of each run for later analysis.

Develop a Proposal

Just like any other breakout session at Red Hat Summit, hands-on labs go through the Call for Proposals (CFP) process that determines which labs appear at the event. CFPs are due approximately six months prior to the conference. The CFP process required the team to take the high-level goals that had been agreed upon and draft a paragraph describing to the conference organizers what would be delivered as part of the lab. The key to any successful CFP is to be succinct about the overall topic and attendee outcome while avoiding backing yourself into a corner, leaving the flexibility to introduce additional concepts or technologies later.

Wait, Pray, Rinse, Repeat

Once the Call for Proposals process closes, it typically takes 45 to 60 days for conference organizers to review the submissions. As the days dwindled, each new email notification felt like waiting for standardized test results. Sure enough, an email arrived confirming that the lab had been accepted onto the Summit schedule. Now the real work was about to begin.

Build Out the Rest of the Solution

Since Ansible was chosen as the mechanism to drive automation around the installation and management of OpenShift, the remaining components for the lab needed to be determined.

A Runtime Environment to Deploy OpenShift

A typical production-like deployment of OpenShift requires multiple server instances: masters that schedule workloads and nodes on which those workloads ultimately run. These servers can be bare-metal or virtual machines provisioned by any means. However, a technical requirement for this lab was to deliver KVM qcow2 images. As a team, we created a decision matrix listing the possible ways to provide these servers along with the advantages and disadvantages of each approach. To satisfy these requirements, and to give students the ability to dynamically scale the environment to demonstrate adding resources, Red Hat OpenStack Platform was chosen as the IaaS layer to host the server instances for the OpenShift deployment. OpenStack also offered several advantages, such as the ability to use Cinder volume-backed Glance images with sparsified volumes, Swift for object storage for OpenShift, and a rich set of APIs that are easily consumed by Ansible modules.

Monitoring the Environment

A complex environment, especially one that uses both Red Hat OpenStack Platform and OpenShift Container Platform, requires ample monitoring to ensure its overall health and stability. To provide complete coverage of both the IaaS and PaaS environments, Red Hat CloudForms was selected as the tool of choice. The team was also interested in exploring a containerized deployment of CloudForms that was scheduled to be released in the weeks leading up to the Red Hat Summit lab. Taking on the risk of bleeding-edge technology is often what provides the most value to attendees.

Understand the Target Environment

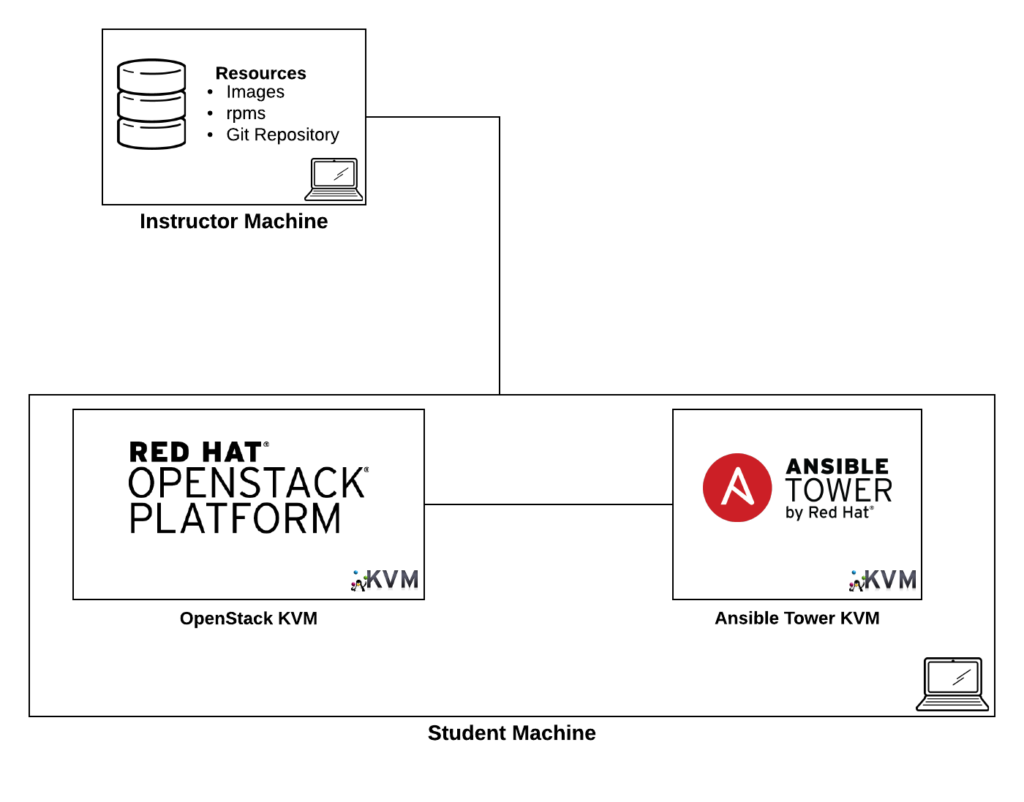

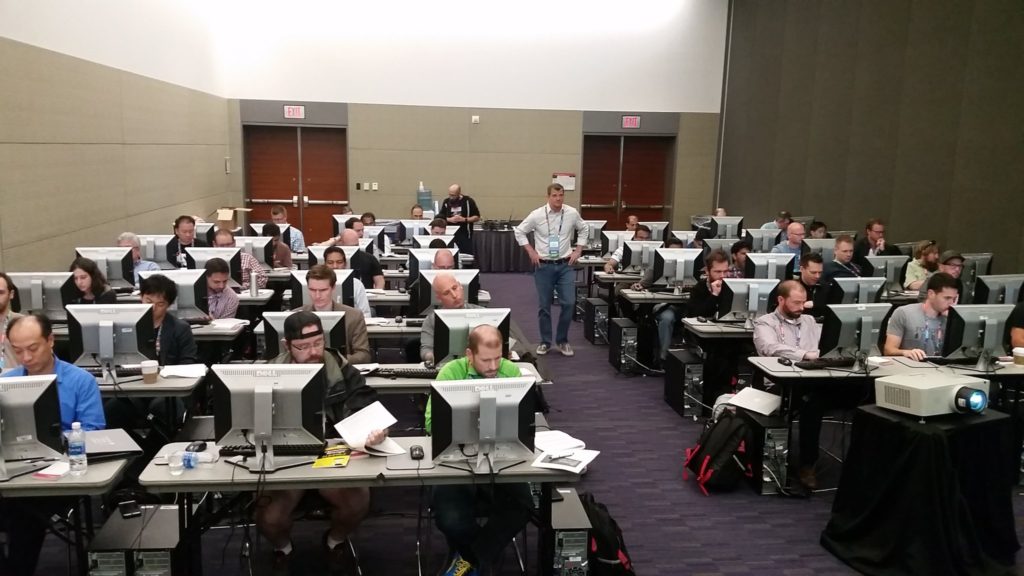

With the majority of the primary components decided, focus then shifted to how everything could be packaged and delivered within the two-hour lab timeframe. Since this was my first lab, I had no prior knowledge of how a lab was executed. Fortunately, Scott had run several labs in past years and was able to help bridge the learning curve.

To allow for the execution of 45 labs, many of them running concurrently over the course of the three-day conference, lab teams had to adhere to specific guidelines. These conditions were set forth by the lab organizers, whose job was to ensure the smooth execution of each lab from both the presenter and attendee perspectives. Each lab classroom consisted of 50 student machines, and to support turnaround times as short as 10 minutes between sessions, teams were expected to deliver their lab to the organizers as one or more KVM images that could be easily distributed to the environment.

Given the large footprint needed to deploy each lab component, one of the primary themes throughout the planning and most of the execution phase was whether the classroom machines would be able to run the lab as intended. Each product has its own set of recommendations and minimum requirements, and ensuring that each would be satisfied became a make-or-break concern. The lab team worked closely with the lab organizers to assess the on-site hardware and determine feasibility, using email and video conferencing to work through any questions or concerns and ultimately agree upon a solution.

In the end, the components of the lab could be deployed successfully by leveraging multiple KVM images spanning both the instructor machine and each student workstation. This required advanced image optimization techniques, because running instances on top of OpenStack, which itself runs in a KVM virtual machine, meant relying on nested virtualization.

Project Management is Key

Organizing a complex lab with a distributed team can be challenging, especially when each member of the team has a full-time day job with its own set of responsibilities. Several steps were taken to ensure that the necessary tasks and resources could be managed effectively.

- The team adopted Agile methodologies for managing the lab as a whole. A scrum board on Trello tracked upcoming (backlog), in-progress, and completed tasks. The board is publicly accessible to provide full transparency into the work being accomplished.

- Initially, the team met weekly for 30 minutes in a standup fashion to discuss what each member was working on, any blockers, and what they planned to work on over the following week. As the lab drew closer, the weekly standup turned into a traditional daily standup, since more frequent updates became imperative.

- Real-time collaboration became essential as the team evolved the solution and worked through challenges. Slack served as the medium for ongoing discussions of the lab between team members. It became a lifeline for me personally as I hopped around the United States visiting various Red Hat customers, since I could stay connected to the ongoing work from my mobile device. Video conferencing supplemented communication via Slack, helping bridge the gap of working in a distributed manner and allowing team members to bring ideas to life.

- Internally, Red Hat uses Google's suite of productivity products. While not used extensively, the word processing and presentation tools were used to collaborate on the lab guide and slide deck that were given to attendees and posted online.

- The actual assets that would eventually be consumed by attendees were stored and versioned in a Git repository. As any good developer would, the team relied on a source control management (SCM) tool, in this case Git, to safely develop the solution in a way that was versioned and open for all to view.

A Little Help Goes a Long Way

You may or may not have noticed that Vinny was introduced as a team member but has not been mentioned since. This is because he was not originally a member of the lab team during the CFP or acceptance process. Vinny had worked with several members of the team previously, myself included, and graciously offered to help as the scope continued to expand and time was starting to run short. His infrastructure and automation expertise helped jumpstart many of the solutions delivered as part of the lab.

Know Your Limits and Adjust

The combination of running OpenShift on top of OpenStack along with the CloudForms Management Engine within OpenShift may sound advantageous to many. The lab team wanted to give attendees a glimpse into the types of complex environments that many of our customers work within. One such area, which has grown in popularity, is Container Native Storage (CNS). CNS in OpenShift uses Red Hat Gluster Storage to deliver a hyper-converged solution in which Gluster runs in containers within OpenShift and serves storage to the environment itself. The OpenShift internal registry and the backing datastore for CloudForms are just some of the resources that require persistent storage. As Summit drew closer, the team decided to forgo the CNS implementation, due in part to the tight timeframe and the need to focus on solidifying the key components of the lab. While the team was disappointed that attendees would not be able to use this functionality, the decision demonstrated how agile methodologies allowed the team to continually reevaluate tasks and project needs and adjust accordingly to make the overall lab a success. Ultimately, Cinder within OpenStack was used as the persistent storage provider for the environment.

Plan for Failure, Hope for the Best

Anyone who has attended a large conference can attest to unreliable network connectivity. Since installing and managing OpenShift draws on a large amount of resources from the public Internet, having 50+ participants concurrently download the required dependencies within the allotted time would not be feasible. To mitigate this concern, another goal was to allow the lab to run fully disconnected from the public Internet. As part of the preparation, all of the needed dependencies were cached into a repository image that would be centrally deployed on the instructor machines. The dependencies included the RPMs for OpenShift, Docker images for the OpenShift infrastructure and applications, and a Git repository containing the sample application that would be deployed during the lab. Extensive testing was performed to verify that the lab could be executed in a fully disconnected environment. When preparing for a session at a conference, always assume that the unexpected will occur. If all goes smoothly, breathe a sigh of relief knowing that you took proactive steps to mitigate potential failures.
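Running fully disconnected comes down to one question: is every artifact the lab needs already present in the local cache? The following is a minimal Python sketch of that pre-flight check, assuming the required and cached artifacts can each be listed as sets of names. The `Manifest` class, the `missing_artifacts` function, and the sample names are hypothetical illustrations, not the team's actual tooling or the lab's real dependency list.

```python
"""Hypothetical pre-flight check for a disconnected lab environment.

Compares the artifacts a lab needs (RPMs, container images, Git repos)
against what has been cached locally. All names here are illustrative.
"""
from dataclasses import dataclass, field


@dataclass
class Manifest:
    # Each field is a set of artifact names of one kind (hypothetical schema).
    rpms: set[str] = field(default_factory=set)
    images: set[str] = field(default_factory=set)
    git_repos: set[str] = field(default_factory=set)


def missing_artifacts(required: Manifest, cached: Manifest) -> dict[str, set[str]]:
    """Return, per artifact kind, everything required but not yet cached."""
    gaps = {
        "rpms": required.rpms - cached.rpms,
        "images": required.images - cached.images,
        "git_repos": required.git_repos - cached.git_repos,
    }
    # Drop empty kinds so an empty dict means "ready to run offline".
    return {kind: items for kind, items in gaps.items() if items}


if __name__ == "__main__":
    # Placeholder names only; a real manifest would be far larger.
    required = Manifest(
        rpms={"example-openshift-rpm", "example-docker-rpm"},
        images={"example/router-image", "example/registry-image"},
        git_repos={"example-sample-app"},
    )
    cached = Manifest(
        rpms={"example-openshift-rpm", "example-docker-rpm"},
        images={"example/router-image"},
        git_repos={"example-sample-app"},
    )
    gaps = missing_artifacts(required, cached)
    print("ready for offline use" if not gaps else f"still missing: {gaps}")
```

Using plain set differences keeps the check independent of how the cache is actually populated; a real setup would build the `cached` manifest by listing the local package repository, image registry, and Git server.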

Gametime

The days leading up to Red Hat Summit were a combination of excitement and sheer terror. Working sessions between team members became a daily occurrence as we finalized last preparations. Only so much could be done remotely; the biggest test would be running the lab on the actual lab machines. Some remote testing was available; however, due to the complexity of this particular lab, full testing would have to wait until the entire team was on site in Boston at Red Hat Summit. The conference ran from Tuesday through Thursday this year, and the lab was scheduled for the afternoon of the final day. To allow ample time for testing and preparation, the entire team arrived on site by Monday morning. As you can imagine, the three days leading up to the lab were a whirlwind. Each member of the team had their own set of priorities at Summit, and satisfying those obligations while performing the necessary preparations became a juggling act. Days were long and stress levels were high. The team ran through the lab countless times. The results of one test run had the team questioning whether the lab could be executed successfully in the environment at all. Fortunately, those fears were put to rest after a few more cycles and some performance tuning.

Finally, after months of preparation, the day of the lab had come upon us. One of the last tasks was to print out the guides that would be provided to each student as they worked through the lab. While the guides were available as a soft copy on each student machine, having a hard copy available gave students the ability to flip through the sections in their lab space without disturbing their work on the machine. Plus, it gave them a tangible artifact that they could walk away with from their Summit experience.

Akin to a set change during a theatrical play, the moments after the prior lab concludes can best be summed up as organized to semi-organized chaos. Participants from the preceding lab exit, and there are only a few moments before eager attendees line up and start entering. Lab machines are wiped and re-imaged, lab guides are distributed, and audio/visual systems, such as the microphones and the machine running the presentation, are tested and validated. Before you know it, it is time for the lab to begin. While I wish I could say that our lab went off without a hitch, we did run into several technical challenges. We threw so much into the lab that it tripped a circuit breaker, cutting power to nearly half of the environment (including the vital instructor machine). While it took about 30 minutes for conference facilities to restore power, the outage did allow for some impromptu, in-depth discussion of the technologies used in the lab. As a consultant, I am always on my toes, and this lab certainly did not disappoint in that respect. Once the initial technical hurdles were resolved, the lab went smoothly. Students were able to complete the assigned tasks and were pleased with the amount of content they were able to learn in such a short amount of time.

Before we knew it, time was up and the next lab team and their attendees were ready to enter. Last minute questions were answered and all of a sudden, it was all over. Since the lab was scheduled during one of the final session slots, the team held a short debrief session outside the lab room and then dispersed to early evening flights and the final sessions.

Post-Summit Access

As mentioned, all of the materials used to create the lab, including the lab images, are available in a GitHub repository. Vinny also put together a great walkthrough of the lab in the form of a video screencast. The video steps through each aspect of the lab and is a great way to get the student experience. The video description also includes links to the aforementioned GitHub repository along with the Summit slide deck and student guide.

Final Thoughts

Looking back, despite the technical issues, the lab was a smashing success. Attendees were able to immerse themselves in the different technologies showcased and see firsthand how this set of tools can help install and manage OpenShift Container Platform. As mentioned to attendees leaving the lab, the tooling used to build and run the lab is open source and available on GitHub for all to use. The lab team is also using the assets produced for this lab as a baseline for future endeavors, and some of the items that were not implemented due to time constraints will be added along the way. Be on the lookout over the course of the next year and at Red Hat Summit 2018 for your opportunity to participate. If you do attend a Summit lab or breakout session, please remember that filling out the survey is vital: the feedback not only helps the instructors improve the material but is also the single factor used to determine whether that session will return the following year. We appreciate everyone who filled out the survey with great feedback that will only make future Summit offerings even better!

Finally, a personal reflection on my experience participating in a hands-on lab at Red Hat Summit. Delivering a lab instead of a traditional breakout session allows you to take a different approach to the conference. In years past, I would have spent time preparing for my presentation, ensuring that all of the slides were in pristine order and each aspect was memorized and carefully choreographed. Since the majority of the work for the lab was completed upfront and packaged into an image, there was comparatively little left for the team or me to do. While there were still plenty of long days and evenings during the conference spent on verification, validation, and tuning, the lab allowed me to participate at Summit in ways that I had not done previously. If you have yet to attend Red Hat Summit or have not experienced a hands-on lab, I highly encourage you to do so. The insights you will gain, not only from the experts but also from those working within the open source community, will inspire how you work with technology on a daily basis.


Last updated: September 19, 2023