The Concept of Auto Scaling and Scaling SAFSMS (SAF School Management Software)

In 2008, I heard about cloud computing and AWS. But frankly, the more I tried to understand what cloud computing was, the more confused I got. I stumbled upon a number of marketing videos that used the cloud computing hype and only confused me more.

Two years later, we launched the web version of our result compiler software, which enables parents to access their children’s results online. I decided to use Google App Engine because I had learned that it was free or extremely cheap, that it ran on the same infrastructure as Gmail, and that it scaled automatically.

I gave it a shot but was not impressed: I started hitting its limits before I had even released the application.

At that time, we had not launched the full web version of SAFSMS, and most of our clients were offline. Scaling was not on our list of problems.

Cloud computing and auto scaling start to make sense when your traffic exceeds what you had expected. When Facebook launched the “Facebook Platform” in 2007, it hit its capacity limit, and the team had to go to San Francisco to get more servers to support the traffic. Had Facebook been on AWS at the time with auto scaling set up, it would not have faced that challenge.

Fast forward to 2017: SAFSMS is now used online in over 200 schools with thousands of users, and expanding to accommodate the traffic is now a requirement. One option is to buy and deploy in-house servers that can handle the traffic at peak periods. Another is to subscribe to high-capacity servers online that can meet the requirements at peak.

However, traffic on SAFSMS fluctuates with the school academic calendar. It usually spikes toward the end of term, and during vacations it can drop by over 90%. In essence, if we invested in servers sized for SAFSMS’s peak traffic, we would be spending 80% more than our average traffic requires. You may also be caught off guard by exponential growth, such as a “Facebook Platform” launch moment, which would mean investing in even more servers.
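The 80% figure comes from comparing capacity sized for the peak with what the average load actually uses. The Java sketch below makes that arithmetic concrete under a hypothetical demand profile (one busy hour at 10 instances, then nine quiet hours at 1 instance, i.e. a 90% drop). The numbers are illustrative assumptions, not SAFSMS measurements.

```java
import java.util.Arrays;

/** Fixed peak provisioning vs. demand-tracking provisioning (hypothetical data). */
public class ProvisioningCost {

    /** Instance-hours paid for when capacity is fixed at the peak demand. */
    public static long fixedPeakInstanceHours(int[] hourlyDemand) {
        int peak = Arrays.stream(hourlyDemand).max().orElse(0);
        return (long) peak * hourlyDemand.length;
    }

    /** Instance-hours paid for when capacity follows demand exactly. */
    public static long elasticInstanceHours(int[] hourlyDemand) {
        return Arrays.stream(hourlyDemand).asLongStream().sum();
    }

    /** Fraction of the fixed peak capacity that sits idle over the period. */
    public static double idleFraction(int[] hourlyDemand) {
        long fixed = fixedPeakInstanceHours(hourlyDemand);
        if (fixed == 0) {
            return 0.0;
        }
        return 1.0 - (double) elasticInstanceHours(hourlyDemand) / fixed;
    }

    public static void main(String[] args) {
        // Hypothetical profile: one end-of-term peak hour at 10 instances,
        // then nine quiet hours at 1 instance (a 90% drop in traffic).
        int[] demand = {10, 1, 1, 1, 1, 1, 1, 1, 1, 1};
        System.out.println("fixed-peak instance-hours: " + fixedPeakInstanceHours(demand));
        System.out.println("elastic instance-hours:    " + elasticInstanceHours(demand));
        System.out.printf("idle fixed capacity:       %.0f%%%n", 100 * idleFraction(demand));
    }
}
```

With that profile, fixed peak provisioning pays for 100 instance-hours while demand-tracking provisioning pays for 19, so roughly 81% of the fixed capacity would sit idle. Real auto scaling never tracks demand perfectly, so the actual saving would be somewhat lower.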

Consuming only the processing resources required at any given moment, accommodating unprecedented growth, and saving that 80% are the key objectives of cloud computing. Another application of cloud computing is DevOps, which is a discussion for another day.

To achieve these goals in SAFSMS, one of the first requirements was to update the architecture. Initially, each school was deployed as a single-tenant application, so 200 schools meant deploying 200 instances. If one school required 1 GB of RAM, we would need a 200 GB RAM server (or cluster of servers) to serve 200 schools.

However, since all the applications were identical and the only differences between schools came from configuration stored in the database, we could use a single application to serve all the schools. This is referred to as a “multi-tenant” architecture.

In a multi-tenant application, the application first works out the tenant (here, the school the user belongs to) and then queries the appropriate database to serve the user.

Fortunately, we use EclipseLink as our Java Persistence API (JPA) provider, and it supports multi-tenancy. We only needed to update a few annotations on the entities and intercept user requests to determine the right database from the caller’s sub-domain. With SAFSMS now on a multi-tenant architecture, we only have to worry about scaling one application.
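As a rough illustration of the interception step, here is a minimal Java sketch that derives the tenant (school) from the request’s sub-domain and binds it to the current thread, so later code can pick that tenant’s database. The class name, the `example.com` base domain and the tenant-to-JDBC-URL map are hypothetical; this is not the SAFSMS code, and in a Java EE application the `bindTenant`/`clear` calls would typically sit in something like a servlet filter.

```java
import java.util.Locale;
import java.util.Map;
import java.util.Optional;

/** Hypothetical sketch: resolve the tenant from the host's sub-domain. */
public class TenantResolver {

    // Tenant bound to the request currently handled by this thread.
    private static final ThreadLocal<String> CURRENT_TENANT = new ThreadLocal<>();

    private final String domainSuffix;              // e.g. ".example.com" (hypothetical)
    private final Map<String, String> tenantDbUrls; // tenant id -> JDBC URL (hypothetical)

    public TenantResolver(String baseDomain, Map<String, String> tenantDbUrls) {
        this.domainSuffix = "." + baseDomain.toLowerCase(Locale.ROOT);
        this.tenantDbUrls = Map.copyOf(tenantDbUrls);
    }

    /** "school1.example.com[:port]" gives "school1"; empty if there is no single-label sub-domain. */
    public Optional<String> tenantFromHost(String host) {
        String h = host.toLowerCase(Locale.ROOT);
        int colon = h.indexOf(':');
        if (colon >= 0) {
            h = h.substring(0, colon); // drop the port, if any
        }
        if (!h.endsWith(domainSuffix)) {
            return Optional.empty();
        }
        String sub = h.substring(0, h.length() - domainSuffix.length());
        return sub.isEmpty() || sub.contains(".") ? Optional.empty() : Optional.of(sub);
    }

    /** Call at the start of each request. */
    public String bindTenant(String host) {
        String tenant = tenantFromHost(host)
                .filter(tenantDbUrls::containsKey)
                .orElseThrow(() -> new IllegalArgumentException("unknown tenant for host " + host));
        CURRENT_TENANT.set(tenant);
        return tenant;
    }

    /** Database URL for the tenant bound to the current request. */
    public String currentDatabaseUrl() {
        String tenant = CURRENT_TENANT.get();
        if (tenant == null) {
            throw new IllegalStateException("no tenant bound to this request");
        }
        return tenantDbUrls.get(tenant);
    }

    /** Call when the request ends: server threads are pooled and reused. */
    public void clear() {
        CURRENT_TENANT.remove();
    }

    public static void main(String[] args) {
        TenantResolver resolver = new TenantResolver("example.com",
                Map.of("school1", "jdbc:mysql://db-host/school1"));
        resolver.bindTenant("school1.example.com");
        try {
            System.out.println(resolver.currentDatabaseUrl());
        } finally {
            resolver.clear();
        }
    }
}
```

With EclipseLink’s multitenancy support, the resolved id is usually handed to the persistence layer through the `eclipselink.tenant-id` property when creating an `EntityManager`. Check the EclipseLink documentation for how that property applies to the multitenant type you choose.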

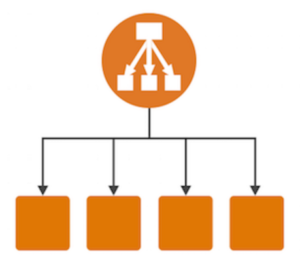

AWS Elastic Load Balancer

There are two types of scaling: vertical and horizontal. In vertical scaling, a server’s CPU or RAM is increased or decreased based on defined conditions. In horizontal scaling, capacity is changed by adding or removing nodes (instances).

Another machine (or group of machines), called the load balancer, usually sits in front of these nodes, and all requests and responses between clients and nodes pass through it. The load balancer’s job is to select one of those nodes to serve each request. With AWS, you do not have to set up an instance for load balancing yourself: AWS Elastic Load Balancing (ELB) provides this functionality out of the box, and all you need to do is specify parameters that define your balancing requirements. SAFSMS uses horizontal auto scaling, with AWS ELB for load balancing.
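To make “select one of those nodes” concrete, the following Java sketch shows round robin, one common selection strategy in which each request goes to the next node in a fixed list. It is only an illustration: it does not describe how AWS ELB picks targets internally, and the node names are placeholders.

```java
import java.util.List;
import java.util.concurrent.atomic.AtomicInteger;

/** Minimal round-robin node selection (illustrative, not ELB's internals). */
public class RoundRobinBalancer {
    private final List<String> nodes;
    private final AtomicInteger counter = new AtomicInteger();

    public RoundRobinBalancer(List<String> nodes) {
        if (nodes.isEmpty()) {
            throw new IllegalArgumentException("at least one node is required");
        }
        this.nodes = List.copyOf(nodes); // immutable snapshot of the node list
    }

    /** Returns the node that should serve the next request (thread-safe). */
    public String pick() {
        // floorMod keeps the index valid even after the counter overflows.
        int index = Math.floorMod(counter.getAndIncrement(), nodes.size());
        return nodes.get(index);
    }

    public static void main(String[] args) {
        RoundRobinBalancer lb = new RoundRobinBalancer(List.of("node-1", "node-2", "node-3"));
        for (int request = 1; request <= 5; request++) {
            System.out.println("request " + request + " -> " + lb.pick());
        }
    }
}
```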

Immutable Instances

Instances deployed for scaling are usually identical in specification, configuration and the application they run. In auto scaling, any instance can be destroyed and a new one added without affecting the state of the application. To achieve this, it is important to make your instances immutable, i.e. their state cannot be modified after they are created. The first step toward immutable instances is separating your database from your application servers. Second, dynamic files such as images, videos and other documents should not be stored on your instances.

To achieve immutability in SAFSMS, we store dynamic files on Amazon S3 and run the database on a separate server. Our application servers are therefore identical, all created from the same Amazon Machine Image (AMI). We went further by running a Docker container on each instance: the Docker image bundles the application server and the stable version of SAFSMS. Our AMI simply has Docker installed, and everything else is bundled in the Docker image.

Auto Scaling Group

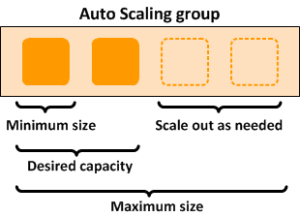

A load balancer distributes traffic across a fixed number of servers, but it does not automatically scale your application up or down. To achieve auto scaling on SAFSMS, we used an AWS Auto Scaling group. In the Auto Scaling group configuration, you specify the minimum and maximum number of instances. You can then define scaling policies, which determine the desired capacity (between the minimum and maximum) at any point in time and automatically adjust the number of instances to match. Scaling policies are driven by metrics such as CPU utilization, network bandwidth or memory utilization (EC2 does not report memory by default, so it has to be published as a custom CloudWatch metric).
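The following Java sketch approximates how a target-tracking style policy could turn a metric into a desired capacity: scale the current instance count by the ratio of observed to target CPU utilization, round up, then clamp between the group’s minimum and maximum. This is a simplified model built on that assumption; real AWS policies also apply cooldowns, instance warm-up and other safeguards, and the group sizes used here are hypothetical.

```java
/** Simplified target-tracking style capacity calculation (not AWS's exact algorithm). */
public class DesiredCapacity {

    public static int desired(int current, double avgCpuPercent,
                              double targetCpuPercent, int min, int max) {
        if (min > max) {
            throw new IllegalArgumentException("min must not exceed max");
        }
        if (targetCpuPercent <= 0) {
            throw new IllegalArgumentException("target must be positive");
        }
        // Instances needed so that the same total load averages out at the target.
        int needed = (int) Math.ceil(current * avgCpuPercent / targetCpuPercent);
        // Never go outside the group's configured bounds.
        return Math.max(min, Math.min(max, needed));
    }

    public static void main(String[] args) {
        // Hypothetical group: min 2, max 20, target 50% CPU, currently 4 instances.
        System.out.println(desired(4, 90.0, 50.0, 2, 20)); // end-of-term spike: prints 8
        System.out.println(desired(4, 10.0, 50.0, 2, 20)); // vacation lull: prints 2
    }
}
```

A spike to 90% CPU on 4 instances asks for 8, while a vacation-level 10% would ask for 1 but is held at the minimum of 2.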

To associate the Auto Scaling group with your load balancer, you specify that instances launched in the group should be attached to the load balancer.

A launch configuration is also required to set up an Auto Scaling group. It specifies the AMI to use, the instance type (server capacity) and other settings, such as a startup script to run when an instance is launched.

With the current SAFSMS architecture, we are confident we can scale from 200 schools to 10,000 with few or no challenges. We simply need to update our scaling policies where necessary.

Gotchas

If you are a seasoned developer, you have probably concluded by now that, in practice, implementing theoretical ideas brings a number of unforeseen challenges you have to figure out how to resolve. Most of the information you find discusses an ideal situation.

Auto scaling SAFSMS was no exception. The gotchas we had to deal with included “session stickiness” and “database caching”.

Since SAFSMS is not a stateless application (user sessions live in memory on the instance that created them), we had to set up session stickiness so that each user’s requests keep reaching the instance that holds their session. Fortunately, AWS ELB provides session stickiness, which we took advantage of.
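The idea behind stickiness can be sketched in Java. The first request from a client is assigned a node (round robin here) and gets back a cookie naming that node, and later requests carrying that cookie are routed to the same node. The cookie format and node names are hypothetical. ELB can generate and manage its own stickiness cookie, so in practice you enable and configure the feature rather than write this logic.

```java
import java.util.List;
import java.util.concurrent.atomic.AtomicInteger;

/** Sketch of cookie-based session stickiness (hypothetical cookie format). */
public class StickyBalancer {

    /** The node chosen for a request and the cookie value to return to the client. */
    public record Route(String node, String cookie) {}

    private final List<String> nodes;
    private final AtomicInteger counter = new AtomicInteger();

    public StickyBalancer(List<String> nodes) {
        if (nodes.isEmpty()) {
            throw new IllegalArgumentException("at least one node is required");
        }
        this.nodes = List.copyOf(nodes);
    }

    /** stickyCookie is the value the client sent back, or null on a first visit. */
    public Route route(String stickyCookie) {
        if (stickyCookie != null && nodes.contains(stickyCookie)) {
            // Known node: keep the user on the instance holding their session.
            return new Route(stickyCookie, stickyCookie);
        }
        // New client, or its node is gone (e.g. terminated on scale-in):
        // pick a node round-robin and issue a fresh cookie for it.
        // Using the node name as the cookie value is a simplification;
        // real balancers use an opaque value.
        String node = nodes.get(Math.floorMod(counter.getAndIncrement(), nodes.size()));
        return new Route(node, node);
    }

    public static void main(String[] args) {
        StickyBalancer lb = new StickyBalancer(List.of("node-1", "node-2"));
        Route first = lb.route(null);           // first visit: assigned a node
        Route again = lb.route(first.cookie()); // same cookie: same node
        System.out.println(first.node() + " then " + again.node());
    }
}
```

Note the fallback branch. If the node named in the cookie has been removed, for example by a scale-in, the request is reassigned, and any session state held only on the old instance is lost.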

SAFSMS also uses JPA caching to improve performance. Since each Java EE server (Glassfish) caches data on the instance it is running on, new data written through one instance is not immediately visible to users served by another instance. To solve this problem, a centralized caching system such as Memcached or Redis is required.
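The following Java simulation shows the staleness problem and why a shared cache fixes it. Two application instances read and write the same record. In one run each instance has its own local cache; in the other, both use one shared map. The `ConcurrentHashMap`s stand in for the database and for a central cache such as Memcached or Redis. No real cache client is used, and this is not how the JPA cache is actually implemented.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;

/** Simulates per-instance vs. shared caching behind a load balancer. */
public class CacheStalenessDemo {

    /** An application instance with a read-through cache in front of the database. */
    static final class AppInstance {
        private final Map<String, String> cache;    // per-instance or shared
        private final Map<String, String> database; // stand-in for the DB server

        AppInstance(Map<String, String> cache, Map<String, String> database) {
            this.cache = cache;
            this.database = database;
        }

        String read(String key) {
            // On a cache miss, load from the database and remember the value.
            return cache.computeIfAbsent(key, database::get);
        }

        void write(String key, String value) {
            database.put(key, value);
            cache.put(key, value); // updates only the cache this instance can see
        }
    }

    /** Returns what instance B reads after instance A updates a record. */
    public static String readAfterUpdate(boolean useSharedCache) {
        Map<String, String> database = new ConcurrentHashMap<>(Map.of("result:42", "old"));
        Map<String, String> shared = new ConcurrentHashMap<>();
        Map<String, String> cacheA = useSharedCache ? shared : new ConcurrentHashMap<>();
        Map<String, String> cacheB = useSharedCache ? shared : new ConcurrentHashMap<>();
        AppInstance a = new AppInstance(cacheA, database);
        AppInstance b = new AppInstance(cacheB, database);

        b.read("result:42");         // B caches "old"
        a.write("result:42", "new"); // the update is served by A
        return b.read("result:42");
    }

    public static void main(String[] args) {
        System.out.println("local caches: " + readAfterUpdate(false)); // prints old
        System.out.println("shared cache: " + readAfterUpdate(true));  // prints new
    }
}
```

With local caches, instance B keeps returning `old` after instance A has written `new`. With the shared cache, B sees `new` immediately.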

This article was first posted on the Flexisaf blog by its author, Faiz Bashir.


Last updated: May 31, 2024