Disclaimer

This content is based on publicly available community information and technical analysis. The installation of SEAPATH on Red Hat Enterprise Linux today is an exercise in leveraging existing open source components under Red Hat's standard third-party software support policies. Users are advised to review the current community documentation and Red Hat support policies.

The global push for grid modernization and the rise of distributed energy resources (DER) are demanding an updated approach to electrical substation automation. Operators are increasingly looking to software-defined architectures to replace traditional, hardware-bound protection, automation, and control (PAC) systems. This is driving the convergence of information technology (IT) and operational technology (OT), an evolution that requires stable, secure, and vendor-agnostic platforms.

The utilities industry's NFV moment

The electrification domain is now experiencing its equivalent of the telecommunications industry's network function virtualization (NFV) transformation. This virtualization shift aims to decouple hardware and software life cycles, improve system availability, and enhance cybersecurity by applying IT principles through virtualized network functions (VNFs). NFV addressed concerns similar to those in PAC systems: low-latency workloads with high availability requirements, migration of large brownfield installations, and introducing a global workforce to a new set of IT capabilities.

Many utilities that began this journey with commercial virtualization vendors are now seeking robust, open alternatives for mission-critical infrastructure. This momentum is driving interest in open source solutions and has brought to the fore the LF Energy SEAPATH project, which has more than six years of industry experience since its inception.

What is LF Energy SEAPATH?

SEAPATH, which stands for software enabled automation platform and artifacts (therein), is an open source reference design for an industrial-grade real-time platform. It's designed to run virtualized protection, automation, and control (vPAC) applications in digital substations.

The project successfully combines mature, well-established open source components into an opinionated, highly reliable architecture.

Key architectural components

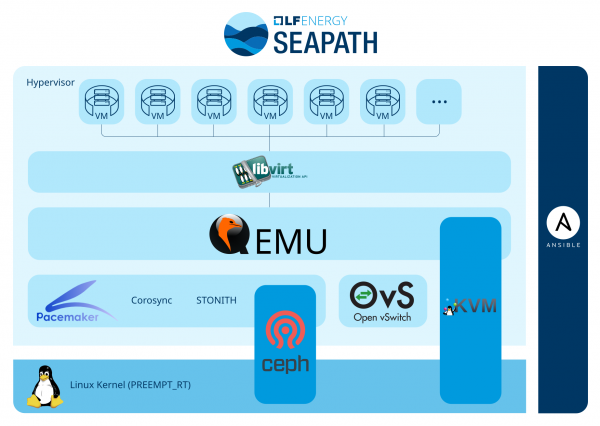

SEAPATH leverages a core set of open source technologies (Figure 1) to meet the stringent demands of critical infrastructure in a hyperconverged setup (that is, running software-defined compute, storage, and networking on a single commercial off-the-shelf (COTS), general-purpose server):

- Real-time Linux kernel for deterministic scheduling and low latency.

- KVM (kernel-based virtual machine) for the hypervisor layer managed with QEMU and libvirt.

- Corosync/Pacemaker for high availability, clustering, and fencing.

- Ceph for software-defined distributed storage.

- Open vSwitch (OVS) for network connectivity on the cluster.

- Ansible for repeatable setup and infrastructure-as-code management.

This platform is engineered to address key requirements of the industry, including IEC 61850 standards compliance, high availability, and PTP (IEEE 1588) time synchronization.
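To make the PTP requirement more concrete: in the IEEE 1588 delay request-response mechanism, a receiving clock combines four timestamps to estimate both its offset from the time source and the mean path delay. The minimal Python sketch below shows only that arithmetic, assuming a symmetric network path. The class and function names are our own, and real deployments rely on a PTP implementation (for example, linuxptp) rather than code like this.

```python
from dataclasses import dataclass


@dataclass
class SyncExchange:
    """Timestamps (nanoseconds) from one delay request-response exchange."""
    t1: int  # time source sends Sync (time source clock)
    t2: int  # follower receives Sync (follower clock)
    t3: int  # follower sends Delay_Req (follower clock)
    t4: int  # time source receives Delay_Req (time source clock)


def offset_and_delay(x: SyncExchange) -> tuple[float, float]:
    """Return (clock offset, mean path delay), assuming a symmetric path."""
    forward = x.t2 - x.t1   # equals path delay + offset
    backward = x.t4 - x.t3  # equals path delay - offset
    offset = (forward - backward) / 2
    delay = (forward + backward) / 2
    return offset, delay


if __name__ == "__main__":
    # Hypothetical timestamps: follower clock 500 ns ahead, 1,200 ns one-way delay.
    print(offset_and_delay(SyncExchange(t1=0, t2=1_700, t3=10_000, t4=10_700)))
```

With these hypothetical timestamps, the function returns `(500.0, 1200.0)`, recovering the assumed offset and delay.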

As a reference design, SEAPATH aims to ease the adoption of a combination of multiple open source projects. The technologies themselves remain the upstream components. For example, there is no SEAPATH hypervisor; instead, the KVM hypervisor is installed and configured according to the SEAPATH design. If you are familiar with the Red Hat portfolio, these open source projects probably sound familiar. Red Hat has been shipping solutions that combine many of the same open source projects, but with a different set of default configurations. For example, at Distributech 2025 and Red Hat Summit 2025, Red Hat demonstrated single-node Red Hat Enterprise Linux (RHEL) systems with the real-time kernel running ABB SSC600SW as a virtualized protection function on the KVM hypervisor, using only packages included in RHEL. This showcased the capabilities and low-latency performance of a Red Hat solution suited for substations. Now, at Enlit 2025 in Bilbao, we're bringing these efforts together with SEAPATH.

LF Energy SEAPATH on Red Hat Enterprise Linux

For utilities that require commercial support, SEAPATH aligns naturally with the RHEL ecosystem. RHEL provides the long life cycles, security focus, and stability required for critical infrastructure. Customers who want to install SEAPATH on RHEL can do so today. Because the project's foundation is a set of Ansible roles and playbooks that configure the underlying operating system (such as CentOS Stream) and its open source packages, RHEL can be configured to run SEAPATH's core components.

The vast majority (over 90%) of required packages can be sourced from fully supported Red Hat repositories, including the core RHEL distribution and add-ons such as High Availability and RT/NFV, which provide the clustering tools and real-time extensions needed for the vPAC environment. The remaining packages, which are not part of the RHEL distribution, come from the Extra Packages for Enterprise Linux (EPEL) repository, maintained by the Fedora community, and, for Ceph, from the upstream Ceph repositories. Red Hat's third-party software support policies and its policies for EPEL content apply. This approach uses SEAPATH as-is, without modifying the SEAPATH code.

This immediate technical compatibility allows grid operators and vendors who already rely on RHEL for IT infrastructure to begin experimenting with the SEAPATH architecture on a familiar, stable base. The current SEAPATH release is tied to an older way of automating Ceph deployments (ceph-ansible instead of cephadm), and this implementation took Ceph from the upstream repositories rather than from a Red Hat distribution, in order to validate the SEAPATH code as-is on RHEL. The SEAPATH roadmap already plans to address this.

A full SEAPATH installation on RHEL has around 1,200 packages. You can install most of these packages from a trusted and supported source in the following Red Hat repositories:

| Repository | Package count | Content source |
|---|---|---|
| @rhel-9-for-x86_64-baseos-rpms | 460 | RHEL |
| @rhel-9-for-x86_64-appstream-rpms | 590 | RHEL |
| @rhel-9-for-x86_64-baseos-beta-rpms | 8 | Red Hat Beta |
| @rhel-9-for-x86_64-appstream-beta-rpms | 12 | Red Hat Beta |
| @codeready-builder-for-rhel-9-x86_64-rpms | 2 | RHEL |
| @rhel-9-for-x86_64-highavailability-rpms | 19 | RHEL HA add-on |
| @fast-datapath-for-rhel-9-x86_64-rpms | 2 | RHEL RT/NFV add-on |
| @rhel-9-for-x86_64-nfv-rpms | 10 | RHEL RT/NFV add-on |
| @epel | 66 | EPEL |
| @ceph | 20 | Ceph upstream |
| @ceph-noarch | 10 | Ceph upstream |

The first five repositories listed (RHEL and Red Hat Beta) are part of the standard RHEL installation. The next three are add-ons. Only the last three provide unsupported upstream content (fewer than 100 packages in total). It may be possible to further reduce the number of community packages, either through active contributions to the SEAPATH community or through extended support offerings from Red Hat. For single-node SEAPATH systems, which do not require Ceph, the number of required extra packages is even lower.
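The figures in the table can be cross-checked with a few lines of Python. This sketch copies the package counts from the table (dropping the leading `@` from the repository labels) and groups them by support level; treating the beta repositories as a separate bucket from fully supported content is our own assumption.

```python
from collections import Counter

# Package counts per repository, copied from the table above.
REPOSITORIES = {
    "rhel-9-for-x86_64-baseos-rpms": (460, "RHEL"),
    "rhel-9-for-x86_64-appstream-rpms": (590, "RHEL"),
    "rhel-9-for-x86_64-baseos-beta-rpms": (8, "Red Hat Beta"),
    "rhel-9-for-x86_64-appstream-beta-rpms": (12, "Red Hat Beta"),
    "codeready-builder-for-rhel-9-x86_64-rpms": (2, "RHEL"),
    "rhel-9-for-x86_64-highavailability-rpms": (19, "RHEL HA add-on"),
    "fast-datapath-for-rhel-9-x86_64-rpms": (2, "RHEL RT/NFV add-on"),
    "rhel-9-for-x86_64-nfv-rpms": (10, "RHEL RT/NFV add-on"),
    "epel": (66, "EPEL"),
    "ceph": (20, "Ceph upstream"),
    "ceph-noarch": (10, "Ceph upstream"),
}

# Assumption: how each content source maps to a support level.
SUPPORT_LEVEL = {
    "RHEL": "supported",
    "RHEL HA add-on": "supported",
    "RHEL RT/NFV add-on": "supported",
    "Red Hat Beta": "beta",
    "EPEL": "community",
    "Ceph upstream": "community",
}


def summarize(repos):
    """Sum package counts per support level."""
    totals = Counter()
    for count, source in repos.values():
        totals[SUPPORT_LEVEL[source]] += count
    return totals


if __name__ == "__main__":
    totals = summarize(REPOSITORIES)
    grand_total = sum(totals.values())
    for level, count in totals.items():
        print(f"{level:>9}: {count:5d} ({100 * count / grand_total:.1f}%)")
    print(f"{'total':>9}: {grand_total:5d}")
```

Run as-is, it reports 1,199 packages in total: 1,083 (about 90.3%) from fully supported repositories, 20 from beta repositories, and 96 (about 8.0%) from community sources. This is consistent with the "around 1,200", "over 90%", and "fewer than 100" figures in the text, even when beta content is not counted as supported.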

The beta packages listed (as of RHEL 9.6) are not on the critical path of the deployment and are not expected to be required after further investigation and version management. They are currently used for OpenSSL, as a dependency of the Ceph Squid release, and for additional Cockpit dashboards.
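A hedged sketch of how the supported repositories and EPEL could be enabled on a registered RHEL 9 host follows. It only prints the commands (a dry run) instead of executing them and leaves out the beta repositories, which the text notes are not on the critical path. It assumes the system has subscriptions for the High Availability and RT/NFV add-ons. The EPEL bootstrap URL follows the EPEL project's published instructions, and configuring the upstream Ceph repositories is out of scope here.

```python
import shlex

# Repository IDs from the table, without the leading "@" and without beta repos.
REQUIRED_REPOS = [
    "rhel-9-for-x86_64-baseos-rpms",
    "rhel-9-for-x86_64-appstream-rpms",
    "codeready-builder-for-rhel-9-x86_64-rpms",  # EPEL packages may depend on it
    "rhel-9-for-x86_64-highavailability-rpms",
    "fast-datapath-for-rhel-9-x86_64-rpms",
    "rhel-9-for-x86_64-nfv-rpms",
]

EPEL_RELEASE = "https://dl.fedoraproject.org/pub/epel/epel-release-latest-9.noarch.rpm"


def build_commands(repos):
    """Return the commands (as argument lists) to enable repos and EPEL."""
    enable = ["subscription-manager", "repos"] + [f"--enable={r}" for r in repos]
    epel = ["dnf", "install", "-y", EPEL_RELEASE]
    return [enable, epel]


if __name__ == "__main__":
    # Dry run: print shell-quoted commands for review instead of running them.
    for cmd in build_commands(REQUIRED_REPOS):
        print(shlex.join(cmd))
```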

Technical challenges with this approach

Because SEAPATH began on Debian Linux, a few fundamental RHEL packages are replaced by different (and, from Red Hat's point of view, often legacy) tooling. For example, SEAPATH currently uses systemd-networkd instead of NetworkManager, which Red Hat ships as the default for managing network connections and interfaces. This requires close attention when opening support cases today, but could be addressed with small contributions to the SEAPATH project. A setup with a stack provided entirely by Red Hat, which avoids these challenges, is also possible today to get started with the platform and gain experience with the concepts of Linux and virtualization for vPAC systems.
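Before opening a support case, it can help to confirm which network stack is actually managing a host's interfaces. The following sketch, a hypothetical helper rather than a SEAPATH or Red Hat tool, asks systemd whether `NetworkManager` (the RHEL default) or `systemd-networkd` is active, using `systemctl is-active --quiet`, which exits with status 0 only for an active unit. The command runner is injectable so the logic can be tested without a live system.

```python
import subprocess

# Network stacks to check: the RHEL default and the one SEAPATH currently uses.
SERVICES = ("NetworkManager", "systemd-networkd")


def active_network_stacks(run=subprocess.run):
    """Return the services from SERVICES that systemd reports as active."""
    active = []
    for service in SERVICES:
        # `systemctl is-active --quiet` prints nothing and exits 0 only if active.
        result = run(["systemctl", "is-active", "--quiet", service], check=False)
        if result.returncode == 0:
            active.append(service)
    return active


if __name__ == "__main__":
    try:
        stacks = active_network_stacks()
    except FileNotFoundError:
        stacks = []  # systemctl is not available on this machine
    print("active network stack(s):", ", ".join(stacks) or "none detected")
```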

Setup overview

Whether you follow the opinionated implementation from SEAPATH or use a fully supported approach from Red Hat, the high-level architecture shown in the following diagrams is the same. SEAPATH provides implementation designs for a single node, two nodes with an observer, or a three-node hyperconverged setup, and Red Hat's products allow for other configurations. This could mean more flexibility for storage (for example, integrating external iSCSI storage instead of Ceph) and network topologies without directly attached cluster nodes, for further scalability. Where there is industry demand, such patterns can also be contributed to SEAPATH.

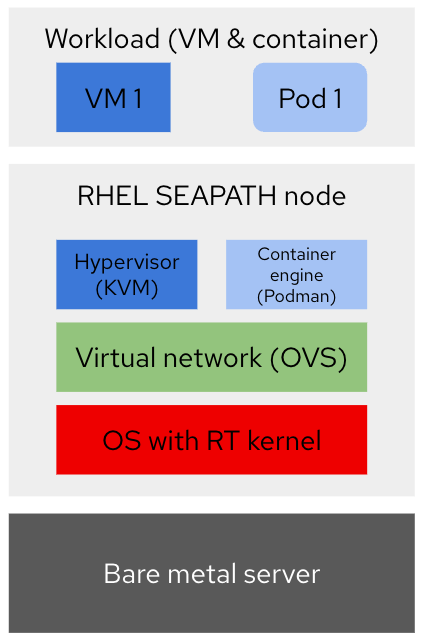

Single-node SEAPATH on RHEL

To get started with Linux and real-time virtualization, a single-node lab setup provides a quick start. With or without SEAPATH, a server running RHEL can host virtual machines (VMs) and containers running in pods, and can meet low-latency requirements with the proper performance tuning. See Figure 2.
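As a quick sanity check of such tuning, the sketch below reports whether the running kernel is a real-time build and which CPU-isolation parameters are set on the kernel command line. It assumes that a PREEMPT_RT kernel exposes `/sys/kernel/realtime` containing `1`, and that isolation is configured through the `isolcpus`, `nohz_full`, and `rcu_nocbs` boot parameters (for example, by a TuneD profile such as `realtime-virtual-host`). The right tuning for a given vPAC workload remains an assumption to validate against the workload vendor's guidance.

```python
from pathlib import Path

# Boot parameters commonly used to isolate CPUs for latency-sensitive guests.
ISOLATION_PARAMS = ("isolcpus", "nohz_full", "rcu_nocbs")


def read_text(path: str) -> str:
    """Return file contents, or an empty string if the file is missing."""
    try:
        return Path(path).read_text()
    except OSError:
        return ""


def parse_cmdline(cmdline: str) -> dict:
    """Split a kernel command line into {key: value}; bare flags map to None."""
    params = {}
    for token in cmdline.split():
        key, sep, value = token.partition("=")
        params[key] = value if sep else None
    return params


def is_realtime_kernel(flag_text: str) -> bool:
    """Assumes PREEMPT_RT kernels expose /sys/kernel/realtime containing "1"."""
    return flag_text.strip() == "1"


if __name__ == "__main__":
    params = parse_cmdline(read_text("/proc/cmdline"))
    print("real-time kernel:", is_realtime_kernel(read_text("/sys/kernel/realtime")))
    for name in ISOLATION_PARAMS:
        print(f"{name}: {params.get(name, 'not set')}")
```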

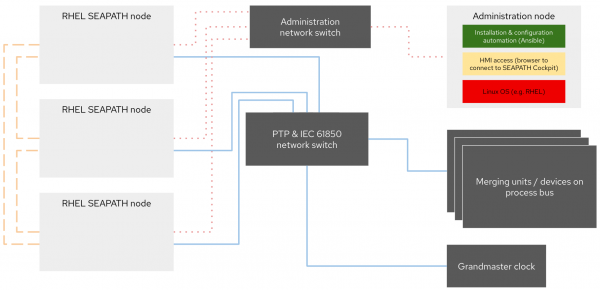

In terms of network topology (see Figure 3), a standard RHEL installation also does not differ from the setup suggested by SEAPATH. The RHEL node is accessed from an administration node (which could also be an operator workstation) to drive automated setup and configuration, and to reach the system dashboards running in RHEL's Cockpit through a web browser. The vPAC workload (whether running in a VM or a container) can be connected to the station bus and process bus networks, where the PTP clock and sampled value (SV) publishers (for example, merging units or in-lab simulators) reside. This connection can use a dedicated physical network interface card (NIC) on the host, passed directly to the workload for exclusive use, or it can use virtual networks provided by the OS, KVM, and OVS. SR-IOV NICs are also a supported option.

The final technology choice depends on the actual latency requirements and number of different workloads the system is supposed to host for an optimized total cost of ownership.
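For the passthrough options, libvirt describes an SR-IOV virtual function assigned to a VM with an `<interface type='hostdev'>` element. The Python sketch below generates such a fragment. The PCI and MAC addresses are hypothetical placeholders that must match a virtual function actually present on the host; the resulting XML could then be attached with `virsh attach-device <domain> <file> --config`.

```python
import xml.etree.ElementTree as ET


def hostdev_interface_xml(domain=0, bus=0, slot=0, function=0, mac=None) -> str:
    """Build a libvirt <interface type='hostdev'> fragment for one SR-IOV VF."""
    # managed="yes" lets libvirt detach the VF from the host driver automatically.
    iface = ET.Element("interface", type="hostdev", managed="yes")
    if mac:
        ET.SubElement(iface, "mac", address=mac)
    source = ET.SubElement(iface, "source")
    # PCI address of the virtual function, in libvirt's hexadecimal notation.
    ET.SubElement(
        source, "address", type="pci",
        domain=f"0x{domain:04x}", bus=f"0x{bus:02x}",
        slot=f"0x{slot:02x}", function=f"0x{function:x}",
    )
    return ET.tostring(iface, encoding="unicode")


if __name__ == "__main__":
    # Hypothetical VF at PCI address 0000:3b:02.1 with a hypothetical MAC address.
    print(hostdev_interface_xml(bus=0x3b, slot=0x02, function=1, mac="52:54:00:6d:90:02"))
```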

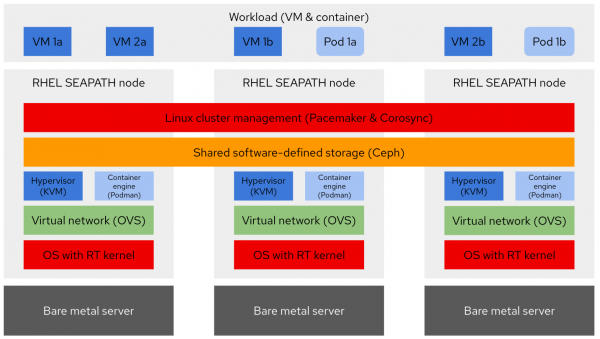

Three-node SEAPATH cluster on RHEL

With three nodes running in a hyperconverged cluster, the setup becomes more interesting and also resilient to the failure of individual nodes. In a three-node setup, SEAPATH adds a layer of shared software-defined storage with Ceph and Linux cluster management capabilities provided by Pacemaker and Corosync (see Figure 4).

Ceph takes control of dedicated disks (for example, SSD or NVMe) in each node and synchronously replicates all data across all nodes. This is where the persistent volumes of virtual machines and pods store their data. If a node fails, another instance of the workload can be started immediately on a different node with minimal downtime (a matter of seconds). These failover times are still too slow for the requirements of virtual protection functions and don't remove the need for redundant setups, but they shorten the time during which a single point of failure exists, until backup protection is restored. This also comes in handy during planned maintenance.
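Because every node holds a full copy of the data, usable capacity in this design is roughly the raw capacity divided by the replica count. The sketch below estimates it, assuming a replicated pool whose replica count (the pool's `size` setting in Ceph) equals the node count, a host-level failure domain, and a hypothetical 80% fill target that leaves headroom for recovery. The disk sizes in the example are illustrative only.

```python
def usable_capacity_tb(nodes, disks_per_node, disk_tb, replicas=None, headroom=0.8):
    """Rough usable capacity (TB) of a replicated Ceph pool.

    replicas defaults to the node count, mirroring "all data on all nodes".
    headroom is a hypothetical fill target, not a Ceph default.
    """
    if replicas is None:
        replicas = nodes
    if replicas > nodes:
        # Assuming one copy per host, a pool cannot hold more copies than hosts.
        raise ValueError("replicas cannot exceed the number of nodes")
    raw_tb = nodes * disks_per_node * disk_tb
    return raw_tb / replicas * headroom


if __name__ == "__main__":
    # Hypothetical hardware: three nodes with two 1.92 TB NVMe drives each.
    print(f"{usable_capacity_tb(3, 2, 1.92):.2f} TB usable")
```

For three nodes with two 1.92 TB drives each, this gives 11.52 TB of raw capacity but only about 3.07 TB of planned usable space.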

The actual logic of restarting the workload on a different host is provided by the cluster management layer, including management of virtual IPs and the quorum and fencing capabilities required to avoid split-brain scenarios.
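Split-brain avoidance rests on majority quorum: a partition may only run resources if it holds more than half of the expected votes, and nodes outside it are fenced. The sketch below shows the majority rule (`expected_votes // 2 + 1`, which we understand to be the default calculation in Corosync's votequorum) and why a two-node cluster benefits from a third voter, such as the observer in SEAPATH's two-node design. It ignores Corosync's special two-node mode and weighted votes.

```python
def quorum(expected_votes: int) -> int:
    """Smallest number of votes that forms a strict majority."""
    return expected_votes // 2 + 1


def has_quorum(votes_present: int, expected_votes: int) -> bool:
    return votes_present >= quorum(expected_votes)


def partition_outcome(partition_sizes, expected_votes):
    """For each side of a network split, report whether it keeps quorum."""
    return [has_quorum(size, expected_votes) for size in partition_sizes]


if __name__ == "__main__":
    for voters in (2, 3):
        q = quorum(voters)
        print(f"{voters} voters: quorum={q}, tolerated failures={voters - q}")
    # A three-node cluster split into 1 + 2 nodes: only the larger side stays quorate.
    print(partition_outcome([1, 2], expected_votes=3))
```

With three voters, quorum is two and one failure is tolerated. With only two voters, quorum is also two, so losing either node (or the link between them) stops both sides, which is why a third voter is useful.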

The diagram above shows three workloads (VM 1, VM 2, and Pod 1) running in two active instances each (a and b), spread across the different nodes of the cluster. Provided that the cluster is right-sized for capacity, a node outage would lead to workload being started on another host and still ending up with two active instances of each. This visualizes how multiple functions in a substation can be run in a highly available setup on three nodes (the same outcome with traditional approaches in substation automation requires six hardware appliances). The isolation provided by the real-time kernel of the OS allows running both critical and non-critical workloads on the same node, leading to even further centralization in digital substations.
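The failover behavior in Figure 4 can be illustrated with a toy placement model: each workload runs two instances that must never share a node (anti-affinity), each node has a fixed number of slots, and after a node failure the lost instances are placed on the surviving nodes. This is a simplified greedy sketch, not Pacemaker's scheduling algorithm; in Pacemaker, a comparable rule would be expressed with negative colocation constraints. The slot counts and names are hypothetical.

```python
from collections import defaultdict


def place(workloads, nodes, capacity, placement=None):
    """Greedily place instances so that two instances of one workload never
    share a node. Returns {(workload, instance_index): node}."""
    placement = dict(placement or {})
    load = defaultdict(int)
    for node in placement.values():
        load[node] += 1
    for workload, count in workloads.items():
        for i in range(count):
            if (workload, i) in placement:
                continue  # instance survived, keep it where it is
            used = {placement[(workload, j)] for j in range(count) if (workload, j) in placement}
            candidates = [n for n in nodes if n not in used and load[n] < capacity]
            if not candidates:
                raise RuntimeError(f"cannot place {workload}[{i}]: cluster not right-sized")
            node = min(candidates, key=lambda n: load[n])  # least-loaded node
            placement[(workload, i)] = node
            load[node] += 1
    return placement


def fail_node(placement, failed, nodes, workloads, capacity):
    """Drop instances on the failed node and re-place them on the survivors."""
    survivors = [n for n in nodes if n != failed]
    kept = {key: n for key, n in placement.items() if n != failed}
    return place(workloads, survivors, capacity, kept)


if __name__ == "__main__":
    workloads = {"VM1": 2, "VM2": 2, "Pod1": 2}
    nodes = ["node1", "node2", "node3"]
    before = place(workloads, nodes, capacity=3)
    after = fail_node(before, "node1", nodes, workloads, capacity=3)
    for label, placement in (("before", before), ("after node1 fails", after)):
        print(label)
        for (workload, i), node in sorted(placement.items()):
            print(f"  {workload}{'ab'[i]} -> {node}")
```

With three slots per node, the failure of `node1` leaves every workload with two instances on distinct surviving nodes. With only two slots per node, the cluster is not right-sized and `fail_node` raises `RuntimeError`, which mirrors the capacity caveat in the text.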

From a network perspective (see the image below), every node requires the same connectivity to the administration network (red dotted lines) and to the network carrying PTP and IEC 61850 traffic (blue solid lines). In addition, SEAPATH suggests a cluster-internal network that directly connects all three nodes to each other (yellow dashed lines). This network is used mainly as a back end for Ceph, because replicating all data across all nodes can require significant network bandwidth that traditional network equipment in substations may not provide. This setup is also possible and supported when using Red Hat Enterprise Linux for the SEAPATH nodes. Nevertheless, various degrees of separation and consolidation are possible on the network layer, depending on the existing network environment in the substation and the actual workload requirements. Directly attaching nodes has both benefits and costs, so we encourage careful consideration. The network design and configuration in the SEAPATH inventory file can be challenging, especially when introducing additional capabilities such as PRP (Parallel Redundancy Protocol).
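To put the bandwidth concern into numbers: in a replicated Ceph pool, the primary OSD forwards every client write to the other replicas, so the cluster network carries roughly (replicas - 1) times the aggregate write rate, before any recovery traffic. The sketch below assumes three replicas and a hypothetical aggregate write rate, and converts decimal megabytes per second to gigabits per second.

```python
def replication_traffic_gbps(client_write_mb_s: float, replicas: int = 3) -> float:
    """Approximate back-end (cluster network) traffic in Gbit/s.

    The primary OSD forwards each client write to the other (replicas - 1)
    OSDs. Recovery and rebalancing traffic after a failure is not included.
    """
    backend_mb_s = client_write_mb_s * (replicas - 1)
    return backend_mb_s * 8 / 1000  # decimal MB/s to Gbit/s


if __name__ == "__main__":
    # Hypothetical aggregate write rate of all VMs and pods in the cluster.
    print(f"{replication_traffic_gbps(200):.1f} Gbit/s")
```

For example, 200 MB/s of aggregate writes translates to about 3.2 Gbit/s of replication traffic on the back-end network, which already exceeds a 1 GbE link.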

The administration node also remains unchanged in a multi-node configuration, because Ansible excels at automating systems at scale. With a growing number of SEAPATH cluster instances, a logical next step is to replace this node with a central management system, for example Red Hat Ansible Automation Platform.
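As the number of sites grows, the inventory itself is usually generated rather than maintained by hand. The sketch below is a minimal Ansible dynamic inventory script that emits Ansible's standard JSON inventory format (groups with `hosts`, `vars`, and `children`, plus `_meta.hostvars`). The site and host names are hypothetical and do not reflect SEAPATH's actual inventory layout or group names.

```python
#!/usr/bin/env python3
import json
import sys

# Hypothetical sites and hosts; not SEAPATH's real inventory structure.
SITES = {
    "substation_a": ["sa-node1", "sa-node2", "sa-node3"],
    "substation_b": ["sb-node1"],
}


def build_inventory(sites):
    """Build an Ansible dynamic inventory (the --list output) from SITES."""
    inventory = {"_meta": {"hostvars": {}}}
    for site, hosts in sites.items():
        inventory[site] = {"hosts": list(hosts), "vars": {"site": site}}
    inventory["all_sites"] = {"children": list(sites)}
    return inventory


if __name__ == "__main__":
    if "--host" in sys.argv:
        # Per-host variables are already provided through _meta.hostvars.
        print(json.dumps({}))
    else:
        print(json.dumps(build_inventory(SITES), indent=2))
```

Made executable, the script could be inspected with `ansible-inventory -i inventory.py --graph`.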

Next steps for this setup and the community

The SEAPATH community is focused on continuous improvement to enhance its use in production environments. Areas of active focus that are also relevant for an improved experience on top of RHEL include:

- Reducing technical debt: Modernizing the automation layer (moving from ceph-ansible to cephadm) and evolving certain legacy tooling to improve stability and maintainability. The community is also looking at reducing the overall number of packages to minimize the attack surface and update effort, and at moving towards immutable operating systems with bootable containers.

- Improving documentation: Enhancing user-facing documentation and getting-started guides to lower the barrier to entry for OT professionals who may not be familiar with advanced Linux and Ansible concepts.

- Strengthening enterprise adoption: Increasing community diversity and ensuring that the project benefits from the expertise of multiple major vendors to deliver the lifecycle stability and support required by mission-critical environments.

The collaboration between utilities, technology vendors, and open source leaders within the LF Energy community is a powerful force driving the future of the electric grid. Running SEAPATH on a hardened enterprise platform like RHEL is a significant step forward in bringing IT/OT convergence and software-defined architectures to the world's power substations. Watch this space.

Stop by the Red Hat booth at Enlit 2025 in Bilbao to see this in action. Reach out to the author or Red Hat's global edge team to start a conversation about your particular environment.