HyperShift introduces a new way to manage Kubernetes with a Red Hat OpenShift feature called hosted control planes that run as workloads on existing clusters. This model cuts costs and complexity, speeds up cluster creation and upgrades, and makes it easier to scale large fleets. With stronger isolation, smarter automation, and optimized resource usage, HyperShift delivers the agility enterprises need to stay ahead.

What is HyperShift?

HyperShift is the open source technology behind the hosted control planes feature in OpenShift. Instead of running each cluster’s control plane on its own dedicated nodes, HyperShift hosts the control planes as workloads on a management cluster, enabling faster provisioning, better efficiency, and greater scalability. In practice, you would not install HyperShift directly. You would use hosted control planes in OpenShift, which are powered by HyperShift.

Hosted clusters with OpenShift Virtualization

Running Red Hat OpenShift Virtualization on hosted clusters unlocks a powerful platform for managing virtual machine workloads. This architecture combines the hardware-level control of bare metal with the performance benefits of lightweight hosted control planes, offering a flexible and efficient infrastructure for modern applications.

NodePools: VMs vs. bare metal

NodePools are groups of worker nodes in a hosted cluster, where VMs and applications actually run, all sharing the same configuration and lifecycle management settings.

HyperShift hosted control planes can run NodePools on either virtual machines or bare metal agents:

- VMs: Provide easier provisioning, are cloud-friendly, and integrate well with existing virtualization and storage platforms.

- Bare metal: Offers better performance and hardware isolation, but requires careful setup of networking (such as VIPs, DNS, and load balancers) and storage.

This article focuses on the bare metal approach, highlighting the specific networking and storage considerations required for this scenario.

Understanding the architecture

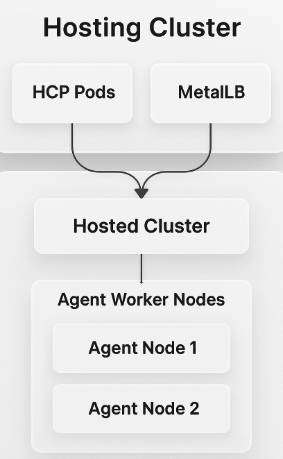

Before diving into the implementation, it's important to understand the following key components of the cluster architecture:

- Management cluster (hosting cluster): The existing OpenShift cluster that hosts the control plane workloads.

- Hosted cluster: The new OpenShift cluster whose control plane runs on the management cluster (Figure 1).

- Agent nodes: Physical machines that join the hosted cluster as worker nodes.

Certificate management considerations

When working with custom domains for the Kube API, it's important to understand how certificate generation works in HyperShift. The system automatically generates certificates based on the IP address configuration, which ensures secure communication while maintaining compatibility with the hosting infrastructure.

For environments requiring specific FQDN-based certificates, consider implementing custom certificate management strategies that align with your organization's PKI requirements.

Deploy clusters with HyperShift

HyperShift's hosted cluster deployment provides a powerful foundation for multi-tenant OpenShift environments. The following steps demonstrate the configuration patterns and best practices to create scalable, more secure, and manageable OpenShift infrastructures that meet enterprise requirements.

Step 1: Prepare the management cluster

- Ensure you have an existing OpenShift cluster that will serve as the management/hosting cluster.

- Verify that these required operators are installed:

  - Multicluster Engine

  - MetalLB
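One way to check the operators from the command line is to scan the installed ClusterServiceVersions (CSVs). The following is a minimal sketch, not an official procedure: it assumes the CSV names begin with multicluster-engine and metallb-operator, which you should adjust if your catalog names them differently, and the helper name missing_operators is purely illustrative.

```shell
# Report which required operators are missing from a CSV listing read on stdin.
# Assumption: CSV names start with these prefixes; adjust them for your catalog.
REQUIRED_OPERATORS="multicluster-engine metallb-operator"

missing_operators() {
  listing="$(cat)"
  for op in $REQUIRED_OPERATORS; do
    # A CSV name has the form "<prefix>.v<version>".
    if ! printf '%s\n' "$listing" | grep -Eq "(^|[[:space:]])${op}\.v"; then
      echo "$op"
    fi
  done
}

# Usage (requires a logged-in oc session on the management cluster):
#   oc get csv -A --no-headers | missing_operators
```

An empty result means both prefixes were found. Any name that is printed still needs to be installed, or is published under a different CSV name.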

Step 2: Configure networking

This is a crucial step that involves configuring the network infrastructure for your hosted cluster's API server. This requires careful coordination between DNS, load balancing, and IP address management.

Reserve the API server endpoint

- Define a Virtual IP (VIP) for the hosted cluster’s API server.

- Create a DNS record that points the desired domain (api.<cluster>.<basedomain>) to this VIP.

To make it easier to understand, let's assume these variables:

    hosted-cluster-namespace = hc-site1-linux-workload
    hosted-cluster-name = ocp-linux-vms

Next, configure MetalLB as follows:

    apiVersion: metallb.io/v1beta1
    kind: IPAddressPool
    metadata:
      name: ocp-linux-vms-api-ip
      namespace: metallb-system
    spec:
      addresses:
        - 192.168.0.11/32
      serviceAllocation:
        namespaces:
          - hc-site1-linux-workload-ocp-linux-vms
      autoAssign: true
    ---
    apiVersion: metallb.io/v1beta1
    kind: L2Advertisement
    metadata:
      name: ocp-linux-vms-api-advertisement
      namespace: metallb-system
    spec:
      ipAddressPools:
        - ocp-linux-vms-api-ip

Networking checklist:

- The pool IP = the IP used in the DNS record.

- Only one IP used for the API server.

- The namespace listed under serviceAllocation in the pool must follow the pattern {hosted-cluster-namespace}-{hosted-cluster-name}.
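The checklist can be expressed as a few lines of shell. This sketch uses the example values from this article. The helper dns_matches_vip is a hypothetical name, and dig is only one possible resolver.

```shell
# Values taken from the example in this article.
HOSTED_CLUSTER_NAME="ocp-linux-vms"
CLUSTERS_NAMESPACE="hc-site1-linux-workload"
BASEDOMAIN="example.com"
KUBEAPI_VIP="192.168.0.11"

# Namespace that must be listed under serviceAllocation in the IPAddressPool.
HCP_NAMESPACE="${CLUSTERS_NAMESPACE}-${HOSTED_CLUSTER_NAME}"

# DNS name that must resolve to the VIP.
API_FQDN="api.${HOSTED_CLUSTER_NAME}.${BASEDOMAIN}"

# Succeeds only if the resolver output is exactly one address equal to the VIP.
dns_matches_vip() {
  resolved="$1"
  vip="$2"
  [ "$resolved" = "$vip" ]
}

echo "pool namespace: ${HCP_NAMESPACE}"
echo "API endpoint:   ${API_FQDN}"

# Usage (any resolver that prints the A record works):
#   dns_matches_vip "$(dig +short "$API_FQDN")" "$KUBEAPI_VIP" || echo "DNS mismatch"
```

Because the comparison is an exact string match, a DNS record that returns more than one address also fails the check, which matches the "only one IP" rule above.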

A hosted cluster also needs a VIP for the Ingress layer (wildcard). You could use a load balancer or MetalLB, but that's a topic for another article.

Step 3: Configure storage

If you're using Red Hat OpenShift Data Foundation, install the operator on the management cluster. If you use another type of storage, make sure to configure it in the clusters that need access to it. Also, adjust the storageClass as needed.

Ensure that the ocs-storagecluster-ceph-rbd-virtualization storage class exists (if using ODF).
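A small guard can make this check explicit in a script. This is a sketch under the assumption that you are logged in to the cluster with oc. The function name require_storage_class is illustrative.

```shell
# Fail early if a storage class is missing on the current cluster.
require_storage_class() {
  sc="$1"
  if oc get storageclass "$sc" >/dev/null 2>&1; then
    echo "storage class ${sc} found"
  else
    echo "storage class ${sc} not found" >&2
    return 1
  fi
}

# Usage (requires a logged-in oc session):
#   require_storage_class ocs-storagecluster-ceph-rbd-virtualization
```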

If you do not want template images downloaded automatically, adjust the HyperConverged resource as follows:

    spec:
      enableCommonBootImageImport: false

Step 4: Define environment variables

Using variables is not mandatory, but they will make the process easier to repeat and facilitate automation.

    export HOSTED_CLUSTER_NAME="ocp-linux-vms"
    export CLUSTERS_NAMESPACE="hc-site1-linux-workload"
    export NODE_POOL_REPLICAS="2"
    export BASEDOMAIN="example.com"
    export ETCD_SC="ocs-storagecluster-ceph-rbd-virtualization"
    export KUBEAPI_VIP="192.168.0.11"

When creating the inventory with agent nodes, don't forget to apply a label to them. You can do this via NMStateConfig.
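If you script the later steps, it can help to confirm that every variable from this step is set before generating manifests. The following is a minimal sketch. The function name check_required_vars is an assumption made for illustration.

```shell
# Stop before generating manifests if any variable from this step is unset or empty.
REQUIRED_VARS="HOSTED_CLUSTER_NAME CLUSTERS_NAMESPACE NODE_POOL_REPLICAS BASEDOMAIN ETCD_SC KUBEAPI_VIP"

check_required_vars() {
  missing=""
  for name in $REQUIRED_VARS; do
    # Indirect lookup of the variable called $name (names come from the fixed list above).
    eval "value=\${${name}:-}"
    if [ -z "$value" ]; then
      missing="${missing} ${name}"
    fi
  done
  if [ -n "$missing" ]; then
    echo "missing variables:${missing}" >&2
    return 1
  fi
}

# Usage:
#   check_required_vars || exit 1
```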

Step 5: Create the hosted cluster

Use the hcp CLI to generate the hosted cluster manifests:

    hcp create cluster agent \
      --name ${HOSTED_CLUSTER_NAME} \
      --namespace ${CLUSTERS_NAMESPACE} \
      --base-domain=${BASEDOMAIN} \
      --node-pool-replicas ${NODE_POOL_REPLICAS} \
      --etcd-storage-class ${ETCD_SC} \
      --api-server-address ${KUBEAPI_VIP} \
      --render --render-sensitive > hosted-cluster-manifests.yaml

Pay attention to the --render parameter. It outputs the manifests as YAML instead of applying them to the cluster, so you can easily customize the hosted cluster's manifests before creating it.

This is just one example. You can explore many other parameters and options, but the best choice depends on the project’s needs.
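Before customizing the rendered file, it can be useful to see which resource kinds it contains, such as HostedCluster and NodePool. The sketch below is a simple text scan that assumes each top-level kind: key starts at the beginning of a line. The helper name list_manifest_kinds is illustrative.

```shell
# Summarize the resource kinds in a rendered manifest file, one "count kind" pair per line.
list_manifest_kinds() {
  grep -E '^kind:' "$1" | awk '{ print $2 }' | sort | uniq -c | awk '{ print $1, $2 }'
}

# Usage:
#   list_manifest_kinds hosted-cluster-manifests.yaml
```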

Step 6: Adjust service publishing strategy

The default configuration uses NodePort services, but for production deployments, LoadBalancer services provide better integration with enterprise networking.

Use a combination of LoadBalancer and Routes as follows:

    services:
    - service: APIServer
      servicePublishingStrategy:
        type: LoadBalancer
    - service: OAuthServer
      servicePublishingStrategy:
        type: Route
    - service: OIDC
      servicePublishingStrategy:
        type: Route
    - service: Konnectivity
      servicePublishingStrategy:
        type: Route
    - service: Ignition
      servicePublishingStrategy:
        type: Route

This configuration leverages OpenShift's native routing capabilities for most services while using LoadBalancer specifically for the API server.
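After editing the rendered file, a quick scan can confirm that each service has the intended strategy before you apply the manifests. The sketch below makes several assumptions: the scan is line-based rather than a real YAML parser, the helper names list_publishing_strategies and apply_hosted_cluster are illustrative, the 30-minute timeout is an arbitrary starting point, and the variables from step 4 are set.

```shell
# Print "<service> <type>" for each servicePublishingStrategy entry in a manifest file.
list_publishing_strategies() {
  awk '
    $1 == "-" && $2 == "service:" { svc = $3 }
    $1 == "type:" && svc != ""    { print svc, $2; svc = "" }
  ' "$1"
}

# Apply the rendered manifests and wait for the hosted cluster to report Available.
apply_hosted_cluster() {
  manifests="$1"
  if [ ! -s "$manifests" ]; then
    echo "manifest file ${manifests} is missing or empty" >&2
    return 1
  fi
  oc apply -f "$manifests" || return 1
  # The timeout value is an assumption; tune it for your environment.
  oc wait --for=condition=Available \
    "hostedcluster/${HOSTED_CLUSTER_NAME}" \
    --namespace "${CLUSTERS_NAMESPACE}" \
    --timeout=30m
}

# Usage (requires a logged-in oc session on the management cluster):
#   list_publishing_strategies hosted-cluster-manifests.yaml
#   apply_hosted_cluster hosted-cluster-manifests.yaml
```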

Step 7: Manage certificates

HyperShift automatically generates certificates based on the configured IP.

For enterprise environments:

- Integrate with your internal CA/PKI.

- Replace the default certificates if compliance requires it.

- Customize your hosted cluster with the kubeAPIServerDNSName variable.

Step 8: Production hardening

To harden production, plan according to the following:

- Right-size the management cluster to handle control plane workloads.

- Avoid subnet overlaps between management and hosted clusters.

- Define backup and disaster recovery strategies (including geographic DR if needed).

Step 9: Monitor and validate

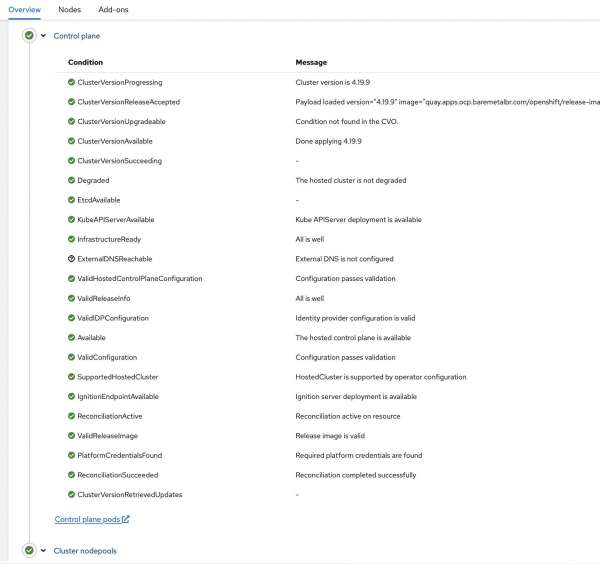

For monitoring, you can track cluster creation in OpenShift Console > Infrastructure > Clusters.

To validate, follow these steps:

- Confirm agent node registration and readiness.

- Test API server accessibility with oc login.

- Verify connectivity between management and hosted clusters.

- Confirm that workloads schedule properly on agent nodes.
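Part of this validation can be scripted. The sketch below counts worker nodes that report Ready in the hosted cluster. It assumes the hcp create kubeconfig subcommand is available in your hcp version and that the variables from step 4 are set. The helper name count_ready_nodes and the hosted-kubeconfig file name are illustrative.

```shell
# Count nodes whose STATUS column is exactly "Ready" in `oc get nodes --no-headers` output.
count_ready_nodes() {
  awk '$2 == "Ready" { n++ } END { print n + 0 }'
}

# Usage (requires the hcp and oc CLIs and access to the management cluster):
#   hcp create kubeconfig --name "$HOSTED_CLUSTER_NAME" \
#     --namespace "$CLUSTERS_NAMESPACE" > hosted-kubeconfig
#   ready="$(oc --kubeconfig hosted-kubeconfig get nodes --no-headers | count_ready_nodes)"
#   [ "$ready" -eq "$NODE_POOL_REPLICAS" ] || echo "only ${ready} of ${NODE_POOL_REPLICAS} nodes are Ready"
```

Nodes in states such as NotReady or Ready,SchedulingDisabled are deliberately not counted, so the result reflects nodes that can accept workloads.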

You can see the deployed hosted cluster in Figure 2.

Wrap up

HyperShift's hosted cluster deployment provides a powerful foundation for multi-tenant OpenShift environments. By following the configuration patterns and best practices demonstrated in this article, you can create scalable, more secure, and manageable OpenShift infrastructures that meet enterprise requirements.

This technology demonstrates Red Hat's commitment to simplifying Kubernetes operations while maintaining the full power and flexibility of OpenShift. As organizations continue to adopt cloud-native architectures, HyperShift provides the operational efficiency needed to manage multiple clusters at scale.

Whether you're building development environments, implementing multi-tenant platforms, or creating edge-computing solutions, HyperShift's hosted cluster approach offers the flexibility and efficiency modern infrastructure demands.

Last updated: October 13, 2025