Integrating the powerful capabilities of large language models (LLMs) into real-world workflows is one of the most significant advancements in modern application development. This article explores how to integrate Red Hat AI Inference Server with LangChain to build agentic workflows, with a focus on document processing. In this example, Red Hat AI Inference Server serves the LLM, while LangChain manages the application state and workflow.

About LangChain

LangChain is a framework designed to facilitate the development of applications powered by large language models. It provides a standard interface for LLMs and related components, such as embedding models and vector stores, along with integrations for many third-party providers.

LangChain also works with LangGraph, a framework that gives fine-grained control over LLM-driven workflows. If a use case involves a sequence of steps that require orchestration and decision-making at key points, LangGraph provides the structure to manage that complexity.
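LangGraph expresses decision points as conditional edges: a routing function inspects the current state and returns the name of the next node. The following dependency-free sketch mimics that idea in plain Python so the control flow is easy to follow. It is not LangGraph's API, and the node names (check, approve, reject), the keyword rule, and the run helper are all hypothetical.

```python
from typing import Callable, Dict, Optional

State = Dict[str, object]  # a plain dict stands in for a typed state schema
Node = Callable[[State], State]


def check(state: State) -> State:
    # Illustrative rule standing in for the LLM call: look for a keyword.
    return {"compliant": "termination" in str(state["text"]).lower()}


def approve(state: State) -> State:
    return {"status": "Approved"}


def reject(state: State) -> State:
    return {"status": "Rejected"}


def route_after_check(state: State) -> str:
    # Decision point: read the state and name the next node to run.
    return "approve" if state["compliant"] else "reject"


NODES: Dict[str, Node] = {"check": check, "approve": approve, "reject": reject}
# Fixed edges (None marks the end) and conditional edges (a routing function).
EDGES: Dict[str, Optional[str]] = {"approve": None, "reject": None}
ROUTERS: Dict[str, Callable[[State], str]] = {"check": route_after_check}


def run(entry: str, state: State) -> State:
    current: Optional[str] = entry
    while current is not None:
        # Each node returns a partial update that is merged into the state.
        state = {**state, **NODES[current](state)}
        current = ROUTERS[current](state) if current in ROUTERS else EDGES[current]
    return state


print(run("check", {"text": "3. Termination. Either party may end this agreement."})["status"])
# prints "Approved"
```

In LangGraph itself, a comparable branch could be declared with graph.add_conditional_edges("check", route_after_check), with approve and reject registered as nodes. The workflow built in this article is linear, so it does not need one.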

About Red Hat AI Inference Server

Red Hat AI Inference Server is an enterprise-grade solution for LLM inference and serving, supported and maintained by Red Hat and built on the popular community-supported vLLM inference server.

A key advantage of Red Hat AI Inference Server is its flexibility in model serving: it offers a wide range of validated models and configurable serving parameters, among other options. It is also cloud native, so it can scale across hybrid cloud OpenShift environments. For more details, refer to the Red Hat AI Hugging Face space and the official Red Hat AI Inference Server documentation.

Use case description

To demonstrate the integration between LangChain and Red Hat AI Inference Server, we built a document processing use case in which a document is either approved or rejected based on the LLM's reasoning.

The application contains a document-processing agent that extracts information from an uploaded PDF and embeds it in a vector store. The LLM then processes this vectorized information, checks it against compliance rules, and responds with a status of Approved or Rejected. LangGraph states coordinate the entire flow.
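To make the extract, embed, and retrieve steps more concrete, here is a dependency-free sketch. It splits text into overlapping fixed-size chunks and ranks them against a query with bag-of-words cosine similarity, a deliberately simple stand-in for the real embedding model and vector store the application uses. The helpers chunk_text, vectorize, cosine, and top_k, the chunk sizes, and the sample contract text are hypothetical, not the repository's code.

```python
import math
import re
from collections import Counter


def chunk_text(text: str, size: int = 200, overlap: int = 50) -> list[str]:
    """Split text into character chunks of at most `size`, overlapping by `overlap`."""
    if not 0 <= overlap < size:
        raise ValueError("overlap must satisfy 0 <= overlap < size")
    chunks, start = [], 0
    while True:
        chunks.append(text[start:start + size])
        if start + size >= len(text):
            return chunks
        start += size - overlap


def vectorize(text: str) -> Counter:
    """Sparse bag-of-words vector: a stand-in for a dense embedding."""
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norms = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norms if norms else 0.0


def top_k(query: str, chunks: list[str], k: int = 2) -> list[str]:
    """Return the k chunks most similar to the query (a toy vector-store lookup)."""
    q = vectorize(query)
    return sorted(chunks, key=lambda c: cosine(q, vectorize(c)), reverse=True)[:k]


contract = (
    "1. Services. The provider delivers consulting services. "
    "2. Payment. Invoices are due within 15 days. "
    "3. Termination. Either party may terminate this agreement with 30 days written notice."
)
chunks = chunk_text(contract, size=80, overlap=20)
print(top_k("termination clause", chunks, k=1))
```

Replacing vectorize with a real embedding model and the sorted list with a vector store gives the general shape of the retrieval step. The chunk size and overlap trade the amount of context per chunk against the risk of splitting a clause across chunk boundaries.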

Planning the app workflow

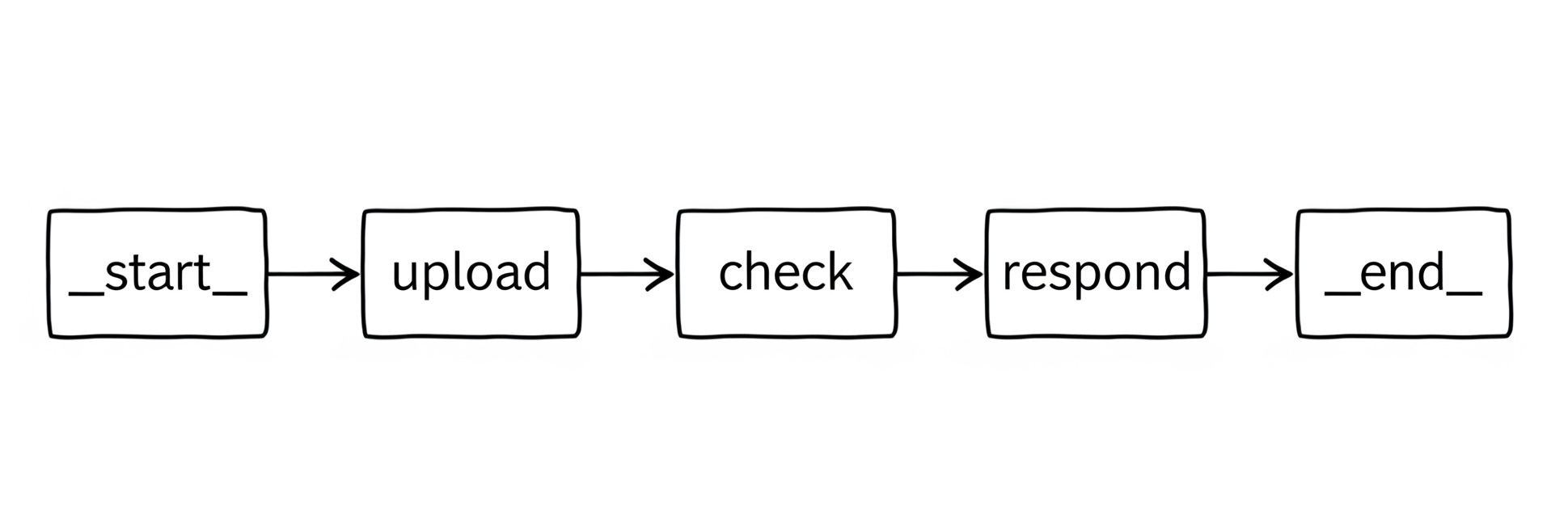

Having described the use case, the next step is to design the graph nodes and workflow (Figure 1). For this use case, there are three main nodes to build:

- Upload the file: Load the document from a valid local path so that the LLM can process its contents.

- Check compliance rules: Verify whether the uploaded document satisfies the desired rule; in this case, whether it includes a termination clause.

- Respond: Generate a final response that approves or rejects the document, along with the LLM's reasoning.
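The check and response steps come down to asking the LLM a yes/no question about the retrieved text and turning its answer into a status. The sample outputs later in this article begin with "yes." or "no,", which suggests a reply format like the one assumed below. The prompt wording and the helpers build_prompt, parse_verdict, and format_response are hypothetical; only the two status messages are taken from the article's respond node.

```python
# Hypothetical prompt; the repository's actual wording is not shown in this article.
COMPLIANCE_PROMPT = (
    "Does the following document contain a termination clause? "
    "Start your answer with 'yes' or 'no', then explain briefly.\n\n{context}"
)


def build_prompt(chunks: list[str]) -> str:
    """Insert the retrieved chunks into the prompt template."""
    return COMPLIANCE_PROMPT.format(context="\n---\n".join(chunks))


def parse_verdict(reply: str) -> bool:
    """Treat the reply as compliant only if its first word is 'yes'."""
    words = reply.strip().lower().split()
    return bool(words) and words[0].strip(".,:;!") == "yes"


def format_response(compliant: bool, reasoning: str) -> str:
    """Build the final message using the status strings from the respond node."""
    status = (
        "Document Approved."
        if compliant
        else "Document Rejected. Missing termination clause."
    )
    return f"Final Response: {status}\nReasoning: {reasoning}"


reply = "no, the document does not contain a termination clause."
print(format_response(parse_verdict(reply), reply))
```

Checking only the first word keeps the parser strict: a reply that hedges or starts with anything other than "yes" is treated as non-compliant.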

To translate this design into LangChain Python code, one option is to define each node as a function (you could also define them as classes, depending on the use case and your development practices). In this case, each node is defined as a function:

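```python
# Node 1: load the PDF and split it into chunks (details elided)
def upload_and_extract(state):
    print("Loading & Chunking PDF...")
    ...


# Node 2: check the document for a termination clause using retrieval
def check_compliance_with_retrieval(state):
    print("Checking for 'termination clause'...")
    ...


# Node 3: build the final message from the compliance result
def respond(state):
    if state["compliant"]:
        msg = "Document Approved."
    else:
        msg = "Document Rejected. Missing termination clause."
    ...
```

Once the node functions and their logic are defined, the next step is to build the state graph that manages the use case workflow: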

```python
from langchain_core.runnables import RunnableLambda
from langgraph.graph import StateGraph

# State is the graph's state schema, defined elsewhere in app.py

# build the state graph
graph = StateGraph(State)
graph.add_node("upload", RunnableLambda(upload_and_extract))
graph.add_node("check", RunnableLambda(check_compliance_with_retrieval))
graph.add_node("respond", RunnableLambda(respond))

# run the nodes in sequence: upload -> check -> respond
graph.set_entry_point("upload")
graph.add_edge("upload", "check")
graph.add_edge("check", "respond")
graph.set_finish_point("respond")

# execute the workflow
workflow = graph.compile()
workflow.invoke({})
```

The full application example, along with its local execution configuration, is detailed in the next section.
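The graph code passes a State type to StateGraph without showing its definition, which lives elsewhere in app.py. LangGraph state schemas are commonly written as a TypedDict, so a minimal sketch might look like the following. Apart from compliant, which the respond node reads, every field name here is a hypothetical assumption, and check_stub only mimics how LangGraph merges a node's partial update into the state.

```python
from typing import List, TypedDict


class State(TypedDict, total=False):
    """Hypothetical state schema shared by the upload, check, and respond nodes."""

    document_path: str  # hypothetical: the PDF path taken from DOCUMENT_PATH
    chunks: List[str]  # hypothetical: text chunks extracted from the PDF
    compliant: bool  # read by the respond node in the article's code
    reasoning: str  # hypothetical: the LLM's explanation


def check_stub(state: State) -> State:
    # Hypothetical stand-in for check_compliance_with_retrieval. A LangGraph node
    # returns only the keys it changes; by default the graph overwrites those keys.
    text = " ".join(state.get("chunks", []))
    return {"compliant": "termination" in text.lower()}


state: State = {"document_path": "contract.pdf", "chunks": ["3. Termination. Either party may terminate."]}
state = {**state, **check_stub(state)}  # mimics LangGraph's default merge
print(state["compliant"])  # prints True
```

Declaring the schema with total=False lets the workflow start from an empty input, as in workflow.invoke({}), and lets each node fill in only its own fields.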

Set up the environment and execute the application

With the use case workflow and the purpose of each node designed, it’s time to look at the implementation details. The following procedure was tested in an environment with these specifications:

- OS: Fedora 41

- GPU: NVIDIA RTX 4060 Ti (16 GB VRAM)

- Red Hat AI Inference Server (RHAIIS) 3.0.0 image

- Python 3.12

Configure the application environment

Clone the GitHub repository:

```shell
git clone https://github.com/alexbarbosa1989/rhaiis-langchain.git
```

Move to the application directory:

```shell
cd rhaiis-langchain
```

Create a .env file to hold configuration values and sensitive information, such as variables and passwords. In this case, define the DOCUMENT_PATH variable with the full path to the PDF document to analyze. The repository provides an example PDF document at rhaiis-langchain/docs/example/contract-template.pdf:

```shell
echo "DOCUMENT_PATH=/home/user/rhaiis-langchain/docs/example/contract-template.pdf" >> .env
```

Verify the contents:

```shell
cat .env
DOCUMENT_PATH=/home/user/rhaiis-langchain/docs/example/contract-template.pdf
```

Create a Python virtual environment:

```shell
python3.12 -m venv --upgrade-deps venv
```

Activate the environment:

```shell
source venv/bin/activate
```

Install the libraries required to run the application:

```shell
pip install -r requirements.txt
```
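The application takes the document to analyze from the DOCUMENT_PATH value in the .env file. The repository may rely on a library such as python-dotenv for that; as a dependency-free sketch, the hypothetical load_env helper below parses simple KEY=VALUE lines, and the hypothetical resolve_document_path helper checks that the configured path points to an existing PDF before any processing starts.

```python
import os
from pathlib import Path
from typing import Dict


def load_env(path: str = ".env") -> Dict[str, str]:
    """Parse simple KEY=VALUE lines, skipping blanks and comments (no escapes)."""
    values: Dict[str, str] = {}
    for raw in Path(path).read_text(encoding="utf-8").splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        # Drop surrounding quotes, as in DOCUMENT_PATH="/path/to/file.pdf".
        values[key.strip()] = value.strip().strip("'\"")
    return values


def resolve_document_path(env: Dict[str, str]) -> Path:
    """Return DOCUMENT_PATH as a Path; a real environment variable takes precedence."""
    raw = os.environ.get("DOCUMENT_PATH") or env.get("DOCUMENT_PATH")
    if not raw:
        raise KeyError("DOCUMENT_PATH is not set")
    doc = Path(raw).expanduser()
    if doc.suffix.lower() != ".pdf" or not doc.is_file():
        raise FileNotFoundError(f"expected an existing PDF file at {doc}")
    return doc


# Usage, from the repository directory:
# print(resolve_document_path(load_env(".env")))
```

Failing early on a missing or mistyped path gives a clearer error than a failure deep inside PDF loading.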

Start the Red Hat AI Inference Server container

In another terminal window, start a Red Hat AI Inference Server instance to serve an LLM; this example serves the quantized RedHatAI/Meta-Llama-3.1-8B-Instruct-quantized.w8a8 model. Before running the command, make sure that Podman is configured as described in the Serving and inferencing with AI Inference Server section of the product documentation. The command reads your Hugging Face access token from the HF_TOKEN environment variable:

```shell
podman run -ti --rm --pull=newer \
  --user 0 \
  --shm-size=0 \
  -p 127.0.0.1:8000:8000 \
  --env "HUGGING_FACE_HUB_TOKEN=$HF_TOKEN" \
  --env "HF_HUB_OFFLINE=0" \
  -v ./rhaiis-cache:/opt/app-root/src/.cache \
  --device nvidia.com/gpu=all \
  --security-opt=label=disable \
  --name rhaiis \
  registry.redhat.io/rhaiis/vllm-cuda-rhel9:3.0.0 \
  --model RedHatAI/Meta-Llama-3.1-8B-Instruct-quantized.w8a8 \
  --max_model_len=4096
```

Run the application
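Before running the application, you can optionally confirm that the model server responds. vLLM, on which Red Hat AI Inference Server is based, exposes an OpenAI-compatible HTTP API under /v1, so a chat completions request to the port published by the podman command (127.0.0.1:8000) should return a reply once the model has loaded. The stdlib sketch below assumes that endpoint and the model name served above; build_chat_request and ask are hypothetical helpers, not part of the repository.

```python
import json
import urllib.request

BASE_URL = "http://127.0.0.1:8000/v1"  # port published by the podman command
MODEL = "RedHatAI/Meta-Llama-3.1-8B-Instruct-quantized.w8a8"


def build_chat_request(prompt: str, max_tokens: int = 64) -> urllib.request.Request:
    """Build a POST request for the OpenAI-compatible chat completions endpoint."""
    body = {
        "model": MODEL,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
        "temperature": 0,
    }
    return urllib.request.Request(
        f"{BASE_URL}/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(prompt: str, timeout: float = 60.0) -> str:
    """Send the request and return the assistant's reply (needs a running server)."""
    with urllib.request.urlopen(build_chat_request(prompt), timeout=timeout) as resp:
        payload = json.load(resp)
    return payload["choices"][0]["message"]["content"]


# Usage, with the container running:
# print(ask("Reply with one word: is the server up?"))
```

Setting temperature to 0 selects greedy decoding, which keeps short yes/no style answers stable between runs.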

Go back to the terminal session where you cloned the repository and activated the Python virtual environment, and run the application:

```shell
python app.py
```

The application follows the workflow designed earlier, processing the PDF document specified in the DOCUMENT_PATH variable. It uses the served LLM to check the document's contents for a termination clause and, based on that review, approves or rejects the document. The provided contract-template.pdf document satisfies the rule, so it should be approved:

```
Loading & Chunking PDF...
Checking for 'termination clause'...
Final Response: Document Approved.
Reasoning: yes.

the document contains a termination clause in section 3, which states: "either party may terminate this agreement with 30 days written notice. upon termination, all outstanding balances must be paid." this clause outlines the conditions for terminating the agreement.
```

To test a rejected document, update the DOCUMENT_PATH variable in the .env file to point to a document that doesn't contain a termination clause, and re-run the application:

```
Loading & Chunking PDF...
Checking for 'termination clause'...
Final Response: Document Rejected. Missing termination clause.
Reasoning: no, the document does not contain a termination clause.

a termination clause is a provision in a contract that specifies the conditions under which the contract may be terminated. the document provided appears to be an instruction manual for installing a topcase for a royal enfield himalayan motorcycle, and it does not mention anything about terminating a contract or agreement.
```

Finally, you can print a diagram of the configured workflow by uncommenting the last line in app.py and re-running the application:

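```python
# print the graph in ASCII format (OPTIONAL)
#print(workflow.get_graph().draw_ascii())
```

After uncommenting, the line reads:

```python
# print the graph in ASCII format (OPTIONAL)
print(workflow.get_graph().draw_ascii())
```

Drawing the graph in ASCII relies on the grandalf package; if it is not already installed in the virtual environment, install it with pip install grandalf. The additional expected output is: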

```
+-----------+
| __start__ |
+-----------+
      *
      *
      *
  +--------+
  | upload |
  +--------+
      *
      *
      *
  +-------+
  | check |
  +-------+
      *
      *
      *
 +---------+
 | respond |
 +---------+
      *
      *
      *
 +---------+
 | __end__ |
 +---------+
```

Wrapping up

This basic example shows one way to integrate LLMs into an application's design. Frameworks like LangChain extend what LLMs can do by adding workflow and state management tailored to specific business needs. Agentic approaches and tools such as vector stores for document processing are just some of the advanced capabilities these integrations make available.

Next, you can explore other Red Hat AI Inference Server (RHAIIS) serving options and integrations. For example, walk through the steps to run OpenAI’s Whisper model with Red Hat AI Inference Server on Red Hat Enterprise Linux 9 in Speech-to-text with Whisper and Red Hat AI Inference Server.