Imagine managing virtual machines (VMs) in your continuous integration (CI) process as easily as you would any other piece of software. Red Hat OpenShift Pipelines makes this vision a reality by providing a robust framework for building CI/CD systems. This framework empowers developers to seamlessly build, test, and deploy applications in a truly cloud-native way.

This article demonstrates how the integration of OpenShift Pipelines, Pipelines as Code, and Red Hat OpenShift Virtualization can automate the creation of standardized Windows golden images for efficient and consistent VM provisioning.

How it works

OpenShift Pipelines achieves this by managing pipelines, individual pipeline steps, and even pipeline runs as Kubernetes Custom Resources (CRs). The advantage of CRs lies in their ability to be stored as YAML code within files in a source control management (SCM) system like Git. This approach mirrors the principles of GitOps. By co-locating your pipeline definitions as code within the same SCM repository as your application code, you gain significant advantages.

It dramatically simplifies the versioning, reviewing, and collaborative aspects of pipeline changes, allowing them to evolve in lockstep with your application code. Furthermore, this integration provides a centralized vantage point, enabling you to monitor pipeline status and control execution directly from your SCM, eliminating the need to constantly switch between disparate systems.
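To make the "pipelines as CRs" idea concrete, here is a minimal, hypothetical Pipeline and PipelineRun as they might be stored as YAML in a Git repository. The object names and the container image are placeholders and are not part of the windows-image-builder project.

```yaml
# Hypothetical minimal example: names and image are placeholders.
apiVersion: tekton.dev/v1
kind: Pipeline
metadata:
  name: hello-pipeline
spec:
  tasks:
    - name: say-hello              # one pipeline step, defined inline as a Task
      taskSpec:
        steps:
          - name: echo
            image: registry.access.redhat.com/ubi9/ubi-minimal
            script: |
              echo "Hello from a pipeline stored in Git"
---
apiVersion: tekton.dev/v1
kind: PipelineRun
metadata:
  name: hello-pipeline-run
spec:
  pipelineRef:
    name: hello-pipeline           # runs the Pipeline defined above
```

Creating these objects in a cluster (for example, with `oc create -f`) defines the Pipeline and starts one run of it. Because both are plain YAML files, they can be reviewed and versioned like any other code.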

Red Hat OpenShift Virtualization stands as a powerful add-on to the OpenShift platform, seamlessly integrating the management of traditional VMs directly within your cloud-native environment.

OpenShift Virtualization, combined with the declarative power of OpenShift Pipelines and the "pipelines as code" paradigm, fundamentally transforms CI/CD. This synergy enables developers to manage and interact with VMs directly within their automated workflows, treating them as native objects. Consequently, this unified approach streamlines operations, accelerates application delivery, and bridges the gap between traditional virtualization and modern cloud-native practices.

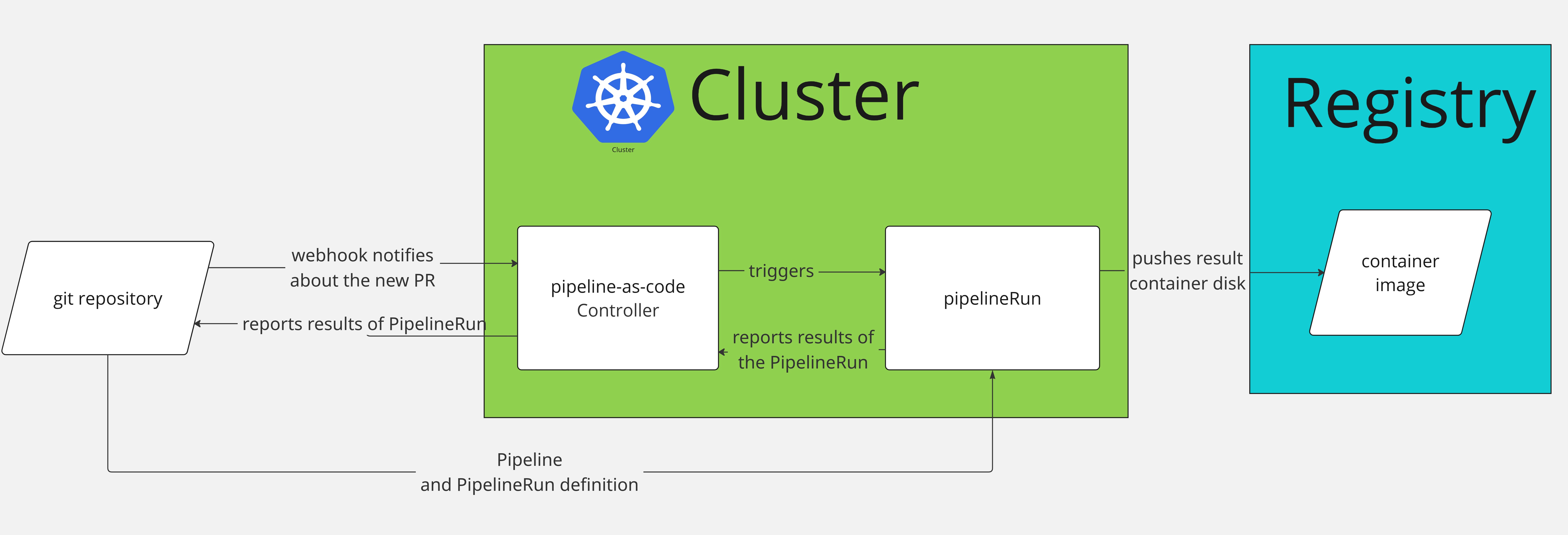

The diagram in Figure 1 shows the high-level functionality of the Windows image-building service.

The windows-image-builder repository contains Pipeline and PipelineRun manifests, plus ConfigMaps with answer files and PowerShell scripts. The Pipelines as Code (PAC) controller manages the connection between the repository and the cluster. When a user creates a pull request (PR), the repository webhook notifies the PAC controller running inside the cluster.

Based on its configuration and the changes introduced in the PR, the PAC controller runs the appropriate Pipeline by creating a PipelineRun for it. Because the example PipelineRun references a Pipeline stored in the same repository, the PAC controller also fetches that Pipeline from the repository and applies it.
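On the cluster side, PAC represents the link to a Git repository as a Repository custom resource in your project. The following is a minimal sketch. The project name and repository URL are placeholders. Depending on how you connect the repository (GitHub App or webhook), PAC may need additional fields, such as a reference to a Git provider token. The PAC command-line tool can generate this object for you.

```yaml
# Minimal sketch of a PAC Repository object; values in <> are placeholders.
apiVersion: pipelinesascode.tekton.dev/v1alpha1
kind: Repository
metadata:
  name: windows-image-builder
  namespace: <your-project>        # the OpenShift project where PipelineRuns are created
spec:
  url: "https://github.com/<owner>/windows-image-builder"
```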

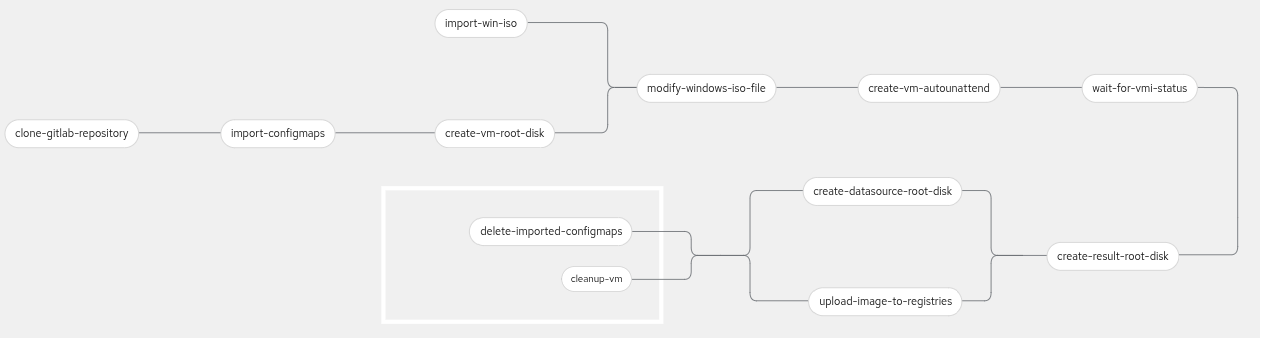

Once the PipelineRun completes successfully, PAC reports the results back to the PR. The full pipeline diagram is visible in Figure 2.

The project uses a modified windows-efi-installer pipeline (the original pipeline is located here).

The original pipeline needed modifications because it downloads the ConfigMaps and creates a Windows disk that is available only in the cluster where the pipeline runs. The updated pipeline instead clones the windows-image-builder repository. From the cloned repository, it deploys the ConfigMaps with answer files and PowerShell scripts. These ConfigMaps must be located under the configmaps/autounattend/ path in the repository.
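The following hypothetical ConfigMap shows the general shape of such a file, for example saved as configmaps/autounattend/windows11-custom.yaml. The object name, the file name, and the data keys (`autounattend.xml`, `post-install.ps1`) are illustrative assumptions. Check the example ConfigMaps in the repository for the key names the pipeline actually expects.

```yaml
# Hypothetical ConfigMap: name and data keys are assumptions, not taken from the repository.
apiVersion: v1
kind: ConfigMap
metadata:
  name: windows11-custom-autounattend
data:
  # Windows Setup answer file
  autounattend.xml: |
    <?xml version="1.0" encoding="utf-8"?>
    <unattend xmlns="urn:schemas-microsoft-com:unattend">
      <!-- settings for each Windows Setup configuration pass go here -->
    </unattend>
  # PowerShell script referenced from the answer file
  post-install.ps1: |
    Write-Host "Post-install configuration"
```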

To make the resulting image usable in other clusters, the pipeline includes a task that pushes the image to an external registry, from which any cluster can import it. With an appropriate answer file, the pipeline can build UEFI-enabled golden images for Windows 10, Windows 11, and Windows Server 2012, 2016, 2019, 2022, and 2025. The example repository currently contains answer files for Windows 11 and Windows Server 2025.

The pipeline expects the Windows ISO to already be present in the cluster as a persistent volume claim (PVC).

Prerequisites

- You will need a Red Hat OpenShift cluster running version 4.17 or newer.

- Install Red Hat OpenShift Pipelines and OpenShift Virtualization from the OperatorHub.

- Import the Windows ISO file into a PVC. You can use a DataVolume for this:

```yaml
apiVersion: cdi.kubevirt.io/v1beta1
kind: DataVolume
metadata:
  name: windows-source
spec:
  source:
    http:
      url: <URL>            # download URL of the Windows ISO
  storage:
    volumeMode: Filesystem
    resources:
      requests:
        storage: 9Gi
```

- Install the command-line tools as documented here.

- Create a new OpenShift project, and connect the repository to the OpenShift cluster as documented here.

- Test the newly created webhook in GitHub (Settings > Webhooks).

- Add all required files from the example repository to your repository and push them:

```shell
git add .
git commit -sm "initialize windows-image-builder repository"
git push
```

Example PipelineRun

You run pipelines by creating PipelineRuns. You can find an example PipelineRun in windows-qe-virtio.yaml.

The example PipelineRun includes the parameter acceptEula. By setting this parameter, you are agreeing to the applicable Microsoft end user license agreement(s) for each deployment or installation for the Microsoft product(s). Set this parameter to true after reviewing the EULA. If you set it to false, the pipeline will exit in the first task.

A PipelineRun has two sections you typically customize: metadata.annotations and spec.params. Keep the spec.pipelineRef, spec.taskRunSpecs, and spec.workspaces fields as they are.

Annotations

Use annotations to configure PAC: for example, which Pipeline to use, and which events, branches, PR comments, or changed files should trigger the PipelineRun.

```yaml
apiVersion: tekton.dev/v1
kind: PipelineRun
metadata:
  name: windows11-qe-virtio-installer-run
  annotations:
    pipelinesascode.tekton.dev/pipeline: "pipeline/windows-autounattend.yaml"
    pipelinesascode.tekton.dev/on-comment: "^/windows11-qe-virtio"
    pipelinesascode.tekton.dev/on-event: "[incoming,push]"
    pipelinesascode.tekton.dev/on-target-branch: "[main]"
    pipelinesascode.tekton.dev/on-cel-expression: >-
      (target_branch == "main") &&
      (files.all.exists(x, x.matches('.tekton/windows-qe-virtio.yaml|configmaps/autounattend/windows11-qe-virtio.yaml')))
```

- `pipelinesascode.tekton.dev/pipeline: "pipeline/windows-autounattend.yaml"`: Tells PAC which Pipeline from the repository to use.

- `pipelinesascode.tekton.dev/on-comment: "^/windows11-qe-virtio"`: Triggers the PipelineRun when a user comments `/windows11-qe-virtio` on the PR.

- `pipelinesascode.tekton.dev/on-event: "[incoming,push]"`: Triggers the PipelineRun when the event received by PAC is `incoming` (the PipelineRun is triggered through an HTTP incoming webhook) or `push` (new commits are pushed).

- `pipelinesascode.tekton.dev/on-target-branch: "[main]"`: Triggers the PipelineRun only if the PR is opened against the main branch.

- `pipelinesascode.tekton.dev/on-cel-expression`: Triggers the PipelineRun if the PR targets the main branch and changes `.tekton/windows-qe-virtio.yaml` or `configmaps/autounattend/windows11-qe-virtio.yaml`.

When on-cel-expression is set, PAC evaluates the CEL expression instead of the on-event and on-target-branch annotations. In practice, the expression is what selects the PRs and changed files that start a build.
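If you want the event type to be explicit, PAC also exposes an `event` variable (`pull_request` or `push`) to CEL expressions. The following sketch assumes you want only pull requests against main that touch these two files to start a build. It is a PipelineRun fragment, and the other annotations are omitted.

```yaml
# Sketch only: fragment of the PipelineRun metadata, other annotations omitted.
metadata:
  annotations:
    pipelinesascode.tekton.dev/pipeline: "pipeline/windows-autounattend.yaml"
    # event, target_branch, and files are variables PAC provides to CEL
    pipelinesascode.tekton.dev/on-cel-expression: >-
      event == "pull_request" &&
      target_branch == "main" &&
      files.all.exists(x, x.matches('.tekton/windows-qe-virtio.yaml|configmaps/autounattend/windows11-qe-virtio.yaml'))
```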

Parameters

This section contains all the parameters the pipeline needs. Note the resultRegistryURL and secretName parameters. The resultRegistryURL parameter specifies where the resulting image is pushed. Use a full image reference, including a tag. The secretName parameter is the name of the Secret that holds the registry credentials.

```yaml
apiVersion: tekton.dev/v1
kind: PipelineRun
metadata:
  name: windows11-qe-virtio-installer-run
spec:
  params:
    - name: acceptEula
      value: "false"     # set to "true" only after reviewing the Microsoft EULA
    - name: secretName
      value: kubevirt-disk-uploader-credentials-quay   # Secret with the registry credentials
    - name: resultRegistryURL
      value: quay.io/<user>/<image-name>:<tag>         # full image reference, including the tag
```

The Secret must have this format:

```yaml
apiVersion: v1
kind: Secret
metadata:
  name: kubevirt-disk-uploader-credentials-quay   # must match the secretName parameter
type: Opaque
stringData:
  accessKeyId: <ACCESS_KEY_ID>   # registry user name
  secretKey: <SECRET_KEY>        # registry password or token
```

You can adjust all other parameters depending on which Windows version you want to build. PAC populates the revision and repository-url parameters, so do not change them.

How to trigger the pipeline

To create a new image, add a new PipelineRun with the appropriate parameters to the .tekton folder of the repository, and add the matching ConfigMap under configmaps/autounattend/.

Commit these files and open a PR in your repository connected to the cluster. The build should start within a few seconds. You can monitor the progress by navigating to the Pipelines tab in the left menu and selecting the PipelineRuns tab.
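As an illustration, a PipelineRun for a hypothetical Windows Server 2025 build could begin as follows. The file name (.tekton/windows-server2025.yaml), the run name, the comment trigger, and the ConfigMap path are assumptions chosen for this sketch. The remaining fields come from the example PipelineRun.

```yaml
# Hypothetical file: .tekton/windows-server2025.yaml
apiVersion: tekton.dev/v1
kind: PipelineRun
metadata:
  name: windows-server2025-installer-run
  annotations:
    pipelinesascode.tekton.dev/pipeline: "pipeline/windows-autounattend.yaml"
    pipelinesascode.tekton.dev/on-comment: "^/windows-server2025"
    pipelinesascode.tekton.dev/on-event: "[incoming,push]"
    pipelinesascode.tekton.dev/on-target-branch: "[main]"
    pipelinesascode.tekton.dev/on-cel-expression: >-
      (target_branch == "main") &&
      (files.all.exists(x, x.matches('.tekton/windows-server2025.yaml|configmaps/autounattend/windows-server2025.yaml')))
spec:
  params:
    - name: acceptEula
      value: "false"     # set to "true" only after reviewing the Microsoft EULA
    - name: secretName
      value: kubevirt-disk-uploader-credentials-quay
    - name: resultRegistryURL
      value: quay.io/<user>/windows-server2025:<tag>
  # Copy spec.pipelineRef, spec.taskRunSpecs, spec.workspaces, and the remaining
  # Windows-specific parameters unchanged from the example PipelineRun.
```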

Watch the entire automation process in action in this video:

Results

The example windows11-qe-virtio ConfigMap includes answer files that install Windows 11 with VirtIO drivers, Windows Subsystem for Linux (WSL), and Python, and that enable an SSH server. Setup then skips the out-of-box experience (OOBE), and the VM shuts down. The resulting image is suitable for running Python test scripts or executing scripts within WSL. Alternatively, you can create a custom answer file to install your own software and configuration.

If you need Windows images with different configurations, create a new PipelineRun and a new ConfigMap in the windows-image-builder repository, then open a new PR. The automation triggers a new PipelineRun in the cluster. When the PipelineRun finishes, your new Windows image is waiting for you in the registry you defined.

The newly created golden image can be imported into other clusters as described in the article Automate VM golden image management with OpenShift.
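As a quick sketch of the consuming side, a DataVolume with a registry source can pull the pushed image into another cluster. This assumes the pushed image uses the containerDisk layout that the Containerized Data Importer registry source expects. The DataVolume name and the storage size are placeholders. The referenced Secret uses the same accessKeyId/secretKey format shown earlier and must exist in the target namespace.

```yaml
# Sketch: import the golden image from the registry into another cluster.
apiVersion: cdi.kubevirt.io/v1beta1
kind: DataVolume
metadata:
  name: windows11-golden-image
spec:
  source:
    registry:
      url: "docker://quay.io/<user>/<image-name>:<tag>"
      secretRef: kubevirt-disk-uploader-credentials-quay   # needed only for private repositories
  storage:
    resources:
      requests:
        storage: 64Gi   # placeholder: size it to fit the installed Windows disk
```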

Final thoughts

This article showed how to set up your own automated pipeline for building Windows golden images. With this approach, a PR that changes an answer file (autounattend.xml), which controls Windows setup and configuration, automatically triggers the Windows image builder to produce a new, updated Windows image. This keeps your golden images current, consistent, and aligned with your latest desired configuration.