Although continuous integration (CI) is widely used in public projects, free CI services impose limits on resources and execution time. For instance, the GitHub Actions free tier limits you to agents with 2 CPUs and 7 GB of memory and a total execution time of 2,000 minutes per month. Other CI providers, like Azure Pipelines or Travis CI, have similar limitations, so the free tier is not enough for some use cases. Fortunately, there is Testing Farm, which is available to community projects maintained or co-maintained by Red Hat or by Fedora/CentOS projects.

In this article, we focus on how to use Testing Farm for projects whose tests are written and managed in their own testing frameworks, such as JUnit.

What is Testing Farm?

Testing Farm is an open-source testing system offered as a service. You can use Testing Farm in various ways such as:

- Use the CLI on your local machine to create a testing machine and run tests on it.

- Integrate it into the project as a CI check via GitHub Actions or Packit.

- Send HTTP requests to the service API with desired requirements.
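To make the third option more concrete, here is a minimal sketch of what a raw API request could look like. The JSON field names (`api_key`, `test.fmf`, `environments`) and the use of `/v0.1/requests` as the submission endpoint are assumptions based on the API URL shown later in this article, so verify them against the Testing Farm API documentation. The function names are hypothetical.

```shell
#!/bin/sh
# Sketch only: the JSON field names below are assumptions, not a verified schema.

# Print a JSON request body for one fmf plan on one architecture.
# Arguments: git URL, git ref, plan name, compose, architecture.
# The API token is read from the TESTING_FARM_API_TOKEN variable.
tf_request_body() {
  printf '{"api_key": "%s", "test": {"fmf": {"url": "%s", "ref": "%s", "name": "%s"}}, "environments": [{"arch": "%s", "os": {"compose": "%s"}}]}\n' \
    "${TESTING_FARM_API_TOKEN:-}" "$1" "$2" "$3" "$5" "$4"
}

# Submit the request with curl. Not called here: it needs network access
# and a valid token. The endpoint is an assumption.
tf_submit() {
  tf_request_body "$@" | curl --silent --fail \
    --header 'Content-Type: application/json' \
    --data @- \
    "https://api.dev.testing-farm.io/v0.1/requests"
}
```

Calling `tf_request_body` on its own prints the payload, which is a convenient way to inspect it before sending anything.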

The tests are defined via tmt, a test management tool that lets users manage and execute tests in different environments. For more details about how Fedora manages testing via tmt, check out the Fedora CI documentation.

Strimzi e2e tests

As a reference project, we use strimzi-kafka-operator and its test suite. The test suite is written in Java and uses the JUnit 5 test framework. As an execution environment, it requires a Kubernetes platform such as Red Hat OpenShift, Minikube, EKS, or similar. Because of these requirements, it is not easy to run the tests with the full potential of parallel test execution on Azure Pipelines (the main CI tool in the Strimzi organization).

How to onboard

As the first step, you need access to the Testing Farm API. You should follow the onboarding guide, part of the official documentation.

Get the secret

This secret is specific to the user and is used for authentication and authorization to the service.

Add tmt to your project

Even if you don't use tmt for test case management, you need to add a few pieces of tmt metadata to the project so that Testing Farm understands your needs.

You have to mark the root folder of your project with a .fmf directory, which you can create with the tmt init command. For more details, check our configuration in the Strimzi repository.
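As a rough illustration of what that marker looks like on disk, the sketch below creates it by hand. It assumes the marker is a `.fmf/version` file containing `1`; running `tmt init` in the project root remains the usual way to create it. The function name is hypothetical.

```shell
#!/bin/sh
# Create the fmf root marker by hand; `tmt init` is the usual way to do this.
# Argument: project root directory (defaults to the current directory).
mark_fmf_root() {
  root="${1:-.}"
  mkdir -p "$root/.fmf"
  # Assumption: the marker is a single file holding the fmf format version.
  echo 1 > "$root/.fmf/version"
}
```

After this step, the plan and test files described in the next sections are looked up relative to the folder that contains `.fmf`.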

Create a test plan

The plan file for Testing Farm contains the test plan definition. This definition is composed of hardware requirements, preparation steps for creating a VM executor, and specific plans. Each specific plan defines selectors for the tests that should be executed. As part of the preparation steps, we also spin up the Minikube cluster, then build and push the Strimzi container images used during testing. At the bottom of the file, we specify the names of the specific test plans and the configuration for running the specific tests defined later in this article.

The following is an example of our plan:

    # TMT test plan definition
    # https://tmt.readthedocs.io/en/latest/overview.html

    # Baseline common for all test plans
    #######################################################################
    summary: Strimzi test suite
    discover:
      how: fmf

    # Required HW
    provision:
      hardware:
        memory: ">= 24 GiB"
        cpu:
          processors: ">= 8"

    # Install required packages and scripts for running strimzi suite
    prepare:
      - name: Install packages
        how: install
        package:
          - wget
          - java-17-openjdk-devel
          - xz
          - make
          - git
          - zip
          - coreutils

      # ... omitted

      - name: Build strimzi images
        how: shell
        script: |
          # build images
          eval $(minikube docker-env)
          ARCH=$(uname -m)
          if [[ ${ARCH} == "aarch64" ]]; then
            export DOCKER_BUILD_ARGS="--platform linux/arm64 --build-arg TARGETPLATFORM=linux/arm64"
          fi
          export MVN_ARGS="-B -DskipTests -Dmaven.javadoc.skip=true --no-transfer-progress"
          export DOCKER_REGISTRY="localhost:5000"
          export DOCKER_ORG="strimzi"
          export DOCKER_TAG="test"
          make java_install
          make docker_build
          make docker_tag
          make docker_push

    # Discover tmt defined tests in tests/ folder
    execute:
      how: tmt

    # Post install step to copy logs
    finish:
      how: shell
      script: ./systemtest/tmt/scripts/copy-logs.sh

    #######################################################################

    /smoke:
      summary: Run smoke strimzi test suite
      discover+:
        test:
          - smoke

    /regression-operators:
      summary: Run regression strimzi test suite
      discover+:
        test:
          - regression-operators

    /regression-components:
      summary: Run regression strimzi test suite
      discover+:
        test:
          - regression-components

    /kraft-operators:
      summary: Run regression kraft strimzi test suite
      discover+:
        test:
          - kraft-operators

    /kraft-components:
      summary: Run regression kraft strimzi test suite
      discover+:
        test:
          - kraft-components

    /upgrade:
      summary: Run upgrade strimzi test suite
      provision:
        hardware:
          memory: ">= 8 GiB"
          cpu:
            processors: ">= 4"
      discover+:
        test:
          - upgrade

To see the full version, refer to our repository.
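The specific plans at the bottom of the file are the names you later pass to Testing Farm. As a quick sketch, the helper below lists them by scanning a plan file for top-level keys that start with `/`. It is a simplification with a hypothetical function name; `tmt plan ls` is the proper way to list plans when tmt is installed.

```shell
#!/bin/sh
# Print the names of the specific plans (top-level keys starting with "/")
# found in an fmf plan file. Argument: path to the plan file.
list_subplans() {
  sed -n 's|^/\([A-Za-z0-9_-]*\):.*$|\1|p' "$1"
}
```

For the plan above, this would print `smoke`, `regression-operators`, and so on, one name per line.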

How to execute tests

To execute tests, we created a simple shell script that calls the Maven command to run the Strimzi test suite. We also define test metadata that specifies environment variables for the test suite and other configuration, such as timeouts.

In this test metadata example, you can see that we specify default environment variables and configuration at the top, and the specific tests override this configuration:

    test:
      ./test.sh
    duration: 2h
    environment:
      DOCKER_ORG: "strimzi"
      DOCKER_TAG: "test"
      TEST_LOG_DIR: "systemtest/target/logs"
      TESTS: ""
      TEST_GROUPS: ""
      EXCLUDED_TEST_GROUPS: "loadbalancer"
      CLUSTER_OPERATOR_INSTALLTYPE: bundle
    adjust:
      - environment+:
          EXCLUDED_TEST_GROUPS: "loadbalancer,arm64unsupported"
        when: arch == aarch64, arm64

    /smoke:
      summary: Run smoke strimzi test suite
      tag: [smoke]
      duration: 20m
      tier: 1
      environment+:
        TEST_PROFILE: smoke

    /upgrade:
      summary: Run upgrade strimzi test suite
      tag: [strimzi, kafka, upgrade]
      duration: 5h
      tier: 2
      environment+:
        TEST_PROFILE: upgrade

    /regression-operators:
      summary: Run regression strimzi test suite
      tag: [strimzi, kafka, regression, operators]
      duration: 10h
      tier: 2
      environment+:
        TEST_PROFILE: operators

    /regression-components:
      summary: Run regression strimzi test suite
      tag: [strimzi, kafka, regression, components]
      duration: 12h
      tier: 2
      environment+:
        TEST_PROFILE: components

    /kraft-operators:
      summary: Run regression kraft strimzi test suite
      tag: [strimzi, kafka, kraft, operators]
      duration: 8h
      tier: 2
      environment+:
        TEST_PROFILE: operators
        STRIMZI_FEATURE_GATES: "+UseKRaft,+StableConnectIdentities"

    /kraft-components:
      summary: Run regression kraft strimzi test suite
      tag: [strimzi, kafka, kraft, components]
      duration: 8h
      tier: 2
      environment+:
        TEST_PROFILE: components
        STRIMZI_FEATURE_GATES: "+UseKRaft,+StableConnectIdentities"
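To show how a test entry point might consume these variables, here is a hypothetical sketch. It is not the Strimzi test.sh script. It assumes the test module is called `systemtest` and that test selection goes through the standard Maven Failsafe/Surefire properties `it.test`, `groups`, and `excludedGroups`. It only prints the Maven command instead of running it.

```shell
#!/bin/sh
# Hypothetical sketch of a test.sh entry point; not the actual Strimzi script.
# Prints the Maven command selected by the environment variables from the
# test metadata (TEST_PROFILE, TESTS, TEST_GROUPS, EXCLUDED_TEST_GROUPS).
build_mvn_command() {
  cmd="mvn verify -pl systemtest -P ${TEST_PROFILE:-smoke}"
  # Optional filters are added only when the variable is non-empty.
  if [ -n "${TESTS:-}" ]; then
    cmd="$cmd -Dit.test=${TESTS}"
  fi
  if [ -n "${TEST_GROUPS:-}" ]; then
    cmd="$cmd -Dgroups=${TEST_GROUPS}"
  fi
  if [ -n "${EXCLUDED_TEST_GROUPS:-}" ]; then
    cmd="$cmd -DexcludedGroups=${EXCLUDED_TEST_GROUPS}"
  fi
  echo "$cmd"
}
```

With the smoke defaults from the metadata, the sketch prints a command that excludes the `loadbalancer` group. On aarch64, the `adjust` rule would make the same script exclude `arm64unsupported` as well, without any change to the script itself.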

Run Testing Farm from the command line

Finally, we can try our changes from the command line. We need to install the Testing Farm CLI tool and set up the API token. To install the Testing Farm CLI, follow this guide.

For example, we can run the smoke tests on Fedora 38 on both architectures (x86_64 and aarch64) with one command:

    $ testing-farm request --compose Fedora-38 --git-url https://github.com/strimzi/strimzi-kafka-operator.git --git-ref main --arch x86_64,aarch64 --plan smoke
    📦 repository https://github.com/strimzi/strimzi-kafka-operator.git ref main test-type fmf
    💻 Fedora-38 on x86_64
    💻 Fedora-38 on aarch64
    🔎 api https://api.dev.testing-farm.io/v0.1/requests/*******-40ba-8a45-3c1fbe3da73a
    💡 waiting for request to finish, use ctrl+c to skip
    👶 request is waiting to be queued
    👷 request is queued
    🚀 request is running
    🚢 artifacts https://artifacts.dev.testing-farm.io/*******-40ba-8a45-3c1fbe3da73a

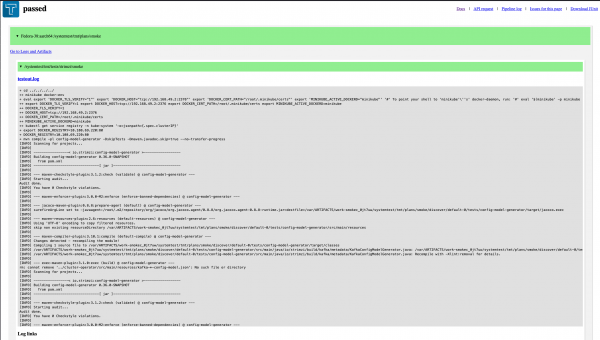

When the test run is completed, we can see the results in the web UI, as shown in Figure 1.
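If you want to request several plans, a small wrapper can generate one command per plan. The sketch below is a dry run: it only prints commands, using the same flags as the command above, so you can review them first. The function name is hypothetical, and the sketch assumes the CLI reads the token from the `TESTING_FARM_API_TOKEN` environment variable.

```shell
#!/bin/sh
# Print one `testing-farm request` command per plan name given as arguments.
# Only flags that appear in the command shown earlier are used.
tf_commands_for_plans() {
  for plan in "$@"; do
    echo "testing-farm request --compose Fedora-38" \
      "--git-url https://github.com/strimzi/strimzi-kafka-operator.git" \
      "--git-ref main --arch x86_64,aarch64 --plan $plan"
  done
}
```

For example, `tf_commands_for_plans smoke upgrade` prints two commands. Piping the output to `sh` would run them one after another once the token is exported.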

How Packit service simplifies integration

Testing Farm deals with resource problems, but we still need to deal with execution time limits. In Strimzi, our full regression takes around 20 hours, which is impractical to run on GitHub Actions and quite complicated to run on Azure Pipelines. This is where Packit service comes in.

Packit is an open-source project that eases the integration of your project with Fedora Linux, CentOS Stream, and other distributions. Packit is mostly used by projects that build RPM packages. However, it can also be used solely to trigger tests on Testing Farm.

To use Packit service, follow the onboarding guide. We use Packit on GitHub: we installed the application and granted it access to our organization.

After finishing the onboarding, you can create a configuration file for Packit in the repository. Because we only want to run tests, we use job: tests, but you can use different kinds of jobs and triggers. For the full list of Packit options, check the documentation or the article How to set up Packit to simplify upstream project integration.

The following is an example of our configuration file:

    # Default packit instance is a prod and only this is used
    # stg instance is present for testing new packit features in forked repositories where stg is installed.
    packit_instances: ["prod", "stg"]
    upstream_project_url: https://github.com/strimzi/strimzi-kafka-operator
    issue_repository: https://github.com/strimzi/strimzi-kafka-operator
    jobs:
      - job: tests
        trigger: pull_request
        # Suffix for job name
        identifier: "upgrade"
        targets:
          # This target is not used at all by our tests, but it has to be one of the available - https://packit.dev/docs/configuration/#aliases
          - centos-stream-9-x86_64
          - centos-stream-9-aarch64
        # We don't need to build any packages for Fedora/RHEL/CentOS, it is not related to Strimzi tests
        skip_build: true
        manual_trigger: true
        labels:
          - upgrade
        tf_extra_params:
          test:
            fmf:
              name: upgrade
      #######################################################################
      - job: tests
        trigger: pull_request
        # Suffix for job name
        identifier: "regression-operators"
        targets:
          # This target is not used at all by our tests, but it has to be one of the available - https://packit.dev/docs/configuration/#aliases
          - centos-stream-9-x86_64
          - centos-stream-9-aarch64
        # We don't need to build any packages for Fedora/RHEL/CentOS, it is not related to Strimzi tests
        skip_build: true
        manual_trigger: true
        labels:
          - regression
          - operators
          - regression-operators
          - ro
        tf_extra_params:
          test:
            fmf:
              name: regression-operators

      # ... omitted

The full .packit.yaml file is available on Strimzi GitHub.

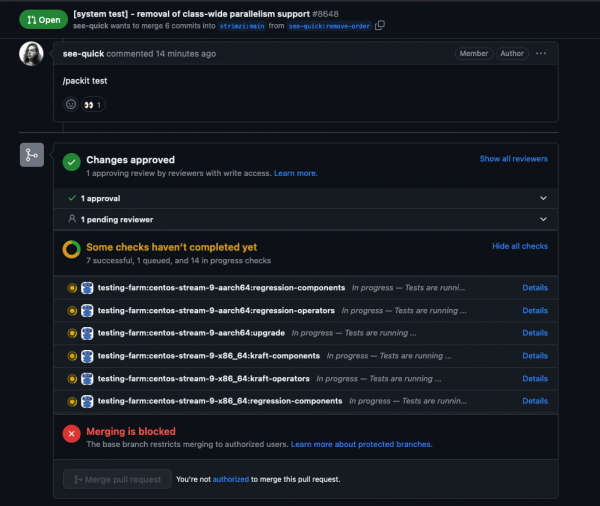

If you successfully onboard to the Packit service, you will see the triggered check in the GitHub pull request, as shown in Figure 2.
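Because the jobs above set `manual_trigger: true`, they start only when someone asks for them. The sketch below builds the pull request comment for that purpose. It assumes Packit accepts a comment of the form `/packit test --labels <label,...>`, so check the Packit documentation for the exact syntax. The function name is hypothetical.

```shell
#!/bin/sh
# Build the pull request comment that asks Packit to run labeled test jobs.
# Arguments: one or more labels from the `labels` lists in .packit.yaml.
packit_test_comment() {
  # Join the labels with commas, then drop the trailing comma.
  labels=$(printf '%s,' "$@")
  echo "/packit test --labels ${labels%,}"
}
```

For example, `packit_test_comment regression operators` prints a comment that selects the regression-operators job. You can paste the result into the pull request, or post it with the GitHub CLI: `gh pr comment <number> --body "$(packit_test_comment upgrade)"`.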

Testing Farm simplifies your upstream project

In this article, you've learned how to set up and use Testing Farm with Packit. These two services make building and testing your upstream project easy. Now you can onboard and start using them.

Last updated: September 19, 2023