After building my Kafka Connect image with Debezium, as demonstrated in Hugo Guerrero's article Improve your Kafka Connect builds of Debezium, I needed a filter so that only certain events from the database table would reach my topics. I was able to do this using the Debezium filter SMT with Groovy.

What is Debezium SMT?

The Debezium filter SMT (single message transform) is a feature provided by Debezium that lets you process only the records that you find relevant. To use it, you need to include an implementation of the JSR 223 API (Scripting for the Java Platform) among the plugins inside your Kafka Connect image.

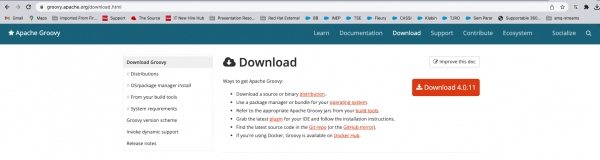

Note that Debezium does not come with a JSR 223 implementation, so you will need to provide the libraries to use this feature. We will use the Groovy implementation of JSR 223, so you can download all the relevant JAR files from the Groovy website.

There are other JSR 223 implementations that you can use, but we will not cover them here. For more information about them, refer to the Debezium documentation.

Download the files

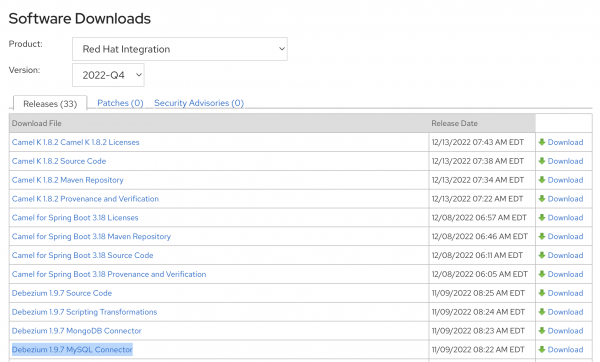

First of all, you will need your database plugin (e.g., SQL Server or MySQL) from the download page. Figure 1 illustrates the Red Hat software downloads page.

This is the connector that you will need to add to your Kafka Connect image to capture changes (CDC) from your database. You will also need to download the scripting transformation package.

With these in place, go to the Groovy website and download the zip file that contains all the JAR files, as shown in Figure 2.

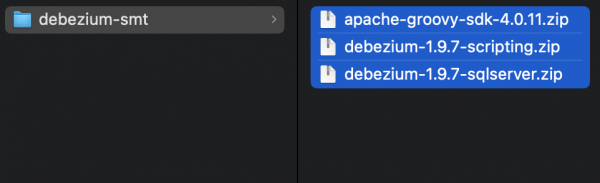

Figure 3 shows the three zip files that we will unzip in the next steps.

Creating the image

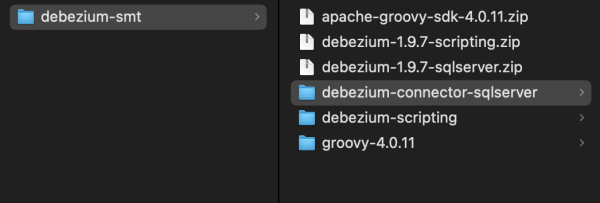

Unzip the files downloaded in the last step. This example uses the SQL Server plugin, as shown in Figure 4.

Go to the debezium-scripting folder and copy the debezium-scripting-1.9.7.Final...jar and place it inside the debezium-connector-sqlserver folder.

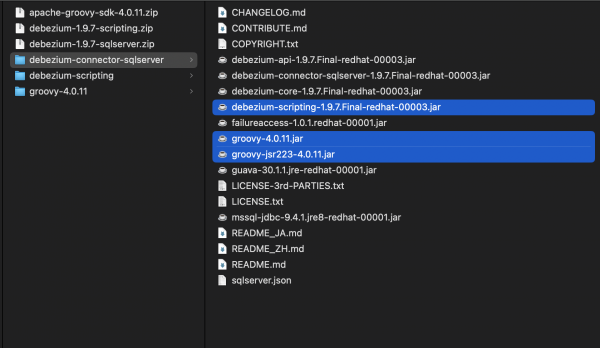

Then go to the groovy-4.0.11/lib folder and copy the jars groovy-4.0.11.jar and groovy-jsr223-4.0.11.jar. Place them in the debezium-connector-sqlserver folder. At this point, your folder should look like Figure 5. Keep in mind that your versions may be different. These are the versions available at the time of this article.

Now, zip the debezium-connector-sqlserver folder and upload the zip file to your Nexus repository or Git. Then use it as your artifact, as shown in the previously mentioned Hugo Guerrero article.
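
If you prefer to script the copy and zip steps, the following is a minimal Python sketch rather than part of the original procedure. The function name `assemble_plugin` and the archive name are hypothetical, and the folder layout is assumed to match the unzipped downloads described above. The scripting JAR is located with a glob pattern because its exact file name depends on the version you downloaded.

```python
from __future__ import annotations

import shutil
import zipfile
from pathlib import Path


def assemble_plugin(connector_dir: Path, extra_jars: list[Path], archive_base: Path) -> Path:
    """Copy the extra JARs into the connector folder, then zip that folder.

    Returns the path of the created zip archive.
    """
    for jar in extra_jars:
        # Place each scripting/Groovy JAR next to the connector's own JARs.
        shutil.copy2(jar, connector_dir / jar.name)

    # make_archive appends ".zip" to archive_base. The connector folder
    # itself becomes the top-level entry inside the archive.
    archive = shutil.make_archive(
        str(archive_base),
        "zip",
        root_dir=connector_dir.parent,
        base_dir=connector_dir.name,
    )
    return Path(archive)


# Example call (paths are assumptions; adjust them to your own downloads):
#
# jars = list(Path("debezium-scripting").glob("debezium-scripting-*.jar"))
# jars += [
#     Path("groovy-4.0.11/lib/groovy-4.0.11.jar"),
#     Path("groovy-4.0.11/lib/groovy-jsr223-4.0.11.jar"),
# ]
# archive = assemble_plugin(
#     Path("debezium-connector-sqlserver"), jars, Path("debezium-connector-sqlserver")
# )
# print(zipfile.ZipFile(archive).namelist())
```

Listing the archive contents before uploading is a quick way to confirm that the scripting and Groovy JAR files sit in the same folder as the connector.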

How to use transformations

To use this feature, create your Kafka connectors and configure the transformation as in the following example:

    kind: KafkaConnector
    apiVersion: kafka.strimzi.io/v1beta2
    metadata:
      name: sql-connector-for-inserts
      labels:
        strimzi.io/cluster: my-connect-cluster
      namespace: kafka
    spec:
      class: io.debezium.connector.sqlserver.SqlServerConnector
      tasksMax: 1
      config:
        database.hostname: "server.earth.svc"
        database.port: "1433"
        database.user: "sa"
        database.password: "Password!"
        database.dbname: "InternationalDB"
        table.whitelist: "dbo.Orders"
        database.history.kafka.bootstrap.servers: "my-cluster-kafka-bootstrap:9092"
        # This property needs to have a unique value
        database.server.name: "internation-db-insert-topic"
        # This property needs to have a unique value
        database.history.kafka.topic: "dbhistory.internation-db-insert-topic"
        #### The transforms feature starts here. With the condition that the operation equals 'c',
        #### only events of that type are routed to the topic created by this connector.
        transforms: filter
        transforms.filter.language: jsr223.groovy
        transforms.filter.type: io.debezium.transforms.Filter
        transforms.filter.condition: value.op == 'c'
        transforms.filter.topic.regex: internation-db-insert-topic.dbo.Orders
        #### End of the transforms filter
        tombstones.on.delete: 'false'
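
To make the effect of the condition and topic.regex settings concrete, here is a small Python sketch that imitates the filter's decision. It is a simplified model and not Debezium's implementation: the function names are hypothetical, the sample events are made up, and it assumes that the regular expression must match the whole topic name and that records on non-matching topics pass through without being evaluated.

```python
import re

# Value copied from the connector configuration above.
TOPIC_REGEX = r"internation-db-insert-topic.dbo.Orders"


def condition(value: dict) -> bool:
    """Python stand-in for the Groovy expression: value.op == 'c'."""
    return value.get("op") == "c"


def keep_record(topic: str, value: dict) -> bool:
    """Return True if the record would be passed on to the topic."""
    # Assumption: records whose topic does not match topic.regex
    # skip the condition and are passed through unchanged.
    if not re.fullmatch(TOPIC_REGEX, topic):
        return True
    return condition(value)


# Made-up change events. In Debezium, op is 'c' for create, 'u' for
# update, and 'd' for delete. The Customers topic is hypothetical and
# only illustrates the non-matching case.
events = [
    ("internation-db-insert-topic.dbo.Orders", {"op": "c"}),
    ("internation-db-insert-topic.dbo.Orders", {"op": "u"}),
    ("internation-db-insert-topic.dbo.Orders", {"op": "d"}),
    ("internation-db-insert-topic.dbo.Customers", {"op": "u"}),
]

kept = [
    (topic.rsplit(".", 1)[-1], value["op"])
    for topic, value in events
    if keep_record(topic, value)
]
print(kept)  # [('Orders', 'c'), ('Customers', 'u')]
```

Only the insert event on the Orders topic survives the condition. The update on the other topic is kept because its name falls outside the regular expression, which is why a precise topic.regex matters.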

Summary

This article demonstrated how to configure a Kafka Connect image to use the Debezium filter SMT with Groovy and showed you how to use a transformation to control which events are routed to a topic. For more information, refer to the Debezium documentation.