The traditional CPU-centric system architecture is being replaced by designs in which systems are composed of independent, intelligent devices. These devices have their own compute capabilities and can natively run network functions with an accelerated data plane. This new model lets us offload to accelerators not only individual subroutines but entire software subsystems, such as networking or storage, with cloud-like security isolation and architectural compartmentalization.

One of the most prominent examples of this new architecture is the data processing unit (DPU). DPUs offer a complete compute system with an independent software stack, network identity, and provisioning capabilities. The DPU can host its own applications using either embedded or orchestrated deployment models.

The unique capabilities of the DPU allow key infrastructure functions and their associated software stacks to be removed entirely from the host node’s CPU cores and relocated onto the DPU. For instance, the DPU could host the management plane of the network functions and part of the control plane, while the data plane is accelerated by dedicated Arm cores, ASICs, GPUs, or FPGA IP blocks. Because DPUs can run independent software stacks locally, multiple network functions can run simultaneously on the same device, using service chaining and shared accelerators to provide generic in-line processing.

OVN/OVS offloading on NVIDIA BlueField-2 DPUs

Red Hat has collaborated with NVIDIA to extend the operational simplicity and hybrid cloud architecture of Red Hat OpenShift to NVIDIA BlueField-2 DPUs. Red Hat OpenShift 4.10 provides BlueField-2 OVN/OVS offload as a developer preview.

Installing OpenShift Container Platform on a DPU makes it possible to offload packet processing from the host CPUs to the DPU. Offloading resource-intensive tasks like packet processing from the server’s CPU to the DPU can free up cycles on the OpenShift worker nodes to run more user applications. OpenShift brings portability, scalability, and orchestration to DPU workloads, giving you the ability to use standard Kubernetes APIs along with consistent system management interfaces for both the host systems and DPUs.

In short, utilizing OpenShift with DPUs lets you get the benefits of DPUs without sacrificing the hybrid cloud experience or adding unnecessary complexity to managing IT infrastructure.

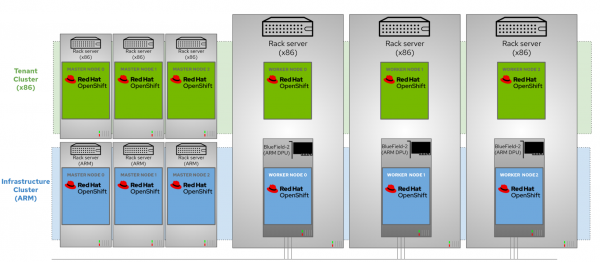

To manage DPUs, Red Hat OpenShift replaces the native BlueField operating system (OS) on each DPU and is deployed using a two-cluster design that consists of:

- Tenant cluster running on the host servers (x86)

- Infrastructure cluster running on DPUs (Arm)

The architecture is illustrated in Figure 1.

In this architecture, DPUs are provisioned as worker nodes of the Arm-based OpenShift infrastructure cluster. This is the blue cluster in Figure 1. The tenant OpenShift cluster, composed of the x86 servers, is where user applications typically run. This is the green cluster. In this deployment, each physical server runs both a tenant node on the x86 cores and an infrastructure node on the DPU Arm cores.

This architecture allows you to minimize the attack surface by decoupling the workload from the management cluster.

This architecture also streamlines operations by decoupling the application workload from the underlying infrastructure. That allows IT Ops to deploy and maintain the platform software and accelerated infrastructure while DevOps deploys and maintains application workloads independently from the infrastructure layer.

Red Hat OpenShift 4.10 provides capabilities for offloading Open Virtual Network (OVN) and Open vSwitch (OVS) services, which typically run on the server, from the host CPU to the DPU. We are offering this functionality as a developer preview and have enabled the following components to support OVN/OVS hardware offload to NVIDIA BlueField-2 DPUs:

- DPU Network Operator: This component is used with the infrastructure cluster to facilitate OVN deployment.

- DPU mode for OVN Kubernetes: This component is assigned by the cluster network operator for the tenant cluster.

- SR-IOV network operator: This component discovers compatible network devices, such as the ConnectX-6 Dx NIC embedded inside the BlueField-2 DPU, and provisions them for SR-IOV access by pods on that server.

- ConnectX NIC Fast Data Path on Arm

- Kernel flow offloading (TC Flower)

- Experimental use of OpenShift Assisted Installer and BlueField-2 BMC

The combination of these components allows us to move the ovnkube-node services from the x86 host to the BlueField-2 DPU.

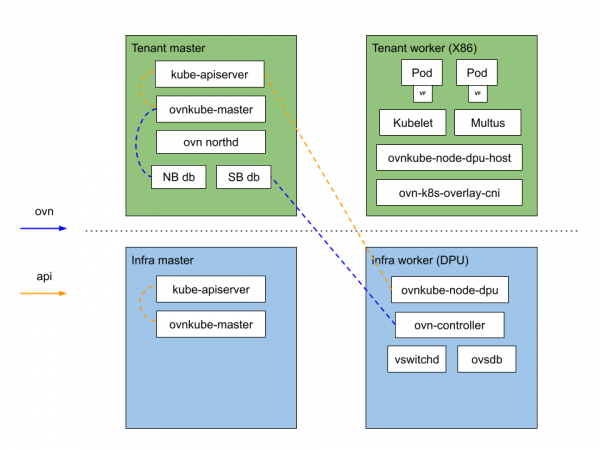

The network flows are offloaded in this manner (see Figure 2):

- The ovn-k8s components are moved from the x86 host to the DPU (ovn-kube, vswitchd, ovsdb).

- The Open vSwitch data path is offloaded from the BlueField Arm CPU to the ConnectX-6 Dx ASIC.

The following Open vSwitch datapath flows managed by ovn-k8s are offloaded to a BlueField-2 DPU that is running OpenShift 4.10:

- Pod to pod (east-west)

- Pod to ClusterIP service backed by a regular pod on a different node (east-west)

- Pod to external (north-south)

Let’s take a more detailed look at the testbed setup.

Install and configure an accelerated infrastructure with OpenShift and NVIDIA BlueField-2 DPUs

In our sample testbed, shown in Figure 3, we use two x86 hosts with BlueField-2 DPU PCIe cards installed. Each DPU has eight Arm cores, two 25GbE network ports, and a 1GbE management port. We’ve wired the 25GbE ports to a switch and connected the DPU’s BMC port to a separate network so that we can manage the device with IPMI.

Next, we installed Red Hat OpenShift Container Platform 4.10 on the DPUs to offload packet processing from the x86 hosts to the DPUs. Offloading resource-intensive computational tasks such as packet processing from the server’s CPU to the DPU frees up cycles on the OpenShift Container Platform worker nodes to run more applications, or to run the same number of applications more quickly.

OpenShift and DPU deployment architecture

In our setup, OpenShift replaces the native BlueField OS. We used the two-cluster architecture where DPU cards are provisioned as worker nodes in the Arm-based infrastructure cluster. The tenant cluster composed of x86 servers was used to run user applications.

We followed these steps to deploy tenant and infrastructure clusters:

- Install the OpenShift Assisted Installer on the installer node using Podman.

- Install the infrastructure cluster.

- Install the tenant cluster.

- Install the DPU Network Operator on the infrastructure cluster.

- Configure the SR-IOV Operator for DPUs.

For details about how to install OpenShift 4.10 on BlueField with OVN/OVS hardware offload, refer to the documentation.

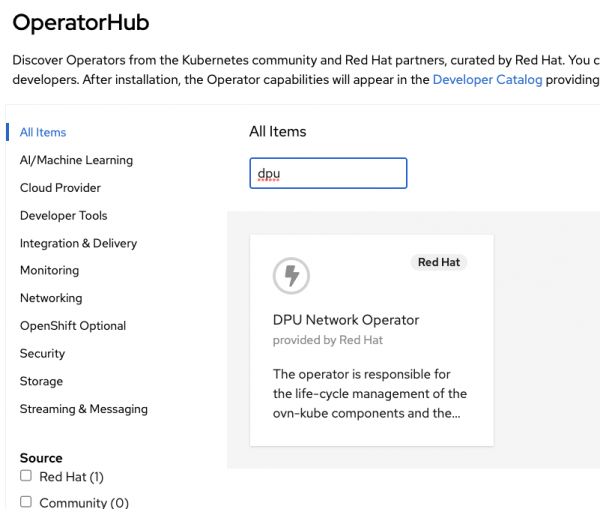

When the cluster is deployed on the BlueField DPUs, the OVN configuration is automated by the DPU Network Operator, which can be installed from the OpenShift console or with the oc command.

As shown in Figure 4, the DPU Network Operator is listed in the catalog of Arm-based OpenShift clusters and is not visible in x86 OpenShift clusters.

Validate installation using the OpenShift console

After completing the last step, you have two OpenShift clusters running. We will now run some checks from the command line to verify the configuration, and then benchmark the OVN/OVS offloading.

Tenant cluster: We can list the nodes of the tenant cluster:

```
[egallen@bastion-tenant ~]$ oc get nodes
NAME                     STATUS   ROLES             AGE   VERSION
tenant-master-0          Ready    master            47d   v1.23.3+759c22b
tenant-master-1          Ready    master            47d   v1.23.3+759c22b
tenant-master-2          Ready    master            47d   v1.23.3+759c22b
tenant-worker-0          Ready    worker            47d   v1.23.3+759c22b
tenant-worker-1          Ready    worker            47d   v1.23.3+759c22b
x86-worker-advnetlab13   Ready    dpu-host,worker   35d   v1.23.3+759c22b
x86-worker-advnetlab14   Ready    dpu-host,worker   41d   v1.23.3+759c22b
```
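If you script this check, the ROLES column is all you need: the two x86 servers that carry a BlueField-2 DPU have the dpu-host role. The following minimal Python sketch parses captured `oc get nodes` text. The function names are our own, and it assumes the default column layout shown above. On a live cluster, a label selector such as `oc get nodes -l node-role.kubernetes.io/dpu-host` should return the same nodes without any text parsing, because `oc` derives the ROLES column from `node-role.kubernetes.io/<role>` labels.

```python
# Sketch: list the nodes that carry a given role in captured `oc get nodes`
# output. NODES_SAMPLE is a trimmed copy of the tenant-cluster listing above.
NODES_SAMPLE = """\
NAME                     STATUS   ROLES             AGE   VERSION
tenant-master-0          Ready    master            47d   v1.23.3+759c22b
tenant-worker-0          Ready    worker            47d   v1.23.3+759c22b
x86-worker-advnetlab13   Ready    dpu-host,worker   35d   v1.23.3+759c22b
x86-worker-advnetlab14   Ready    dpu-host,worker   41d   v1.23.3+759c22b
"""


def parse_nodes(text: str) -> list[dict]:
    """Parse the default `oc get nodes` table into one dict per node.

    Assumes no cell contains whitespace, which holds for the default
    NAME, STATUS, ROLES, AGE and VERSION columns.
    """
    lines = [line for line in text.splitlines() if line.strip()]
    header = lines[0].split()
    nodes = []
    for line in lines[1:]:
        row = dict(zip(header, line.split()))
        # ROLES is a comma-separated list, e.g. "dpu-host,worker".
        row["ROLES"] = row["ROLES"].split(",")
        nodes.append(row)
    return nodes


def nodes_with_role(nodes: list[dict], role: str) -> list[str]:
    """Return the names of the nodes whose ROLES include `role`."""
    return [node["NAME"] for node in nodes if role in node["ROLES"]]


if __name__ == "__main__":
    print(nodes_with_role(parse_nodes(NODES_SAMPLE), "dpu-host"))
```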

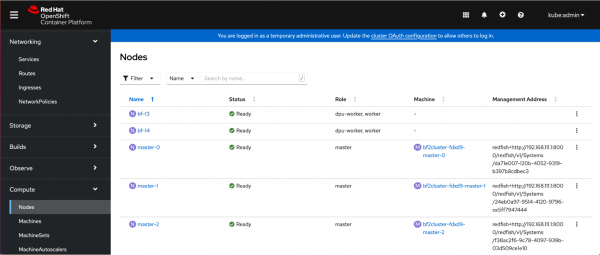

Infrastructure cluster: We can list the nodes of the infrastructure cluster, including the BlueField-2 nodes, which belong to the dpu-worker machine config pool:

```
[egallen@bastion-infrastructure ~]$ oc get nodes
NAME       STATUS   ROLES               AGE   VERSION
bf-13      Ready    dpu-worker,worker   71d   v1.22.1+6859754
bf-14      Ready    dpu-worker,worker   74d   v1.22.1+6859754
master-0   Ready    master              75d   v1.22.1+6859754
master-1   Ready    master              75d   v1.22.1+6859754
master-2   Ready    master              75d   v1.22.1+6859754
worker-0   Ready    worker              70d   v1.22.1+6859754
worker-1   Ready    worker              70d   v1.22.1+6859754
```
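Because each physical server runs one tenant node on its x86 cores and one infrastructure node on its DPU, it can help to keep an explicit mapping between the two clusters. The sketch below pairs them by the trailing server number in the node names (x86-worker-advnetlab13 with bf-13). That pairing rule is an assumption based on this testbed's naming; OpenShift neither enforces nor exposes it, and the helper names are hypothetical.

```python
import re

# Node names taken from the tenant and infrastructure listings above.
TENANT_DPU_HOSTS = ["x86-worker-advnetlab13", "x86-worker-advnetlab14"]
INFRA_DPU_WORKERS = ["bf-13", "bf-14"]

# Assumption: the trailing number identifies the physical server in this testbed.
_SERVER_SUFFIX = re.compile(r"(\d+)$")


def server_id(node_name: str) -> str:
    """Extract the trailing server number, e.g. 'bf-13' -> '13'."""
    match = _SERVER_SUFFIX.search(node_name)
    if match is None:
        raise ValueError(f"no numeric suffix in {node_name!r}")
    return match.group(1)


def pair_hosts_with_dpus(hosts: list[str], dpus: list[str]) -> dict[str, str]:
    """Map each x86 tenant node to the DPU node assumed to sit in the same server."""
    dpu_by_server = {server_id(dpu): dpu for dpu in dpus}
    pairs = {}
    for host in hosts:
        sid = server_id(host)
        if sid not in dpu_by_server:
            raise LookupError(f"{host} has no matching DPU node")
        pairs[host] = dpu_by_server[sid]
    return pairs


if __name__ == "__main__":
    for host, dpu in pair_hosts_with_dpus(TENANT_DPU_HOSTS, INFRA_DPU_WORKERS).items():
        print(f"{host} (tenant, x86) <-> {dpu} (infrastructure, Arm)")
```

Failing loudly on an unmatched node is deliberate: a tenant dpu-host node without a DPU counterpart usually means one of the two clusters is missing a node.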

Tenant cluster: We can list the pods running in the openshift-ovn-kubernetes namespace in the tenant cluster:

```
[egallen@bastion-tenant ~]$ oc get pods -n openshift-ovn-kubernetes
NAME                          READY   STATUS    RESTARTS       AGE
ovnkube-master-99x8j          4/6     Running   7              25d
ovnkube-master-qdfvv          6/6     Running   14 (15d ago)   25d
ovnkube-master-w28mh          6/6     Running   7 (15d ago)    25d
ovnkube-node-5xlxr            5/5     Running   5              34d
ovnkube-node-6nkm5            5/5     Running   5              34d
ovnkube-node-dpu-host-45crl   3/3     Running   50             34d
ovnkube-node-dpu-host-r8tlj   3/3     Running   30             28d
ovnkube-node-f2x2q            5/5     Running   0              34d
ovnkube-node-j6w6t            5/5     Running   5              34d
ovnkube-node-qtc6f            5/5     Running   0              34d
[egallen@bastion-tenant ~]$ oc get pods -n openshift-ovn-kubernetes -o wide
NAME                          READY   STATUS    RESTARTS       AGE   IP                NODE                     NOMINATED NODE   READINESS GATES
ovnkube-master-99x8j          4/6     Running   7              25d   192.168.111.122   tenant-master-2          <none>           <none>
ovnkube-master-qdfvv          6/6     Running   14 (15d ago)   25d   192.168.111.121   tenant-master-1          <none>           <none>
ovnkube-master-w28mh          6/6     Running   7 (15d ago)    25d   192.168.111.120   tenant-master-0          <none>           <none>
ovnkube-node-5xlxr            5/5     Running   5              34d   192.168.111.121   tenant-master-1          <none>           <none>
ovnkube-node-6nkm5            5/5     Running   5              34d   192.168.111.122   tenant-master-2          <none>           <none>
ovnkube-node-dpu-host-45crl   3/3     Running   50             34d   192.168.111.113   x86-worker-advnetlab13   <none>           <none>
ovnkube-node-dpu-host-r8tlj   3/3     Running   30             28d   192.168.111.114   x86-worker-advnetlab14   <none>           <none>
ovnkube-node-f2x2q            5/5     Running   0              34d   192.168.111.123   tenant-worker-0          <none>           <none>
ovnkube-node-j6w6t            5/5     Running   5              34d   192.168.111.120   tenant-master-0          <none>           <none>
ovnkube-node-qtc6f            5/5     Running   0              34d   192.168.111.124   tenant-worker-1          <none>           <none>
```
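Two details stand out in this listing. First, ovnkube-master-99x8j reports only 4/6 containers ready. Second, the ovnkube-node-dpu-host pods on the two x86 DPU hosts run three containers, while the other nodes run five. The following Python sketch flags pods that are not fully ready and groups the pods by node. It assumes the `-o wide` text layout shown above. Because the RESTARTS column can contain spaces (for example `14 (15d ago)`), it uses a regular expression rather than a plain split. For anything beyond a quick check, parsing `oc get pods -o json` is more robust.

```python
import re

# Trimmed copy of the `oc get pods -n openshift-ovn-kubernetes -o wide` output above.
PODS_SAMPLE = """\
NAME                          READY   STATUS    RESTARTS       AGE   IP                NODE                     NOMINATED NODE   READINESS GATES
ovnkube-master-99x8j          4/6     Running   7              25d   192.168.111.122   tenant-master-2          <none>           <none>
ovnkube-master-qdfvv          6/6     Running   14 (15d ago)   25d   192.168.111.121   tenant-master-1          <none>           <none>
ovnkube-node-dpu-host-45crl   3/3     Running   50             34d   192.168.111.113   x86-worker-advnetlab13   <none>           <none>
ovnkube-node-f2x2q            5/5     Running   0              34d   192.168.111.123   tenant-worker-0          <none>           <none>
"""

# NAME READY STATUS RESTARTS [(time since last restart)] AGE IP NODE ...
POD_ROW = re.compile(
    r"^(?P<name>\S+)\s+(?P<ready>\d+)/(?P<total>\d+)\s+(?P<status>\S+)\s+"
    r"(?P<restarts>\d+)(?:\s+\([^)]*\))?\s+(?P<age>\S+)\s+(?P<ip>\S+)\s+(?P<node>\S+)"
)


def check_pods(text: str) -> tuple[list[str], dict[str, list[str]]]:
    """Return (pods that are not fully ready, node name -> pod names)."""
    not_ready: list[str] = []
    by_node: dict[str, list[str]] = {}
    for line in text.splitlines():
        match = POD_ROW.match(line)
        if match is None:  # header or blank line
            continue
        if match["ready"] != match["total"] or match["status"] != "Running":
            not_ready.append(match["name"])
        by_node.setdefault(match["node"], []).append(match["name"])
    return not_ready, by_node


if __name__ == "__main__":
    pending, placement = check_pods(PODS_SAMPLE)
    print("not fully ready:", pending)
    for node, pods in placement.items():
        print(f"{node}: {', '.join(pods)}")
```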

Infrastructure cluster: The node selector of the ovnkube-node DaemonSet requires the dpu-worker role label, and the BlueField-2 nodes carry that label, so the ovnkube-node host-network pods are scheduled on the BlueField-2 DPUs:

```
[egallen@bastion-infrastructure ~]$ oc get ds -n default --show-labels
NAME           DESIRED   CURRENT   READY   UP-TO-DATE   AVAILABLE   NODE SELECTOR                                                     AGE   LABELS
ovnkube-node   2         2         2       2            2           beta.kubernetes.io/os=linux,node-role.kubernetes.io/dpu-worker=   65d   <none>
[egallen@bastion-infrastructure ~]$ oc get nodes --show-labels | grep dpu-worker
bf-13      Ready    dpu-worker,worker   71d   v1.22.1+6859754   beta.kubernetes.io/arch=arm64,beta.kubernetes.io/os=linux,kubernetes.io/arch=arm64,kubernetes.io/hostname=bf-13,kubernetes.io/os=linux,network.operator.openshift.io/dpu=,node-role.kubernetes.io/dpu-worker=,node-role.kubernetes.io/worker=,node.openshift.io/os_id=rhcos
bf-14      Ready    dpu-worker,worker   74d   v1.22.1+6859754   beta.kubernetes.io/arch=arm64,beta.kubernetes.io/os=linux,kubernetes.io/arch=arm64,kubernetes.io/hostname=bf-14,kubernetes.io/os=linux,network.operator.openshift.io/dpu=,node-role.kubernetes.io/dpu-worker=,node-role.kubernetes.io/worker=,node.openshift.io/os_id=rhcos
```
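The scheduling rule behind this is the DaemonSet's nodeSelector. A pod is placed only on nodes whose labels contain every key=value pair of the selector, and an empty value (as in `node-role.kubernetes.io/dpu-worker=`) must also match exactly. The sketch below reproduces only that matching step, using the labels from the listings above. Real DaemonSet scheduling also takes node affinity, taints, and tolerations into account, which this sketch ignores. The label set for the regular worker is a hypothetical example.

```python
# Sketch of the nodeSelector matching rule used for DaemonSet placement.
# Only the nodeSelector step is modeled; affinity, taints and tolerations are ignored.


def parse_labels(text: str) -> dict[str, str]:
    """Turn 'k1=v1,k2=,k3=v3' (the --show-labels format) into a dict."""
    labels: dict[str, str] = {}
    for item in text.split(","):
        if not item:
            continue
        key, _, value = item.partition("=")
        labels[key] = value
    return labels


def selector_matches(node_selector: dict[str, str], node_labels: dict[str, str]) -> bool:
    """True if the node carries every key=value pair of the selector."""
    return all(node_labels.get(key) == value for key, value in node_selector.items())


# Values copied from the `oc get ds` and `oc get nodes --show-labels` output above.
DS_SELECTOR = parse_labels("beta.kubernetes.io/os=linux,node-role.kubernetes.io/dpu-worker=")
BF13_LABELS = parse_labels(
    "beta.kubernetes.io/arch=arm64,beta.kubernetes.io/os=linux,kubernetes.io/arch=arm64,"
    "kubernetes.io/hostname=bf-13,kubernetes.io/os=linux,network.operator.openshift.io/dpu=,"
    "node-role.kubernetes.io/dpu-worker=,node-role.kubernetes.io/worker=,node.openshift.io/os_id=rhcos"
)
# Hypothetical label set for a regular worker such as worker-0 (no dpu-worker role).
WORKER_LABELS = parse_labels("beta.kubernetes.io/os=linux,node-role.kubernetes.io/worker=")

if __name__ == "__main__":
    print("bf-13 eligible:", selector_matches(DS_SELECTOR, BF13_LABELS))
    print("worker-0 eligible:", selector_matches(DS_SELECTOR, WORKER_LABELS))
```

With only the two BlueField-2 nodes matching, the DaemonSet's DESIRED count of 2 follows directly.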

Infrastructure cluster: We see the ovnkube-node DaemonSet pods running on the two BlueField-2 nodes of the infrastructure cluster:

```
[egallen@bastion-infrastructure ~]$ oc get pods -n default
NAME                 READY   STATUS    RESTARTS         AGE
ovnkube-node-hshxs   2/2     Running   13 (6d13h ago)   25d
ovnkube-node-mng24   2/2     Running   17 (42h ago)     25d
[egallen@bastion-infrastructure ~]$ oc get pods -n default -o wide
NAME                 READY   STATUS    RESTARTS         AGE   IP               NODE    NOMINATED NODE   READINESS GATES
ovnkube-node-hshxs   2/2     Running   13 (6d13h ago)   25d   192.168.111.28   bf-14   <none>           <none>
ovnkube-node-mng24   2/2     Running   17 (42h ago)     25d   192.168.111.29   bf-13   <none>           <none>
```

Infrastructure cluster: We get the IP address of BlueField bf-14 (192.168.111.28) so that we can SSH into it:

```
[egallen@bastion-infrastructure ~]$ oc get nodes -o wide
NAME       STATUS   ROLES               AGE   VERSION           INTERNAL-IP      EXTERNAL-IP   OS-IMAGE                                                        KERNEL-VERSION                        CONTAINER-RUNTIME
bf-13      Ready    dpu-worker,worker   71d   v1.22.1+6859754   192.168.111.29   <none>        Red Hat Enterprise Linux CoreOS 410.84.202201071203-0 (Ootpa)   4.18.0-305.40.1.el8_4.test.aarch64    cri-o://1.23.0-98.rhaos4.10.git9b7f5ae.el8
bf-14      Ready    dpu-worker,worker   74d   v1.22.1+6859754   192.168.111.28   <none>        Red Hat Enterprise Linux CoreOS 410.84.202201071203-0 (Ootpa)   4.18.0-305.40.1.el8_4.test.aarch64    cri-o://1.23.0-98.rhaos4.10.git9b7f5ae.el8
master-0   Ready    master              75d   v1.22.1+6859754   192.168.111.20   <none>        Red Hat Enterprise Linux CoreOS 410.84.202201071203-0 (Ootpa)   4.18.0-305.30.1.el8_4.aarch64         cri-o://1.23.0-98.rhaos4.10.git9b7f5ae.el8
master-1   Ready    master              75d   v1.22.1+6859754   192.168.111.21   <none>        Red Hat Enterprise Linux CoreOS 410.84.202201071203-0 (Ootpa)   4.18.0-305.30.1.el8_4.aarch64         cri-o://1.23.0-98.rhaos4.10.git9b7f5ae.el8
master-2   Ready    master              75d   v1.22.1+6859754   192.168.111.22   <none>        Red Hat Enterprise Linux CoreOS 410.84.202201071203-0 (Ootpa)   4.18.0-305.30.1.el8_4.aarch64         cri-o://1.23.0-98.rhaos4.10.git9b7f5ae.el8
worker-0   Ready    worker              70d   v1.22.1+6859754   192.168.111.23   <none>        Red Hat Enterprise Linux CoreOS 410.84.202201071203-0 (Ootpa)   4.18.0-305.30.1.el8_4.aarch64         cri-o://1.23.0-98.rhaos4.10.git9b7f5ae.el8
worker-1   Ready    worker              70d   v1.22.1+6859754   192.168.111.24   <none>        Red Hat Enterprise Linux CoreOS 410.84.202201071203-0 (Ootpa)   4.18.0-305.30.1.el8_4.aarch64         cri-o://1.23.0-98.rhaos4.10.git9b7f5ae.el8
```
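Rather than reading the INTERNAL-IP column by eye, you can take the address from the Node object's `status.addresses` list. Each entry in that list has a `type` (such as `InternalIP` or `Hostname`) and an `address`. From the shell, `oc get node bf-14 -o jsonpath='{.status.addresses[?(@.type=="InternalIP")].address}'` does this directly. The Python sketch below does the same on the output of `oc get node bf-14 -o json`. The JSON shown is a trimmed, hand-written excerpt that contains only the fields used.

```python
import json

# Hand-written, trimmed excerpt of `oc get node bf-14 -o json`: only the
# fields used below are kept; the address matches the listing above.
NODE_JSON = """
{
  "kind": "Node",
  "metadata": {"name": "bf-14"},
  "status": {
    "addresses": [
      {"type": "InternalIP", "address": "192.168.111.28"},
      {"type": "Hostname", "address": "bf-14"}
    ]
  }
}
"""


def internal_ip(node: dict) -> str:
    """Return the first InternalIP listed in status.addresses of a Node object."""
    for entry in node.get("status", {}).get("addresses", []):
        if entry.get("type") == "InternalIP":
            return entry["address"]
    raise LookupError(f"node {node['metadata']['name']} has no InternalIP address")


if __name__ == "__main__":
    node = json.loads(NODE_JSON)
    print(f"ssh core@{internal_ip(node)}")
```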

Infrastructure cluster: We can SSH into one of the BlueField-2 DPUs of the cluster to check its configuration:

```
[egallen@bastion-infrastructure ~]$ ssh core@192.168.111.28
Red Hat Enterprise Linux CoreOS 410.84.202201071203-0
  Part of OpenShift 4.10, RHCOS is a Kubernetes native operating system
  managed by the Machine Config Operator (`clusteroperator/machine-config`).

WARNING: Direct SSH access to machines is not recommended; instead,
make configuration changes via `machineconfig` objects:
  https://docs.openshift.com/container-platform/4.10/architecture/architecture-rhcos.html

---
Last login: Wed Mar 30 07:50:50 2022 from 192.168.111.1
[core@bf-14 ~]$
```

On one BlueField: We are running OpenShift 4.10 on the Arm cores:

```
[core@bf-14 ~]$ cat /etc/redhat-release
Red Hat Enterprise Linux CoreOS release 4.10
[core@bf-14 ~]$ uname -m
aarch64
```

On one BlueField: The BlueField-2 has eight 64-bit Armv8 Cortex-A72 cores:

```
[core@bf-13 ~]$ lscpu
Architecture:        aarch64
Byte Order:          Little Endian
CPU(s):              8
On-line CPU(s) list: 0-7
Thread(s) per core:  1
Core(s) per socket:  8
Socket(s):           1
NUMA node(s):        1
Vendor ID:           ARM
Model:               0
Model name:          Cortex-A72
Stepping:            r1p0
BogoMIPS:            400.00
L1d cache:           32K
L1i cache:           48K
L2 cache:            1024K
L3 cache:            6144K
NUMA node0 CPU(s):   0-7
Flags:               fp asimd evtstrm aes pmull sha1 sha2 crc32 cpuid
```
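For inventory scripts, the same facts can be read programmatically. This minimal sketch parses the `Key: value` lines of lscpu output (the sample is trimmed from the listing above). It then checks that the DPU exposes eight physical Cortex-A72 cores with one thread per core, and that the `aes`, `pmull`, `sha1`, and `sha2` flags of the Armv8 Cryptography Extensions are present. The function names are our own.

```python
# lscpu sample trimmed from the bf-13 listing above.
LSCPU_OUTPUT = """\
Architecture:        aarch64
CPU(s):              8
Thread(s) per core:  1
Core(s) per socket:  8
Socket(s):           1
Model name:          Cortex-A72
Flags:               fp asimd evtstrm aes pmull sha1 sha2 crc32 cpuid
"""

CRYPTO_FLAGS = {"aes", "pmull", "sha1", "sha2"}  # Armv8 Cryptography Extensions


def parse_lscpu(text: str) -> dict[str, str]:
    """Turn 'Key:   value' lines into a dict, splitting at the first colon."""
    info: dict[str, str] = {}
    for line in text.splitlines():
        key, sep, value = line.partition(":")
        if sep:
            info[key.strip()] = value.strip()
    return info


def check_dpu_cpu(info: dict[str, str]) -> dict[str, object]:
    """Summarize the CPU facts discussed in the text."""
    cores = int(info["Core(s) per socket"]) * int(info["Socket(s)"])
    return {
        "arch": info["Architecture"],
        "physical_cores": cores,
        "smt": int(info["Thread(s) per core"]) > 1,
        "model": info.get("Model name"),
        "crypto_extensions": CRYPTO_FLAGS <= set(info.get("Flags", "").split()),
    }


if __name__ == "__main__":
    print(check_dpu_cpu(parse_lscpu(LSCPU_OUTPUT)))
```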

On one BlueField: dmidecode is a tool that dumps the contents of a computer's DMI table (also called SMBIOS) in a human-readable format. On some Arm platforms, such as NVIDIA Jetson, it fails with the error “No SMBIOS nor DMI entry point found, sorry.” On BlueField-2 the command works, because the SMBIOS data is exposed through sysfs:

```
[core@bf-14 ~]$ sudo dmidecode | grep -A12 "BIOS Information"
BIOS Information
        Vendor: https://www.mellanox.com
        Version: BlueField:3.7.0-20-g98daf29
        Release Date: Jun 26 2021
        ROM Size: 4 MB
        Characteristics:
                PCI is supported
                BIOS is upgradeable
                Selectable boot is supported
                Serial services are supported (int 14h)
                ACPI is supported
                UEFI is supported
        BIOS Revision: 3.0
```
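Recent versions of dmidecode read the SMBIOS tables that the Linux kernel exports under /sys/firmware/dmi/tables. The kernel also exposes a few decoded fields as plain files under /sys/class/dmi/id. The sketch below reads some of those files without root privileges and returns None for any field that is missing, which is the result you would get on a platform without SMBIOS. The choice of fields and the function name are our own.

```python
from pathlib import Path
from typing import Dict, Optional

# Decoded SMBIOS fields exported by the Linux kernel as plain text files.
DMI_ID_DIR = Path("/sys/class/dmi/id")
FIELDS = ("bios_vendor", "bios_version", "bios_date", "sys_vendor", "product_name")


def read_dmi(root: Path = DMI_ID_DIR) -> Dict[str, Optional[str]]:
    """Return the selected fields; None when a file is missing or unreadable."""
    values: Dict[str, Optional[str]] = {}
    for field in FIELDS:
        try:
            values[field] = (root / field).read_text().strip()
        except OSError:  # no SMBIOS on this platform, or restricted permissions
            values[field] = None
    return values


if __name__ == "__main__":
    for field, value in read_dmi().items():
        print(f"{field:<14} {value if value is not None else '(not available)'}")
```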

On one BlueField: We can get the SoC type with the lshw command:

```
[core@bf-14 ~]$ lshw -C system
bf-14
    description: Computer
    product: BlueField SoC (Unspecified System SKU)
    vendor: https://www.mellanox.com
    version: 1.0.0
    serial: Unspecified System Serial Number
    width: 64 bits
    capabilities: smbios-3.1.1 dmi-3.1.1 smp
    configuration: boot=normal family=BlueField sku=Unspecified System SKU uuid=888eecb3-cb1e-40c0-a925-562a7c62ed92
  *-pnp00:00
       product: PnP device PNP0c02
       physical id: 1
       capabilities: pnp
       configuration: driver=system
```

On one BlueField: We have 16GB of RAM on this BlueField-2:

```
[core@bf-14 ~]$ free -h
              total        used        free      shared  buff/cache   available
Mem:           15Gi       2.3Gi       6.9Gi       193Mi       6.7Gi        10Gi
Swap:            0B          0B          0B
```

Infrastructure cluster: We can get the OpenShift console URL (Figure 5) with an oc command and then resolve its address:

```
[egallen@bastion-infrastructure ~]$ oc whoami --show-console
https://console-openshift-console.apps.bf2cluster.dev.metalkube.org
[egallen@bastion-infrastructure ~]$ host console-openshift-console.apps.bf2cluster.dev.metalkube.org
console-openshift-console.apps.bf2cluster.dev.metalkube.org has address 192.168.111.4
```

Tenant cluster: In testpod-1, we run the iperf3 server, pinned to CPU 6 with taskset:

```
[root@testpod-1 /]# taskset -c 6 iperf3 -s
-----------------------------------------------------------
Server listening on 5201
-----------------------------------------------------------
Accepted connection from 10.130.4.132, port 59326
[  5] local 10.129.5.163 port 5201 connected to 10.130.4.132 port 59328
[ ID] Interval           Transfer     Bitrate
[  5]   0.00-1.00   sec  2.35 GBytes  20.2 Gbits/sec
[  5]   1.00-2.00   sec  2.46 GBytes  21.2 Gbits/sec
[  5]   2.00-3.00   sec  2.42 GBytes  20.8 Gbits/sec
[  5]   3.00-4.00   sec  2.24 GBytes  19.2 Gbits/sec
[  5]   4.00-5.00   sec  2.39 GBytes  20.5 Gbits/sec
[  5]   5.00-5.00   sec   384 KBytes  21.2 Gbits/sec
- - - - - - - - - - - - - - - - - - - - - - - - -
[ ID] Interval           Transfer     Bitrate
[  5]   0.00-5.00   sec  11.9 GBytes  20.4 Gbits/sec                  receiver
-----------------------------------------------------------
Server listening on 5201
-----------------------------------------------------------
```

Tenant cluster: From testpod-2, the iperf3 client (also pinned to CPU 6) reaches between 19.2 and 21.2 Gbps per one-second interval, 20.4 Gbps on average, with TC Flower OVS hardware offloading, compared with about 3 Gbps of throughput without offloading:

```
[root@testpod-2 /]# taskset -c 6 iperf3 -c 10.129.5.163 -t 5
Connecting to host 10.129.5.163, port 5201
[  5] local 10.130.4.132 port 59328 connected to 10.129.5.163 port 5201
[ ID] Interval           Transfer     Bitrate         Retr  Cwnd
[  5]   0.00-1.00   sec  2.35 GBytes  20.2 Gbits/sec   17   1.14 MBytes
[  5]   1.00-2.00   sec  2.46 GBytes  21.2 Gbits/sec    0   1.41 MBytes
[  5]   2.00-3.00   sec  2.42 GBytes  20.8 Gbits/sec  637   1.01 MBytes
[  5]   3.00-4.00   sec  2.24 GBytes  19.2 Gbits/sec    0   1.80 MBytes
[  5]   4.00-5.00   sec  2.39 GBytes  20.6 Gbits/sec    2   1.80 MBytes
- - - - - - - - - - - - - - - - - - - - - - - - -
[ ID] Interval           Transfer     Bitrate         Retr
[  5]   0.00-5.00   sec  11.9 GBytes  20.4 Gbits/sec  656             sender
[  5]   0.00-5.00   sec  11.9 GBytes  20.4 Gbits/sec                  receiver

iperf Done.
```
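As a sanity check on these numbers, you can sum the per-interval transfers and convert the total back to a bitrate. iperf3 reports transfer sizes in binary units (1 GByte = 2^30 bytes) and bitrates in decimal units (1 Gbit = 10^9 bits). On that basis, 11.86 GBytes over 5 seconds works out to about 20.4 Gbit/s. That is roughly 6.8 times the 3 Gbps quoted above for the non-offloaded path, and a little over 80% of the line rate of a single 25GbE port. The sketch below parses the client's interval lines and reproduces that arithmetic. The 3 Gbps baseline is the figure from this article, not a value the script measures.

```python
import re

# Client-side output of the iperf3 run above (interval lines plus one summary line).
CLIENT_OUTPUT = """\
[  5]   0.00-1.00   sec  2.35 GBytes  20.2 Gbits/sec   17   1.14 MBytes
[  5]   1.00-2.00   sec  2.46 GBytes  21.2 Gbits/sec    0   1.41 MBytes
[  5]   2.00-3.00   sec  2.42 GBytes  20.8 Gbits/sec  637   1.01 MBytes
[  5]   3.00-4.00   sec  2.24 GBytes  19.2 Gbits/sec    0   1.80 MBytes
[  5]   4.00-5.00   sec  2.39 GBytes  20.6 Gbits/sec    2   1.80 MBytes
[  5]   0.00-5.00   sec  11.9 GBytes  20.4 Gbits/sec  656             sender
"""

INTERVAL = re.compile(
    r"^\[\s*\d+\]\s+(?P<start>[\d.]+)-(?P<end>[\d.]+)\s+sec\s+"
    r"(?P<xfer>[\d.]+)\s+GBytes\s+(?P<rate>[\d.]+)\s+Gbits/sec"
)
GIB = 2**30          # iperf3 transfer units are binary (1 GByte = 2**30 bytes)
GBIT = 10**9         # iperf3 bitrate units are decimal (1 Gbit = 10**9 bits)
BASELINE_GBPS = 3.0  # non-offloaded figure quoted in the article, not measured here
PORT_GBPS = 25.0     # line rate of one 25GbE port


def parse_intervals(text: str) -> list[tuple[float, float, float]]:
    """Return (seconds, GBytes transferred, reported Gbit/s) per interval line."""
    rows = []
    for line in text.splitlines():
        match = INTERVAL.match(line)
        # Skip the end-of-test summary lines, which end in "sender"/"receiver".
        if match and not line.rstrip().endswith(("sender", "receiver")):
            seconds = float(match["end"]) - float(match["start"])
            rows.append((seconds, float(match["xfer"]), float(match["rate"])))
    return rows


def summarize_iperf(rows: list[tuple[float, float, float]]) -> dict[str, float]:
    """Recompute the average bitrate from the bytes and compare it to references."""
    seconds = sum(row[0] for row in rows)
    gib = sum(row[1] for row in rows)
    gbps = gib * GIB * 8 / seconds / GBIT
    return {
        "seconds": seconds,
        "transfer_gib": gib,
        "gbps": gbps,
        "min_gbps": min(row[2] for row in rows),
        "max_gbps": max(row[2] for row in rows),
        "speedup_vs_baseline": gbps / BASELINE_GBPS,
        "fraction_of_port": gbps / PORT_GBPS,
    }


if __name__ == "__main__":
    for name, value in summarize_iperf(parse_intervals(CLIENT_OUTPUT)).items():
        print(f"{name:>20}: {value:.2f}")
```

Recomputing from bytes rather than averaging the printed bitrates avoids compounding the rounding of each one-second line. Running `iperf3 -J` produces JSON output, which is easier to consume than text if you automate this.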

Conclusion

This example demonstrates the benefits of composable compute architectures that include hardware-level security isolation and the ability to offload an entire subsystem, such as networking, to the DPU hardware. This enables clean architectural compartmentalization along the same boundaries as the corresponding software services, and it frees up x86 cores on the worker nodes so they can run more applications.

Unlike SmartNICs, which have been niche products with proprietary software stacks, DPUs running Linux, Kubernetes, and open source software stacks offer network function portability. And with Red Hat OpenShift, we are offering long-term support for this stack.

As DPUs gain more compute power, additional capabilities, and increased popularity in datacenters around the world, Red Hat and NVIDIA plan to continue work on enabling the offloading of additional software functions to DPUs.

Last updated: May 5, 2022