This article is about the poll mode driver (PMD) automatic load balance feature in Open vSwitch with a Data Plane Development Kit data path (OVS-DPDK). The feature has existed for a while but we've recently added new user parameters in Open vSwitch 2.15. Now is a good time to take a look at this feature in OVS-DPDK.

When you are finished reading this article, you will understand the problem the PMD auto load balance feature addresses and the user parameters required to operate it. Then, you can try it out for yourself.

PMD threads in Open vSwitch with DPDK

In the context of OVS-DPDK, a PMD (poll mode driver) thread is a thread that runs 1:1 on a dedicated core and continually polls ports for packets. When it receives packets, it processes them and usually forwards them, depending on the rules that the packets match.

Each PMD thread is assigned a group of Rx queues from the various ports attached to the OVS-DPDK bridges to poll. Typically, the ports are DPDK physical network interface controllers (NICs) and vhost-user ports.

You can select how many and which cores are to be used for PMD threads. Increasing the number of cores makes more processing cycles available for packet processing, which can increase OVS-DPDK throughput.

For example, the following command selects cores 8 and 10 to be used by PMD threads:

$ ovs-vsctl set Open_vSwitch . other_config:pmd-cpu-mask=0x500
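The mask is a bitmap in which bit N selects core N, so cores 8 and 10 give 0x100 + 0x400 = 0x500. As a minimal sketch, a small helper can build the mask from a list of core numbers. The function name `cores_to_mask` is hypothetical and not part of OVS, and the sketch assumes core numbers below 63 so that the shell's integer arithmetic does not overflow.

```shell
# Hypothetical helper (not part of OVS): build a pmd-cpu-mask value
# from a list of core numbers by setting one bit per core.
cores_to_mask() {
    mask=0
    for core in "$@"; do
        mask=$(( mask | (1 << core) ))
    done
    printf '0x%x\n' "$mask"
}

cores_to_mask 8 10   # prints 0x500
```

The printed value can then be passed to other_config:pmd-cpu-mask as in the command above.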

Note: We will refer often to the load of a PMD thread. This is the number of processing cycles the PMD thread uses for receiving and processing packets on its core.

PMD threads and the packet processing load

Not all Rx queues carry the same amount of traffic, and some might carry no traffic at all, so some PMD threads will do more packet processing than others. In other words, the packet processing load is not balanced across PMD threads.

In the worst case, some PMD threads could be overloaded processing packets while other PMD threads, possibly added to increase throughput, do nothing. In this scenario, some cores are not helping to increase the maximum possible throughput as their PMD threads have no useful work to do.

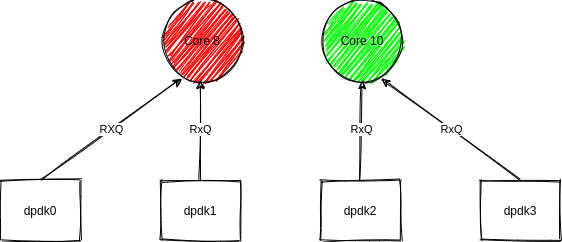

For example, in Figure 1, dpdk0 and dpdk1 ports have a lot of traffic and the PMD thread on core 8 is overloaded processing those packets. The dpdk2 and dpdk3 ports have no traffic and the PMD thread on core 10 is idle.

Whenever there is a reconfiguration, such as adding new ports in OVS-DPDK, Rx queues are reassigned to PMD threads. The primary goal of reassigning is that the Rx queues requiring the most processing are assigned to different PMD threads, so as many PMD threads as possible get to do useful work.

But what happens if the load was not known during the last reconfiguration? This can happen when a port was just added, when traffic had not yet started, or when the load changed over time and there were no further reconfigurations. This is where the PMD auto load balance feature can help.

PMD auto load balancing

If the PMD auto load balance feature is enabled, it runs periodically. When a certain set of conditions is met, it triggers a reassignment of the Rx queues to PMD threads to improve the balance of load between the PMD threads.

These are the main conditions it checks as prerequisites:

- If any of the current PMD threads are very busy processing packets.

- If the variance between the PMD thread loads is likely to improve after a reassignment.

- If it is not too soon since the last reassignment.

We can set exactly what constitutes very busy, improvement, and too soon from the command line. We’ll see that when we look at the user parameters shortly.

The nice thing about this feature is that it will only do a reassignment if it detects that a PMD thread is currently very busy and it estimates that there will be an improvement in variance after the reassignment. This helps to ensure we don’t have unnecessary reassignments.
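The three prerequisites can be pictured as a single gate that must pass before a reassignment is triggered. The following is only a sketch of that idea, not the OVS implementation: the function name `should_rebalance` and its positional arguments are hypothetical, the exact comparisons used inside OVS may differ, and the fallback values are the defaults described in this article.

```shell
# Hypothetical sketch of the three prerequisites (not OVS code).
# Usage: should_rebalance MAX_PMD_LOAD EST_IMPROVEMENT MINUTES_SINCE_LAST \
#                         [LOAD_THRESHOLD] [IMPROVEMENT_THRESHOLD] [INTERVAL]
should_rebalance() {
    max_load=$1        # load (%) of the busiest PMD thread
    improvement=$2     # estimated variance improvement (%) from a dry run
    since_last=$3      # minutes since the last reassignment
    load_thresh=${4:-95}
    improve_thresh=${5:-25}
    interval=${6:-1}

    [ "$max_load" -ge "$load_thresh" ] &&         # a PMD thread is very busy
    [ "$improvement" -ge "$improve_thresh" ] &&   # balance is expected to improve
    [ "$since_last" -ge "$interval" ]             # not too soon
}

if should_rebalance 94 100 2 80 50 1; then
    echo "reassign"
else
    echo "leave as is"
fi
```

With a busiest-thread load of 94%, an estimated improvement of 100%, and thresholds of 80% and 50%, all three checks pass and the sketch prints "reassign".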

User parameters for PMD auto load balancing

The PMD auto load balance feature is disabled by default. You can enable it at any time with:

$ ovs-vsctl --no-wait set open_vSwitch . other_config:pmd-auto-lb="true"

Let's look at the user parameters for this feature.

Load threshold

The load threshold is the percentage of processing cycles one of the PMD threads must consistently be using for one minute before a reassignment can occur. The default is 95%. Since Open vSwitch 2.15, you can set this from the command line. To set it to 70%, you would enter the following:

$ ovs-vsctl --no-wait set open_vSwitch . other_config:pmd-auto-lb-load-threshold="70"

Improvement threshold

The improvement threshold is the estimated improvement in load variance between the PMD threads that must be met before a reassignment can occur. To calculate the estimated improvement, a dry run of the reassignment is done and the estimated load variance is compared with the current variance. The default is 25%. Since Open vSwitch 2.15, you can set this from the command line. To set it to 50%, you would enter the following:

$ ovs-vsctl --no-wait set open_vSwitch . other_config:pmd-auto-lb-improvement-threshold="50"
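To make the comparison concrete, here is a rough sketch of how an improvement percentage could be derived from the current loads and the loads estimated by a dry run. It assumes the improvement is the relative reduction in variance and uses integer arithmetic, so results are truncated. The function names are hypothetical and the calculation inside OVS may differ in detail.

```shell
# Hypothetical sketch (not OVS code): integer variance of PMD loads (%).
variance() {
    n=$#
    sum=0
    for load in "$@"; do
        sum=$(( sum + load ))
    done
    mean=$(( sum / n ))
    sq=0
    for load in "$@"; do
        diff=$(( load - mean ))
        sq=$(( sq + diff * diff ))
    done
    echo $(( sq / n ))
}

# Relative reduction in variance, as a percentage.
# Arguments: two quoted, space-separated lists of loads (current, estimated).
estimated_improvement() {
    cur=$(variance $1)   # unquoted on purpose: split the list into words
    new=$(variance $2)
    if [ "$cur" -eq 0 ] || [ "$new" -ge "$cur" ]; then
        echo 0
    else
        echo $(( (cur - new) * 100 / cur ))
    fi
}

# One PMD thread at 94% and one idle, versus an even split after a dry run.
estimated_improvement "94 0" "47 47"   # prints 100
```

In this illustration the current variance is 2209 and the estimated variance is 0, so the improvement is 100%, which would satisfy either the default 25% threshold or the 50% threshold set above.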

Interval threshold

The interval threshold is the minimum time, in minutes, that must elapse between two reassignments. It prevents frequent reassignments when traffic patterns are changeable. For example, you might only want to allow a reassignment once every 10 minutes or every few hours. The default is one minute; to set it to 10 minutes, you would enter:

$ ovs-vsctl --no-wait set open_vSwitch . other_config:pmd-auto-lb-rebal-interval="10"

PMD auto load balancing in action

Let’s revisit the simple example from Figure 1 to see the PMD auto load balance feature in action. As shown in Figure 2, we start with all traffic on one PMD thread on core 8 and no traffic on the PMD thread on core 10.

We can check the Rx queue assignments with the following:

$ ovs-appctl dpif-netdev/pmd-rxq-show

The following output confirms that the PMD thread on core 8 has two active Rx queues while the PMD thread on core 10 has none:

pmd thread numa_id 0 core_id 8:
  isolated : false
  port: dpdk0 queue-id: 0 (enabled) pmd usage: 47 %
  port: dpdk1 queue-id: 0 (enabled) pmd usage: 47 %
pmd thread numa_id 0 core_id 10:
  isolated : false
  port: dpdk2 queue-id: 0 (enabled) pmd usage: 0 %
  port: dpdk3 queue-id: 0 (enabled) pmd usage: 0 %

Note: The percentage usage shown for each Rx queue here is tightly measured around processing packets for individual Rx queues. It does not include any PMD thread operational overhead or time spent polling while getting no packets. Use ovs-appctl dpif-netdev/pmd-stats-show to get more general PMD thread load totals.
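When there are many ports and queues, it can help to total the per-queue usage for each PMD thread. The following sketch does that with awk. The function name `sum_rxq_usage` is hypothetical, the sample input repeats the values from the output above with one entry per line, and the parsing assumes that layout, which may vary between OVS versions.

```shell
# Hypothetical helper (not part of OVS): sum the per-queue "pmd usage"
# values for each PMD thread. Reads pmd-rxq-show style text on stdin.
sum_rxq_usage() {
    awk '
        /^pmd thread/ {
            core = $NF              # last field, e.g. "8:"
            sub(/:$/, "", core)     # drop the trailing colon
            order[++n] = core
            total[core] = 0
        }
        /pmd usage:/ {
            for (i = 1; i < NF; i++)
                if ($i == "usage:") total[core] += $(i + 1)
        }
        END {
            for (j = 1; j <= n; j++)
                printf "core %s: %d %%\n", order[j], total[order[j]]
        }
    '
}

sum_rxq_usage <<'EOF'
pmd thread numa_id 0 core_id 8:
  isolated : false
  port: dpdk0 queue-id: 0 (enabled) pmd usage: 47 %
  port: dpdk1 queue-id: 0 (enabled) pmd usage: 47 %
pmd thread numa_id 0 core_id 10:
  isolated : false
  port: dpdk2 queue-id: 0 (enabled) pmd usage: 0 %
  port: dpdk3 queue-id: 0 (enabled) pmd usage: 0 %
EOF
```

For the sample input this prints a total of 94 % for core 8 and 0 % for core 10. On a live system, the same function could be fed from ovs-appctl dpif-netdev/pmd-rxq-show through a pipe.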

A case for reassignment

At this point, the traffic generator indicates a maximum of 11 Mpps bi-directional throughput. This is certainly a case where the PMD auto load balance feature can help by triggering a reassignment. Following the reassignment, the PMD thread on core 10 should be utilized.

Let’s set some thresholds and enable the feature:

$ ovs-vsctl set open_vSwitch . other_config:pmd-auto-lb-load-threshold="80"
$ ovs-vsctl set open_vSwitch . other_config:pmd-auto-lb-improvement-threshold="50"
$ ovs-vsctl set open_vSwitch . other_config:pmd-auto-lb-rebal-interval="1"
$ ovs-vsctl set open_vSwitch . other_config:pmd-auto-lb="true"

The logs confirm it has been enabled and the values we have set:

|dpif_netdev|INFO|PMD auto load balance is enabled interval 1 mins, pmd load threshold 80%, improvement threshold 50%

Soon, we see that a dry run has been completed and reconfiguration of the datapath has been requested to reassign the Rx queues to the PMD threads:

|dpif_netdev|INFO|PMD auto lb dry run. requesting datapath reconfigure.

Note: You can get more detailed information about the operation and estimates in the ovs-vswitchd.log file by enabling debug:

$ ovs-appctl vlog/set dpif_netdev:file:dbg

Check the results

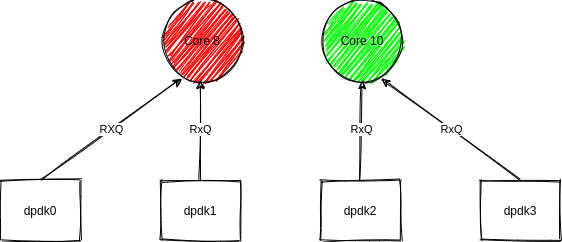

Now, if we re-check the stats, we can confirm that the two Rx queues that are receiving packets are assigned to different PMD threads.

pmd thread numa_id 0 core_id 8:
  isolated : false
  port: dpdk0 queue-id: 0 (enabled) pmd usage: 88 %
  port: dpdk2 queue-id: 0 (enabled) pmd usage: 0 %
pmd thread numa_id 0 core_id 10:
  isolated : false
  port: dpdk1 queue-id: 0 (enabled) pmd usage: 88 %
  port: dpdk3 queue-id: 0 (enabled) pmd usage: 0 %

We have gone from a situation where only one PMD thread was in use, to a case where both PMD threads are being utilized, as shown in Figure 3.

The traffic generator shows that, in this case, the throughput has risen from 11 Mpps, with packet drops on both the dpdk0 and dpdk1 ports, to the maximum configured rate of 21 Mpps without any drops.

Note: In the example above, each port has one Rx queue, but in many cases the workload can be distributed with more granularity by using multiple Rx queues per port together with receive-side scaling (RSS).

Current limitations of PMD auto load balancing

Of course, the example shown is a clear-cut case to illustrate the parameters and usage.

The reassignment code will assign the most heavily loaded Rx queues to different PMD threads. It will also try to ensure that all PMD threads have the same number of assigned Rx queues. This is a compromise between optimizing for the current traffic load and providing resilience for cases where traffic patterns might change and PMD auto load balance is not enabled.

In some cases, assigning different numbers of Rx queues to PMD threads could give an even better balance for the current load. This is currently only available by manual pinning and is an area for potential future optimization.
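For reference, manual pinning is done per interface with the pmd-rxq-affinity setting, which takes a comma-separated list of queue:core pairs. The helper below is a hypothetical sketch that only prints the command so it can be reviewed before being run. Note that, at least in the OVS versions discussed here, a PMD thread with a pinned Rx queue is treated as isolated and is not given other, unpinned queues, so check the documentation for your version before relying on this.

```shell
# Hypothetical helper (not part of OVS): print, but do not run, the command
# that pins Rx queues of a port to specific cores.
# Usage: pin_rxq_cmd PORT QUEUE:CORE [QUEUE:CORE ...]
pin_rxq_cmd() {
    port=$1
    shift
    affinity=$(IFS=,; echo "$*")   # join the queue:core pairs with commas
    echo "ovs-vsctl set Interface $port other_config:pmd-rxq-affinity=\"$affinity\""
}

# Pin Rx queue 0 of dpdk0 to the PMD thread on core 8.
pin_rxq_cmd dpdk0 0:8
```

Printing the command instead of executing it keeps the sketch safe to try on a machine where traffic is live.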

Wrap-up

In this article, we’ve looked at the Open vSwitch with DPDK PMD auto load balance feature, the problem it helps to solve, and how it operates. The feature is taking shape and we've added new user parameters in Open vSwitch 2.15. Feel free to try it out and give feedback on the ovs-discuss@openvswitch.org mailing list.

Last updated: February 5, 2024