A new feature in version 2.16 of Open vSwitch (OVS) helps developers scale the OVS-DPDK userspace datapath to use multiple cores. The Data Plane Development Kit (DPDK) is a popular set of networking libraries and drivers that provide fast packet processing and I/O.

After reading this article, you will understand the new group assignment type for spreading the datapath workload across multiple cores, how this type differs from the default cycles assignment type, and how to use the new type in conjunction with the auto load balance feature in the poll mode driver (PMD) to improve OVS-DPDK scaling.

How PMD threads manage packets in the userspace datapath

In the OVS-DPDK userspace datapath, receive queues (RxQs) store packets from an interface that need to be received, processed, and usually transmitted to another interface. This work is done in OVS-DPDK by PMD threads that run on a set of dedicated cores and that continually poll the RxQs for packets. In OVS-DPDK, these datapath cores are commonly referred to just as PMDs.

When there's more than one PMD, the workload should ideally be spread equally across them all. This prevents packet loss in cases where some PMDs may be overloaded while others have no work to do. In order to spread the workload across the PMDs, the interface RxQs that provide the packets need to be carefully assigned to the PMDs.

The user can manually assign individual RxQs to PMDs with the other_config:pmd-rxq-affinity option. By default, OVS-DPDK also automatically assigns them. In this article, we focus on OVS-DPDK's process for automatically assigning RxQs to PMDs.

OVS-DPDK automatic assignment

RxQs can be automatically assigned to PMDs when there is a reconfiguration, such as the addition or removal of either RxQs or PMDs. Automatic assignment also occurs if triggered by the PMD auto load balance feature or the ovs-appctl dpif-netdev/pmd-rxq-rebalance command.

The default cycles assignment type assigns the RxQs requiring the most processing cycles to different PMDs. However, the assignment also places the same or similar number of RxQs on each PMD.
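A small model makes this trade-off concrete. The Python sketch below assumes that cycles sorts RxQs by measured load, busiest first, and then hands them out with an up/down (serpentine) walk over the PMDs. That walk is what keeps the RxQ counts similar regardless of load. The function names and the percentage-based loads are illustrative only, not OVS code.

```python
from typing import Dict, List, Tuple

# Hypothetical model: each RxQ is (name, measured load in % of one PMD).
RxQ = Tuple[str, int]


def assign_cycles(rxqs: List[RxQ], pmds: List[int]) -> Dict[int, List[RxQ]]:
    """Simplified model of the 'cycles' type: sort RxQs by load (busiest
    first), then walk the PMD list up and down so each PMD receives a
    similar number of RxQs regardless of how loaded they are."""
    assignment: Dict[int, List[RxQ]] = {pmd: [] for pmd in pmds}
    # Stable sort: RxQs with equal load keep their input order.
    ordered = sorted(rxqs, key=lambda q: q[1], reverse=True)
    idx, step = 0, 1
    for rxq in ordered:
        assignment[pmds[idx]].append(rxq)
        nxt = idx + step
        if nxt < 0 or nxt >= len(pmds):
            # At either end of the PMD list, stay on the same PMD for the
            # next RxQ and reverse direction (e.g. 8, 10, 10, 8, ...).
            step = -step
        else:
            idx = nxt
    return assignment


def pmd_loads(assignment: Dict[int, List[RxQ]]) -> Dict[int, int]:
    """Sum the estimated load of the RxQs placed on each PMD."""
    return {pmd: sum(load for _, load in qs) for pmd, qs in assignment.items()}


# RxQ loads taken from the pmd-rxq-show example in this article.
rxqs = [("dpdk0", 70), ("dpdk1", 30), ("vhost0", 20), ("vhost1", 20)]
placement = assign_cycles(rxqs, [8, 10])
print(pmd_loads(placement))  # {8: 90, 10: 50}
```

With these inputs the model puts dpdk0 and vhost1 on PMD 8 and dpdk1 and vhost0 on PMD 10, two RxQs each, which mirrors the cycles output shown later in the article.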

The cycles assignment type is a trade-off between optimizing for the current workload and having the RxQs spread out across PMDs to mitigate against workload changes. The default type is designed this way because, when it was introduced in OVS 2.9, there was no PMD auto load balance feature to deal with workload changes.

The role of PMD auto load balance

PMD auto load balance is an OVS-DPDK feature that dynamically detects an imbalance in how the workload is spread across PMDs. If PMD auto load balance estimates that the workload can and should be spread more evenly, it triggers an RxQ-to-PMD reassignment. The reassignment, and the ability to rebalance the workload evenly among PMDs, depends on the RxQ-to-PMD assignment type.
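The "can and should be spread more evenly" decision can be pictured as a dry run: compute a candidate reassignment, then compare how unevenly the load is spread before and after. The sketch below is a simplified model under stated assumptions. Unevenness is measured as the variance of per-PMD load, and a rebalance fires only if some PMD exceeds a load threshold and the variance would drop by at least an improvement threshold. The threshold values (95% and 25%) and the function name are illustrative assumptions, and the sketch ignores how long load must be measured before acting.

```python
from statistics import pvariance
from typing import Sequence


def should_rebalance(current: Sequence[float], candidate: Sequence[float],
                     load_threshold: float = 95.0,
                     improvement_threshold: float = 25.0) -> bool:
    """Hypothetical auto-load-balance check on per-PMD loads (in %).

    `current` is the load per PMD now; `candidate` is the load per PMD
    that a dry-run reassignment is estimated to produce.
    """
    # Assumed rule: only consider rebalancing when some PMD is heavily loaded.
    if max(current) <= load_threshold:
        return False
    current_var = pvariance(current)
    if current_var == 0:
        return False  # Already perfectly even; nothing to gain.
    # Percentage reduction in variance that the candidate would deliver.
    improvement = (current_var - pvariance(candidate)) * 100 / current_var
    return improvement >= improvement_threshold


# dpdk0 grows to 80%: with the cycles placement PMD 8 reaches 100% (80 + 20),
# while a least-loaded dry run would give 80% / 70%.
print(should_rebalance([100, 50], [80, 70]))  # True
print(should_rebalance([90, 50], [70, 70]))   # False: no PMD above 95%
```

Requiring both conditions avoids reshuffling RxQs, which briefly disturbs packet processing, when no PMD is at risk or when the estimated gain is small.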

PMD auto load balance is discussed in more detail in another article.

The group RxQ-to-PMD assignment type

In OVS 2.16, the cycles assignment type is still the default, but a more optimized group assignment type was added.

The main difference between these assignment types is that the group assignment type removes the trade-off of having similar numbers of RxQs on each PMD. Instead, this assignment type spreads the workload purely based on finding the best current balance of the workload across PMDs. This improved optimization is feasible now because the PMD auto load balance feature is available to deal with possible workload changes.

The group assignment type also scales better, because it recomputes the estimated workload on each PMD before every RxQ assignment.
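The core of the group approach can be sketched as a greedy least-loaded assignment. This Python model assumes RxQs are taken busiest first and that each one goes to whichever PMD currently has the lowest estimated load, with the estimate updated after every placement. Function and variable names are illustrative, and RxQs without measured history get no special treatment here.

```python
from typing import Dict, List, Tuple

RxQ = Tuple[str, int]  # (name, measured load in % of one PMD)


def assign_group(rxqs: List[RxQ], pmds: List[int]) -> Dict[int, List[RxQ]]:
    """Simplified model of the 'group' type: busiest RxQs first, each one
    going to whichever PMD currently has the lowest estimated load."""
    assignment: Dict[int, List[RxQ]] = {pmd: [] for pmd in pmds}
    estimated: Dict[int, int] = {pmd: 0 for pmd in pmds}
    for name, load in sorted(rxqs, key=lambda q: q[1], reverse=True):
        # Re-evaluate every PMD's estimated load before each placement;
        # min() returns the first PMD in list order on ties.
        target = min(pmds, key=lambda pmd: estimated[pmd])
        assignment[target].append((name, load))
        estimated[target] += load
    return assignment


rxqs = [("dpdk0", 70), ("dpdk1", 30), ("vhost0", 20), ("vhost1", 20)]
placement = assign_group(rxqs, [8, 10])
print({pmd: sum(load for _, load in qs) for pmd, qs in placement.items()})
# {8: 70, 10: 70}
```

Nothing in the loop limits how many RxQs a PMD may receive, so PMD 10 ends up with three RxQs and PMD 8 with one, yet both carry the same estimated load.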

The increased optimization can mean a more equally distributed workload and hence more equally distributed available capacity across the PMDs. This improvement, along with PMD auto load balance, can mitigate against changes in workload caused by changes in traffic profiles.

An RxQ-to-PMD assignment comparison

We can see some of the differing characteristics of cycles and group with an example. If we run OVS 2.16 with four RxQs and two PMDs, we can check the log messages to confirm that the default cycles assignment type is used for assigning RxQs to PMDs:

```
|dpif_netdev|INFO|Performing pmd to rx queue assignment using cycles algorithm.
```

Then we can take a look at the current RxQ-to-PMD assignments and RxQ workload usage:

```
$ ovs-appctl dpif-netdev/pmd-rxq-show
pmd thread numa_id 0 core_id 8:
  isolated : false
  port: vhost1 queue-id: 0 (enabled) pmd usage: 20 %
  port: dpdk0 queue-id: 0 (enabled) pmd usage: 70 %
  overhead: 0 %
pmd thread numa_id 0 core_id 10:
  isolated : false
  port: vhost0 queue-id: 0 (enabled) pmd usage: 20 %
  port: dpdk1 queue-id: 0 (enabled) pmd usage: 30 %
  overhead: 0 %
```

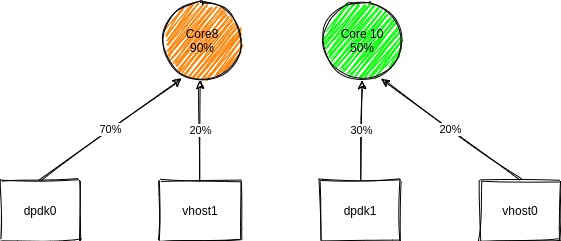

The workload is visualized in Figure 1.

The output shows that the cycles assignment type has done a good job of keeping the two RxQs that require the most cycles (dpdk0 70% and dpdk1 30%) on different PMDs. Otherwise, one PMD would be at 100% and Rx packets might be dropped as a result.

The output also shows that the assignment insists on both PMDs having an equal number of RxQs, two each. This means that PMD 8 is 90% loaded while PMD 10 is 50% loaded.

That is not a problem with the current traffic profile, because both PMDs have enough processing cycles to handle the load. However, it does mean that PMD 8 has available capacity of only 10% to account for any traffic profile changes that require more processing. If, for example, the dpdk0 traffic profile changed and the required workload increased by more than 10%, PMD 8 would be overloaded and packets would be dropped.
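The headroom arithmetic can be checked directly from the cycles output. This sketch reads the pmd usage percentages as percentage points of one PMD's cycles, which is an interpretation for illustration. The helper names are hypothetical.

```python
from typing import Dict, List

# Per-PMD RxQ loads (in % of one PMD) from the cycles pmd-rxq-show output.
cycles_placement: Dict[int, Dict[str, int]] = {
    8: {"vhost1": 20, "dpdk0": 70},
    10: {"vhost0": 20, "dpdk1": 30},
}


def headroom(placement: Dict[int, Dict[str, int]]) -> Dict[int, int]:
    """Spare capacity per PMD: 100% minus the summed RxQ load."""
    return {pmd: 100 - sum(q.values()) for pmd, q in placement.items()}


def overloaded_after_growth(placement: Dict[int, Dict[str, int]],
                            port: str, extra: int) -> List[int]:
    """PMDs that would exceed 100% if `port`'s load grew by `extra` points."""
    result = []
    for pmd, queues in placement.items():
        total = sum(queues.values()) + (extra if port in queues else 0)
        if total > 100:
            result.append(pmd)
    return result


print(headroom(cycles_placement))                              # {8: 10, 10: 50}
print(overloaded_after_growth(cycles_placement, "dpdk0", 15))  # [8]
```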

Now we can look at how the group assignment type optimizes for this kind of scenario. First we enable the group assignment type:

```
$ ovs-vsctl set Open_vSwitch . other_config:pmd-rxq-assign=group
```

The logs confirm that it is selected and immediately put to use:

```
|dpif_netdev|INFO|Rxq to PMD assignment mode changed to: `group`.
```

As mentioned earlier, the group assignment type eliminates the requirement of keeping the same number of RxQs per PMD, and bases its assignments on estimates of the least loaded PMD before every RxQ assignment. We can see how this policy affects the assignments:

```
$ ovs-appctl dpif-netdev/pmd-rxq-show
pmd thread numa_id 0 core_id 8:
  isolated : false
  port: dpdk0 queue-id: 0 (enabled) pmd usage: 70 %
  overhead: 0 %
pmd thread numa_id 0 core_id 10:
  isolated : false
  port: vhost0 queue-id: 0 (enabled) pmd usage: 20 %
  port: dpdk1 queue-id: 0 (enabled) pmd usage: 30 %
  port: vhost1 queue-id: 0 (enabled) pmd usage: 20 %
  overhead: 0 %
```

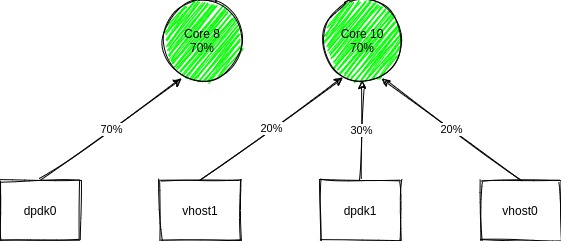

The workload is visualized in Figure 2.

Now PMD 8 and PMD 10 both have total loads of 70%, so the workload is better balanced between the PMDs.

In this case, if the dpdk0 traffic profile changes and the required workload increases by 10%, it could be handled by PMD 8 without any packet drops because there is 30% available capacity.
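The two placements can be compared under the same traffic change. This sketch takes the per-PMD loads from both pmd-rxq-show outputs and reports the smallest spare capacity left on any PMD after dpdk0 grows by 10 percentage points. As before, reading the percentages as percentage points of one PMD is an interpretation, and the names are hypothetical.

```python
from typing import Dict

Placement = Dict[int, Dict[str, int]]  # PMD core id -> {port: load in %}

cycles_placement: Placement = {8: {"vhost1": 20, "dpdk0": 70},
                               10: {"vhost0": 20, "dpdk1": 30}}
group_placement: Placement = {8: {"dpdk0": 70},
                              10: {"vhost0": 20, "dpdk1": 30, "vhost1": 20}}


def worst_case_headroom(placement: Placement, port: str, extra: int) -> int:
    """Smallest spare capacity (in % points) left on any PMD after
    `port`'s load grows by `extra` points; negative means overload."""
    return min(
        100 - sum(queues.values()) - (extra if port in queues else 0)
        for queues in placement.values()
    )


for name, placement in (("cycles", cycles_placement), ("group", group_placement)):
    print(name, worst_case_headroom(placement, "dpdk0", 10))
# cycles 0
# group 20
```

The cycles placement is left with no margin at all, while the group placement still has 20 points spare on its busiest PMD.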

An interesting case is where RxQs are new or have no measured workload. If they were all put on the least loaded PMD, that PMD's estimated workload would not change. It would keep being selected as the least loaded PMD and be assigned all the new RxQs. This might not be ideal if those RxQs later became active, so instead the group assignment type spreads RxQs with no measured history among PMDs.
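One way to express this rule is to switch criteria for unmeasured RxQs: instead of asking which PMD is least loaded, which would keep returning the same PMD, ask which PMD has the fewest RxQs. Using the RxQ count for unmeasured queues is an assumption made for illustration, since the article only says such RxQs are spread among PMDs. The queue names `new0` to `new2` are hypothetical.

```python
from typing import Dict, List, Tuple

RxQ = Tuple[str, int]  # (name, measured load in %; 0 means no history)


def assign_group_with_new(rxqs: List[RxQ], pmds: List[int]) -> Dict[int, List[str]]:
    """Hypothetical group-style assignment that spreads unmeasured RxQs."""
    assignment: Dict[int, List[str]] = {pmd: [] for pmd in pmds}
    load: Dict[int, int] = {pmd: 0 for pmd in pmds}
    for name, cycles in sorted(rxqs, key=lambda q: q[1], reverse=True):
        if cycles > 0:
            # Measured RxQ: pick the PMD with the lowest estimated load.
            target = min(pmds, key=lambda pmd: load[pmd])
        else:
            # No history: adding it would not change any estimate, so pick
            # the PMD with the fewest RxQs to avoid piling them on one PMD.
            target = min(pmds, key=lambda pmd: len(assignment[pmd]))
        assignment[target].append(name)
        load[target] += cycles
    return assignment


rxqs = [("dpdk0", 70), ("dpdk1", 30), ("new0", 0), ("new1", 0), ("new2", 0)]
print(assign_group_with_new(rxqs, [8, 10]))
# {8: ['dpdk0', 'new0', 'new2'], 10: ['dpdk1', 'new1']}
```

With a pure least-loaded rule, all three new RxQs would land on PMD 10, whose estimate stays at 30% no matter how many zero-load RxQs it receives.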

This example shows a change from the cycles to group assignment type during operation. Although that can be done, the assignment type is typically set when initializing OVS-DPDK. Reassignments can then be triggered by any of the following mechanisms:

- PMD auto load balance (provided that its user-defined thresholds are met)

- A change in configuration (adding or removing RxQs or PMDs)

- The ovs-appctl dpif-netdev/pmd-rxq-rebalance command

Other RxQ considerations

All of the OVS-DPDK assignment types are constrained by the granularity of the workload on each RxQ. In the example in the previous section, it was possible to spread the workload evenly. In a case where dpdk0 was 95% loaded instead, PMD 8 would have a 95% load, while PMD 10 would have a 70% load.

If you expect an interface to have a high traffic rate and hence a high required load, it is worth considering the addition of more RxQs in order to help split the traffic for that interface. More RxQs mean a greater granularity to help OVS-DPDK spread the workload more evenly across the PMDs.
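The granularity effect can be checked with a simplified least-loaded model of the group type. The sketch compares dpdk0 as a single RxQ at 95% against dpdk0 split across two RxQs. The even 47.5%/47.5% split is an assumption, since RSS hashing will rarely divide real traffic that evenly, and the queue names `dpdk0-q0` and `dpdk0-q1` are hypothetical.

```python
from typing import Dict, List, Tuple

RxQ = Tuple[str, float]  # (name, measured load in % of one PMD)


def group_loads(rxqs: List[RxQ], pmds: List[int]) -> Dict[int, float]:
    """Simplified least-loaded assignment; returns the load per PMD."""
    loads: Dict[int, float] = {pmd: 0.0 for pmd in pmds}
    for _, load in sorted(rxqs, key=lambda q: q[1], reverse=True):
        target = min(pmds, key=lambda pmd: loads[pmd])
        loads[target] += load
    return loads


others = [("dpdk1", 30.0), ("vhost0", 20.0), ("vhost1", 20.0)]
one_rxq = [("dpdk0-q0", 95.0)] + others
# Assumed even split of dpdk0's traffic across two RxQs.
two_rxqs = [("dpdk0-q0", 47.5), ("dpdk0-q1", 47.5)] + others

print(group_loads(one_rxq, [8, 10]))   # {8: 95.0, 10: 70.0}
print(group_loads(two_rxqs, [8, 10]))  # {8: 77.5, 10: 87.5}
```

The busiest PMD drops from 95% to 87.5%. The split is still not perfect, but the smaller pieces give the assignment more room to balance.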

Conclusion

This article looked at the new group assignment type from OVS 2.16 for RxQ-to-PMD assignments.

Although the existing cycles assignment type might be good enough in many cases, the new group assignment type allows OVS-DPDK to more evenly distribute the workload across the available PMDs.

This dynamic assignment has the benefit of allowing more optimal use of PMDs and providing a more equally distributed available capacity across PMDs, which in turn can make them more resilient against workload changes. For larger changes in workload, the PMD auto load balance feature can trigger reassignments.

OVS 2.16 still has the same defaults as OVS 2.15, so users for whom OVS 2.15 multicore scaling is good enough can continue to use it by default after an upgrade. However, the new option is available if required.

Further information about OVS-DPDK PMDs can be found in the documentation.

Last updated: October 6, 2022