Trends in the software engineering industry show that the Python programming language is growing in popularity. Properly managing Python dependencies is crucial to a healthy software development life cycle. In this article, we look at installing dependencies for Python applications into containerized environments, which have also become very popular. In particular, we introduce micropipenv, a tool we created as a compatibility layer on top of pip (the Python package installer) and related installation tools. The approach discussed in this article ensures that your applications ship with exactly the software you intend, which supports traceability and integrity. It also keeps Python application builds reproducible over time.

Python dependency management

Open source community efforts provide tools to manage application dependencies. The most popular such tools for Python are:

- pip (offered by the Python Packaging Authority)

- pip-tools

- Pipenv (offered by the Python Packaging Authority)

- Poetry

Each of these tools has its own pros and cons, so developers can choose the right tooling based on their preferences.

Virtual environment management

One feature that can be important to developers is implicit virtual environment management, offered by Pipenv and Poetry. This feature saves time when developing applications locally, but it can have drawbacks when installing and shipping the application in a container image. In particular, this extra layer can increase the size of the container image, because the tools themselves must be installed in the image alongside the application.

With pip and pip-tools, on the other hand, developers manage virtual environments explicitly when developing applications locally. An explicitly managed virtual environment keeps the application dependencies from interfering with system Python libraries or with dependencies shared across multiple projects.
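Explicit management usually means creating the environment yourself before running pip. The following minimal sketch does this with the standard library's venv module. The function name create_project_env is hypothetical, and passing with_pip=False (so pip is not bootstrapped into the environment) is a choice made only to keep the example fast and offline.

```python
import os
import tempfile
import venv
from pathlib import Path


def create_project_env(env_dir: Path) -> Path:
    """Create an isolated virtual environment and return its interpreter path."""
    # Mirror `python -m venv`: symlink the interpreter except on Windows.
    # with_pip=False keeps this sketch fast and offline; pass True in real
    # projects so pip is bootstrapped into the environment.
    venv.create(env_dir, clear=True, symlinks=(os.name != "nt"), with_pip=False)
    if os.name == "nt":
        return env_dir / "Scripts" / "python.exe"
    return env_dir / "bin" / "python"


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        env_dir = Path(tmp) / ".venv"
        python = create_project_env(env_dir)
        # pyvenv.cfg marks the directory as a virtual environment.
        print((env_dir / "pyvenv.cfg").exists(), python.exists())
```

In everyday use, the same result usually comes from running `python -m venv .venv` and then installing packages with that environment's own pip.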

The lock file

Even though pip is the most fundamental tool for installing Python dependencies, it does not provide a built-in mechanism for managing the whole dependency graph. pip-tools fills this gap: it manages a locked-down dependency listing that covers both the direct and the transitive dependencies derived from the application's requirements.

Stating all the dependencies in the lock file gives fine-grained control over which Python packages, in which versions, are installed at any point in time. If developers do not lock down all the dependencies, they can run into problems over time caused by new package releases, yanked releases (PEP 592), or the complete removal of packages from Python package indexes such as PyPI. Any of these events can change the set of dependencies that gets installed and introduce undesired, unpredictable issues. Maintaining the lock file and shipping it with the application avoids such issues and provides traceability to application maintainers and developers.
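To make the difference between a loose requirements listing and a locked one concrete, the sketch below flags requirement lines that do not pin an exact `==` version. It is a simplified, hypothetical checker (unpinned_requirements is not part of pip or pip-tools) and deliberately ignores URL and VCS requirements and other edge cases of the requirements-file format.

```python
import re

# Simplified rule for this sketch: a requirement is "locked" only if it pins an
# exact version with ==. URL/VCS requirements and other edge cases of the
# requirements-file format are not handled.
_PINNED = re.compile(r"^[A-Za-z0-9][A-Za-z0-9._-]*(\[[^\]]*\])?==[^\s;=]+")


def unpinned_requirements(text: str) -> list[str]:
    """Return requirement lines that do not pin an exact version."""
    unpinned = []
    for raw in text.splitlines():
        line = raw.split("#", 1)[0].strip()  # drop comments
        line = line.rstrip("\\").strip()     # drop line-continuation markers
        # Skip blank lines and option lines such as "--hash=sha256:...".
        if not line or line.startswith("-"):
            continue
        if not _PINNED.match(line):
            unpinned.append(line)
    return unpinned


sample = """\
requests==2.31.0 \\
    --hash=sha256:0000
flask>=2.0
numpy
"""
print(unpinned_requirements(sample))  # ['flask>=2.0', 'numpy']
```

A requirements file generated by pip-compile pins every package with `==`, so it would normally produce an empty result here, while a hand-written listing like the sample above leaves flask and numpy free to change between builds.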

Digests of installed artifacts

Although the pip-tools lock file pins every installed dependency to a specific version, it does not include digests of the installed artifacts by default. We recommend adding them by passing the --generate-hashes option to the pip-compile command. The digests trigger integrity checks of the artifacts during installation. Lock files managed by Pipenv or Poetry include digests automatically.

pip itself, on the other hand, cannot generate hashes for packages that are already installed. It does, however, verify artifacts during installation when their digests are stated explicitly in the requirements file. The --require-hashes option goes a step further and makes pip reject any requirement that lacks a digest.
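Conceptually, a hash check compares the digest of a downloaded artifact against the values listed in the lock file. The sketch below reproduces that idea with hashlib for SHA-256 only. It is an illustration, not pip's implementation, and the helper names and the wheel file name are hypothetical. As with multiple --hash options in pip, an artifact is accepted if it matches any one of the listed digests.

```python
import hashlib
import tempfile
from pathlib import Path


def sha256_digest(path: Path, chunk_size: int = 65536) -> str:
    """Compute the hex SHA-256 digest of a file, reading it in chunks."""
    h = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            h.update(chunk)
    return h.hexdigest()


def verify_artifact(path: Path, expected: list[str]) -> bool:
    """Accept the file if it matches any "sha256:<hex>" entry in expected."""
    actual = sha256_digest(path)
    for entry in expected:
        algorithm, _, value = entry.partition(":")
        if algorithm == "sha256" and value.lower() == actual:
            return True
    return False


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        artifact = Path(tmp) / "example-1.0-py3-none-any.whl"  # hypothetical file
        artifact.write_bytes(b"not a real wheel")
        good = "sha256:" + hashlib.sha256(b"not a real wheel").hexdigest()
        print(verify_artifact(artifact, ["sha256:" + "0" * 64, good]))  # True
        print(verify_artifact(artifact, ["sha256:" + "0" * 64]))  # False
```

Reading the file in chunks keeps memory use flat even for large artifacts.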

To support all the tools discussed in this section, we introduced micropipenv. The rest of this article explains how it works and how it fits into the Python ecosystem.

Installing Python dependencies with micropipenv

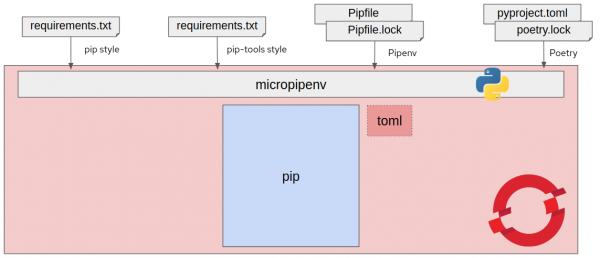

micropipenv parses the requirements or lock files produced by the tools discussed in the previous section: requirements.txt, Pipfile/Pipfile.lock, and pyproject.toml/poetry.lock. It works closely with these tools and acts as a small addition to pip that prepares the dependency installation while respecting requirements files and lock files. The core pip installation process, with all its main benefits, stays untouched.
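The sketch below illustrates the kind of detection step a compatibility layer needs before it can hand a project to pip: look for a known dependency file and report its type. The priority order and the names detect_dependency_source and _CANDIDATES are assumptions made for this example and do not describe micropipenv's actual selection logic.

```python
from pathlib import Path
from typing import Optional

# Assumed priority order for this sketch only: full lock files before a plain
# requirements.txt. micropipenv's real selection rules may differ.
_CANDIDATES = [
    ("Pipfile.lock", "pipenv"),
    ("poetry.lock", "poetry"),
    ("requirements.txt", "requirements"),
]


def detect_dependency_source(project_dir: Path) -> Optional[tuple[str, Path]]:
    """Return (kind, path) for the first dependency file found, or None."""
    for filename, kind in _CANDIDATES:
        candidate = project_dir / filename
        if candidate.is_file():
            return kind, candidate
    return None


if __name__ == "__main__":
    result = detect_dependency_source(Path.cwd())
    print(result if result else "no supported dependency file found")
```

Preferring lock files in this sketch reflects the point made earlier in the article: a lock file pins the whole dependency graph, whereas a hand-written requirements.txt may not.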

Because it supports all the files produced by pip, pip-tools, Pipenv, and Poetry, micropipenv lets users choose whichever tool they prefer for installing and managing Python dependencies in their projects. Once the application is ready to be shipped in a container image, developers can use any recent Python 3 Source-To-Image (S2I) container image, because these images include micropipenv. The resulting applications can then be used in deployments managed by Red Hat OpenShift.

Figure 1 shows micropipenv as a common layer for installing Python dependencies in an OpenShift deployment.

To enable micropipenv in the Python S2I build process, set the ENABLE_MICROPIPENV=1 environment variable. See the documentation for more details. This feature is available in all recent S2I container images based on Python 3 and built on top of Fedora, CentOS Linux, Red Hat Universal Base Images (UBI), or Red Hat Enterprise Linux (RHEL). Although micropipenv was originally designed for Python S2I container images, we believe it will find other use cases, such as installing dependencies without lock file management tools or converting between lock files of different types. We also found the tool suitable for assisting with dependency installation in Jupyter notebooks to support reproducible data science environments.
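Converting between lock file formats is mostly a matter of re-serializing pinned versions and digests. Assuming the usual Pipfile.lock layout (a JSON document whose default and develop sections map package names to entries with version, hashes, and optional markers keys), a minimal, hypothetical converter to a hash-pinned requirements.txt could look like the following. It is not micropipenv's code, and it skips Git, path, and editable entries.

```python
import json


def pipfile_lock_to_requirements(lock_text: str, section: str = "default") -> str:
    """Render one section of a Pipfile.lock as a hash-pinned requirements.txt."""
    lock = json.loads(lock_text)
    blocks = []
    for name, entry in sorted(lock.get(section, {}).items()):
        version = entry.get("version")  # e.g. "==2.31.0"
        if not version:
            # Git, path, and editable entries carry no "version"; skipped here.
            continue
        requirement = f"{name}{version}"
        if entry.get("markers"):
            requirement += f"; {entry['markers']}"
        hash_lines = [f"    --hash={h}" for h in entry.get("hashes", [])]
        # One requirement per logical line, continued with backslashes.
        blocks.append(" \\\n".join([requirement] + hash_lines))
    return "\n".join(blocks) + "\n" if blocks else ""


if __name__ == "__main__":
    example_lock = json.dumps({
        "_meta": {},
        "default": {
            "requests": {"version": "==2.31.0", "hashes": ["sha256:aaaa"]},
        },
        "develop": {},
    })
    print(pipfile_lock_to_requirements(example_lock), end="")
```

The digest in the example is a placeholder; with real lock data, pip installing the generated file would run in hash-checking mode because every requirement carries a --hash option.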

Note: Please check the Project Thoth scrum demo if you are interested in Thoth's Python S2I integration (the micropipenv demo starts at 9:00). You can also check the Improvements in OpenShift S2I talk presented at the DevNation 2019 conference. Slides and a description of the talk are also available online.

Benefits of micropipenv

We wanted to bring Pipenv or Poetry to the Python S2I build process because of their advantages for developers. From the perspective of RPM package maintenance, however, both Pipenv and Poetry are hard to package and maintain in the standard way we use RPM in Fedora, CentOS, and RHEL. Pipenv bundles all of its more than 50 dependencies, and the list of these dependencies changes constantly. Maintaining such complex tools, and fixing every security issue in their bundled dependencies, could be very time-consuming.

micropipenv also provides unified installation logs. The logs look the same regardless of which tool produced the dependency files, and they give insight into issues that arise during installation.

Another reason for creating micropipenv is that a Pipenv installation uses more than 18 MB of disk space, which is a lot for a tool that is needed only once during the container build.

micropipenv is already packaged as an RPM. It is also available on PyPI; the project is open source and developed on GitHub in the thoth-station/micropipenv repository.

At its core, micropipenv depends only on pip. Additional features become available when a TOML Python library is installed (toml or the legacy pytoml are optional dependencies). Keeping dependencies to a minimum makes micropipenv lightweight. The whole codebase is a single file, which makes the project far easier to maintain than the much larger Pipenv or Poetry codebases. All the installation procedures are reused from supported pip releases.
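The optional-dependency approach described above follows a common Python pattern: try to import a parser and enable the TOML-dependent features only if one is found. The sketch below shows that pattern. The function names are hypothetical, and checking the standard library's tomllib (Python 3.11 and later) first is an addition of this example, not something stated about micropipenv.

```python
import importlib
from types import ModuleType
from typing import Optional


def load_toml_backend() -> Optional[ModuleType]:
    """Return the first importable TOML parser module, or None."""
    # tomllib ships with Python 3.11+; toml and pytoml are third-party
    # packages that may or may not be installed.
    for name in ("tomllib", "toml", "pytoml"):
        try:
            return importlib.import_module(name)
        except ImportError:
            continue
    return None


def toml_support_enabled() -> bool:
    """Core features need only pip; TOML-based features need a parser."""
    return load_toml_backend() is not None


if __name__ == "__main__":
    backend = load_toml_backend()
    print(backend.__name__ if backend else "no TOML parser available")
```

Both tomllib and toml expose a `loads()` function, so code that parses TOML text can call it the same way whichever of the two was found.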

Using and developing micropipenv

You can get micropipenv in any of the following ways:

- Providing ENABLE_MICROPIPENV=1 to the Source-To-Image container build process

- Installing a micropipenv RPM by running:

$ dnf install micropipenv

- Installing a micropipenv Python package by running:

$ pip install micropipenv

To develop and improve micropipenv or submit feature requests, please visit the thoth-station/micropipenv repository.

Acknowledgment

micropipenv was developed at the Red Hat Artificial Intelligence Center of Excellence in Project Thoth and brought to you thanks to cooperation with the Red Hat Python maintenance team.

Last updated: February 5, 2024