All development topics

Insights and news on Red Hat developer tools, platforms, and more. Explore what is trending now.

Featured development topics

Drive team productivity and accelerate innovation.

Develop applications on the most popular Linux for the enterprise.

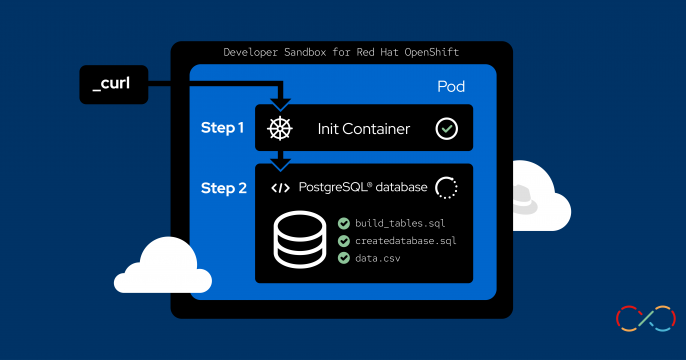

Kubernetes is the foundation of cloud software architectures.

Skip the noise that can come with developing apps.