Have you ever wanted to set up continuous integration (CI) for .NET Core in a cloud-native way, but you didn't know where to start? This article provides an overview, examples, and suggestions for developers who want to get started setting up a functioning cloud-native CI system for .NET Core.

We will use the new Red Hat OpenShift Pipelines feature to implement .NET Core CI. OpenShift Pipelines are based on the open source Tekton project. OpenShift Pipelines provide a cloud-native way to define a pipeline to build, test, deploy, and roll out your applications in a continuous integration workflow.

In this article, you will learn how to:

- Set up a simple .NET Core application.

- Install OpenShift Pipelines on Red Hat OpenShift.

- Create a simple pipeline manually.

- Create a Source-to-Image (S2I)-based pipeline.

Prerequisites

You will need cluster-administrator access to an OpenShift instance to be able to access the example application and follow all of the steps described in this article. If you don't have access to an OpenShift instance, or if you don't have cluster-admin privileges, you can run an OpenShift instance locally on your machine using Red Hat CodeReady Containers. Running OpenShift locally should be as easy as crc setup followed by crc start. Also, be sure to install the oc tool; we will use it throughout the examples.

When I wrote this article, I was using:

```
$ crc version
CodeReady Containers version: 1.12.0+6710aff
OpenShift version: 4.4.8 (embedded in binary)
```

The example application

To start, let's review the .NET Core project that we will use for the remainder of the article. It's a simple "Hello, World"-style web application that is built on top of ASP.NET Core.

The complete application is available from the Red Hat Developer s2i-dotnetcore-ex GitHub repository, in the branch dotnetcore-3.1-openshift-manual-pipeline. You can use this repository and branch directly for the steps described in this article. If you want to make changes and test how they affect your pipeline, you can fork the repository and use the fork.

The source repository also contains everything we need for continuous integration. Keeping the CI configuration next to the code has at least a couple of advantages: our complete setup is tracked and reproducible, and we can easily code-review any changes we make later on, including changes to the CI pipeline itself.

Project directories

Before we continue, let's review the dotnetcore-3.1-openshift-manual-pipeline branch of the repository.

This project contains two main directories: app and app.tests. The app directory contains the application code. This app was essentially created by dotnet new mvc. The app.tests directory contains unit tests that we want to run when we build the app. This was created by dotnet new xunit.

Another directory called ci contains the configuration we will use for our CI setup. I will describe the files in that directory in detail as we go through the examples.

Now, let's get to the fun part.

Install OpenShift Pipelines

Log in to the OpenShift cluster as an administrator and install OpenShift Pipelines. You can use the OperatorHub in your OpenShift console, as shown in Figure 1.

See the OpenShift documentation for a complete guide to installing the OpenShift Pipelines Operator.

Develop a pipeline manually

Now that we have our .NET Core application code and an OpenShift instance ready to use, we can create an OpenShift Pipelines-based system for building this code. For our first example, we'll create a pipeline manually.

Before we create the pipeline, let's start with a few things you need to know about OpenShift Pipelines.

About OpenShift Pipelines

OpenShift Pipelines offer a flexible way to build and deploy applications. They are not opinionated: you can do pretty much anything you want as part of a pipeline. To be this flexible, OpenShift Pipelines let us define several different types of objects. These objects represent various parts of the pipeline, such as inputs, outputs, stages, and even the connections between these elements. We can assemble these objects in whatever way gives us the build-test-deploy pipeline that we want.

Here are a few types of objects that we will use:

- An OpenShift Pipelines Task is a collection of steps that we want to run in order. There are many predefined Tasks, and we can make more as we need them. Tasks can have inputs and outputs. They can also have parameters that control aspects of the Task.

- A Pipeline is a collection of Tasks. It lets us group Tasks and run them in a particular order, and it lets us connect the output of one Task to the input of another.

- A PipelineResource indicates a Task's or Pipeline's input or output. There are several types, including git and image. The git PipelineResource indicates a Git repository, which is often used as the input to a Pipeline or Task. An image PipelineResource indicates a container image that we can use via the built-in OpenShift container registry.

These objects describe what a Pipeline is. We need at least two more things to run a pipeline. Creating and running a pipeline are two distinct operations in OpenShift. You can create a pipeline and never run it. Or, you can re-run the same pipeline multiple times.

- A TaskRun describes how to run a Task. It connects the inputs and outputs of a Task with PipelineResources.

- A PipelineRun describes how to run a Pipeline. It connects the pipeline's inputs and outputs with PipelineResources. A PipelineRun implicitly contains one or more TaskRuns, so we can skip creating TaskRuns manually.

Now we're ready to put all of these elements into action.

Step 1: Create a new pipeline project

First, we'll create a new OpenShift project to work in:

$ oc new-project dotnet-pipeline-app

To make a functional pipeline, we need to define a few objects, which we can put in a single file. In the repository we are using, this file is ci/simple/simple-pipeline.yaml. To separate the objects within the file, we use a line containing only three dashes (---).

Step 2: Define a PipelineResource

Next, we'll define a PipelineResource. The PipelineResource will provide the source Git repository to the rest of the pipeline:

```yaml
apiVersion: tekton.dev/v1alpha1
kind: PipelineResource
metadata:
  name: simple-dotnet-project-source
spec:
  type: git
  params:
    - name: revision
      value: dotnetcore-3.1-openshift-manual-pipeline
    - name: url
      value: https://github.com/redhat-developer/s2i-dotnetcore-ex
```

An object of kind: PipelineResource has a name (metadata.name) and a type of resource (spec.type). Here, we use the type git to indicate that this PipelineResource represents a Git repository. The parameters to this PipelineResource specify the Git repository that we want to use.

If you are using your own fork, please adjust the URL and branch name.
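For example, suppose your fork lives under a GitHub account called your-github-user and your changes are on a branch called my-ci-changes. Both names are placeholders for illustration, not real accounts or branches. Only the two parameter values change. The revision parameter of a git PipelineResource also accepts a tag or a commit SHA, so you could pin the build to an exact commit instead:

```yaml
apiVersion: tekton.dev/v1alpha1
kind: PipelineResource
metadata:
  name: simple-dotnet-project-source
spec:
  type: git
  params:
    # Hypothetical branch in your fork; a tag or commit SHA also works here.
    - name: revision
      value: my-ci-changes
    # Placeholder account name: replace your-github-user with your own.
    - name: url
      value: https://github.com/your-github-user/s2i-dotnetcore-ex
```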

Step 3: Define the pipeline

Next, we define a pipeline. The Pipeline instance will coordinate all of the tasks that we want to run:

```yaml
apiVersion: tekton.dev/v1beta1
kind: Pipeline
metadata:
  name: simple-dotnet-pipeline
spec:
  resources:
    - name: source-repository
      type: git
  tasks:
    - name: simple-build-and-test
      taskRef:
        name: simple-publish
      resources:
        inputs:
          - name: source
            resource: source-repository
```

This Pipeline uses a resource (a PipelineResource) with the name of source-repository, which should be a git repository. It uses just one Task (via the taskRef with a name of simple-publish) that will build our source code. We will connect the input of the pipeline (source-repository resource) to the input of the task (source input resource).
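Tasks in a Pipeline run in parallel unless something orders them. Tekton's runAfter field is the explicit way to order them. As a sketch, suppose you also had a Task named simple-test that runs the unit tests. That Task is hypothetical and does not exist in the repository, and so does the pipeline name below. The publish task could then wait for it like this. Each Task receives its own copy of the source-repository input:

```yaml
apiVersion: tekton.dev/v1beta1
kind: Pipeline
metadata:
  name: simple-dotnet-pipeline-with-tests   # hypothetical name
spec:
  resources:
    - name: source-repository
      type: git
  tasks:
    # Hypothetical Task: a Task named simple-test must be defined separately.
    - name: unit-tests
      taskRef:
        name: simple-test
      resources:
        inputs:
          - name: source
            resource: source-repository
    - name: simple-build-and-test
      runAfter:
        - unit-tests   # start only after unit-tests has succeeded
      taskRef:
        name: simple-publish
      resources:
        inputs:
          - name: source
            resource: source-repository
```

If unit-tests fails, the PipelineRun fails and the publish task never starts.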

Step 4: Define and build a task

Next, we define a simple Task that takes a git source repository as its input and builds the code in it:

```yaml
apiVersion: tekton.dev/v1beta1
kind: Task
metadata:
  name: simple-publish
spec:
  resources:
    inputs:
      - name: source
        type: git
  steps:
    - name: simple-dotnet-publish
      image: registry.access.redhat.com/ubi8/dotnet-31 # .NET Core SDK
      securityContext:
        runAsUser: 0 # UBI 8 images generally run as non-root
      script: |
        #!/usr/bin/bash
        dotnet --info
        cd source
        dotnet publish -c Release -r linux-x64 --self-contained false "app/app.csproj"
```

This Task takes a PipelineResource of type git that represents the application source code. It runs the listed steps to build the source code using the ubi8/dotnet-31 container image. This container image is published by Red Hat and is optimized for building .NET Core applications in Red Hat Enterprise Linux (RHEL) and OpenShift. We also specify, via script, the exact steps to build our .NET Core application, which is essentially just a dotnet publish.
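The pipeline task is called simple-build-and-test, but this Task only publishes the application. The steps of a Task run one after another in the same pod and share the cloned source. If a step fails, the steps after it do not run. One minimal way to also run the xUnit tests is to put a dotnet test step in front of the publish step. In the sketch below, the Task name simple-test-and-publish and the step name simple-dotnet-test are our own hypothetical choices. The test project path comes from the repository layout described earlier:

```yaml
apiVersion: tekton.dev/v1beta1
kind: Task
metadata:
  name: simple-test-and-publish   # hypothetical name, not in the repository
spec:
  resources:
    inputs:
      - name: source
        type: git
  steps:
    # Runs first; a non-zero exit code stops the Task before publishing.
    - name: simple-dotnet-test
      image: registry.access.redhat.com/ubi8/dotnet-31 # .NET Core SDK
      securityContext:
        runAsUser: 0 # UBI 8 images generally run as non-root
      script: |
        #!/usr/bin/bash
        set -e  # stop at the first failing command
        cd source
        dotnet test "app.tests/app.tests.csproj"
    # Same publish step as in simple-publish.
    - name: simple-dotnet-publish
      image: registry.access.redhat.com/ubi8/dotnet-31 # .NET Core SDK
      securityContext:
        runAsUser: 0
      script: |
        #!/usr/bin/bash
        cd source
        dotnet publish -c Release -r linux-x64 --self-contained false "app/app.csproj"
```

To try it, you would also change the taskRef in the Pipeline from simple-publish to simple-test-and-publish.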

Step 5: Describe and apply the pipeline

We have everything that we need to describe our pipeline. Our final ci/simple/simple-pipeline.yaml file should look like this:

```yaml
apiVersion: tekton.dev/v1alpha1
kind: PipelineResource
metadata:
  name: simple-dotnet-project-source
spec:
  type: git
  params:
    - name: revision
      value: dotnetcore-3.1-openshift-manual-pipeline
    - name: url
      value: https://github.com/redhat-developer/s2i-dotnetcore-ex
---
apiVersion: tekton.dev/v1beta1
kind: Pipeline
metadata:
  name: simple-dotnet-pipeline
spec:
  resources:
    - name: source-repository
      type: git
  tasks:
    - name: simple-build-and-test
      taskRef:
        name: simple-publish
      resources:
        inputs:
          - name: source
            resource: source-repository
---
apiVersion: tekton.dev/v1beta1
kind: Task
metadata:
  name: simple-publish
spec:
  resources:
    inputs:
      - name: source
        type: git
  steps:
    - name: simple-dotnet-publish
      image: registry.access.redhat.com/ubi8/dotnet-31 # .NET Core SDK
      securityContext:
        runAsUser: 0 # UBI 8 images generally run as non-root
      script: |
        #!/usr/bin/bash
        dotnet --info
        cd source
        dotnet publish -c Release -r linux-x64 --self-contained false "app/app.csproj"
```

We can now apply it to our OpenShift instance:

$ oc apply -f ci/simple/simple-pipeline.yaml

Applying the file to our OpenShift instance makes OpenShift update its current configuration to match what we have specified in the YAML file. That includes creating any objects (such as Pipelines, PipelineResources, and Tasks) that did not previously exist. If objects with the same names and types already exist, they are modified to match our YAML file.

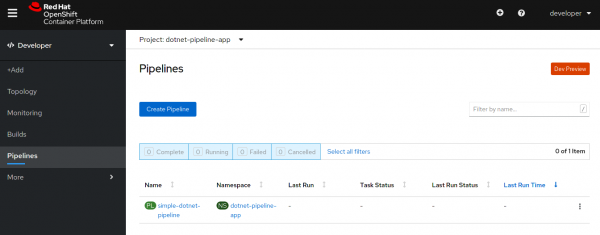

If you look in the Pipelines section of the OpenShift developer console (run crc console if you are using CodeReady Containers), you should see that this pipeline is now available, as shown in Figure 2.

Did you notice that the pipeline is not running? We'll deal with that next.

Step 6: Run the pipeline

To run the pipeline, we will create a PipelineRun object that associates the PipelineResource with the Pipeline itself, and actually runs everything. The PipelineRun object for this example is ci/simple/run-simple-pipeline.yaml, as shown here:

```yaml
apiVersion: tekton.dev/v1beta1
kind: PipelineRun
metadata:
  generateName: run-simple-dotnet-pipeline-
spec:
  pipelineRef:
    name: simple-dotnet-pipeline
  resources:
    - name: source-repository
      resourceRef:
        name: simple-dotnet-project-source
```

This YAML file defines an object of kind: PipelineRun, which uses the simple-dotnet-pipeline (indicated with the pipelineRef). It associates the simple-dotnet-project-source PipelineResource that we defined earlier with the input resource that the simple-dotnet-pipeline expects.

Next, we will use oc create (instead of oc apply) to create and run the pipeline:

$ oc create -f ci/simple/run-simple-pipeline.yaml

We need to use oc create here because each PipelineRun represents an actual invocation of the pipeline. We don't want to apply an update to a previous run of a Pipeline. We want to run the pipeline (again).

This is also why we used generateName instead of name in the PipelineRun YAML. Object names must be unique (per kind, within a project), and that includes PipelineRuns. If we gave our PipelineRun a fixed name, we wouldn't be able to oc create it a second time. To work around this, we tell OpenShift to generate a new name, using our provided value as a prefix. This allows us to run oc create -f ci/simple/run-simple-pipeline.yaml multiple times. Each time we enter this command, it runs the pipeline in OpenShift again.

You should now see the pipeline running in the OpenShift console, as shown in Figure 3. Wait a little bit, and it will complete.
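As noted earlier, a PipelineRun creates its TaskRuns for you. While you are iterating on a single Task, though, it can be handy to run that Task directly. Below is a minimal TaskRun sketch for the simple-publish Task that reuses the simple-dotnet-project-source PipelineResource. It also uses generateName, so you would save it to a file of your choice and start it with oc create -f, the same way as the PipelineRun:

```yaml
apiVersion: tekton.dev/v1beta1
kind: TaskRun
metadata:
  # A fresh name is generated on every oc create.
  generateName: run-simple-publish-
spec:
  taskRef:
    name: simple-publish
  resources:
    inputs:
      # Bind the Task's "source" input to the existing git PipelineResource.
      - name: source
        resourceRef:
          name: simple-dotnet-project-source
```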

Develop an S2I-based pipeline

In the previous section, we created a pipeline manually. For that, we had to write out all the steps to publish our application. We had to list out the exact steps to build our code, as well as the container image we wanted to use to build it. We also would have had to list out any other steps, such as running tests.

We can make our lives easier by re-using predefined tasks. The predefined s2i-dotnet-3 Task already knows how to build a .NET Core application using the s2i-dotnetcore images. In this section, you'll learn how to build an S2I-based pipeline.

Step 1: Install the .NET Core s2i tasks

Let's start by installing the s2i tasks for .NET Core 3.x:

$ oc apply -f https://raw.githubusercontent.com/openshift/pipelines-catalog/master/task/s2i-dotnet-3-pr/0.1/s2i-dotnet-3-pr.yaml

Step 2: Add an .s2i/environment file to the repository

We also need to tell the S2I build system which .NET Core projects to use for testing and deployment. We can do that by adding a .s2i/environment file to the repository with the following two lines:

```
DOTNET_STARTUP_PROJECT=app/app.csproj
DOTNET_TEST_PROJECTS=app.tests/app.tests.csproj
```

DOTNET_STARTUP_PROJECT specifies what project (or application) we want to run in the final built container. DOTNET_TEST_PROJECTS specifies which projects (if any) we want to run to test our code before we build the application container.

Step 3: Define the pipeline

Now, let's define the PipelineResources and the Pipeline in a single file. This time we don't write our own Task. Instead, we use the s2i-dotnet-3-pr task that we just installed. We will also include a second PipelineResource, with type: image, that represents the output container image. This pipeline will build the source code and then push the just-built application image into OpenShift's built-in container registry. Our ci/s2i/s2i-pipeline.yaml should look like this:

```yaml
apiVersion: tekton.dev/v1alpha1
kind: PipelineResource
metadata:
  name: s2i-dotnet-project-source
spec:
  type: git
  params:
    - name: revision
      value: dotnetcore-3.1-openshift-manual-pipeline
    - name: url
      value: https://github.com/redhat-developer/s2i-dotnetcore-ex
---
apiVersion: tekton.dev/v1alpha1
kind: PipelineResource
metadata:
  name: s2i-dotnet-image
spec:
  type: image
  params:
    - name: url
      value: image-registry.openshift-image-registry.svc:5000/dotnet-pipeline-app/app:latest
---
apiVersion: tekton.dev/v1beta1
kind: Pipeline
metadata:
  name: s2i-dotnet-pipeline
spec:
  resources:
    - name: source-repository
      type: git
    - name: image
      type: image
  tasks:
    - name: s2i-build-source
      taskRef:
        name: s2i-dotnet-3-pr
      params:
        - name: TLSVERIFY
          value: "false"
      resources:
        inputs:
          - name: source
            resource: source-repository
        outputs:
          - name: image
            resource: image
```

Note how we provided the s2i-dotnet-3-pr task with the source-repository resource and the image resource. This task already knows how to build a .NET Core application from source code (based on the contents of the .s2i/environment file) and create a container image that includes the just-built application.

Note: Keep in mind that you can only push the container image to the namespace of your OpenShift project, which in this case is dotnet-pipeline-app. If we used an image URL that placed the container image somewhere other than /dotnet-pipeline-app/, we would run into permission errors.

Step 4: Apply the pipeline in OpenShift

Now let's set up this pipeline in OpenShift:

$ oc apply -f ci/s2i/s2i-pipeline.yaml

The new s2i-dotnet-pipeline should now show up in the Pipelines section of the OpenShift developer console, as shown in Figure 4.

Step 5: Run the pipeline

To run this pipeline, we will create another PipelineRun, just like we did in the previous example. Here's our ci/s2i/run-s2i-pipeline.yaml file:

```yaml
apiVersion: tekton.dev/v1beta1
kind: PipelineRun
metadata:
  generateName: run-s2i-dotnet-pipeline-
spec:
  serviceAccountName: pipeline
  pipelineRef:
    name: s2i-dotnet-pipeline
  resources:
    - name: source-repository
      resourceRef:
        name: s2i-dotnet-project-source
    - name: image
      resourceRef:
        name: s2i-dotnet-image
```

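Unlike the first PipelineRun, this one sets serviceAccountName: pipeline. The run therefore uses the pipeline service account, which the OpenShift Pipelines Operator creates in each project and which has the permissions needed to build and push the image. Run it with: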

$ oc create -f ci/s2i/run-s2i-pipeline.yaml

When you look at the OpenShift developer console, you can see the pipeline running (or already completed). The pipeline log should look something like what's shown in Figure 5.

This time, we can see that the pipeline started up, ran all of our tests, published our application, created a container image with it, and pushed the just-built container into the built-in container registry in OpenShift.

Nice, isn't it?
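A PipelineResource also doesn't have to exist as a separate object before the run. In the Tekton v1beta1 API, a PipelineRun can embed a resource definition with resourceSpec instead of pointing to one with resourceRef. In the sketch below, the run builds the same branch without relying on the s2i-dotnet-project-source object. You could change the url and revision values to build a fork or another branch without editing any stored resource:

```yaml
apiVersion: tekton.dev/v1beta1
kind: PipelineRun
metadata:
  generateName: run-s2i-dotnet-pipeline-
spec:
  serviceAccountName: pipeline
  pipelineRef:
    name: s2i-dotnet-pipeline
  resources:
    # Inline git resource instead of a resourceRef.
    - name: source-repository
      resourceSpec:
        type: git
        params:
          - name: revision
            value: dotnetcore-3.1-openshift-manual-pipeline
          - name: url
            value: https://github.com/redhat-developer/s2i-dotnetcore-ex
    # The output image still comes from the existing PipelineResource.
    - name: image
      resourceRef:
        name: s2i-dotnet-image
```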

Conclusion

If you followed along with this article, then you should now know how to create a code-first implementation of cloud-ready CI pipelines to build, test, and publish container images for your .NET Core applications. Now that you are familiar with this procedure, why not try it out for your applications?

If you are looking for ideas to build on what you've learned, here are some suggestions:

- We triggered all of our pipeline runs manually, but that's obviously not what you want for a real continuous integration setup. Consider extending the pipeline to run on triggers and webhooks from your source repository.

- We limited our CI pipeline to stop after building and publishing container images. Try creating a pipeline that goes all the way to deploying your project in OpenShift.

Additional resources

- Learn more about Red Hat CodeReady Containers.

- Get complete instructions for creating applications with OpenShift Pipelines.

- Check out the Tekton Task/Pipelines catalog.

- Also, see the OpenShift Pipelines catalog.

- Get the s2i dotnet task.

- Get the S2I container images for .NET Core.