In Kubernetes, a namespace divides cluster resources and provides a boundary for policies, authorization, and cluster objects. In this article, we cover two types of Operators: namespace-scoped and cluster-scoped. We then walk through an example of migrating from one to the other, which illustrates the difference between the two.

Namespace-scoped and cluster-scoped

A namespace-scoped Operator is defined within the boundary of a namespace, so it can be upgraded without impacting other namespaces. It watches objects within that namespace and uses a Role and RoleBinding to define the role-based access control (RBAC) policies for accessing those resources.

Meanwhile, a cluster-scoped Operator promotes reusability and manages its defined resources across the cluster. It watches all namespaces in a cluster and uses a ClusterRole and ClusterRoleBinding to define the RBAC policies for authorizing cluster objects. Two examples of cluster-scoped Operators are istio-operator and cert-manager. The istio-operator can be deployed as a cluster-scoped Operator to manage the service mesh for an entire cluster, while cert-manager is used to issue certificates for an entire cluster.

An Operator can support either type of installation, so choose based on your requirements. Upgrading a cluster-scoped Operator can impact the resources it manages across the entire cluster. Upgrading a namespace-scoped Operator is easier because it only affects the resources within its own namespace.

Migration guide: Namespace-scoped to cluster-scoped

Let's generate two Operators: namespace-scope-op and cluster-scope-op using operator-sdk:

$ operator-sdk new namespace-scope-op
$ operator-sdk new cluster-scope-op

By default, both Operators are namespace-scoped. Let’s add the kind type Foo to both of these Operators:

$ operator-sdk add api --api-version=foo.example.com/v1alpha1 --kind=Foo

I’ll now make changes to the cluster-scope Operator to show how it is different from the namespace-scope Operator.

Step 1: Watch all of the namespaces

The first change we need to make in the cluster-scope-op Operator is to leave the namespace option empty so that it watches all of the namespaces. Make this change in:

cluster-scope-op/cmd/manager/main.go

Figure 1 shows the difference between the results for cluster-scope on the left and namespace-scope on the right.
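
The effect of an empty namespace option can be modelled in a few lines of Go. This is only a sketch of the behavior, not the code that operator-sdk generates: managerOptions and inScope are hypothetical stand-ins for the manager's namespace setting and its watch filter, and the namespace names are made up.

```go
package main

import "fmt"

// managerOptions is a hypothetical stand-in for the options that main.go
// passes to the manager; only the namespace setting is modelled here.
type managerOptions struct {
	Namespace string // "" means "watch every namespace"
}

// inScope reports whether an object living in objectNamespace would be
// watched by a manager configured with opts.
func inScope(opts managerOptions, objectNamespace string) bool {
	return opts.Namespace == "" || opts.Namespace == objectNamespace
}

func main() {
	namespaced := managerOptions{Namespace: "team-a"} // namespace-scoped
	clusterWide := managerOptions{Namespace: ""}      // cluster-scoped

	for _, ns := range []string{"team-a", "team-b"} {
		fmt.Printf("object in %s: namespace-scoped=%v cluster-scoped=%v\n",
			ns, inScope(namespaced, ns), inScope(clusterWide, ns))
	}
}
```

With the namespace left empty, objects in both team-a and team-b are in scope; with it set to team-a, only objects in team-a are.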

Step 2: Update the added API schema's scope

Next, we need to update the scope marker in our API type file, *_types.go. If you were setting the namespace scope, you would use:

// +kubebuilder:resource:path=foos,scope=Namespaced

To set the cluster scope, use:

// +kubebuilder:resource:path=foos,scope=Cluster

Figure 2 shows the difference between the results for cluster-scope on the left and namespace-scope on the right.
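
To make the structure of the two markers above explicit, here is a small Go sketch that pulls the scope argument out of a marker comment. scopeFromMarker is a hypothetical helper written for this article; it is not how the CRD generator parses markers, and the fallback to Namespaced when no scope is given is an assumption.

```go
package main

import (
	"fmt"
	"strings"
)

// resourceMarker is the prefix shared by both markers shown above.
const resourceMarker = "+kubebuilder:resource:"

// scopeFromMarker is a hypothetical helper: it extracts the scope argument
// from a marker comment. It falls back to "Namespaced" when the marker has
// no scope argument (an assumed default).
func scopeFromMarker(comment string) (string, error) {
	text := strings.TrimSpace(strings.TrimPrefix(strings.TrimSpace(comment), "//"))
	if !strings.HasPrefix(text, resourceMarker) {
		return "", fmt.Errorf("not a resource marker: %q", comment)
	}
	scope := "Namespaced"
	// The arguments are comma-separated key=value pairs.
	for _, arg := range strings.Split(strings.TrimPrefix(text, resourceMarker), ",") {
		key, value, found := strings.Cut(arg, "=")
		if found && strings.TrimSpace(key) == "scope" {
			scope = strings.TrimSpace(value)
		}
	}
	if scope != "Namespaced" && scope != "Cluster" {
		return "", fmt.Errorf("unknown scope %q", scope)
	}
	return scope, nil
}

func main() {
	markers := []string{
		"// +kubebuilder:resource:path=foos,scope=Namespaced",
		"// +kubebuilder:resource:path=foos,scope=Cluster",
	}
	for _, m := range markers {
		scope, err := scopeFromMarker(m)
		if err != nil {
			fmt.Println("error:", err)
			continue
		}
		fmt.Println("scope:", scope)
	}
}
```

The value that comes out, Namespaced or Cluster, is the same value that ends up in the scope field of the generated CRD.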

Step 3: Generate the CRDs for the cluster-scope Operator

Now it's time to generate the custom resource definitions (CRDs) for the cluster-scope Operator:

cluster-scope-op-:$ operator-sdk generate crds
INFO[0000] Running CRD generator.
INFO[0000] CRD generation complete.

Figure 3 shows that the generated CRD was updated for the cluster-scope Operator (on the left) in *crd*.yaml.

Step 4: Update the kind type

At this point, we update the kind type for Role/RoleBinding to ClusterRole/ClusterRoleBinding. First, update the kind type from Role to ClusterRole in role.yaml:

kind: ClusterRole

Then, update the kind type from RoleBinding to ClusterRoleBinding and the roleRef kind to ClusterRole in role_binding.yaml:

kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: cluster-scope-op
subjects:
- kind: ServiceAccount
  name: cluster-scope-op
  namespace: ${NAMESPACE}
roleRef:
  kind: ClusterRole
  name: cluster-scope-op
  apiGroup: rbac.authorization.k8s.io

Caution: The exact resources and verbs needed should be specified in the cluster role. For security reasons, avoid using a wildcard (*) permission when defining cluster roles.
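
One way to act on this caution is to check the rules of a cluster role for wildcards before applying it. The Go sketch below is illustrative only: policyRule is a simplified, hypothetical stand-in for one entry in a ClusterRole's rules list, and the sample rules are invented for the example.

```go
package main

import "fmt"

// policyRule is a simplified, hypothetical stand-in for one entry in the
// rules list of a ClusterRole.
type policyRule struct {
	APIGroups []string
	Resources []string
	Verbs     []string
}

// wildcardFields returns the names of the fields in rule that contain "*".
func wildcardFields(rule policyRule) []string {
	var fields []string
	check := func(name string, values []string) {
		for _, v := range values {
			if v == "*" {
				fields = append(fields, name)
				return
			}
		}
	}
	check("apiGroups", rule.APIGroups)
	check("resources", rule.Resources)
	check("verbs", rule.Verbs)
	return fields
}

func main() {
	rules := []policyRule{
		// Explicit resources and verbs: what the caution recommends.
		{APIGroups: []string{"foo.example.com"}, Resources: []string{"foos"}, Verbs: []string{"get", "list", "watch"}},
		// Wildcards everywhere: what the caution warns against.
		{APIGroups: []string{"*"}, Resources: []string{"*"}, Verbs: []string{"*"}},
	}
	for i, rule := range rules {
		if fields := wildcardFields(rule); len(fields) > 0 {
			fmt.Printf("rule %d uses a wildcard in %v\n", i, fields)
		}
	}
}
```

Only the second rule is reported. Because a cluster role applies across every namespace, a wildcard there grants far more than the same wildcard in a namespaced Role.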

The cluster-scoped Operator is deployed in a namespace, but it manages resources across the whole cluster. By comparison, a namespace-scoped Operator is also deployed in a namespace but only manages resources in that namespace.

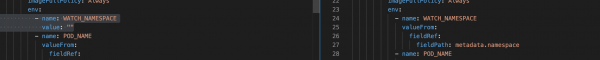

Step 5: Update the WATCH_NAMESPACE

Finally, we add a controller for the Foo kind:

$ operator-sdk add controller --api-version=foo.example.com/v1alpha1 --kind=Foo

Then we set WATCH_NAMESPACE to an empty string in operator.yaml so that the Operator watches all of the namespaces.

Figure 4 shows how this section looks now for the cluster-scope Operator (on the left) compared to the namespace-scope on the right.

Keep in mind that the Operator's watchers must be entitled to watch objects across the cluster. The watcher for the primary object is generated by default.
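
An empty WATCH_NAMESPACE is not the same as a missing one. The Go sketch below shows one way to tell the two apart, assuming the Operator reads the variable with a lookup such as os.LookupEnv. The function names watchNamespace and describeScope are hypothetical, and treating an unset variable as an error is an assumption of this sketch.

```go
package main

import (
	"fmt"
	"os"
)

const watchNamespaceEnvVar = "WATCH_NAMESPACE"

// watchNamespace returns the namespace to watch. It takes the lookup
// function as a parameter so that it can be exercised without touching the
// real environment. An unset variable is treated as an error (assumption);
// a variable set to "" is a valid value.
func watchNamespace(lookup func(string) (string, bool)) (string, error) {
	ns, found := lookup(watchNamespaceEnvVar)
	if !found {
		return "", fmt.Errorf("%s must be set", watchNamespaceEnvVar)
	}
	return ns, nil
}

// describeScope turns the configured value into a readable description.
func describeScope(ns string) string {
	if ns == "" {
		return "all namespaces"
	}
	return "namespace " + ns
}

func main() {
	ns, err := watchNamespace(os.LookupEnv)
	if err != nil {
		fmt.Println("configuration problem:", err)
		return
	}
	fmt.Println("watching", describeScope(ns))
}
```

Running this with WATCH_NAMESPACE="" reports all namespaces, while running it with the variable unset reports a configuration problem.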

Operator Lifecycle Manager

If you want to use the Operator Lifecycle Manager (OLM) for deploying the Operator, you can generate the ClusterServiceVersion (CSV) using the command:

$ operator-sdk generate csv --csv-version 0.0.1

The installModes in the CSV declare which namespace configurations the Operator supports. By default, the generated CSV supports every installMode except MultiNamespace:

installModes:
- supported: true
  type: OwnNamespace
- supported: true
  type: SingleNamespace
- supported: false
  type: MultiNamespace
- supported: true
  type: AllNamespaces

You can also define an Operator group. An OperatorGroup is an OLM resource that provides multitenant configuration to OLM-installed Operators:

apiVersion: operators.coreos.com/v1
kind: OperatorGroup
metadata:
  name: my-group
  namespace: my-namespace
spec:
  targetNamespaces:
  - my-namespace
  - my-other-namespace
  - my-other-other-namespace
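
The relationship between an OperatorGroup's targetNamespaces and the CSV's installModes can be sketched in Go. This is a simplified reading of the install modes rather than OLM's own logic: installModeFor is a hypothetical helper, and it assumes that no targets means AllNamespaces, several targets means MultiNamespace, and a single target is OwnNamespace or SingleNamespace depending on whether it is the group's own namespace.

```go
package main

import "fmt"

// installModeFor names the install mode that an OperatorGroup asks for,
// given the namespace the group lives in and its targetNamespaces.
// This is a simplified, hypothetical mapping.
func installModeFor(groupNamespace string, targets []string) string {
	switch {
	case len(targets) == 0:
		return "AllNamespaces"
	case len(targets) > 1:
		return "MultiNamespace"
	case targets[0] == groupNamespace:
		return "OwnNamespace"
	default:
		return "SingleNamespace"
	}
}

func main() {
	// installModes as listed in the CSV above.
	supported := map[string]bool{
		"OwnNamespace":    true,
		"SingleNamespace": true,
		"MultiNamespace":  false,
		"AllNamespaces":   true,
	}

	// The OperatorGroup from the example above.
	targets := []string{"my-namespace", "my-other-namespace", "my-other-other-namespace"}
	mode := installModeFor("my-namespace", targets)

	fmt.Printf("%s supported: %v\n", mode, supported[mode])
}
```

Under these assumptions, the sample OperatorGroup corresponds to MultiNamespace, which the CSV above marks as unsupported. To use that group, the CSV would need MultiNamespace set to true; for a cluster-scoped Operator, an OperatorGroup with no targetNamespaces matches AllNamespaces instead.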

Conclusion

That is all you need to know to turn your namespace-scoped Operator into a cluster-scoped Operator. Both Operator examples can be found in this repo.

Last updated: June 25, 2020