Building responsive applications is a never-ending task. With the rise of powerful multicore CPUs, more raw power is available for applications to consume. In Java, threads are used to make the application work on multiple tasks concurrently. A developer starts a Java thread in the program, and tasks are assigned to this thread to get processed. Threads can do a variety of tasks, such as reading from a file, writing to a database, taking input from a user, and so on.

In this article, we'll explain more about threads and introduce Project Loom, which supports high-throughput and lightweight concurrency in Java to help simplify writing scalable software.

Use threads for better scalability

Java makes it so easy to create new threads that, almost all the time, a program ends up creating more threads than the CPU can schedule in parallel. Let's say that we have a two-lane road (two cores of a CPU), and 10 cars want to use the road at the same time. Naturally, this is not possible, but think about how this situation is currently handled. Traffic lights are one way. Traffic lights allow a controlled number of cars onto the road and make the traffic use the road in an orderly fashion.

In computers, this role is played by a scheduler. The scheduler allocates a thread to a CPU core to get it executed. In the modern software world, the operating system fulfills this role of scheduling tasks (or threads) to the CPU.

In Java, each thread is mapped to an operating system thread by the JVM (almost all JVMs do that). With threads outnumbering the CPU cores, a good deal of CPU time is spent scheduling the threads onto the cores. If a thread goes into a wait state (e.g., waiting for a database call to respond), the thread is marked as paused and a different thread is given the CPU. This is called context switching (although a lot more is involved in doing so). Further, each thread has some memory allocated to it, and only a limited number of threads can be handled by the operating system.

Consider an application in which all the threads are waiting for a database to respond. Although the application is only waiting for the database, every blocked thread still holds memory and other operating system resources on the application computer. With the rise of web-scale applications, this threading model can become the major bottleneck for the application.
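To make the "everyone is waiting" situation concrete, here is a minimal sketch using only standard JDK classes. The class name `BlockedThreadsSketch` and the latch standing in for the database reply are assumptions made for illustration. It starts a number of ordinary threads, lets them all block, and counts how many are parked at the same moment.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.CountDownLatch;

class BlockedThreadsSketch {

    /**
     * Starts {@code count} ordinary threads that all block on the same latch
     * (a hypothetical stand-in for a slow database reply) and returns how many
     * of them are parked in the WAITING state at the same time.
     */
    static int countWaitingThreads(int count) {
        CountDownLatch reply = new CountDownLatch(1); // the "database reply"
        List<Thread> threads = new ArrayList<>();
        for (int i = 0; i < count; i++) {
            Thread t = new Thread(() -> {
                try {
                    reply.await(); // no useful work happens here, but the thread still exists
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt();
                }
            });
            t.start();
            threads.add(t);
        }

        int waiting = 0;
        for (Thread t : threads) {
            // Spin until the thread has actually parked inside await().
            while (t.getState() != Thread.State.WAITING) {
                Thread.yield();
            }
            waiting++;
        }

        reply.countDown(); // the "database" finally answers; release every thread
        for (Thread t : threads) {
            try {
                t.join();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        }
        return waiting;
    }

    public static void main(String[] args) {
        int cores = Runtime.getRuntime().availableProcessors();
        int waiting = countWaitingThreads(200);
        System.out.println(cores + " cores, " + waiting + " threads blocked at once");
    }
}
```

On a typical machine the number of blocked threads is far larger than the number of cores, and each of those threads keeps its stack allocated while it does nothing.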

Reactive programming

One solution is to use reactive programming. Briefly, instead of creating a thread for each concurrent (and blocking) task, a dedicated thread (called an event loop) works through all the tasks that would be assigned to separate threads in a non-reactive model, and processes each of them on the same CPU core. So, if a CPU has four cores, there may be multiple event loops, but not more than the number of CPU cores. This approach resolves the problem of context switching but introduces a lot of complexity in the program itself. This type of program also scales better, which is one reason reactive programming has become very popular in recent times. Vert.x is one such library that helps Java developers write code in a reactive manner.
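The event-loop idea can be sketched with plain JDK executors. This is not how Vert.x is implemented; it is a toy model under stated assumptions: the class name `EventLoopSketch`, the thread name `event-loop`, and the timer that stands in for non-blocking I/O are all made up for illustration. Every request is handled on one thread, and instead of blocking while it waits, a request registers a callback that is queued back onto the loop.

```java
import java.util.Set;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.ScheduledExecutorService;
import java.util.concurrent.TimeUnit;

class EventLoopSketch {

    /** Handles {@code requests} requests and returns the names of the threads that ran handler code. */
    static Set<String> handleRequests(int requests) {
        // The single "event loop" thread that runs all handler code.
        ExecutorService eventLoop =
                Executors.newSingleThreadExecutor(r -> new Thread(r, "event-loop"));
        // Hypothetical stand-in for non-blocking I/O: fires when the "reply" is ready.
        ScheduledExecutorService timer = Executors.newSingleThreadScheduledExecutor();

        Set<String> threadsUsed = ConcurrentHashMap.newKeySet();
        CountDownLatch done = new CountDownLatch(requests);

        for (int i = 0; i < requests; i++) {
            eventLoop.execute(() -> {
                threadsUsed.add(Thread.currentThread().getName());
                // Do not block here. Register a callback and return to the loop.
                timer.schedule(() -> eventLoop.execute(() -> {
                    threadsUsed.add(Thread.currentThread().getName());
                    done.countDown(); // request finished
                }), 50, TimeUnit.MILLISECONDS);
            });
        }

        try {
            done.await();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        } finally {
            eventLoop.shutdown();
            timer.shutdown();
        }
        return threadsUsed;
    }

    public static void main(String[] args) {
        System.out.println(handleRequests(100));
    }
}
```

Even this tiny sketch hints at the cost: the logic of one request is split across a handler and a callback, which is where the extra program complexity comes from.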

You can learn more about reactive programming here and in this free e-book by Clement Escoffier.

Scalability with minimal complexity

So, the thread-per-task model is easy to implement but not scalable. Reactive programming is more scalable, but the implementation is a bit more involved. A simple graph representing program complexity vs. program scalability would look like this:

What we need is a sweet spot, marked in the diagram above as the green dot, where we get web scale with minimal complexity in the application. Enter Project Loom. But first, let's see how the current one-thread-per-task model works.

How the current thread per task model works

Let's see it in action. First, let's write a simple program, an echo server, which accepts a connection and allocates a new thread to every new connection. Let's assume this thread is calling an external service, which sends the response after a few seconds. This mimics the wait state of the thread. So, a simple echo server would look like the example below. The full source code is available here.

    // Start listening on a socket
    ServerSocket server = new ServerSocket(5566);
    while (true) {
        Socket client = server.accept();
        EchoHandler handler = new EchoHandler(client);
        // Create a new thread for each new connection
        handler.start();
    }
    ...
    // EchoHandler extends Thread, so every handler runs on its own thread
    class EchoHandler extends Thread {
        public void run() {
            try {
                // Make a call to the dummy downstream system. This call returns after
                // a couple of seconds, simulating a wait/blocking call.
                byte[] output = new java.net.URL("http://localhost:9090").openStream().readNBytes(5);
                // Write something to the connection output stream.
                // Decode the bytes first; concatenating a byte[] directly would print its identity, not its content.
                writer.println("[echo] " + new String(output, java.nio.charset.StandardCharsets.UTF_8));
            } catch (java.io.IOException e) {
                e.printStackTrace();
            }
        }
    }

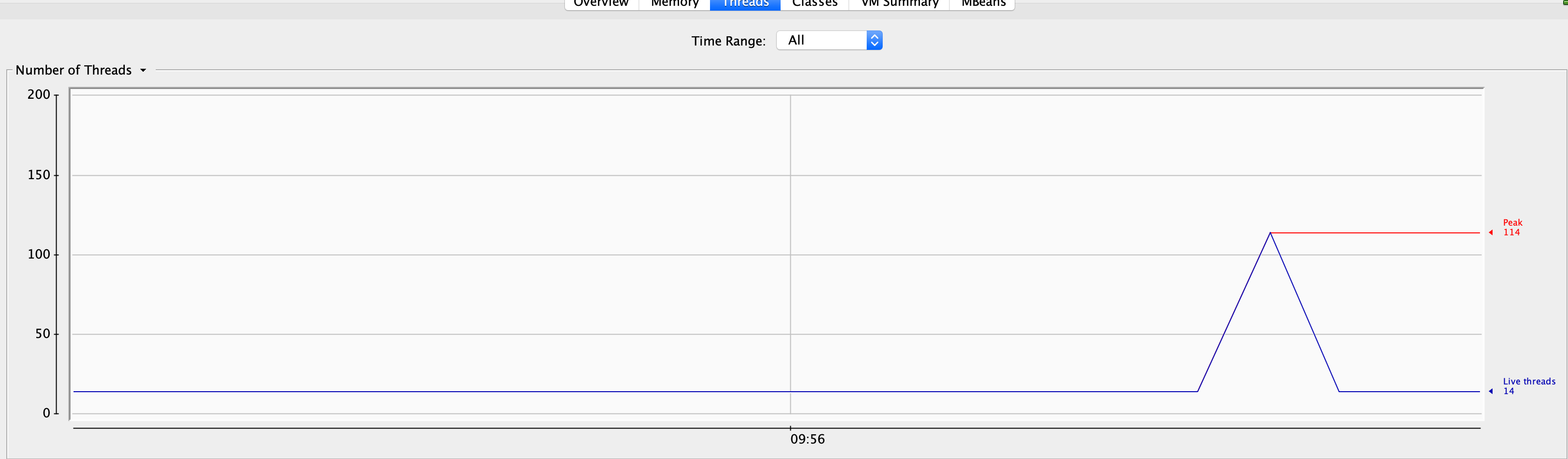

When I run this program and hit it with, say, 100 calls, the JVM thread graph (output from jconsole) shows a spike. The command I executed to generate the calls is very primitive; it fires 100 concurrent requests, which adds 100 JVM threads.

for i in {1..100}; do curl localhost:5566 & done
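The same spike can also be observed from inside the JVM. The sketch below is not the echo server. It assumes a hypothetical class `ThreadSpikeSketch` and uses a latch in place of the slow downstream call, then reads the live thread count from the standard `ThreadMXBean` before and while the handler threads are blocked.

```java
import java.lang.management.ManagementFactory;
import java.lang.management.ThreadMXBean;
import java.util.concurrent.CountDownLatch;

class ThreadSpikeSketch {

    /** Returns how much the live JVM thread count grows while {@code handlers} threads are blocked. */
    static int threadGrowth(int handlers) {
        ThreadMXBean mx = ManagementFactory.getThreadMXBean();
        CountDownLatch started = new CountDownLatch(handlers);
        CountDownLatch reply = new CountDownLatch(1); // stand-in for the slow downstream call

        int before = mx.getThreadCount();
        for (int i = 0; i < handlers; i++) {
            new Thread(() -> {
                started.countDown();
                try {
                    reply.await(); // one blocked thread per "connection"
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt();
                }
            }).start();
        }

        try {
            started.await(); // every handler thread is alive at this point
            return mx.getThreadCount() - before;
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            return -1;
        } finally {
            reply.countDown(); // let the handler threads finish
        }
    }

    public static void main(String[] args) {
        System.out.println("Live threads grew by " + threadGrowth(100));
    }
}
```

With one thread per connection, the growth tracks the number of concurrent calls almost one to one.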

Project Loom

Instead of allocating one OS thread per Java thread (the current JVM model), Project Loom provides additional schedulers that schedule multiple lightweight threads on the same OS thread. This approach provides better utilization (OS threads are always working and not waiting) and much less context switching.

The wiki says Project Loom supports "easy-to-use, high-throughput lightweight concurrency and new programming models on the Java platform."

The core of Project Loom involves Continuations and Fibers. The following definitions are from the excellent presentation by Alan Bateman, available here.

Fibers

Fibers are lightweight, user-mode threads, scheduled by the Java virtual machine, not the operating system. Fibers are low footprint and have negligible task-switching overhead. You can have millions of them!

Continuation

A Continuation (precisely: delimited continuation) is a program object representing a computation that may be suspended and resumed (also, possibly, cloned or even serialized).

In practice, most of us will never use Continuation in application code. Most of us will use Fibers to enhance our code. A simple definition would be:

Fiber = Continuation + Scheduler
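To get a feel for that equation, here is a toy model. It does not use the Project Loom API at all, and `ToyFiberSketch`, `ToyContinuation`, and `runAll` are hypothetical names. A real continuation captures the actual call stack; this sketch fakes one as a counter of completed steps, and the "scheduler" is a simple round-robin queue running on a single thread.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Deque;
import java.util.List;

class ToyFiberSketch {

    /** Toy "continuation": a computation that can be suspended after each step and resumed later. */
    static final class ToyContinuation {
        private final String name;
        private final int steps;
        private int next = 0; // where to pick up when resumed

        ToyContinuation(String name, int steps) {
            this.name = name;
            this.steps = steps;
        }

        boolean isDone() {
            return next >= steps;
        }

        /** Runs exactly one step, then yields control back to the caller. */
        String resume() {
            return name + ":" + (next++);
        }
    }

    /** Toy "scheduler": resumes the ready continuations in round-robin order on the calling thread. */
    static List<String> runAll(List<ToyContinuation> fibers) {
        Deque<ToyContinuation> ready = new ArrayDeque<>(fibers);
        List<String> trace = new ArrayList<>();
        while (!ready.isEmpty()) {
            ToyContinuation c = ready.poll();
            trace.add(c.resume());
            if (!c.isDone()) {
                ready.add(c); // suspended, not finished: back of the queue
            }
        }
        return trace;
    }

    public static void main(String[] args) {
        List<String> trace = runAll(Arrays.asList(
                new ToyContinuation("A", 2), new ToyContinuation("B", 2)));
        System.out.println(trace); // prints [A:0, B:0, A:1, B:1]
    }
}
```

The two computations interleave on one thread without either of them owning it, which is the essence of pairing a suspendable computation with a scheduler.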

OK, this seems interesting. One of the challenges of any new approach is how compatible it will be with existing code. The Project Loom team has done a great job on this front, and a Fiber can take a Runnable. To be complete, note that Continuation also implements Runnable.

So, our echo server will change as follows. Note that the part that changed is only the thread scheduling part; the logic inside the thread remains the same. The full source code is available here.

    ServerSocket server = new ServerSocket(5566);
    while (true) {
        Socket client = server.accept();
        EchoHandlerLoom handler = new EchoHandlerLoom(client);
        // Instead of running Thread.start() or similar Runnable logic, we can just pass
        // the Runnable to the Fiber scheduler and that's it
        Fiber.schedule(handler);
    }

Note: The JVM with Project Loom is available here. We need to build the JVM from the Project Loom branch and start using it for Java/C programs. An example is shown below:

    java -version
    openjdk version "13-internal" 2019-09-17
    OpenJDK Runtime Environment (build 13-internal+0-adhoc.faisalmasood.loom)
    OpenJDK 64-Bit Server VM (build 13-internal+0-adhoc.faisalmasood.loom, mixed mode)

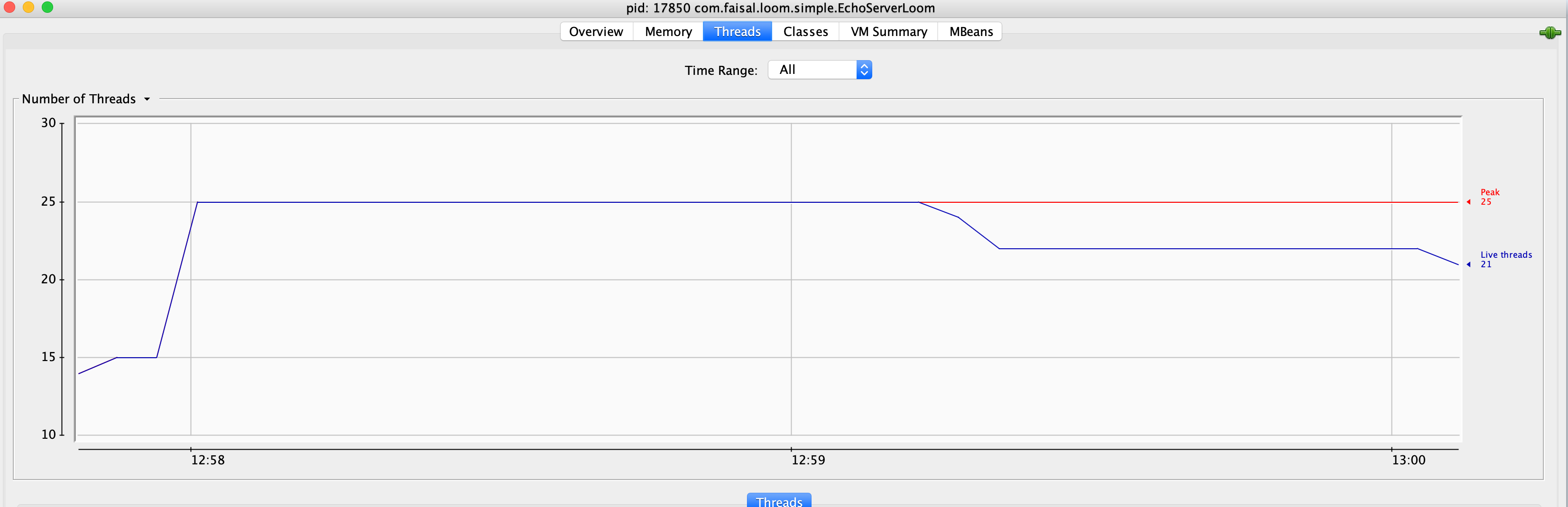

With this new version, the thread graph looks much better. By default, a Fiber uses the ForkJoinPool scheduler and, although the graphs are shown at different scales, you can see that the number of JVM threads is much lower here than in the one-thread-per-task model. This hits the green spot that we aimed for in the graph shown earlier.
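The scheduling half of this picture can be tried on a stock JDK. The sketch below does not use Fibers, and `FewCarriersSketch` is a hypothetical name. It only shows many small, non-blocking tasks being multiplexed onto a fixed number of ForkJoinPool workers. What Loom adds on top, and what this sketch cannot show, is suspending a task in the middle of a blocking call.

```java
import java.util.Set;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.ForkJoinPool;

class FewCarriersSketch {

    /** Runs {@code tasks} small tasks on a pool of {@code parallelism} workers; returns the worker names used. */
    static Set<String> workersUsed(int tasks, int parallelism) {
        ForkJoinPool pool = new ForkJoinPool(parallelism);
        Set<String> workers = ConcurrentHashMap.newKeySet();
        CountDownLatch done = new CountDownLatch(tasks);

        for (int i = 0; i < tasks; i++) {
            pool.execute(() -> {
                // Record which OS-backed worker thread picked up this task.
                workers.add(Thread.currentThread().getName());
                done.countDown();
            });
        }

        try {
            done.await();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        } finally {
            pool.shutdown();
        }
        return workers;
    }

    public static void main(String[] args) {
        Set<String> workers = workersUsed(100, 4);
        System.out.println("100 tasks ran on " + workers.size() + " worker thread(s)");
    }
}
```

However many tasks are submitted, the number of threads doing the work stays bounded by the pool size rather than growing with the number of tasks.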

Conclusion

The improvements that Project Loom brings are exciting. In this example, we reduced the number of threads by a factor of 5. Project Loom allows us to write highly scalable code with one lightweight thread per task. This simplifies development, as you do not need to use reactive programming to write scalable code. Another benefit is that lots of legacy code can use this optimization without much change in the code base. I would say Project Loom brings a capability similar to goroutines and allows Java programmers to write internet-scale applications without reactive programming.

Last updated: February 11, 2024