Open Virtual Network (OVN) is a subproject of Open vSwitch (OVS), a performant, programmable, multi-platform virtual switch. OVN extends OVS with support for overlay networks by introducing virtual network abstractions such as virtual switches and routers. OVN also provides native methods for setting up Access Control Lists (ACLs) and network services such as DHCP. Many Red Hat products, such as Red Hat OpenStack Platform and Red Hat Virtualization, now use OVN, and Red Hat OpenShift Container Platform will be using OVN soon.

In this article, I'll cover how the OVN ARP/ND_NS actions work, the main limitations of the current implementation, and how to overcome them. First, I'll provide a brief overview of OVN's architecture to facilitate the discussion:

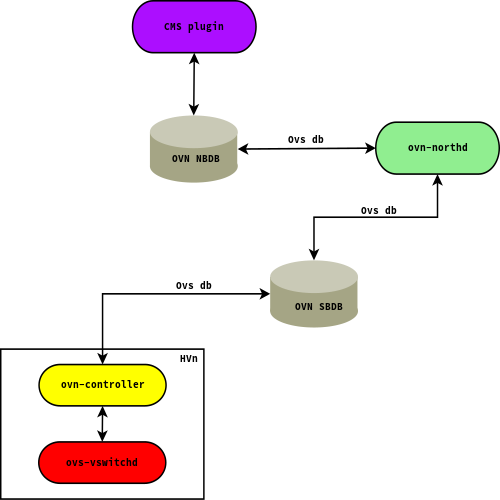

OVN architecture

An OVN deployment consists of several components:

- The OVN/CMS plugin (for example, Neutron) is the interface between the cloud management system (CMS) and OVN. The plugin’s main purpose is to translate the CMS’s notion of the logical network configuration into an intermediate representation, composed of logical switches and routers, that OVN can interpret.

- The OVN northbound database (NBDB) is an OVSDB instance responsible for storing the network representation received from the CMS plugin. The OVN northbound database has only two clients: the OVN/CMS plugin and the ovn-northd daemon.

- The ovn-northd daemon connects to the OVN northbound database and to the OVN southbound database. It translates the logical network configuration, expressed in terms of conventional network concepts and taken from the OVN northbound database, into logical datapath flows in the OVN southbound database.

- The OVN southbound database (SBDB) is also an OVSDB database, but its schema is quite different from that of the northbound database. Instead of familiar networking concepts, the southbound database defines the network in terms of match-action rule collections called logical flows. Logical flows, while conceptually similar to OpenFlow flows, work with logical concepts, such as virtual machine instances, instead of physical ones, such as physical Ethernet ports. The southbound database includes three data types:

- Physical network data, such as the VM's IP address and tunnel encapsulation format

- Logical network data, such as packet forwarding mode

- The binding relationship between the physical network and logical network
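To give a rough feel for what "match-action rule collections" means, the following Python sketch models a logical flow as a table number, a priority, a match predicate, and an action name, and then picks the highest-priority flow that matches a packet. The `LogicalFlow` class, the dictionary-based packet, the `lookup` helper, the priority-0 fallback flow, and the resolved MAC address are all hypothetical, made up for illustration; real logical flows are rows in the SBDB, not Python objects. The unresolved-destination match is borrowed from the rule discussed later in this article.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional

Packet = Dict[str, str]  # hypothetical packet model: field name -> value


@dataclass(frozen=True)
class LogicalFlow:
    table: int
    priority: int
    match: Callable[[Packet], bool]
    action: str


def lookup(flows: List[LogicalFlow], table: int, pkt: Packet) -> Optional[LogicalFlow]:
    """Return the highest-priority flow in `table` whose match accepts pkt."""
    candidates = [f for f in flows if f.table == table and f.match(pkt)]
    return max(candidates, key=lambda f: f.priority, default=None)


flows = [
    # Unresolved destination MAC: hand the packet to address resolution.
    LogicalFlow(10, 100, lambda p: p["eth.dst"] == "00:00:00:00:00:00", "arp"),
    # Assumed fallback for this sketch: everything else is forwarded unchanged.
    LogicalFlow(10, 0, lambda p: True, "output"),
]

unresolved = {"eth.dst": "00:00:00:00:00:00", "ip4.dst": "192.168.123.10"}
resolved = {"eth.dst": "52:54:00:00:00:10", "ip4.dst": "192.168.123.10"}  # made-up MAC

print(lookup(flows, 10, unresolved).action)  # arp
print(lookup(flows, 10, resolved).action)    # output
```

The point of the sketch is only the selection rule: within a table, the matching flow with the highest priority decides what happens to the packet.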

L2 address resolution problem

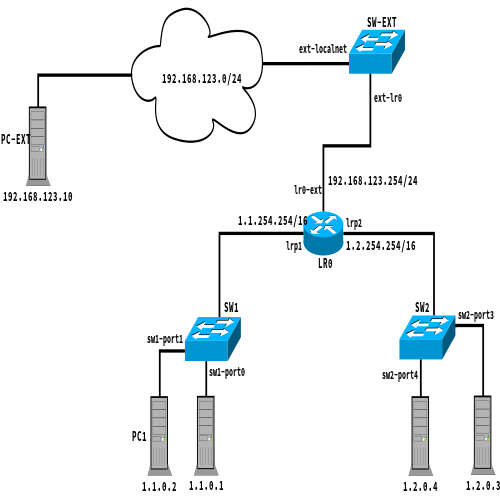

The figure above shows a typical OVN deployment in which the overlay network is connected to an external network through a localnet port (ext-localnet, in this case).

The related OVN NBDB network configuration is shown below:

    switch 35b34afe-ee16-469b-9893-80b024510f33 (sw2)
        port sw2-port4
            addresses: ["00:00:02:00:00:04 1.2.0.4 2001:db8:2::14 "]
        port sw2-port3
            addresses: ["00:00:02:00:00:03 1.2.0.3 2001:db8:2::13 "]
        port sw2-portr0
            type: router
            addresses: ["00:00:02:ff:00:02"]
            router-port: lrp2
    switch c16e344a-c3fe-4884-9121-d1d3a2a9d9b1 (sw1)
        port sw1-port1
            addresses: ["00:00:01:00:00:01 1.1.0.1 2001:db8:1::11 "]
        port sw1-portr0
            type: router
            addresses: ["00:00:01:ff:00:01"]
            router-port: lrp1
        port sw1-port2
            addresses: ["00:00:01:00:00:02 1.1.0.2 2001:db8:1::12 "]
    switch ee2b44de-7d2b-4ffa-8c4c-2e1ac7997639 (sw-ext)
        port ext-localnet
            type: localnet
            addresses: ["unknown"]
        port ext-lr0
            type: router
            addresses: ["02:0a:7f:00:01:29"]
            router-port: lr0-ext
    router 681dfe85-6f90-44e3-9dfe-f1c81f4cfa32 (lr0)
        port lrp2
            mac: "00:00:02:ff:00:02"
            networks: ["1.2.254.254/16", "2001:db8:2::1/64"]
        port lr0-ext
            mac: "02:0a:7f:00:01:29"
            networks: ["192.168.123.254/24", "2001:db8:f0f0::1/64"]
        port lrp1
            mac: "00:00:01:ff:00:01"
            networks: ["1.1.254.254/16", "2001:db8:1::1/64"]
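To make the topology concrete, here is a small Python sketch that takes the three lr0 ports from the listing above and works out which directly connected network a destination address falls into. It is only an illustration of the addressing plan: OVN performs routing with logical flows, not with code like this, and the `LR0_PORTS` table and `egress_port` helper are names invented for the example.

```python
import ipaddress

# Router ports of lr0 and their networks, copied from the NBDB listing above.
LR0_PORTS = {
    "lrp1": ["1.1.254.254/16", "2001:db8:1::1/64"],
    "lrp2": ["1.2.254.254/16", "2001:db8:2::1/64"],
    "lr0-ext": ["192.168.123.254/24", "2001:db8:f0f0::1/64"],
}


def egress_port(dst):
    """Return the lr0 port whose connected network contains dst, or None."""
    addr = ipaddress.ip_address(dst)
    best = None  # (prefix length, port name) of the longest matching prefix
    for port, networks in LR0_PORTS.items():
        for cidr in networks:
            net = ipaddress.ip_interface(cidr).network
            if addr.version == net.version and addr in net:
                if best is None or net.prefixlen > best[0]:
                    best = (net.prefixlen, port)
    return best[1] if best else None


print(egress_port("192.168.123.10"))  # lr0-ext
print(egress_port("1.1.0.2"))         # lrp1
print(egress_port("2001:db8:2::13"))  # lrp2
```

An address such as 192.168.123.10 maps to lr0-ext, the port facing the external network, which is where L2 address resolution becomes necessary.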

Whenever a device belonging to the overlay network (for example, PC1) tries to reach an external device (for example, PC-EXT), it forwards the packet to the OVN logical router (LR0). If LR0 has not yet resolved the MAC address that corresponds to PC-EXT's IP address, it sends an ARP request (or a Neighbor Solicitation for IPv6 traffic) for PC-EXT. The current OVN implementation uses the arp action to perform L2 address resolution. In other words, OVN instructs OVS to perform a "packet in" action whenever it needs to forward an IP packet to an unknown L2 destination. The arp action replaces the IPv4 packet being processed with an ARP frame that is forwarded on the external network to resolve the PC-EXT MAC address. The corresponding IPv4 and IPv6 OVN SBDB rules are shown below:

table=10(lr_in_arp_request ), priority=100 , match=(eth.dst == 00:00:00:00:00:00), action=(arp { eth.dst = ff:ff:ff:ff:ff:ff; arp.spa = reg1; arp.tpa = reg0; arp.op = 1; output; };)

table=10(lr_in_arp_request ), priority=100 , match=(eth.dst == 00:00:00:00:00:00), action=(nd_ns { nd.target = xxreg0; output; };)
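The IPv4 rule above can be read as a recipe for the frame that replaces the original packet. As a minimal sketch, the following Python code builds such an ARP request by hand, with a broadcast destination, `arp.op = 1`, and the sender and target protocol addresses filled in. It assumes that `reg1` holds the router port's IP address and `reg0` the address to resolve, which is how the rule uses them; the `build_arp_request` helper is hypothetical and is not OVN code.

```python
import socket
import struct

BROADCAST = bytes.fromhex("ffffffffffff")  # eth.dst = ff:ff:ff:ff:ff:ff


def mac_to_bytes(mac):
    return bytes.fromhex(mac.replace(":", ""))


def build_arp_request(src_mac, spa, tpa):
    """Build an Ethernet frame carrying an ARP request (arp.op = 1)."""
    sha = mac_to_bytes(src_mac)
    eth_header = BROADCAST + sha + struct.pack("!H", 0x0806)  # EtherType: ARP
    arp_payload = struct.pack(
        "!HHBBH6s4s6s4s",
        1,                      # hardware type: Ethernet
        0x0800,                 # protocol type: IPv4
        6,                      # hardware address length
        4,                      # protocol address length
        1,                      # opcode: request
        sha,                    # sender hardware address
        socket.inet_aton(spa),  # arp.spa (reg1 in the rule above)
        bytes(6),               # target hardware address: not known yet
        socket.inet_aton(tpa),  # arp.tpa (reg0 in the rule above)
    )
    return eth_header + arp_payload


# lr0-ext asking for the MAC address of 192.168.123.10
frame = build_arp_request("02:0a:7f:00:01:29", "192.168.123.254", "192.168.123.10")
print(len(frame))  # 42 (14-byte Ethernet header + 28-byte ARP payload)
```

Note that nothing in this frame carries the original IPv4 payload, which is the root of the problem described next.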

The main drawback of this processing is that the first packet of the connection is lost, because it is replaced by the ARP frame instead of being kept. The following ICMP traffic shows the loss (icmp_seq=1 never receives a reply). The same effect introduces latency in TCP connections established with devices outside the overlay network:

    PING 192.168.123.10 (192.168.123.10) 56(84) bytes of data.
    64 bytes from 192.168.123.10: icmp_seq=2 ttl=63 time=0.649 ms
    64 bytes from 192.168.123.10: icmp_seq=3 ttl=63 time=0.321 ms
    64 bytes from 192.168.123.10: icmp_seq=4 ttl=63 time=0.331 ms
    64 bytes from 192.168.123.10: icmp_seq=5 ttl=63 time=0.137 ms
    64 bytes from 192.168.123.10: icmp_seq=6 ttl=63 time=0.125 ms
    64 bytes from 192.168.123.10: icmp_seq=7 ttl=63 time=0.200 ms
    64 bytes from 192.168.123.10: icmp_seq=8 ttl=63 time=0.244 ms
    64 bytes from 192.168.123.10: icmp_seq=9 ttl=63 time=0.224 ms
    64 bytes from 192.168.123.10: icmp_seq=10 ttl=63 time=0.271 ms
    --- 192.168.123.10 ping statistics ---
    10 packets transmitted, 9 received, 10% packet loss, time 9214ms

Proposed solution: Add buffering support for IP packets

To overcome this limitation, buffering support for IP packets has been proposed: incoming IP frames whose destination has no resolved L2 address yet are queued and re-injected into ovs-vswitchd as soon as the neighbor discovery process completes.
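The idea can be sketched in a few lines of Python: packets are queued per unresolved next hop, and the queue is drained when the address is learned. This is an illustration of the general technique, not the proposed OVN implementation. The `PendingPackets` class, the per-hop bound, the timeout, and the `reinject` callback are all assumptions introduced for the example, as is the MAC address used in the usage lines.

```python
import time
from collections import defaultdict, deque

MAX_QUEUED_PER_HOP = 4  # assumption: small bound per unresolved next hop
QUEUE_TIMEOUT_S = 1.0   # assumption: packets older than this are dropped


class PendingPackets:
    """Hold IP packets until the next hop's L2 address is known."""

    def __init__(self, reinject, now=time.monotonic):
        self._reinject = reinject  # callback(packet, mac), hypothetical
        self._now = now
        self._queues = defaultdict(deque)

    def enqueue(self, next_hop, packet):
        queue = self._queues[next_hop]
        if len(queue) >= MAX_QUEUED_PER_HOP:
            queue.popleft()  # drop the oldest packet to bound memory use
        queue.append((self._now(), packet))

    def resolved(self, next_hop, mac):
        """Re-inject every fresh packet queued for next_hop; return the count."""
        sent = 0
        for queued_at, packet in self._queues.pop(next_hop, ()):
            if self._now() - queued_at <= QUEUE_TIMEOUT_S:
                self._reinject(packet, mac)
                sent += 1
        return sent


# Usage: the first echo request waits for resolution instead of being lost.
sent = []
buf = PendingPackets(lambda pkt, mac: sent.append((pkt, mac)))
buf.enqueue("192.168.123.10", b"icmp echo request seq=1")
buf.resolved("192.168.123.10", "52:54:00:00:00:10")  # made-up MAC address
print(sent)
```

Bounding the queue and expiring old entries keeps an unreachable destination from holding memory indefinitely; the specific limits here are placeholders.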

Repeating the above test shows that even the first ICMP echo request now reaches PC-EXT:

    PING 192.168.123.10 (192.168.123.10) 56(84) bytes of data.
    64 bytes from 192.168.123.10: icmp_seq=1 ttl=63 time=1.92 ms
    64 bytes from 192.168.123.10: icmp_seq=2 ttl=63 time=0.177 ms
    64 bytes from 192.168.123.10: icmp_seq=3 ttl=63 time=0.277 ms
    64 bytes from 192.168.123.10: icmp_seq=4 ttl=63 time=0.139 ms
    64 bytes from 192.168.123.10: icmp_seq=5 ttl=63 time=0.281 ms
    64 bytes from 192.168.123.10: icmp_seq=6 ttl=63 time=0.247 ms
    64 bytes from 192.168.123.10: icmp_seq=7 ttl=63 time=0.211 ms
    64 bytes from 192.168.123.10: icmp_seq=8 ttl=63 time=0.187 ms
    64 bytes from 192.168.123.10: icmp_seq=9 ttl=63 time=0.439 ms
    64 bytes from 192.168.123.10: icmp_seq=10 ttl=63 time=0.253 ms
    --- 192.168.123.10 ping statistics ---
    10 packets transmitted, 10 received, 0% packet loss, time 9208ms

Future development

A possible future enhancement is to reuse the IP buffering infrastructure to queue packets that are waiting for a given event and to send them back to ovs-vswitchd as soon as that event occurs. For example, we could rely on the IP buffering infrastructure to queue packets destined for an OpenShift pod that has not yet completed its bootstrap phase. Stay tuned :)
