This is part 2 of a 2-part article about Advanced Integration with RHEV-M. The first part is available here.

In the previous part you learned how to perform different operations on the engine from the outside, using the API/SDK. In this part you'll learn how to influence the engine from the inside, using extension APIs.

Extension APIs

In this section we will describe the following APIs:

- UI plugins API (also covered in http://ovedou.blogspot.com and http://www.ovirt.org/Features/UIPlugins) - an API for extending the Administrator Portal UI. It lets you add UI components with the RHEV-M look-and-feel but with your own functionality, which is useful for integrating your product with the Administrator Portal.

- Scheduling API - an API that lets you change the way the engine schedules VMs in your data center and fit it to your specific needs.

- VDSM hooks - a mechanism that lets you modify a VM at different lifecycle events. Useful for modifying or extending the VM's functionality.

UI plugins API

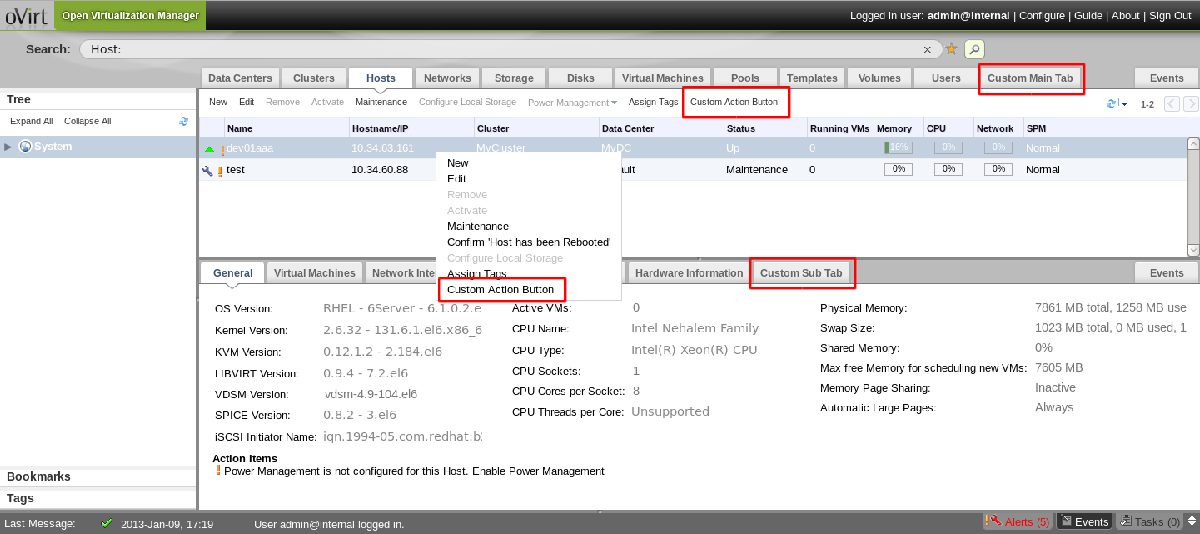

Looking at the RHEV-M Administrator Portal, there are several main UI components:

- Main Tabs - for main entities such as Data-Centers, Clusters, Hosts, VMs and more. Each main tab shows a grid with entities of that type.

- Action buttons and context menu actions - operations can be performed on each entity by either using action buttons, or context menu actions.

- Sub-Tabs - when you select an entity in a main tab, sub-tabs open in the lower part of the screen, letting you view details about the entity in different domains of interest and perform actions related to each domain.

The UI plugins infrastructure allows you to add UI components of each of these types, and provides a set of events to act upon when certain objects are selected.

UI plugins are designed to be written in JavaScript, using an API provided by the infrastructure.

The API contains the following methods:

- ready - indicates that the plugin is ready for initialization

- register - registers plugin event handler functions for later invocation

- configObject - returns the configuration object associated with the plugin

- addMainTab - dynamically adds a new main tab

- addSubTab - dynamically adds a new sub-tab

- addMainTabActionButton - dynamically adds a new main tab action button + context menu item

- showDialog - opens some URL in a new browser window/tab

- setTabContentUrl - dynamically changes the URL associated with a sub-tab

- setTabAccessible - dynamically shows/hides a sub-tab

- loginUserName - returns the logged-in user name

- loginUserId - returns the logged-in user ID

And it supports the following events:

- UiInit - code that runs upon plugin initialization

- UserLogin - indicates that the user logged in

- UserLogout - indicates that the user logged out

- RestApiSessionAcquired - when the user logs in, the plugin infrastructure also logs in to the RHEV-M REST API and keeps the session alive for the plugin's lifetime; this event hands the session ID to the plugin for use in its different components

- {entityType}SelectionChange - indicates that the selection has changed in the entity type's main tab (for example, VirtualMachineSelectionChange for the VMs main tab). Works for all the main entities in RHEV-M (DCs, Clusters, VMs, Networks, Pools, Storage Domains, etc.)

Each plugin has a JSON configuration file that contains properties such as:

- name - unique plugin name

- url - URL of plugin host page that invokes the plugin code

- config - key-value pairs for use in the plugin code

- resourcePath - path where the plugin's resource files are located on the server

The plugin files are placed under /usr/share/ovirt-engine/ui-plugins. There is no need to restart the engine after adding new plugins; only the Administrator Portal needs to be reloaded for the changes to take effect.
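As a rough illustration of the configuration file described above, the following Python sketch builds a descriptor for the Foreman plugin shown later in this article and can write it into the plugins directory. The file name (`foreman.json`), the `url` value, the `resourcePath` value, and the `config` values are assumptions made for illustration (the `config` keys are the ones the plugin code reads through `conf`); check the UI plugins documentation for the exact layout your RHEV-M version expects.

```python
import json
import os


def build_descriptor(name, url, config, resource_path):
    """Return a UI plugin descriptor with the properties listed above."""
    return {
        "name": name,                   # unique plugin name
        "url": url,                     # host page that invokes the plugin code
        "config": config,               # key-value pairs read via api.configObject()
        "resourcePath": resource_path,  # where the plugin's resource files live
    }


def write_descriptor(descriptor, plugins_dir="/usr/share/ovirt-engine/ui-plugins"):
    """Write the descriptor as <name>.json into plugins_dir and return its path."""
    path = os.path.join(plugins_dir, descriptor["name"] + ".json")
    with open(path, "w") as f:
        json.dump(descriptor, f, indent=2)
    return path


if __name__ == "__main__":
    # Hypothetical values: adjust the Foreman address and labels to your setup
    foreman = build_descriptor(
        name="foreman",
        url="/ovirt-engine/webadmin/plugin/foreman/start.html",
        config={
            "url": "https://foreman.example.com",
            "foremanDashboardLabel": "Foreman Dashboard",
            "foremanDetailsLabel": "Foreman Details",
            "foremanGraphsLabel": "Foreman Graphs",
        },
        resource_path="foreman-files",
    )
    print(json.dumps(foreman, indent=2))
```

Writing to a temporary directory first (by passing `plugins_dir`) is an easy way to review the generated file before copying it to the server.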

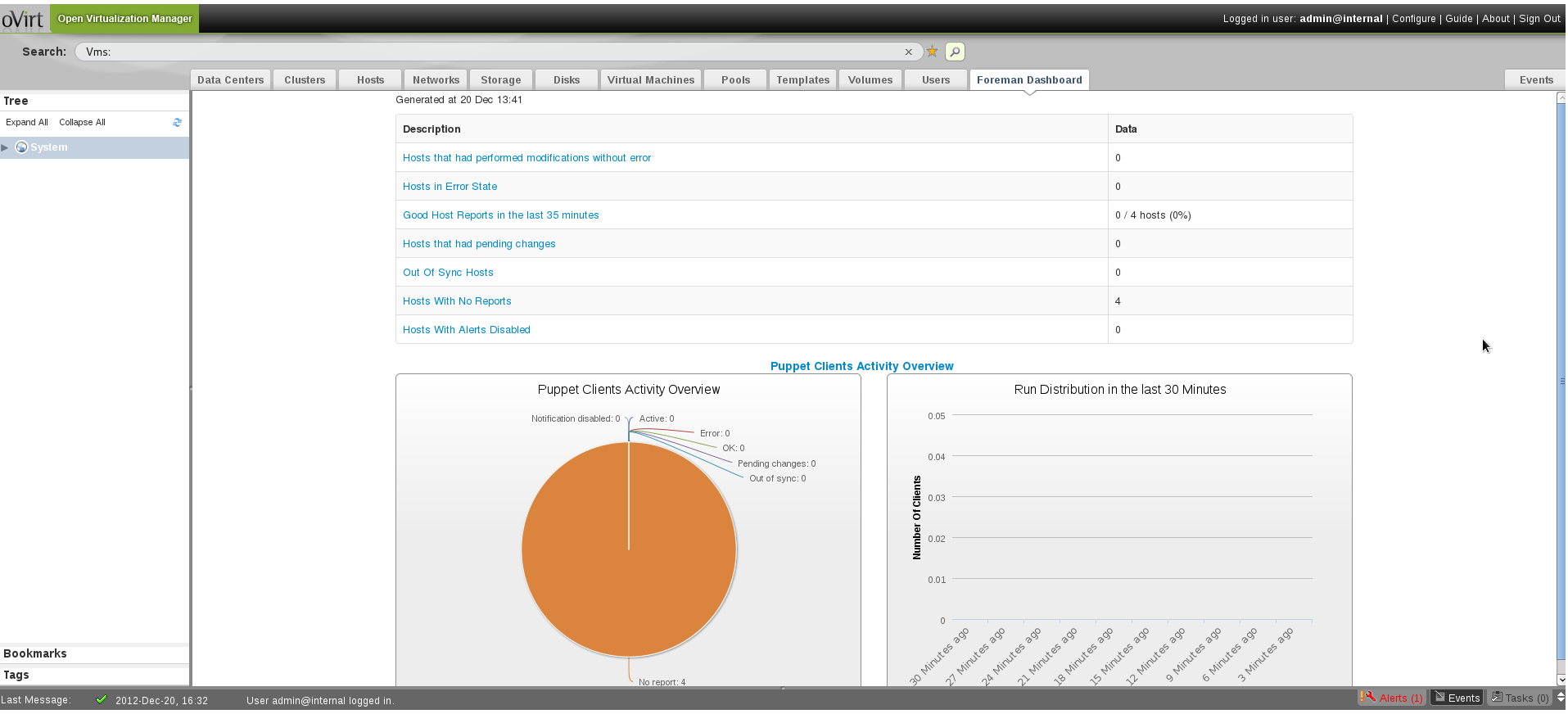

Let's have a look at an example, the Foreman UI plugin. This simple plugin does the following:

- Shows you Foreman's dashboard in a main tab

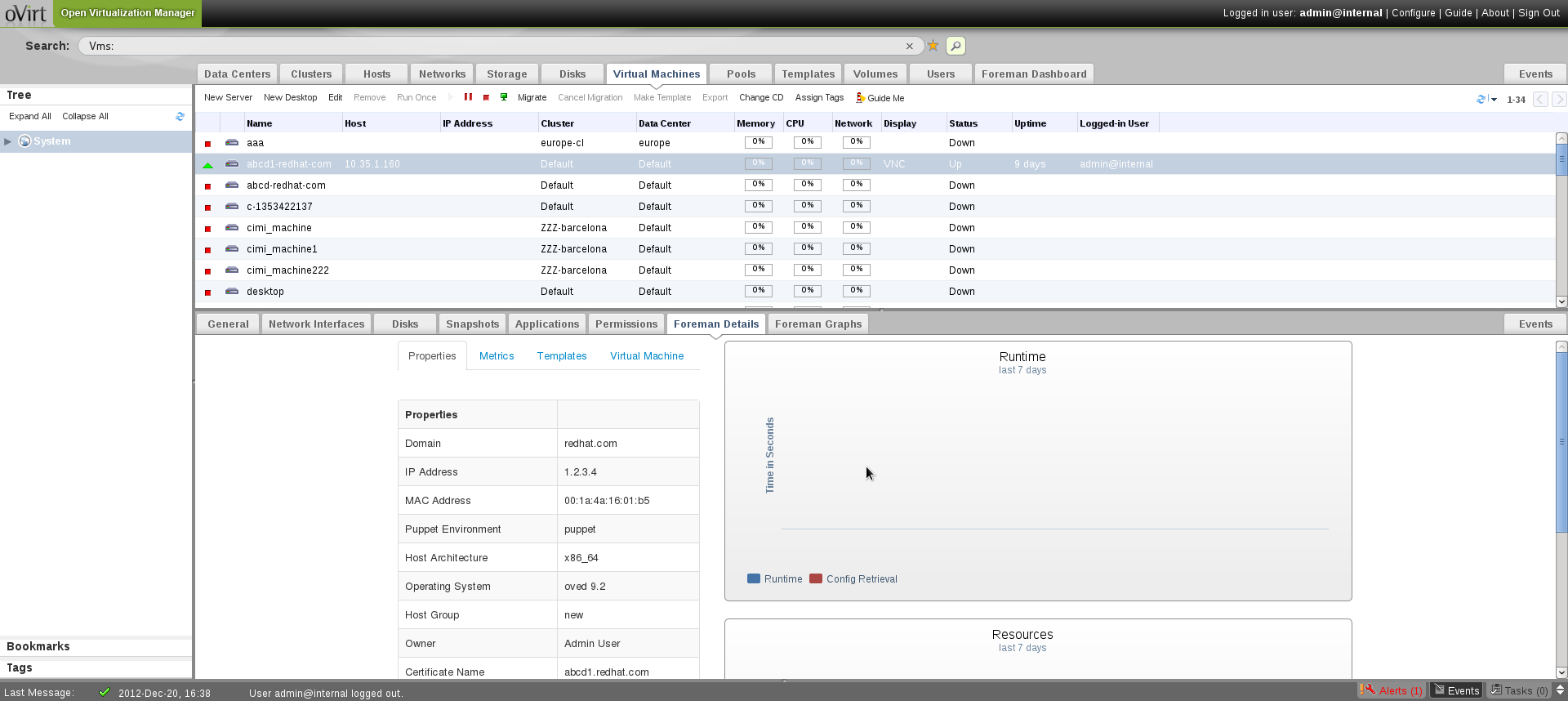

- Shows you VM details in a sub-tab, under the VM main tab

- Shows you VM-related graphs from Foreman in a sub-tab, under the VM main tab

- Adds two action buttons and context menu items, to open a new dialog with the VM details from Foreman

First, here are some screenshots of the plugin:

Now, let's see how it is done.

The plugin's main HTML file is start.html. Earlier we listed the events a plugin can register for; each handler shown below is a member of the object passed to api.register(). The first one used here is UiInit, which is triggered when the UI plugin is being initialized. In its handler we add the Dashboard main tab and the two VM sub-tabs (details and graphs), which stay hidden until a VM is selected:

```javascript
UiInit: function() {
    // Foreman Details will show the details of the Foreman host that matches the VM.
    // The URL will be changed upon the VM selection event.
    api.addSubTab('VirtualMachine', conf.foremanDetailsLabel, 'foreman-details', '');

    // Foreman Graphs will show the different graphs of the Foreman host that matches the VM.
    // The URL will be changed upon the VM selection event.
    api.addSubTab('VirtualMachine', conf.foremanGraphsLabel, 'foreman-graphs', '');

    // We hide both sub-tabs until some VM is selected
    setAccessibleVmForemanSubTabs(false);

    // Dashboard main tab
    api.addMainTab(conf.foremanDashboardLabel, 'foreman', conf.url + '/dashboard/ovirt');
},
```

To support single sign-on (SSO) with Foreman, we register for the RestApiSessionAcquired event, pass the session ID to Foreman, and let Foreman validate it. I won't go too deep into the Foreman side of the plugin; the point is to hint at what your own plugin can do to support SSO (see the UI plugin post for more details).

```javascript
// When the REST API session is acquired, we log in to Foreman using an oVirt-specific SSO implementation.
// Foreman contains an oVirt authentication source in order to do that.
RestApiSessionAcquired: function(sessionId) {
    var userName = conf.ovirtUserNamePrefix + api.loginUserName();
    relogin(conf.logoutUrl, conf.loginUrl, userName, api.loginUserId(), sessionId);
},
```

Next, when the VM selection changes, we check whether the VM exists in Foreman (searching by UUID or by name) and, if it does, point both sub-tabs at that host's details and graphs pages:

```javascript
// When the VM selection is changed, we query Foreman to set up the relevant Foreman sub-tabs
VirtualMachineSelectionChange: function() {
    setAccessibleVmForemanSubTabs(false);

    // Only handle the case where exactly one VM is selected
    if (arguments.length == 1) {
        var vmId = arguments[0].id;
        var vmName = arguments[0].name;
        var foremanSearchHostUrl = conf.url + '/hosts?&search=' +
            encodeURIComponent('uuid=' + vmId) + '+or+' +
            encodeURIComponent('name=' + vmName) + '&format=json';

        // Get the relevant host URLs and set up the sub-tabs accordingly
        $.getJSON(foremanSearchHostUrl, function (data) {
            try {
                // If a host was returned, we show the sub-tabs
                if (data[0] != null) {
                    showVmForemanSubTabs(data[0].host.name);
                }
            } catch (err) {
                // We do nothing here. The tabs will remain inaccessible
            }
        });
    }
},
```
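To see exactly which URLs the handler above and the showVmForemanSubTabs helper produce (handy when debugging the Foreman side), here is a Python transcription of that URL logic. It is only a sketch for offline testing, not part of the plugin: `encode_uri_component` is a hypothetical helper that approximates JavaScript's `encodeURIComponent` with `urllib.parse.quote`, and the Foreman address and VM values are made up.

```python
from urllib.parse import quote


def encode_uri_component(value):
    """Approximate JavaScript's encodeURIComponent, which leaves letters,
    digits and - _ . ! ~ * ' ( ) unescaped."""
    return quote(value, safe="!~*'()")


def foreman_search_url(foreman_url, vm_id, vm_name):
    """Search URL built by the VirtualMachineSelectionChange handler."""
    return (foreman_url + "/hosts?&search="
            + encode_uri_component("uuid=" + vm_id) + "+or+"
            + encode_uri_component("name=" + vm_name) + "&format=json")


def foreman_subtab_urls(foreman_url, host_name):
    """Details and graphs URLs that showVmForemanSubTabs assigns to the sub-tabs."""
    base = foreman_url + "/hosts/" + host_name
    return base + "/ovirt", base + "/graphs/ovirt"


if __name__ == "__main__":
    # Hypothetical Foreman address, VM ID and names
    print(foreman_search_url("https://foreman.example.com", "1234-abcd", "web 01"))
    print(foreman_subtab_urls("https://foreman.example.com", "web01.example.com"))
```

Note that the `=` inside each search term is percent-encoded while the `+or+` joining the two terms is inserted literally, exactly as in the JavaScript code.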

Some helper functions I used here:

```javascript
// Plugin API object and the plugin's configuration object
var api = parent.pluginApi('foreman');
var conf = api.configObject();

// Shows or hides both Foreman sub-tabs
function setAccessibleVmForemanSubTabs(shouldShowSubTabs) {
    api.setTabAccessible('foreman-details', shouldShowSubTabs);
    api.setTabAccessible('foreman-graphs', shouldShowSubTabs);
}

// Points both sub-tabs at the given Foreman host, then shows them
function showVmForemanSubTabs(hostName) {
    try {
        setAccessibleVmForemanSubTabs(false);
        var foremanUrl = conf.url + '/hosts/' + hostName;
        var foremanVmDetailsUrl = foremanUrl + '/ovirt';
        var foremanVmGraphsUrl = foremanUrl + '/graphs/ovirt';
        api.setTabContentUrl('foreman-details', foremanVmDetailsUrl);
        api.setTabContentUrl('foreman-graphs', foremanVmGraphsUrl);
        setAccessibleVmForemanSubTabs(true);
    } catch (err) {
        // We do nothing here. The tabs will remain inaccessible
    }
}
```

When the user logs out, we also log out of Foreman, so we register for the UserLogout event:

```javascript
UserLogout: function() {
    // Logging out from Foreman as well
    $.get(conf.logoutUrl, { });
}
```

That's pretty much it!

The complete source code of all the sample plugins, including this one, is available in the UI plugins samples git repo.

Scheduling API

In previous RHEV-M versions, the only control you had over VM scheduling decisions was the cluster policy, which was either "even distribution" or "power saving". RHEV-M 3.3 introduces a new scheduling mechanism that lets you control how the engine schedules VMs (when running, migrating, and load balancing them) and customize it to fit your needs.

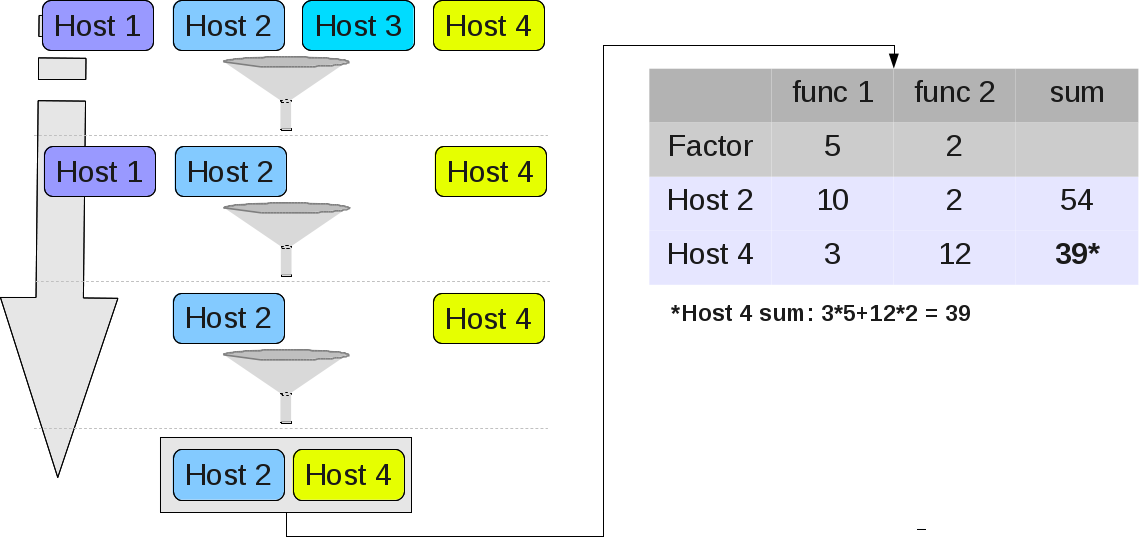

This mechanism consists of three modules:

- Filter module - filters out hosts

- Weight module - weighs hosts

- Load balancing module - decides whether a VM should be migrated to another host

These modules operate in the following use cases:

- Run VM - first, the filter modules run to filter out hosts. Then the weight modules run and weigh the remaining hosts. The final set of hosts and their weights is used to select the best host to run the VM.

- Migrate VM - same as the Run VM use-case

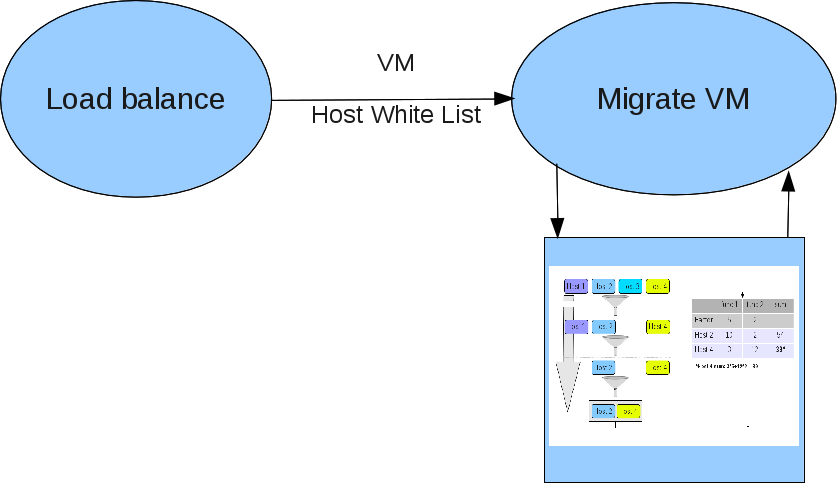

- Periodic Load Balancing - the engine periodically invokes the load balancing module to check whether a VM should be migrated off its source host. If so, a VM migration is initiated, which brings us to the Migrate VM use case (filters followed by weights).
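The Run VM / Migrate VM flow above can be modeled in a few lines of Python. This is a simplified sketch, not the engine's actual implementation: it assumes that each filter receives only the hosts left by the previous one, and that weight modules are combined by summing each module's score multiplied by its factor, with the lowest total winning (factors and the lower-is-better rule are covered in the Weight Module section). The hosts, the memory filter, and the VM-count weight are hypothetical.

```python
def schedule(hosts, vm, filters, weights):
    """Simplified model of host selection.

    filters: callables f(candidates, vm) -> remaining hosts, applied as a chain.
    weights: (factor, score) pairs, where score(host, vm) returns a number.
    Returns the host with the lowest weighted total, or None if none is left.
    """
    candidates = list(hosts)
    for host_filter in filters:
        # Each filter only sees the hosts that survived the previous filters
        candidates = list(host_filter(candidates, vm))

    if not candidates:
        return None

    def total_weight(host):
        return sum(factor * score(host, vm) for factor, score in weights)

    return min(candidates, key=total_weight)


if __name__ == "__main__":
    # Hypothetical hosts: name -> (running VMs, free memory in MB)
    hosts = {"host1": (12, 4096), "host2": (3, 1024), "host3": (5, 8192)}
    vm = {"memory_mb": 2048}

    def enough_memory(candidates, vm):
        return [h for h in candidates if hosts[h][1] >= vm["memory_mb"]]

    def vm_count(host, vm):
        return hosts[host][0]

    # host2 is filtered out (not enough memory); host3 runs fewer VMs than host1
    print(schedule(hosts, vm, [enough_memory], [(1, vm_count)]))  # prints host3
```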

Let's go over each module in turn. All the examples in this section are available in the Scheduler Proxy git repository.

Filter Module

A logical unit that filters out hosts:

- Clear-cut logic

- Easy to write and maintain

- Chained, so that each filter receives only the hosts left by the previous one, allowing complete filtering

- Allows custom parameters

- External filters written in Python can be loaded into the engine

- Existing logic (pin-to-host, memory limitations, etc.) is translated into filters

Let's assume I would like to limit the number of VMs running on a host. To do that, I can write a new filter that filters out hosts that have already reached this maximum number of running VMs:

```python
import sys

from ovirtsdk.api import API


class max_vms():
    '''returns only hosts with fewer running vms than the maximum'''

    # What are the values this module will accept, used to present
    # the user with options
    properties_validation = 'maximum_vm_count=[0-9]*'

    def _get_connection(self):
        # open a connection to the rest api
        connection = None
        try:
            connection = API(url='http://host:port',
                             username='user@domain', password='')
        except Exception as ex:
            # letting the external proxy know there was an error
            sys.stderr.write('%s\n' % ex)
            return None
        return connection

    def _get_hosts(self, host_ids, connection):
        # get all the hosts with the given ids
        engine_hosts = connection.hosts.list(
            query=" or ".join(["id=%s" % u for u in host_ids]))
        return engine_hosts

    def do_filter(self, hosts_ids, vm_id, args_map):
        conn = self._get_connection()
        if conn is None:
            return

        # get our parameters from the map
        maximum_vm_count = int(args_map.get('maximum_vm_count', 100))

        engine_hosts = self._get_hosts(hosts_ids, conn)

        # iterate over them and decide which to accept
        accepted_host_ids = []
        for engine_host in engine_hosts:
            if engine_host and engine_host.summary.active < maximum_vm_count:
                accepted_host_ids.append(engine_host.id)

        # report the accepted host ids
        print(accepted_host_ids)
```

First, we get a connection to the engine via the SDK. Then we query for all the hosts that are candidates to run this VM (for the first filter in the chain, these are all the hosts in the cluster; each subsequent filter receives only the hosts left by the previous ones). Hosts with fewer than the maximum number of running VMs (100 if the maximum_vm_count parameter isn't set) are kept as valid hosts and passed on to the next filter; all the others are filtered out. With just a few lines of code, we added our own logic to the RHEV-M scheduler.
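Because the decision itself does not depend on the engine, one option is to pull it into a plain function and exercise it against stand-in objects before deploying the filter. The sketch below assumes only the attributes the filter already uses (`id` and `summary.active`); `accepted_hosts` and `parse_max_vms` are hypothetical helpers, not part of the Scheduler Proxy.

```python
from types import SimpleNamespace


def parse_max_vms(args_map, default=100):
    """Read maximum_vm_count the way do_filter does (default: 100)."""
    return int(args_map.get('maximum_vm_count', default))


def accepted_hosts(engine_hosts, maximum_vm_count):
    """The core decision of do_filter: keep hosts below the limit."""
    return [host.id for host in engine_hosts
            if host and host.summary.active < maximum_vm_count]


if __name__ == "__main__":
    # Stand-ins for SDK host objects; only .id and .summary.active are used
    hosts = [SimpleNamespace(id='h1', summary=SimpleNamespace(active=2)),
             SimpleNamespace(id='h2', summary=SimpleNamespace(active=5))]
    # The filter converts the parameter with int(), so a string value works
    limit = parse_max_vms({'maximum_vm_count': '5'})
    print(accepted_hosts(hosts, limit))  # ['h1']
```

Note that a host running exactly the maximum is rejected, since the comparison is strictly "less than".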

Weight Module

A logical unit that weighs hosts:

- The lower the weight, the better

- Weights can be prioritized using factors (default: 1)

- The result is a score table, which is taken into consideration when scheduling the VM

- External weight modules written in Python can be loaded into the engine

- Predefined weight modules:

  - Even Distribution

  - Power Saving

Let's see how the Even Distribution weight module can be implemented in Python. One way to keep VMs evenly distributed across hosts is to weigh each host by the number of VMs running on it. Hosts with the fewest running VMs get the lowest weight, which makes them the best candidates to run the VM, so over time the number of VMs stays balanced across all hosts.

Here is a plugin for that:

```python
import sys

from ovirtsdk.api import API


class even_vm_distribution():
    '''rank hosts by the number of running vms on them, with the least first'''

    properties_validation = ''

    def _get_connection(self):
        # open a connection to the rest api
        connection = None
        try:
            connection = API(url='http://host:port',
                             username='user@domain', password='')
        except Exception as ex:
            # letting the external proxy know there was an error
            sys.stderr.write('%s\n' % ex)
            return None
        return connection

    def _get_hosts(self, host_ids, connection):
        # get all the hosts with the given ids
        engine_hosts = connection.hosts.list(
            query=" or ".join(["id=%s" % u for u in host_ids]))
        return engine_hosts

    def do_score(self, hosts_ids, vm_id, args_map):
        conn = self._get_connection()
        if conn is None:
            return

        engine_hosts = self._get_hosts(hosts_ids, conn)

        # iterate over them and score them based on the number of vms running
        host_scores = []
        for engine_host in engine_hosts:
            if engine_host and engine_host.summary:
                host_scores.append((engine_host.id, engine_host.summary.active))

        # report the (host id, score) pairs
        print(host_scores)
```
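To see what the resulting score table looks like without an engine, the scoring step can be run on stand-in host objects. This is a sketch: `even_distribution_scores` is a hypothetical helper mirroring the loop in `do_score`, and the hosts and VM counts are invented.

```python
from types import SimpleNamespace


def even_distribution_scores(engine_hosts):
    """Core of do_score: (host id, running VMs) pairs, skipping hosts without a summary."""
    return [(h.id, h.summary.active) for h in engine_hosts if h and h.summary]


if __name__ == "__main__":
    # Stand-ins for SDK host objects with made-up VM counts
    hosts = [SimpleNamespace(id='h1', summary=SimpleNamespace(active=7)),
             SimpleNamespace(id='h2', summary=SimpleNamespace(active=1)),
             SimpleNamespace(id='h3', summary=None)]
    scores = even_distribution_scores(hosts)
    print(scores)  # [('h1', 7), ('h2', 1)]
    # Lower is better, so the least loaded host is the preferred candidate
    print(min(scores, key=lambda s: s[1])[0])  # h2
```

A host whose summary is missing is simply left out of the score table rather than being given a score.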

Load Balancing Module

- Triggers a scheduled task to determine which VM needs to be migrated

- A single load balancing logic is allowed per cluster

- Predefined load balancing modules:

  - Even Distribution

  - Power Saving

Once a decision is made and the load balancer requests a VM migration, the usual scheduling mechanism kicks in: all the filters and weight functions run to determine the destination host for the migrated VM.

Here is an example of a load balancer that checks whether any host is overloaded (running the maximum number of VMs or more) and, if so, returns the first VM it finds on the most loaded such host as a migration candidate, together with the list of hosts that are still under the limit.

```python
# A method of a module class such as max_vms above; it reuses that
# class's _get_connection and _get_hosts helpers.
def do_balance(self, hosts_ids, args_map):
    conn = self._get_connection()
    if conn is None:
        return

    # get our parameters from the map
    maximum_vm_count = int(args_map.get('maximum_vm_count', 100))

    # get all the hosts with the given ids
    engine_hosts = self._get_hosts(hosts_ids, conn)

    # iterate over them and decide which to balance from
    over_loaded_host = None
    white_listed_hosts = []
    for engine_host in engine_hosts:
        if engine_host:
            if engine_host.summary.active < maximum_vm_count:
                white_listed_hosts.append(engine_host.id)
                continue
            if (not over_loaded_host or
                    over_loaded_host.summary.active < engine_host.summary.active):
                over_loaded_host = engine_host

    if not over_loaded_host:
        return

    selected_vm = None
    # just pick the first we find
    host_vms = conn.vms.list('host=' + over_loaded_host.name)
    if host_vms:
        selected_vm = host_vms[0].id
    else:
        return

    # report the selected vm and the white-listed hosts
    print((selected_vm, white_listed_hosts))
```
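The decision logic can be separated from the SDK calls so it can be checked on its own. In this hypothetical `pick_migration` helper, a plain dictionary of VM IDs per host name stands in for the `conn.vms.list(...)` query; the rest follows `do_balance`: hosts under the limit are white-listed, the most loaded host at or above the limit is chosen, and its first VM becomes the migration candidate. The hosts and VMs below are made up.

```python
from types import SimpleNamespace


def pick_migration(engine_hosts, vms_by_host, maximum_vm_count):
    """Mirror of do_balance's decision.

    vms_by_host maps a host name to its VM ids (stands in for conn.vms.list).
    Returns (vm_id, white_listed_host_ids), or None when no host has reached
    maximum_vm_count or the chosen host has no VMs.
    """
    over_loaded_host = None
    white_listed_hosts = []
    for host in engine_hosts:
        if not host:
            continue
        if host.summary.active < maximum_vm_count:
            white_listed_hosts.append(host.id)
        elif (over_loaded_host is None
              or over_loaded_host.summary.active < host.summary.active):
            # Remember the most loaded host at or above the limit
            over_loaded_host = host

    if over_loaded_host is None:
        return None
    host_vms = vms_by_host.get(over_loaded_host.name, [])
    if not host_vms:
        return None
    return host_vms[0], white_listed_hosts


if __name__ == "__main__":
    # host3 is the most loaded host at or above the limit of 5
    hosts = [SimpleNamespace(id='h1', name='host1', summary=SimpleNamespace(active=6)),
             SimpleNamespace(id='h2', name='host2', summary=SimpleNamespace(active=2)),
             SimpleNamespace(id='h3', name='host3', summary=SimpleNamespace(active=9))]
    vms = {'host1': ['vm-c'], 'host3': ['vm-a', 'vm-b']}
    print(pick_migration(hosts, vms, 5))  # ('vm-a', ['h2'])
```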

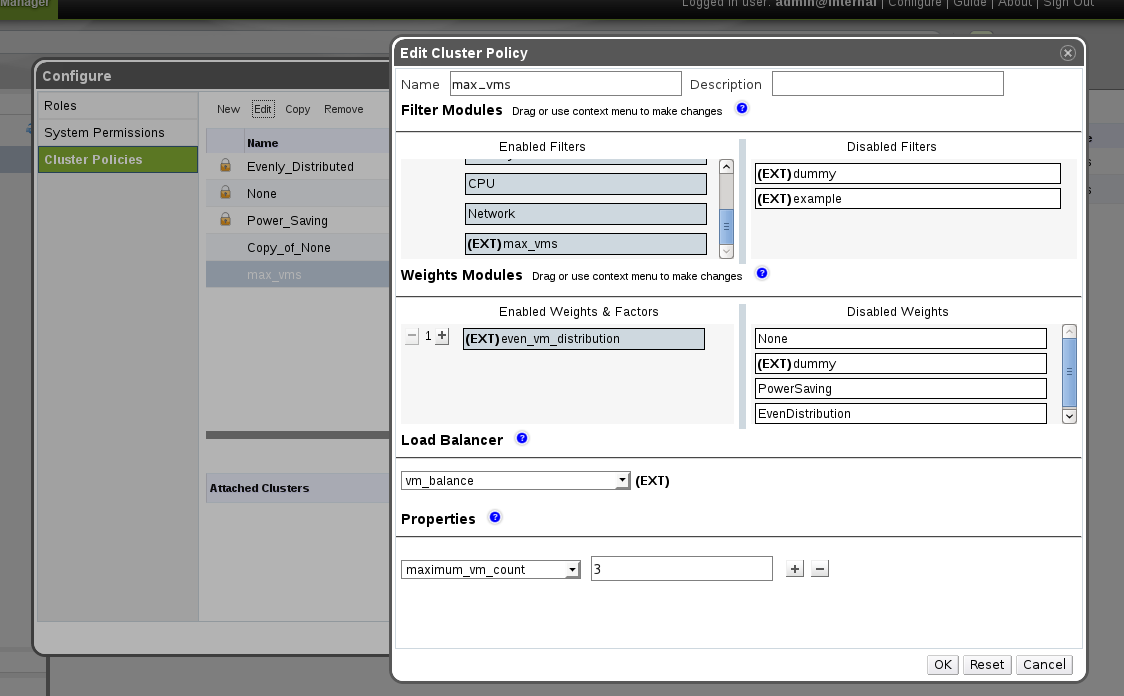

Applying Scheduling Modules

In order to use these modules, you need to create a new cluster policy, selecting the relevant filters, weight functions, and load balancing logic:

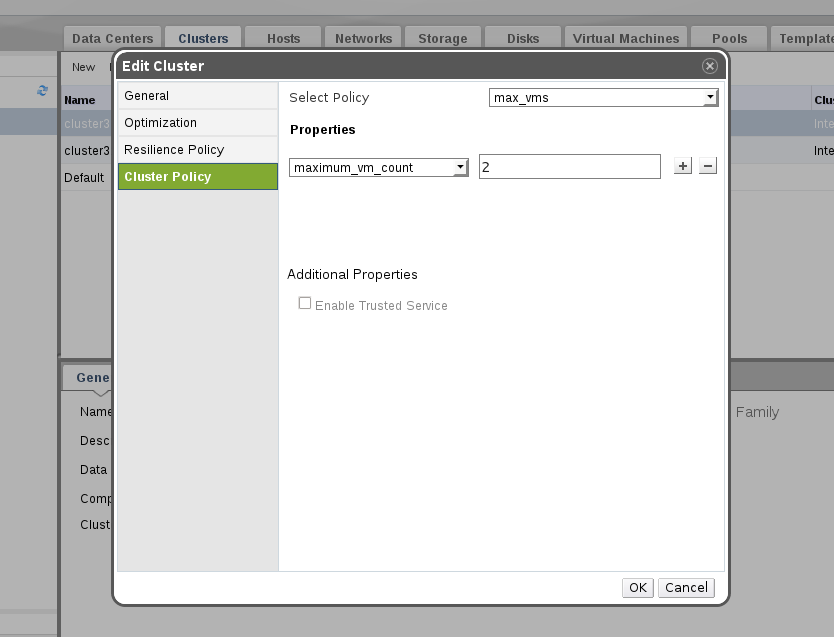

and then apply this policy to the cluster.

That's it: you now have your own scheduling policy applied to the cluster!

VDSM (Virtual Desktop and Server Manager) Hooks

VDSM hooks are a mechanism for customizing VMs:

- Allow administrators to define scripts that modify VM operation:

  - Extend or modify the VM configuration

  - Run different system scripts

- Hook scripts are called at specific VM lifecycle events

- Hooks can modify a virtual machine's XML definition before the VM starts

- Hooks can run system commands, e.g. apply a firewall rule to the VM

- Hook scripts are located on the individual hosts

- Lifecycle events where you can apply hooks:

  - On VDSM (management agent) start

  - On VDSM stop

  - Before VM start

  - After VM start

  - Before VM migration in/out

  - After VM migration in/out

  - Before and after VM pause

  - Before and after VM continue

  - Before and after VM hibernate

  - Before and after VM resume from hibernate

  - Before and after VM set ticket

  - On VM stop

  - Before NIC hotplug/hotunplug

  - After NIC hotplug/hotunplug
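Since hook scripts live on each host in a per-event directory, deploying one is mostly a matter of copying an executable file into place. As a sketch, assuming the default VDSM hooks location of `/usr/libexec/vdsm/hooks/<event>/` (verify it on your hosts), a small helper can do this; `install_hook` and the `numa_hook.py` file name are hypothetical, and the helper has to be run on every host that should apply the hook.

```python
import os
import shutil
import stat

# Assumed default location of the VDSM hook directories on a host
VDSM_HOOKS_DIR = "/usr/libexec/vdsm/hooks"


def install_hook(script_path, event, hooks_dir=VDSM_HOOKS_DIR):
    """Copy a hook script into the directory of a lifecycle event
    (for example 'before_vm_start') and make it executable."""
    event_dir = os.path.join(hooks_dir, event)
    os.makedirs(event_dir, exist_ok=True)
    target = os.path.join(event_dir, os.path.basename(script_path))
    shutil.copy(script_path, target)
    # Add execute permission for user, group and others
    mode = os.stat(target).st_mode
    os.chmod(target, mode | stat.S_IXUSR | stat.S_IXGRP | stat.S_IXOTH)
    return target


if __name__ == "__main__":
    # Hypothetical usage on a host (requires root):
    # install_hook("numa_hook.py", "before_vm_start")
    pass
```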

Here is an example of a hook script that adds NUMA support to the VM's XML definition in the before_vm_start lifecycle event. This can improve a VM's placement on the NUMA nodes of NUMA-enabled hosts. The hook reads the requested mode and node set from the numa custom property (for example, numa=strict:1-4), checks whether the domain XML already has a numatune section, and, if not, adds one with the requested configuration. Here is the source code of this hook:

```python
#!/usr/bin/python

import os
import sys
import hooking
import traceback

'''
numa hook
=========
add numa support for domain xml:

memory=interleave|strict|preferred

nodeset="1" (use one NUMA node)
nodeset="1-4" (use NUMA nodes 1 to 4)
nodeset="^3" (don't use NUMA node 3)
nodeset="1-4,^3,6" (or combinations)

syntax:
numa=strict:1-4
'''

if 'numa' in os.environ:
    try:
        # The custom property value has the form <mode>:<nodeset>
        mode, nodeset = os.environ['numa'].split(':')

        domxml = hooking.read_domxml()
        domain = domxml.getElementsByTagName('domain')[0]
        numas = domxml.getElementsByTagName('numatune')

        if not len(numas) > 0:
            # No <numatune> section yet: add one with the requested policy
            numatune = domxml.createElement('numatune')
            domain.appendChild(numatune)

            memory = domxml.createElement('memory')
            memory.setAttribute('mode', mode)
            memory.setAttribute('nodeset', nodeset)
            numatune.appendChild(memory)

            hooking.write_domxml(domxml)
        else:
            sys.stderr.write('numa: numa already exists in domain xml\n')
            sys.exit(2)
    except Exception:
        # Catch Exception rather than everything, so the sys.exit(2) above
        # is not reported as an unexpected error
        sys.stderr.write('numa: [unexpected error]: %s\n' %
                         traceback.format_exc())
        sys.exit(2)
```
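To try the XML change without a host or VDSM, the hook's core logic can be run against a minimal domain definition using Python's `xml.dom.minidom`, the same DOM API (`getElementsByTagName`, `createElement`, `setAttribute`) the hook calls on the document returned by `hooking.read_domxml()`. This is a sketch: `add_numatune` is a hypothetical extraction of the hook body, and the sample domain XML is invented and far smaller than what libvirt actually generates.

```python
from xml.dom import minidom


def add_numatune(domxml, numa_value):
    """Apply the hook's logic to a minidom document.

    numa_value uses the hook's '<mode>:<nodeset>' syntax, e.g. 'strict:1-4'.
    Returns True if a <numatune> element was added, False if one already existed.
    """
    mode, nodeset = numa_value.split(':')
    domain = domxml.getElementsByTagName('domain')[0]
    if domxml.getElementsByTagName('numatune'):
        return False
    numatune = domxml.createElement('numatune')
    domain.appendChild(numatune)
    memory = domxml.createElement('memory')
    memory.setAttribute('mode', mode)
    memory.setAttribute('nodeset', nodeset)
    numatune.appendChild(memory)
    return True


if __name__ == "__main__":
    # A made-up, heavily trimmed domain definition
    doc = minidom.parseString("<domain type='kvm'><name>vm1</name></domain>")
    add_numatune(doc, "strict:1-4")
    print(doc.documentElement.toxml())
```

Running it a second time on the same document leaves the XML untouched, matching the hook's refusal to add a second numatune section.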

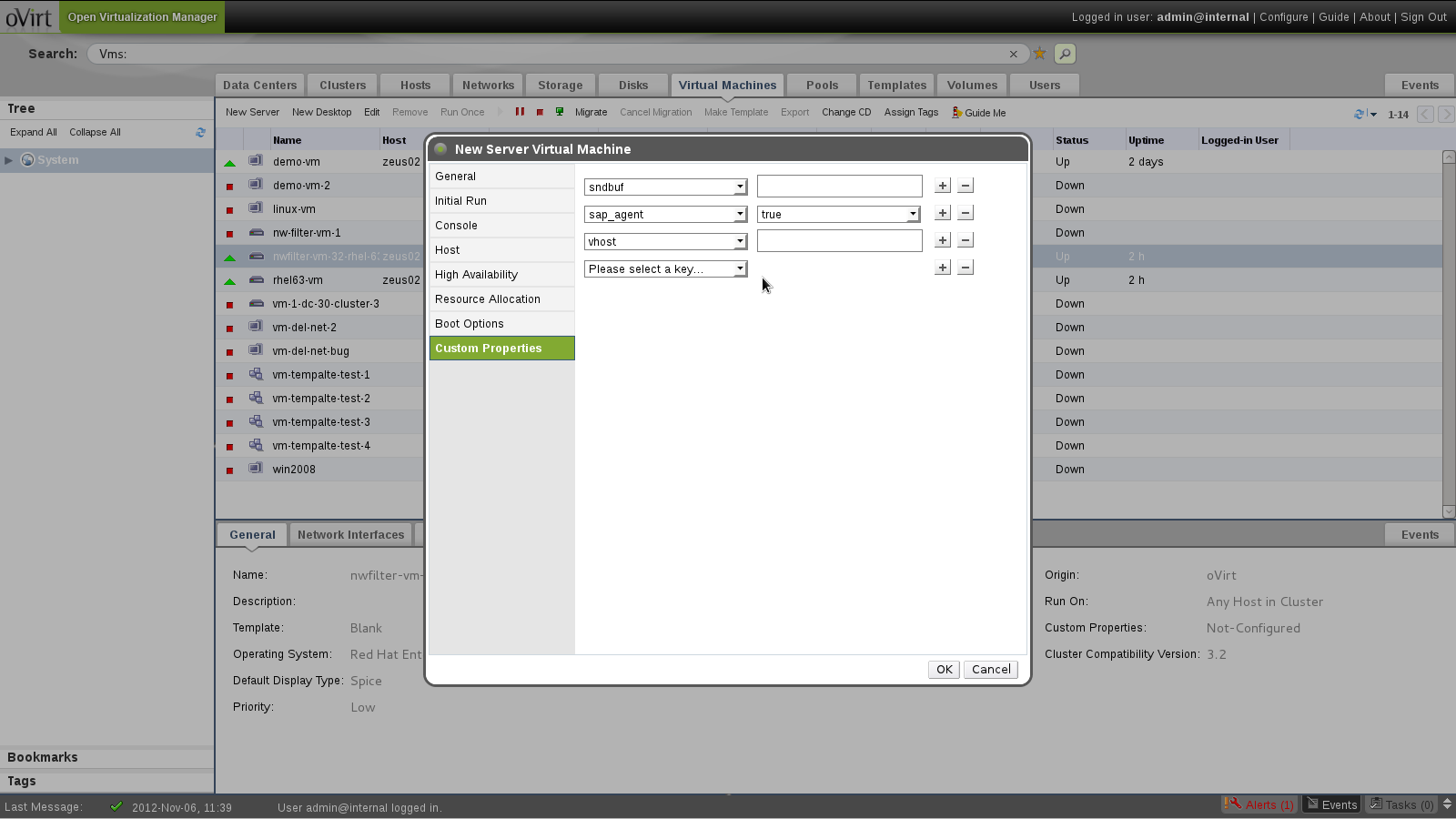

Hooks are applied to VMs using the "Custom Properties" side tab of the VM configuration dialog.

More information on VDSM hooks is available on the VDSM Hooks wiki page, and a catalog of hooks is available in the VDSM Hooks Catalog.

Summary

In this article we saw different ways to integrate with the RHEV-M engine, both from the outside using the REST-based APIs and from the inside using various extension APIs. These APIs are powerful tools for customizing RHEV-M to fit the specific needs of your organization.