Key takeaways

- Different tools for different jobs: The benchmark results show two distinct design philosophies. vLLM is designed for high-throughput, multi-user serving, while llama.cpp is optimized for single-stream efficiency and portability.

- vLLM excels at scalability: vLLM's throughput scales significantly with concurrent load, making it a strong choice for high-traffic applications. llama.cpp's throughput remains consistent, indicating a design focused on predictable single-request performance.

- vLLM delivers superior responsiveness at scale: On the NVIDIA H200 GPU, vLLM provides near-instantaneous Time to First Token (TTFT) across all tested concurrency levels and lower Inter-Token Latency (ITL) at low concurrency, making it ideal for interactive applications. While llama.cpp's core generation is fast, its queuing model causes TTFT to climb steeply as concurrency grows while its ITL stays nearly constant, reinforcing its suitability for offline batch processing or single-user tasks.

Following our previous analysis of Ollama and vLLM, we are extending our comparison to another giant in the inference space: llama.cpp. While vLLM is known for its Python-based, throughput-oriented architecture, llama.cpp is renowned for its lightweight C++ core, which promises exceptional efficiency.

llama.cpp is a standout in the LLM ecosystem for its efficiency and portability. Written in pure C/C++ with no external dependencies, it offers plain-vanilla inference that can run on a wide range of hardware, from powerful servers and GPUs to edge devices like laptops and phones. Its CPU-first design philosophy and support for various quantization methods (from 2-bit to 8-bit integers) make it exceptionally versatile.

A key feature of llama.cpp is its use of the GGUF (GPT-Generated Unified Format), a custom file format specifically designed for rapid loading and memory-mapped execution. This allows models to be loaded almost instantly and contributes significantly to the engine's fast startup times and low resource footprint, making it a favorite among developers running models on consumer-grade hardware.
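To make "memory-mapped" concrete, here is a minimal Python sketch that maps a GGUF file with the standard `mmap` module and decodes only its fixed-size header. The field layout (4-byte `GGUF` magic, `uint32` version, `uint64` tensor count, `uint64` metadata key-value count, all little-endian) follows the public GGUF specification for version 2 and later; the function name `read_gguf_header` is our own, and this is not how llama.cpp itself loads models.

```python
import mmap
import struct

GGUF_MAGIC = b"GGUF"


def read_gguf_header(path: str) -> dict:
    """Map a GGUF file into memory and decode its fixed-size header (GGUF v2+)."""
    with open(path, "rb") as f:
        # Map the whole file read-only; the OS pages data in lazily on access.
        with mmap.mmap(f.fileno(), 0, access=mmap.ACCESS_READ) as mm:
            if mm[:4] != GGUF_MAGIC:
                raise ValueError(f"{path} is not a GGUF file")
            # Little-endian, no padding: uint32 version, uint64 tensor count,
            # uint64 metadata key-value count, starting right after the magic.
            version, n_tensors, n_kv = struct.unpack_from("<IQQ", mm, 4)
    return {"version": version, "tensor_count": n_tensors, "metadata_kv_count": n_kv}
```

Because the file is mapped rather than read, only the pages actually touched (here, the single page holding the 24-byte header) are pulled from disk, which is the same property that lets a loader start using multi-gigabyte weights without copying them up front.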

In this post, we put these two high-performance engines to the test in a head-to-head benchmark to help you choose the right tool for your specific deployment needs on enterprise-grade hardware.

The benchmarking setup

To ensure a true "apples-to-apples" comparison, we created a controlled testing environment on Red Hat OpenShift, running unquantized 16-bit models on both engines on a current-generation NVIDIA data center GPU.

- Hardware and software:
  - GPU: Single NVIDIA H200-PCIe-141GB GPU
  - NVIDIA driver version: 570.148.08
  - CUDA version: 12.8
  - Platform: OpenShift version 4.18.9
  - vLLM version: v0.10.0
  - llama.cpp version: b6100
  - Python version: 3.13.5

- Models:
  - vLLM: meta-llama/Llama-3.1-8B-Instruct (unquantized bfloat16 weights)
  - llama.cpp: bartowski/Meta-Llama-3.1-8B-Instruct-GGUF (unquantized F16 GGUF file)

- Benchmarking tool: We used GuideLLM (version v0.2.1), a tool designed specifically to measure the performance of LLM inference servers. It ran as a container inside the same OpenShift cluster so that network latency between client and server was negligible. Refer to this article or this video to learn more about GuideLLM. We used its concurrency feature to simulate a load of 1 to 64 simultaneous users.
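Concurrency-based load can be pictured as a closed loop: N virtual users each send their next request as soon as the previous one completes, so at most N requests are ever in flight. The Python sketch below illustrates that pattern with `asyncio`; `fake_request` is a hypothetical stand-in for an HTTP call to an inference server, the short durations are for illustration only, and this is not GuideLLM's implementation.

```python
import asyncio
import random
import time


async def fake_request(prompt: str) -> str:
    """Hypothetical stand-in for an HTTP call to an inference server."""
    await asyncio.sleep(random.uniform(0.005, 0.015))
    return prompt.upper()


async def run_concurrent(prompts: list[str], concurrency: int, duration_s: float) -> dict:
    """Closed-loop load: each virtual user sends its next request as soon as its previous one finishes."""
    deadline = time.monotonic() + duration_s
    completed = 0
    in_flight = 0
    max_in_flight = 0

    async def user(uid: int) -> None:
        nonlocal completed, in_flight, max_in_flight
        i = uid  # each user walks the fixed dataset with its own stride
        while time.monotonic() < deadline:
            in_flight += 1
            max_in_flight = max(max_in_flight, in_flight)
            await fake_request(prompts[i % len(prompts)])
            in_flight -= 1
            completed += 1
            i += concurrency

    # Start all virtual users at once and wait until each one passes the deadline.
    await asyncio.gather(*(user(u) for u in range(concurrency)))
    return {"concurrency": concurrency, "completed": completed, "max_in_flight": max_in_flight}


if __name__ == "__main__":
    dataset = ["hello", "world", "benchmark"]
    for level in (1, 2, 4, 8, 16, 32, 64):
        print(asyncio.run(run_concurrent(dataset, level, duration_s=0.2)))
```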

Methodology

We used a fixed dataset of prompt-response pairs to ensure that every request sent to both servers was identical. This eliminates variables from synthetic data generation and allows for a direct comparison of the engines themselves. Each test at a given concurrency level was run for 300 seconds.

Key performance metrics:

- Requests Per Second (RPS): The average number of requests the system can successfully complete each second. Higher is better.

- Output Tokens Per Second (TPS): The total number of tokens generated per second, measuring the server's total generative capacity. Higher is better.

- P99 Time to First Token (TTFT): The "worst-case" time it takes to receive the first token of a response. This measures initial responsiveness. Lower is better.

- P99 Inter-token Latency (ITL): The "worst-case" time between consecutive tokens after the first, measuring text generation speed. Lower is better.

For TTFT and ITL, we used P99 (99th percentile) as the measure. P99 means that 99% of requests had a TTFT/ITL at or below this value, making it a good measure of "worst-case" responsiveness.
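The metric definitions above translate directly into arithmetic over per-request timestamps. This sketch assumes a hypothetical `RequestRecord` holding send time, first-token time, completion time, and output token count; it uses the nearest-rank percentile and treats a request's ITL as its average gap between tokens after the first. GuideLLM's exact definitions and percentile interpolation may differ.

```python
from dataclasses import dataclass


@dataclass
class RequestRecord:
    start: float          # time the request was sent (s)
    first_token: float    # time the first output token arrived (s)
    end: float            # time the last output token arrived (s)
    output_tokens: int    # number of generated tokens


def p99(values: list[float]) -> float:
    """Nearest-rank P99: smallest sample with at least 99% of samples at or below it."""
    if not values:
        raise ValueError("no samples")
    ordered = sorted(values)
    rank = -(-99 * len(ordered) // 100)  # integer ceil(0.99 * n), avoids float rounding
    return ordered[rank - 1]


def summarize(records: list[RequestRecord], window_s: float) -> dict:
    """Compute RPS, output TPS, P99 TTFT, and P99 ITL over a measurement window."""
    ttft = [r.first_token - r.start for r in records]
    # Per-request ITL: average gap between consecutive tokens after the first one.
    itl = [(r.end - r.first_token) / (r.output_tokens - 1)
           for r in records if r.output_tokens > 1]
    return {
        "rps": len(records) / window_s,
        "output_tps": sum(r.output_tokens for r in records) / window_s,
        "p99_ttft_s": p99(ttft),
        "p99_itl_s": p99(itl),
    }
```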

Performance comparison: vLLM versus llama.cpp

This comparison evaluates the latest upstream versions of vLLM and llama.cpp available at the time of testing. Recognizing that llama.cpp's default settings are not intended for high-concurrency scenarios, we tuned them for our environment.

To ensure llama.cpp fully leveraged the available GPU hardware, we used the -ngl 99 flag to offload all possible model layers to the NVIDIA H200 GPU. Additionally, we raised the thread counts with the --threads flag (used during generation) and the --threads-batch flag (used for prompt and batch processing). We empirically determined that a value of 64 for both was the stable limit for our llama.cpp deployment.
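For reference, the tuned launch can be expressed as an argument list for `llama-server`, llama.cpp's HTTP server binary. The `-m`, `-ngl`, `--threads`, `--threads-batch`, `--host`, and `--port` flags are real llama.cpp options; the model path, host, and port below are placeholder assumptions, and the helper `llama_server_cmd` is hypothetical rather than taken from our deployment manifests.

```python
import shlex


def llama_server_cmd(model_path: str, threads: int = 64, threads_batch: int = 64,
                     host: str = "0.0.0.0", port: int = 8080) -> list[str]:
    """Build a llama-server argument list mirroring the tuning described above (hypothetical helper)."""
    return [
        "llama-server",
        "-m", model_path,                       # GGUF model file
        "-ngl", "99",                           # offload up to 99 layers; Llama 3.1 8B has 32, so all go to the GPU
        "--threads", str(threads),              # threads used during generation
        "--threads-batch", str(threads_batch),  # threads used for prompt and batch processing
        "--host", host,
        "--port", str(port),
    ]


if __name__ == "__main__":
    # Placeholder path; substitute the actual location of the F16 GGUF file.
    print(shlex.join(llama_server_cmd("/models/Meta-Llama-3.1-8B-Instruct-f16.gguf")))
```

Building the command as a list (rather than one shell string) keeps each flag and value a separate argument, which is also the form Kubernetes and OpenShift container specs expect in `args`.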

Throughput (RPS and TPS)

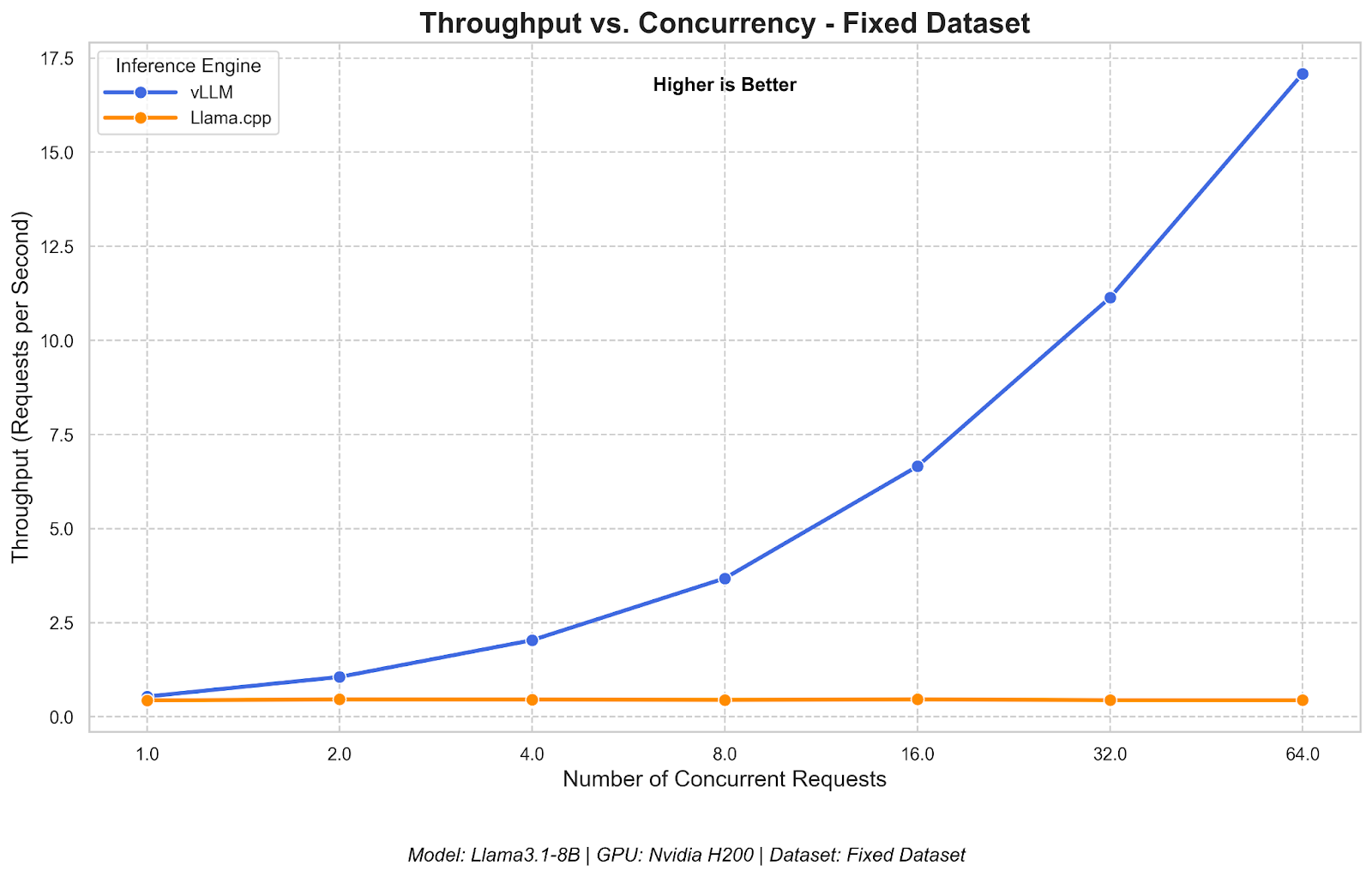

The difference in how the two engines handle a growing user load was immediate and stark. vLLM's throughput scales impressively as concurrency increases, demonstrating its ability to efficiently manage a high volume of requests. In contrast, llama.cpp's throughput remains almost perfectly flat, indicating it processes a steady but fixed amount of work, regardless of the incoming load. See Figure 1.

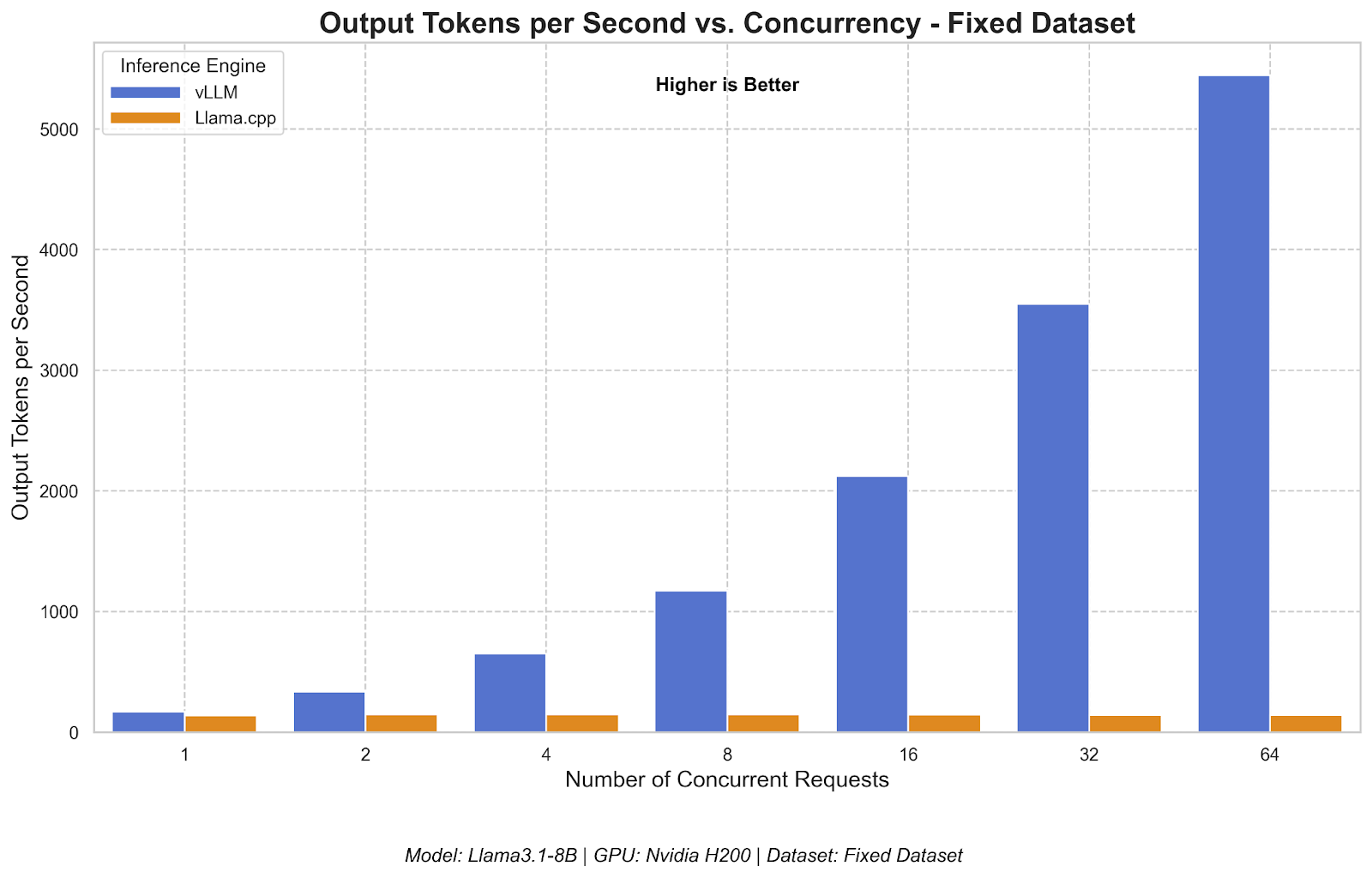

The bar chart for Output Tokens per Second (Figure 2) reinforces this finding. While llama.cpp shows comparable performance at a concurrency of 1, vLLM's total generative power quickly surpasses it and continues to grow with the load.

Conclusion: For multi-user applications where maximizing throughput and scalability is the goal, vLLM is the clear winner. At peak load, vLLM delivered more than 35 times the request throughput (RPS) and more than 44 times the total output tokens per second (TPS) compared to llama.cpp. llama.cpp's architecture is suited for single-user or low-concurrency tasks.

Responsiveness (TTFT and ITL)

The latency metrics reveal the different architectural priorities of the two engines.

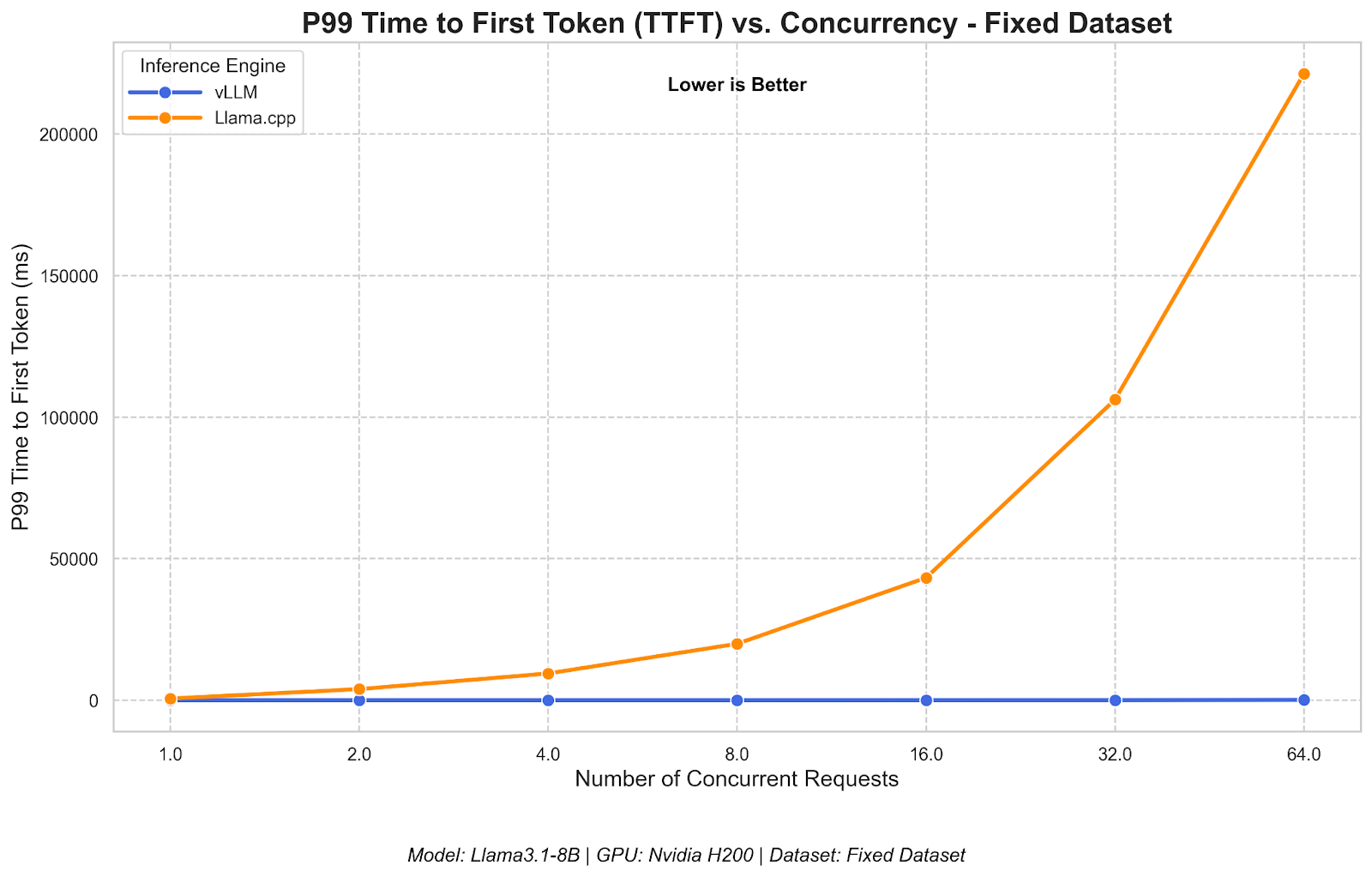

- Time to First Token (TTFT): vLLM's P99 TTFT is remarkably low and stable, remaining nearly flat even at 64 concurrent users (Figure 3). This reflects its continuous-batching scheduler, which admits new requests into the running batch instead of making them wait for earlier requests to finish. llama.cpp, on the other hand, shows a steep increase in TTFT as concurrency grows. This is a direct result of its queuing model: as more requests come in, they wait longer in line before being processed, making it unsuitable for high-concurrency interactive applications.
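A toy queueing model shows why a server that works through requests one at a time produces both the flat throughput in Figure 1 and the climbing TTFT in Figure 3. The sketch assumes a burst of N simultaneous requests, a single processing slot served in FIFO order, and a fixed per-request service time; these are simplifying assumptions, not a description of llama.cpp's internals, and the numbers are illustrative rather than measured.

```python
def fifo_burst(concurrency: int, service_s: float, prefill_s: float) -> dict:
    """Toy model: `concurrency` requests arrive together at a server that runs one request at a time."""
    # Request k (0-based) waits for the k requests ahead of it, then spends
    # prefill_s processing its prompt before emitting its first token.
    ttfts = [k * service_s + prefill_s for k in range(concurrency)]
    makespan = concurrency * service_s  # time to drain the whole burst
    return {
        "worst_ttft_s": max(ttfts),
        "rps": concurrency / makespan,  # always 1 / service_s: throughput does not grow with load
    }


if __name__ == "__main__":
    for n in (1, 2, 4, 8, 16, 32, 64):
        print(n, fifo_burst(n, service_s=2.0, prefill_s=0.05))
```

Worst-case TTFT grows linearly with N, so on a concurrency axis that doubles at each step (1, 2, 4, ... 64) it roughly doubles per step, while RPS stays pinned at 1 / service_s.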

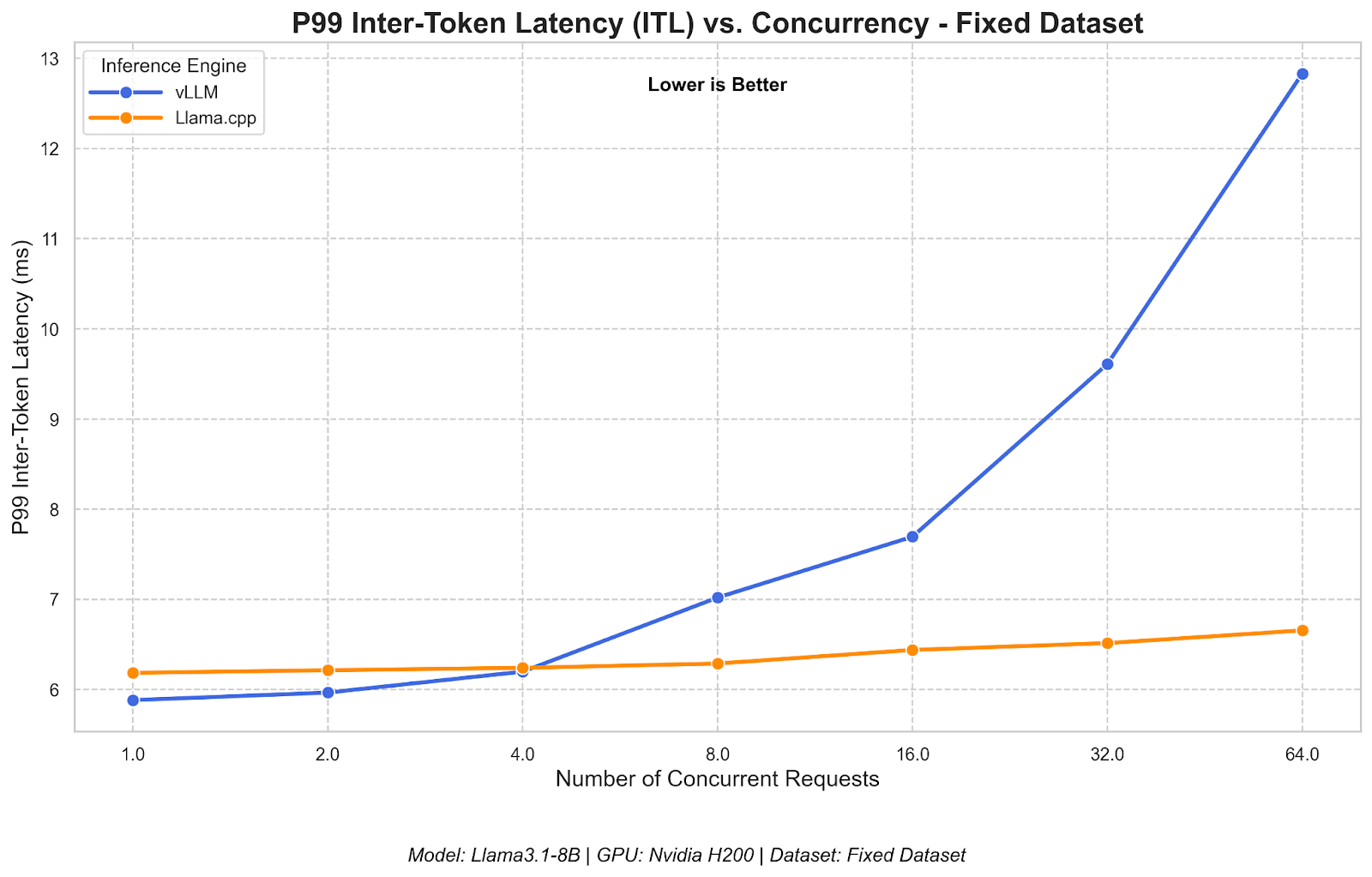

- Inter-token Latency (ITL): vLLM's P99 ITL is lower than llama.cpp's at low concurrency levels (1–4 users); beyond that, the roles are reversed (Figure 4). llama.cpp's P99 ITL stays extremely low, showcasing the efficiency of its C++ core once a request is being processed. vLLM's ITL, while still very fast, increases with concurrency. This is a classic throughput-latency trade-off: to handle many requests at once, vLLM builds large batches, which slightly increases the time to compute the next token for any single request within that batch.
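The trade-off can be sketched with an equally simple cost model: each decode step costs a fixed amount (dominated by reading the model weights) plus a small increment per sequence in the batch, and every sequence in the batch receives one token per step. The constants `base_step_s` and `per_seq_s` are hypothetical, not measurements from these benchmarks.

```python
def batched_decode(batch_size: int, base_step_s: float = 0.010, per_seq_s: float = 0.0005) -> dict:
    """Toy cost model: one decode step emits one token for every sequence in the batch."""
    step_s = base_step_s + per_seq_s * batch_size  # hypothetical constants, not measured
    return {
        "itl_s": step_s,                    # each sequence waits one full step per token
        "output_tps": batch_size / step_s,  # tokens produced per second across the batch
    }


if __name__ == "__main__":
    for b in (1, 2, 4, 8, 16, 32, 64):
        r = batched_decode(b)
        print(f"batch={b:2d}  itl={r['itl_s'] * 1000:.1f} ms  tps={r['output_tps']:.0f}")
```

Under this model, per-request ITL rises with batch size while aggregate output TPS still climbs, because the fixed per-step cost is shared across more sequences; that pattern is consistent with the shape of vLLM's curves in Figures 2 and 4.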

Conclusion: The right tool for the job

This benchmark comparison on the NVIDIA H200 GPU highlights that vLLM and llama.cpp are both excellent tools with different strengths.

- llama.cpp remains a fantastic choice for its intended purpose: extreme portability and efficiency on a wide range of devices, especially consumer-grade hardware. Its advantages lie in its minimal dependencies, fast startup time, and its ability to run almost anywhere. It is ideal for local development, desktop applications, and embedded systems.

- vLLM is a scalability powerhouse. It is unequivocally the superior choice for production deployment in a multi-user environment. Its architecture is built to maximize the utilization of powerful hardware, delivering significantly higher throughput and maintaining excellent initial responsiveness (TTFT) under heavy concurrent load.

For performance-critical applications on modern, enterprise-grade GPUs, vLLM demonstrates a clear advantage in our tests. It delivered far higher throughput (RPS and TPS) as load increased, kept P99 TTFT consistently low, and had lower P99 ITL at low concurrency (1–4 users); only at higher concurrency did llama.cpp deliver lower P99 ITL. For teams building scalable, high-performance AI applications, this data shows that vLLM is a powerful and robust foundation.