The deployment architecture of Red Hat OpenStack Services on OpenShift is a modern way to set up an OpenStack Infrastructure-as-a-Service (IaaS) environment, and it brings new capabilities and opportunities. One of them is a distributed control plane whose services run in pods. More importantly, these services consume a fraction of the resources that the monolithic control plane required in previous versions.

For example, consider the following resource usage, taken from two Red Hat OpenShift namespaces. Each one runs a full high-availability (HA) OpenStack Services on OpenShift control plane, with all services running three replicas:

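```
root@mobile-bison:~# kubectl top pods -n openstack-dev --sum
...
470m         15410Mi

root@mobile-bison:~# kubectl top pods -n openstack-dev0 --sum
...
457m         15255Mi
```

At the time of these samples, each complete HA control plane was using less than half a CPU core (470m and 457m) and roughly 15 GiB of memory. That got us thinking: what if we could do more with less?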

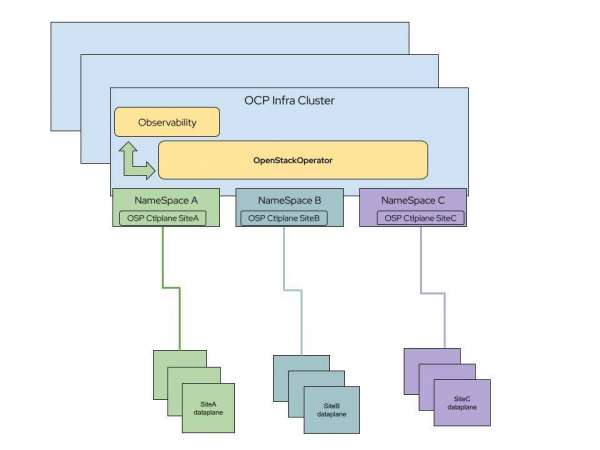

So, we decided to do something about it. In Feature Release 3 of OpenStack Services on OpenShift, we will introduce the ability to run multiple OpenStack Services on OpenShift deployments on the same OpenShift infrastructure, each in its own namespace (Figure 1).

This is a major benefit for teams that need to run multiple environments for:

- Development

- Staging

- Testing

- Training

How does it work?

By default, the OpenStack operator controllers watch all namespaces in the cluster, so OpenStack resources can be created in any namespace.

In a nutshell, we follow the greenfield deployment documentation and choose a different project (namespace) for each deployment. We just need to make sure of the following:

- The nmstate profile for each worker node carries the VLANs for every environment. For example, this policy configures one worker with both the `prod` and the `stage` VLANs:

```yaml
apiVersion: nmstate.io/v1
kind: NodeNetworkConfigurationPolicy
metadata:
  name: osp-ng-nncp-worker1
spec:
  # Pin the policy to a single worker so the static IPs below are not
  # applied to every node (hostname is an assumption; use your own).
  nodeSelector:
    kubernetes.io/hostname: worker1
  desiredState:
    interfaces:
    # --- prod environment ---
    - description: internalapi vlan prod
      ipv4:
        address:
        - ip: 172.17.0.24
          prefix-length: 24
        enabled: true
        dhcp: false
      ipv6:
        enabled: false
      name: enp10s0.20
      state: up
      type: vlan
      vlan:
        base-iface: enp10s0
        id: 20
    - description: storage vlan prod
      ipv4:
        address:
        - ip: 172.18.0.24
          prefix-length: 24
        enabled: true
        dhcp: false
      ipv6:
        enabled: false
      name: enp10s0.30
      state: up
      type: vlan
      vlan:
        base-iface: enp10s0
        id: 30
    - description: tenant vlan prod
      ipv4:
        address:
        - ip: 172.19.0.24
          prefix-length: 24
        enabled: true
        dhcp: false
      ipv6:
        enabled: false
      name: enp10s0.50
      state: up
      type: vlan
      vlan:
        base-iface: enp10s0
        id: 50
    # --- stage environment ---
    - description: ctlplane vlan stage
      ipv4:
        address:
        - ip: 192.168.140.24
          prefix-length: 24
        enabled: true
        dhcp: false
      ipv6:
        enabled: false
      name: enp10s0.140
      state: up
      type: vlan
      vlan:
        base-iface: enp10s0
        id: 140
    - description: internalapi vlan stage
      ipv4:
        address:
        - ip: 172.17.1.24
          prefix-length: 24
        enabled: true
        dhcp: false
      ipv6:
        enabled: false
      name: enp10s0.21
      state: up
      type: vlan
      vlan:
        base-iface: enp10s0
        id: 21
    - description: storage vlan stage
      ipv4:
        address:
        - ip: 172.18.1.24
          prefix-length: 24
        enabled: true
        dhcp: false
      ipv6:
        enabled: false
      name: enp10s0.31
      state: up
      type: vlan
      vlan:
        base-iface: enp10s0
        id: 31
    - description: tenant vlan stage
      ipv4:
        address:
        - ip: 172.19.1.24
          prefix-length: 24
        enabled: true
        dhcp: false
      ipv6:
        enabled: false
      name: enp10s0.51
      state: up
      type: vlan
      vlan:
        base-iface: enp10s0
        id: 51
```

- Network attachments for network isolation are present in each target namespace.

- Each deployment uses its own dedicated bare metal nodes.
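In our lab, dedicated bare metal means each namespace holds its own BareMetalHost resources, as the `oc get bmh -A` output later in this post shows. The following is a minimal sketch of one such host for the second environment. The MAC address, BMC address, Secret name, and label are hypothetical placeholders, not values from our lab, and selecting hosts by that label is an assumption about how you might tag each environment's nodes.

```yaml
# Hypothetical BareMetalHost for the openstack-dev0 environment.
# MAC, BMC address, Secret name, and label are placeholders.
apiVersion: metal3.io/v1alpha1
kind: BareMetalHost
metadata:
  name: rhoso18-dev0-compute-0
  namespace: openstack-dev0            # hosts live in their deployment's namespace
  labels:
    app: openstack-dev0                # assumed label to tell environments apart
spec:
  online: false                        # desired power state
  bootMode: UEFI
  bootMACAddress: "52:54:00:aa:bb:01"  # placeholder MAC
  bmc:
    address: redfish-virtualmedia://192.0.2.10/redfish/v1/Systems/1  # placeholder BMC
    credentialsName: rhoso18-dev0-compute-0-bmc  # hypothetical Secret (username/password)
```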

As the following output shows, each namespace has the same resources that a greenfield deployment creates:

```
root@mobile-bison:~# oc get network-attachment-definitions.k8s.cni.cncf.io -n openstack-dev
NAME          AGE
ctlplane      30d
external      30d
internalapi   30d
storage       30d
tenant        30d

root@mobile-bison:~# oc get network-attachment-definitions.k8s.cni.cncf.io -n openstack-dev0
NAME          AGE
ctlplane      45d
external      45d
internalapi   45d
storage       45d
tenant        45d
```
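Network attachment definitions are namespaced, so the same names (`ctlplane`, `internalapi`, and so on) can exist in both namespaces, as the listing above shows, while pointing at different VLANs. As a sketch, the `internalapi` attachment for the second environment could look like this. It assumes `openstack-dev0` uses the `stage` VLAN 21 (`enp10s0.21`, `172.17.1.0/24`) from the nmstate policy earlier, with the macvlan CNI plugin and Whereabouts IPAM; the allocation range is hypothetical.

```yaml
# Sketch: internalapi attachment scoped to the openstack-dev0 namespace.
# Assumes this environment maps to the "stage" VLAN 21 on enp10s0.21.
apiVersion: k8s.cni.cncf.io/v1
kind: NetworkAttachmentDefinition
metadata:
  name: internalapi            # same name as in every other namespace
  namespace: openstack-dev0    # but only visible to this deployment
spec:
  config: |
    {
      "cniVersion": "0.3.1",
      "name": "internalapi",
      "type": "macvlan",
      "master": "enp10s0.21",
      "ipam": {
        "type": "whereabouts",
        "range": "172.17.1.0/24",
        "range_start": "172.17.1.30",
        "range_end": "172.17.1.70"
      }
    }
```

The hypothetical pod range starts above the worker's own `172.17.1.24` address so the two cannot collide.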

```
root@mobile-bison:~# oc get l2advertisements.metallb.io -n metallb-system
NAME                              IPADDRESSPOOLS        IPADDRESSPOOL SELECTORS   INTERFACES
l2advertisement-ctlplane          ["ctlplane"]                                    ["enp10s0.140"]
l2advertisement-ctlplane-dev      ["ctlplane-dev"]                                ["enp10s0.141"]
l2advertisement-internalapi       ["internalapi"]                                 ["enp10s0.21"]
l2advertisement-internalapi-dev   ["internalapi-dev"]                             ["enp10s0.22"]
l2advertisement-storage-stating   ["storage-dev"]                                 ["enp10s0.31"]
l2advertisement-tenant            ["tenant"]                                      ["enp10s0.51"]
l2advertisement-tenant-dev        ["tenant-dev"]                                  ["enp10s0.52"]
```
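Unlike the network attachments, MetalLB objects live in the shared `metallb-system` namespace. That is why the listing above carries `-dev` variants: each environment needs its own uniquely named address pool and advertisement, bound to its own VLAN interface. The sketch below shows the `internalapi-dev` pair. The address range is a hypothetical placeholder, because the subnet behind VLAN 22 is not shown in this post. It also assumes the worker's nmstate policy defines `enp10s0.22`, which the excerpt earlier does not include.

```yaml
# Sketch: load-balancer addresses for one environment's internalapi network.
# The range below is a placeholder; VLAN 22's real subnet is not shown here.
apiVersion: metallb.io/v1beta1
kind: IPAddressPool
metadata:
  name: internalapi-dev
  namespace: metallb-system      # shared by all environments
spec:
  addresses:
  - 172.17.2.80-172.17.2.90      # hypothetical range
---
apiVersion: metallb.io/v1beta1
kind: L2Advertisement
metadata:
  name: l2advertisement-internalapi-dev
  namespace: metallb-system
spec:
  ipAddressPools:
  - internalapi-dev
  interfaces:
  - enp10s0.22                   # announce only on this environment's VLAN
```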

```
root@mobile-bison:~# oc get bmh -A
NAMESPACE               NAME                     STATE         CONSUMER                ONLINE   ERROR   AGE
openshift-machine-api   openshift-master-0       provisioned   bm-ipi-84cx2-master-0   true             63d
openshift-machine-api   openshift-master-1       provisioned   bm-ipi-84cx2-master-1   true             63d
openshift-machine-api   openshift-master-2       provisioned   bm-ipi-84cx2-master-2   true             63d
openstack-dev           rhoso18-dev-compute-0    provisioned   rhoso-bmp-dev-0-2       true             59d
openstack-dev           rhoso18-dev-compute-1    provisioned   rhoso-bmp-dev-0-2       true             59d
openstack-dev           rhoso18-dev-compute-2    provisioned   rhoso-bmp-dev-0-2       true             59d
openstack-dev0          rhoso18-dev0-compute-0   provisioned   rhoso-bmp-dev0-2        true             45d
openstack-dev0          rhoso18-dev0-compute-1   provisioned   rhoso-bmp-dev0-2        true             45d
openstack-dev0          rhoso18-dev0-compute-2   provisioned   rhoso-bmp-dev0-2        true             45d
```

It is important to note that because all deployments share one OpenShift cluster, a single set of operators governs every namespace. All resources are therefore created from the same top-level CustomResourceDefinitions (CRDs), and when the operators are updated, every namespace gets the same operator version. As a result, when an administrator updates an OpenStack Services on OpenShift deployment, the target is always the version that the installed operators provide.

That said, each namespace can still be updated individually, at a time convenient to the organization, so different environments can follow different maintenance windows.
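As a hedged sketch of what a per-namespace update request might look like, the following assumes the `OpenStackVersion` resource that the OpenStack operator keeps alongside each control plane, with a `targetVersion` field. Only the `openstack-dev0` deployment is targeted here; other namespaces are untouched. The resource name is hypothetical and must match that namespace's control plane. The version is left as a placeholder rather than a real release number.

```yaml
# Sketch: request an update for the openstack-dev0 deployment only.
# Name is hypothetical; the version string is a placeholder.
apiVersion: core.openstack.org/v1beta1
kind: OpenStackVersion
metadata:
  name: openstack-control-plane      # must match this namespace's control plane
  namespace: openstack-dev0          # other namespaces keep their current version
spec:
  targetVersion: "<target_version>"  # only versions the installed operators ship
```

Applying a change like this in one namespace at a time is what lets each environment follow its own maintenance window.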

Recap

To recap, with multiple OpenStack Services on OpenShift deployments in one cluster, you can run several independent OpenStack environments, each in its own namespace and with its own data plane. This new functionality consolidates OpenStack control planes onto shared OpenShift infrastructure, and we have more to come. Stay tuned for the next one!