Data storage gets complex in the world of containers and microservices, as we discussed in Part 1 of this series. That article explained the Kubernetes concept of a persistent volume (PV) and introduced Red Hat OpenShift Data Foundation as a simple way to get persistent storage for your applications running in Red Hat OpenShift.

Beyond the question of provisioning storage, one must think about types of storage and access. How will you read and write data? Who needs the data? Where will it be used? Because these questions sound a bit vague, let's jump into some specific examples.

I ran the examples in this article on Developer Sandbox for Red Hat OpenShift, a free instance of OpenShift that you can use to begin your OpenShift and Kubernetes journey.

Data on the ROX: ReadOnlyMany

Read access is the basic right granted by any data source. We'll start with read access and examine writing in a later section.

There are a couple of variants of read access in Kubernetes. If your application reads data from a source but never updates, appends, or deletes anything in the source, the access is ReadOnly. And, because ReadOnly access never changes the source, multiple consumers can read from the source at the same time without risk of receiving corrupted results, a type of access called ReadOnlyMany or ROX.

A persistent volume claim (PVC) is backed by whatever technology its storage class specifies in the YAML configuration file. When you ask for a PVC, you specify a size, a storage class, and an access method such as ROX (Kubernetes calls this the access mode). It is up to the underlying technology to meet the access method's requirements, and not every technology supports ROX. For example, Amazon Elastic Block Store (EBS) does not.

If you create a PVC using a storage class that does not support your access method, you do not receive any error message at creation time. It is not until you assign the PVC to an application that any error message appears. For example, through the following configuration, I created a PVC called claim2 that tried to use EBS with a ReadOnlyMany access level:

    apiVersion: v1
    kind: PersistentVolumeClaim
    metadata:
      name: claim2
      namespace: rhn-engineering-dschenck-dev
    spec:
      accessModes:
        - ReadOnlyMany
      volumeMode: Filesystem
      resources:
        requests:
          storage: 1Gi

Here's what happened at the command line. OpenShift was happy to oblige me in the creation of this PVC, even though it won't accept data at runtime:

    PS C:\Users\dschenck\Desktop> oc create -f .\persistentvolumeclaim-claim2.yaml
    persistentvolumeclaim/claim2 created

Figure 1 shows the claim waiting for assignment.

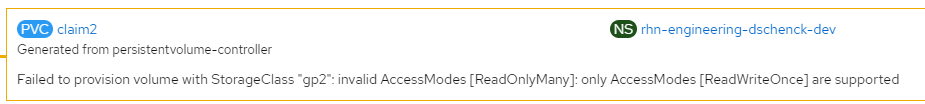

I then created an app and assigned this PVC to it. That is when the error appeared, as seen in Figure 2.

If the PVC had been based on a storage class that supported ROX, this error would not have appeared.

DevOps practices help you avoid runtime problems such as the one I've laid out here. When developers and operators work together, they can identify a mismatch between a storage class and an access method early in the development process, well before an application is deployed.
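One way to catch this kind of mismatch early is a small pre-flight check that runs before anything is applied to the cluster. The following is a minimal sketch, not an OpenShift feature: the backend names and the SUPPORTED_MODES table are illustrative assumptions that you would maintain yourself, based on what your own storage classes actually support.

```python
# Illustrative table only (an assumption, not queried from a cluster):
# access modes commonly documented for a few volume backends.
SUPPORTED_MODES = {
    "aws-ebs": {"ReadWriteOnce"},
    "cephfs": {"ReadWriteOnce", "ReadOnlyMany", "ReadWriteMany"},
    "nfs": {"ReadWriteOnce", "ReadOnlyMany", "ReadWriteMany"},
}


def unsupported_modes(backend, requested_modes):
    """Return the requested access modes that the backend does not support."""
    supported = SUPPORTED_MODES.get(backend)
    if supported is None:
        raise KeyError(f"unknown backend: {backend}")
    return [mode for mode in requested_modes if mode not in supported]


# Hypothetical description of the claim from this article.
claim = {"name": "claim2", "backend": "aws-ebs", "accessModes": ["ReadOnlyMany"]}

problems = unsupported_modes(claim["backend"], claim["accessModes"])
if problems:
    print(f"{claim['name']}: {claim['backend']} does not support {', '.join(problems)}")
```

A check like this could run in a pipeline step before oc create, so the problem surfaces during review rather than when the pod tries to mount the volume.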

ReadWriteOnce (RWO) and ReadWriteMany (RWX)

Reading and writing data is supported by the ReadWriteOnce (RWO) and ReadWriteMany (RWX) access methods. With RWO, a single node can mount the volume for reading and writing; with RWX, many nodes can do so at the same time. As you can surmise, support for these methods is dictated by the storage technology that underlies the storage class. Regardless of which access method you choose, you must make sure the storage class supports it. Two storage technologies that support RWX access are CephFS and the Network File System (NFS).

Creating file system storage and using CephFS is facilitated by the OpenShift Data Foundation (ODF) Operator, which not only provisions the storage but brings with it a lot of benefits. Snapshots and backups are simple operations with ODF and can be automated as well. If you're going to provision storage, you need to bring along some sort of method for backup and recovery.

Object storage

Traditionally, operating systems and storage media have offered file systems and block storage. One of these storage types underlies the storage of regular files (e.g., readme.txt) as well as relational databases and NoSQL databases.

But what if you need to store objects such as videos and photos that benefit from large amounts of metadata? A video might have a title, an author, a subject, tags, length, screen format, etc. Storing this metadata with the object is preferable to, say, a database entry associated with the video.

Object Bucket Claims

OpenShift accommodates object storage by allowing you to create an Object Bucket Claim (OBC) object. An OBC is compatible with AWS S3 storage, which means that any existing object-storage code you have will likely work with OBC, needing few if any changes.
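To make the OBC concrete, here is a minimal sketch that builds a claim as a Python dictionary and prints it as JSON, which oc create -f accepts just as it accepts YAML. The bucket and project names are hypothetical, and the storage class name openshift-storage.noobaa.io is an assumption about what a typical OpenShift Data Foundation installation provides; check the storage classes in your own cluster.

```python
import json


def object_bucket_claim(name, namespace,
                        storage_class="openshift-storage.noobaa.io"):
    """Build an ObjectBucketClaim manifest as a plain dictionary.

    The default storage class name is an assumption; verify it in your cluster.
    """
    return {
        "apiVersion": "objectbucket.io/v1alpha1",
        "kind": "ObjectBucketClaim",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            # The provisioner generates a unique bucket name from this prefix.
            "generateBucketName": name,
            "storageClassName": storage_class,
        },
    }


# Hypothetical names, used only for illustration.
manifest = object_bucket_claim("media-bucket", "my-project")
print(json.dumps(manifest, indent=2))
```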

Using object storage means you can use an S3 client library, such as Boto3 with Python. Metadata can be stored with an object as a collection of key-value pairs. This is the storage model of choice for items such as photos, videos, and music.
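As a rough illustration of storing metadata with an object, the sketch below prepares the arguments for an S3-style put_object call. It assumes you already have a Boto3 S3 client pointed at your bucket's endpoint; the helper names build_put_request and upload are hypothetical, as are the bucket, key, and metadata values.

```python
def build_put_request(bucket, key, body, metadata):
    """Build keyword arguments for an S3-style put_object call.

    S3 user metadata is a flat collection of string key-value pairs,
    so every key and value is converted to a string here.
    """
    return {
        "Bucket": bucket,
        "Key": key,
        "Body": body,
        "Metadata": {str(k): str(v) for k, v in metadata.items()},
    }


def upload(client, request):
    """Send the request with a Boto3 S3 client that the caller supplies."""
    return client.put_object(**request)


# Hypothetical video with the kind of metadata described above.
request = build_put_request(
    bucket="media-bucket",
    key="videos/intro.mp4",
    body=b"placeholder bytes",
    metadata={"title": "Intro", "author": "Jane Doe", "length-seconds": 95},
)
print(request["Metadata"])
```

Keeping the request builder separate from the upload call makes the metadata handling easy to test without a live bucket.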

The Multicloud Object Gateway

But we can go deeper. The Multicloud Object Gateway (MCG) gives you more options for object storage.

The most powerful aspect of MCG is that it allows you to store your data across multiple cloud providers using the AWS S3 protocol. MCG is able to interact with the following types of backing stores to physically store the encrypted and deduplicated data:

- AWS S3

- IBM Cloud Object Storage (IBM COS)

- Azure Blob Storage

- Google Cloud Storage

- A local PV-based backing store

The MCG is a layer of abstraction that separates the developer from the implementation, making data portability a real possibility. As a developer, I'm good with that.

MCG gives you options. As mentioned by my colleague, Michelle DiPalma, in her excellent video Understanding Multicloud Object Gateway, MCG enables three aspects of object storage:

- Flexibility across multiple backends

- Consistent experience everywhere

- Simplified management of siloed data

This is done by using three different bucket options:

- MCG object buckets (mirroring, spread across resources)

- MCG dynamic buckets (OBC/OB inside your cluster)

- MCG namespace buckets (data federation)

MCG object buckets ensure data safety. Your data is mirrored and spread across as many resources as you configure. You have three options for a storage architecture:

- Multicloud: A mix of AWS, Azure, and GCP, for example

- Multisite: Multiple data centers within the same cloud

- Hybrid: Can facilitate large-scale on-premises data storage and cloud-based mirroring, for example

Namespace buckets carry out data federation, which means assembling many sources into one. Several data resources (e.g., Azure and AWS) are pulled together into one view. While the system architect decides where an application's data is written, the location is hidden from the developers. They simply write to a named bucket; MCG takes care of writing the data and propagating it across the configured storage pool.

Inside object storage

OBC buckets are set up inside your cluster and are created using your application's YAML file. When you ask for an OBC, the OBC and the containing object bucket are dynamically created with permissions that you refer to in your application via environment variables. The environment variables, in a sense, bind the application and the OBC together.
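The binding through environment variables might look something like the following sketch. It assumes the variables carry the conventional names BUCKET_HOST, BUCKET_PORT, BUCKET_NAME, AWS_ACCESS_KEY_ID, and AWS_SECRET_ACCESS_KEY, and that the endpoint is served over HTTPS; confirm both against the ConfigMap and Secret created for your claim. The function name obc_settings and the sample values are hypothetical.

```python
import os


def obc_settings(env):
    """Collect S3 connection settings from OBC-style environment variables.

    The variable names and the https scheme are assumptions; adjust them
    to match what your claim actually exposes.
    """
    host = env["BUCKET_HOST"]
    port = env.get("BUCKET_PORT", "443")
    return {
        "bucket": env["BUCKET_NAME"],
        "endpoint_url": f"https://{host}:{port}",
        "aws_access_key_id": env["AWS_ACCESS_KEY_ID"],
        "aws_secret_access_key": env["AWS_SECRET_ACCESS_KEY"],
    }


# Inside a pod you would call obc_settings(os.environ). Sample values are
# used here so the sketch also runs outside a cluster.
sample_env = {
    "BUCKET_HOST": "s3.openshift-storage.svc",
    "BUCKET_PORT": "443",
    "BUCKET_NAME": "media-bucket-1234",
    "AWS_ACCESS_KEY_ID": "example-key-id",
    "AWS_SECRET_ACCESS_KEY": "example-secret",
}
settings = obc_settings(sample_env)
print(settings["endpoint_url"], settings["bucket"])
```

Everything in the result except bucket can be passed as keyword arguments when creating an S3 client, so the application code never hard-codes where the bucket lives.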

Think of an OBC as the object storage sibling of a PVC. The OBC is persistent and is stored inside your cluster. OBC is great when you want developers to start using object storage without needing the overhead or time necessary for the final implementation (e.g., namespace buckets).

Learn more about container storage

This article is just an introduction to storage options for containers. There are many resources for you to dive into, learn more, and get things up and running. Here is a list to get you started:

- The aforementioned video by Michelle DiPalma, Understanding Multicloud Object Gateway

- Product Documentation for Red Hat OpenShift Container Storage 4.8

- Introducing Multi-Cloud Object Gateway for OpenShift

- Red Hat OpenShift Data Foundation (datasheet and access options)

Writing applications for OpenShift means dealing with data. Hopefully, this article series will help you reach your goals.

Last updated: September 26, 2024