Microservices is a hot topic. Web pages, architectural dissertations, conference talks... The amount of information and the number of opinions are staggering, and it can all be overwhelming. If ever there was a hot topic in IT, microservices are "it" right now. Create super-small services. Use Functions as a Service (FaaS). Embrace serverless. Spread the workload, loosely coupled and written in any mix of programming languages. Go forth and be micro!

But have you noticed that very few are talking about the data part of all this? "Distribute the data, one database per microservice" is the standard, one-size-fits-all (which, at least in clothing, means "one size fits no one") answer.

Yeah. Sure. That's easy for you to say.

In this series of articles, we'll take a look at data storage options as they relate to microservices, Kubernetes, and—specifically—Red Hat OpenShift. We'll start with a broad overview (the buzzword is "30,000-foot view," sigh), then get more detailed in upcoming articles.

Let's begin by defining a few terms. This will give us a common starting point. Note that when Kubernetes is mentioned, you can assume the information is also applicable to OpenShift.

Ephemeral versus persistent storage

If you create a pod in Kubernetes, you can use its root file system for storage; that is to say, the file system inside the pod's containers. You can write to and read from files. If you're running a relational database (RDBMS) or a NoSQL system in a pod (for example, MariaDB or Couchbase), you can use this file system to store your data. Each container has its own root file system, so if you are running multiple containers within the same pod and want them to share data, you mount a shared pod-level volume, such as an emptyDir, into each of them. This is perfectly acceptable and works fine.
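
The manifest below is a minimal sketch of sharing data within a single pod. It gives two containers the same emptyDir volume, so both see the same file. That volume is created with the pod and deleted with it, so this is still ephemeral storage. The pod name, the container names, and the busybox image are illustrative assumptions.

```yaml
# Minimal sketch: two containers in one pod sharing an emptyDir volume.
# Pod name, container names, and image are illustrative assumptions.
apiVersion: v1
kind: Pod
metadata:
  name: shared-scratch
spec:
  containers:
    - name: writer
      image: busybox
      # Append a timestamp to a file on the shared volume every 5 seconds.
      command: ["sh", "-c", "while true; do date >> /data/log.txt; sleep 5; done"]
      volumeMounts:
        - name: scratch
          mountPath: /data
    - name: reader
      image: busybox
      # Follow the same file from the second container.
      command: ["sh", "-c", "touch /data/log.txt; tail -f /data/log.txt"]
      volumeMounts:
        - name: scratch
          mountPath: /data
  volumes:
    # emptyDir is created when the pod starts and removed when the pod is deleted.
    - name: scratch
      emptyDir: {}
```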

With some caveats. For starters, you can't share data between pods because the file system is local to just that pod. If you wish to share data with one or more other pods, you'll need to use a volume to store your data, a Kubernetes concept we'll learn more about later. For now, just know that a volume is a directory somewhere of some type. Where? What type? We'll get to that.

Back to the subject of a pod's root file system. There's this small thing: When the pod is destroyed, so is the data. It goes away. In the context of Kubernetes, it's ephemeral.

That may be desirable, especially when you're first developing a solution and want to wipe things out and start over every time. As a developer, I find there are lots of times when this is a great feature. I can mess up as much as I want, knowing that when I restart, I get another try. If I'm using a script to populate a database, I can also fine-tune the script as I'm developing my application. The storage is ephemeral, and I have complete freedom. Eventually, however, you reach a point where you want the data to stick around for a while. You want it to be persistent.
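
Here is a minimal sketch of that throwaway-database workflow. It assumes the official mariadb image, which runs `.sql` files found in `/docker-entrypoint-initdb.d` when it initializes an empty data directory. The ConfigMap name, pod name, password, and SQL are hypothetical. Nothing is mounted at `/var/lib/mysql`, so the data lives only as long as the container does. Each new pod therefore starts empty and runs the seed script again.

```yaml
# Minimal sketch: reseed a throwaway MariaDB pod on every fresh start.
# Names (seed-script, mariadb-dev), the password, and the SQL are hypothetical.
apiVersion: v1
kind: ConfigMap
metadata:
  name: seed-script
data:
  seed.sql: |
    CREATE DATABASE IF NOT EXISTS demo;
    CREATE TABLE IF NOT EXISTS demo.items (id INT PRIMARY KEY, name VARCHAR(50));
    INSERT INTO demo.items VALUES (1, 'first'), (2, 'second');
---
apiVersion: v1
kind: Pod
metadata:
  name: mariadb-dev
spec:
  containers:
    - name: mariadb
      image: mariadb
      env:
        # Development-only password; use a Secret for anything real.
        - name: MYSQL_ROOT_PASSWORD
          value: devonly
      volumeMounts:
        # The image runs *.sql files from this directory when the data directory is empty.
        - name: seed
          mountPath: /docker-entrypoint-initdb.d
      # No volume is mounted at /var/lib/mysql, so the data is not kept
      # beyond this container and a new pod starts from scratch.
  volumes:
    - name: seed
      configMap:
        name: seed-script
```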

Persistent volumes and persistent volume claims

When it comes to persisting data in Kubernetes, the most common solution is to bind a persistent volume claim (PVC) to your application. A PVC, in turn, is bound to a persistent volume (PV). Because the claim exists independently of any single pod, the cluster can remove and add pods at will while the application continues to use the same PVC. As a pod comes up, it "sees" the PVC as its storage.

Think of a PV as a piece of storage that the cluster makes available, while a PVC is your application's claim on it: a request for a certain amount of storage that Kubernetes satisfies by binding the claim to a suitable PV. You might conceptualize a PVC as a hard drive on a server if that helps make it easier to understand. In fact, it might be cloud-based storage spread across multiple clouds, but from the developer's point of view, it's local storage that happens to be persistent. Your pod can be wiped out and replaced, and the data stays intact.

Here's how it works: A volume is configured for the cluster. It might reside in AWS as an Elastic Block Store (EBS) volume, on Azure as an Azure Disk, or on CephFS, or it might use any one of many other choices. This is the underlying storage system; the PV API hides the details of each one from the developers and applications that consume it.
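
The underlying system appears in only one place: the PV definition that the cluster administrator writes. The minimal sketch below uses an NFS export, chosen only because its in-tree volume source is short to show. The server name, export path, and PV name are hypothetical. An EBS, Azure Disk, or CephFS backend would replace the `nfs` section with its own settings; in current clusters, that is often done through a CSI driver.

```yaml
# Minimal sketch of a statically provisioned PV created by a cluster administrator.
# The NFS server, export path, and PV name are hypothetical placeholders.
apiVersion: v1
kind: PersistentVolume
metadata:
  name: pv-nfs-100gi
spec:
  capacity:
    storage: 100Gi
  volumeMode: Filesystem
  accessModes:
    - ReadWriteOnce
  # Keep the PV and its data after the claim that used it is deleted.
  persistentVolumeReclaimPolicy: Retain
  # No storageClassName: this PV has no class, so only class-less claims can bind to it.
  nfs:
    server: nfs.example.com   # hypothetical NFS server
    path: /exports/pv-100gi   # hypothetical export path
```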

Using that volume, the cluster administrator creates one or more persistent volumes. Then the developer, architect, or operator (depending on how your organization manages this) specifies a PVC, including the size needed. Kubernetes makes sure an eligible PV is found for the PVC. For example, if you specify a 100GB PVC, you must have an available PV of at least 100GB; Kubernetes will not bind a 100GB PVC to, say, a 50GB PV.
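
On the claim side, a minimal sketch of a 100Gi request looks like this (the claim name is hypothetical). Setting `storageClassName` to an empty string turns off dynamic provisioning. The claim can then bind only to a pre-created PV that has no storage class, offers at least 100Gi, and supports the requested access mode.

```yaml
# Minimal sketch: a claim that can bind only to an available, class-less PV
# of at least 100Gi with a compatible access mode.
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: app-data          # hypothetical claim name
spec:
  # "" disables dynamic provisioning and requests a PV with no class.
  storageClassName: ""
  volumeMode: Filesystem
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 100Gi      # a 50Gi PV can never satisfy this request
```

If the only candidate PV is 50Gi, the claim stays Pending. If the only matching PV is 150Gi, the claim binds to it and gets all 150Gi, because a PV is bound to exactly one claim.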

Consider the following two objects: a PVC and a Deployment. Notice how the PVC requests the storage space and how the Deployment mounts it. Notice, also, that the PVC asks for the storage as file storage (volumeMode: Filesystem), as opposed to block storage. Ignore the accessModes setting for now; that's another article.

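```yaml
# PVC: requests 5Gi of file-system storage for the database files.
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: mysqlvolume
spec:
  resources:
    requests:
      storage: 5Gi
  volumeMode: Filesystem
  accessModes:
    - ReadWriteOnce
---
# Deployment: runs MariaDB and mounts the claim at the database's data directory.
apiVersion: apps/v1
kind: Deployment
metadata:
  name: mysql
spec:
  selector:
    matchLabels:
      app: mysql
      tier: database
  # Stop the old pod before starting a new one, so two pods never
  # compete for the same ReadWriteOnce volume during an update.
  strategy:
    type: Recreate
  template:
    metadata:
      labels:
        app: mysql
        tier: database
    spec:
      containers:
        - name: mariadb
          env:
            # Root password comes from the Secret "mysqlpassword", key "password".
            - name: MYSQL_ROOT_PASSWORD
              valueFrom:
                secretKeyRef:
                  name: mysqlpassword
                  key: password
          image: mariadb
          resources:
            limits:
              memory: "128Mi"
              cpu: "500m"
          ports:
            - containerPort: 3306
          volumeMounts:
            # MariaDB keeps its data files under /var/lib/mysql.
            - name: mysqlvolume
              mountPath: /var/lib/mysql
      volumes:
        - name: mysqlvolume
          persistentVolumeClaim:
            claimName: mysqlvolume
```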

In this example, a 5GiB PVC is created to store data for our MySQL Deployment, which runs MariaDB, a MySQL-compatible database. The pod running our database can be updated or replaced as often as we wish while the data in /var/lib/mysql remains intact.
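
The Deployment expects a Secret named `mysqlpassword` with a key named `password`. A minimal sketch of that Secret follows; the password value is a placeholder, and real credentials should not be committed to a file like this.

```yaml
# Minimal sketch of the Secret the Deployment reads its root password from.
# The password value is a placeholder.
apiVersion: v1
kind: Secret
metadata:
  name: mysqlpassword
type: Opaque
stringData:
  # stringData takes plain text; Kubernetes stores it base64-encoded under data.
  password: change-me
```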

Reading through the YAML for the PVC and the Deployment, you can see where volume names are specified, where the mount path is set, and how claimName ties the Deployment to the PVC. Again, the underlying mechanism (e.g., EBS or CephFS) is completely transparent at this point.

This setup makes backup and restore easy as well. (We'll get to that in a later article.)

Persistent storage use cases

What can you do with this persistent, shared storage? Anything you would do with a directory on the server. You can use the file system to store files. Your RDBMS and NoSQL systems will use it to store your databases. You can store objects such as videos and images.

Simply put, this is where a developer typically lives. You write to and read from files and databases.
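
For the images-and-videos case, a minimal sketch might mount a claim into a web server as its document root. The claim name, size, and pod name are assumptions, and so is the nginx image. On OpenShift, you may need a variant of that image built to run as a non-root user. The claim omits `storageClassName`, so the cluster's default storage class provisions the volume.

```yaml
# Minimal sketch: serve images and videos kept on a persistent volume.
# Claim name, size, pod name, and image are hypothetical.
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: media
spec:
  # No storageClassName: the cluster's default storage class is used.
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 20Gi
---
apiVersion: v1
kind: Pod
metadata:
  name: media-server
spec:
  containers:
    - name: web
      image: nginx
      ports:
        - containerPort: 80
      volumeMounts:
        # Files on the claim are served as static content; this pod only reads them.
        - name: media
          mountPath: /usr/share/nginx/html
          readOnly: true
  volumes:
    - name: media
      persistentVolumeClaim:
        claimName: media
```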

External and API-based storage

You can, if you so choose, still use API-based storage (storage that is external to the cluster) from within your application. You might decide to use the OpenStack Swift API to store objects, or your C# application might have a connection string to an Azure SQL database instance.

This option might be the best choice when migrating existing services into Red Hat OpenShift with minimal change.
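
Going external usually means no PVC at all. What the cluster stores is the connection information. In the minimal sketch below, a Secret holds an Azure SQL-style connection string, and the Deployment passes it to a .NET application as an environment variable. Every name, the image, and the connection string are hypothetical placeholders. The variable name `ConnectionStrings__OrdersDb` relies on ASP.NET Core configuration mapping a double underscore to a colon. The application could then read the value with `GetConnectionString("OrdersDb")`.

```yaml
# Minimal sketch: hand an external database's connection string to an app.
# All names, the image, and the connection string are hypothetical placeholders.
apiVersion: v1
kind: Secret
metadata:
  name: orders-db
type: Opaque
stringData:
  connectionString: "Server=tcp:example.database.windows.net,1433;Database=orders;User ID=app;Password=change-me;Encrypt=True;"
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: orders-api
spec:
  selector:
    matchLabels:
      app: orders-api
  template:
    metadata:
      labels:
        app: orders-api
    spec:
      containers:
        - name: orders-api
          image: example.com/orders-api:latest   # hypothetical image
          env:
            # ASP.NET Core maps ConnectionStrings__OrdersDb to ConnectionStrings:OrdersDb.
            - name: ConnectionStrings__OrdersDb
              valueFrom:
                secretKeyRef:
                  name: orders-db
                  key: connectionString
```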

Red Hat OpenShift Data Foundation

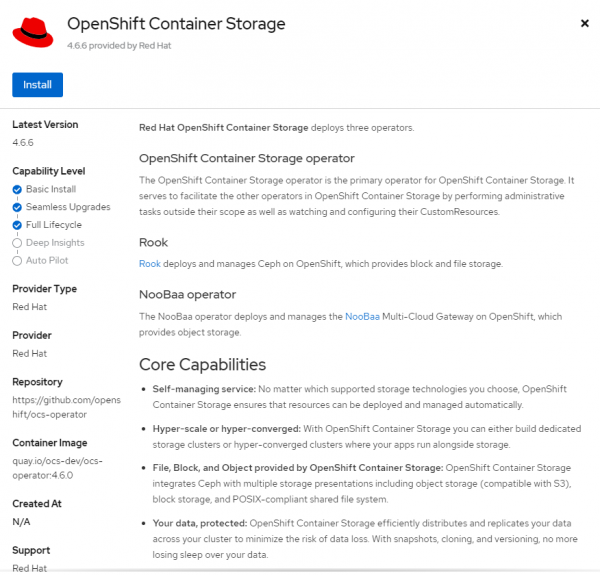

Finally (for this article), Red Hat OpenShift Data Foundation (previously Red Hat OpenShift Container Storage) is simple to install using the OpenShift Data Foundation operator. OpenShift Data Foundation introduces a way to use Ceph for file system storage, adding data resilience, snapshots, backups, and much more. This technology will be covered in a separate article. Figure 1 offers just a small taste of what the operator brings.
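
Once OpenShift Data Foundation is installed, applications consume it through ordinary PVCs that name one of its storage classes, and the PV is provisioned on demand instead of by hand. The minimal sketch below assumes the `ocs-storagecluster-cephfs` class that a default installation typically creates; check which classes your cluster actually has. The claim name is hypothetical.

```yaml
# Minimal sketch: a claim dynamically provisioned by OpenShift Data Foundation.
# The storage class name assumes a default installation; the claim name is hypothetical.
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: cephfs-data
spec:
  storageClassName: ocs-storagecluster-cephfs
  volumeMode: Filesystem
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 5Gi
```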

Coming up next

In the next article in this series, we'll introduce the concepts of ReadWriteOnce (RWO), ReadWriteMany (RWX), and Object Bucket Claims (OBC), and explore the advantages of OpenShift Data Foundation. In the meantime, check out the OpenShift Data Foundation website to learn more. You can also experiment with Kubernetes and OpenShift in the free Developer Sandbox for Red Hat OpenShift.

Last updated: October 31, 2023