This article describes how to write, configure, and install a simple Kubernetes validating admission webhook. The webhook intercepts and validates PrometheusRule object creation requests to prevent users from creating rules with invalid fields.

A key benefit of this approach is that your clusters will only contain prevalidated user-defined rules, resulting in uncluttered configuration across environments. Additionally, imagine there is an external alerting system that leverages fields in these customer-provided rules to make alerting decisions. It is important to ensure the rules are properly formatted, so the alerts are forwarded to the appropriate teams with the correct information.

The example here is quite simple, but it can serve as a starting point for cleaner Prometheus installations with fewer configuration errors.

Overview of Kubernetes admission control

When operating clusters on Red Hat OpenShift or Kubernetes, we often need to mutate, validate, or convert REST API requests for object creation.

Such operations occur at admission time, that is, right after the request is authenticated and authorized, but before the object is persisted to etcd. This step in the kube-apiserver admission chain is where control decisions are enforced.

A common example used in existing documentation is the LimitRanger admission controller. This is a great example because the end user immediately understands that their configuration must be changed to schedule new workloads. Once pods are denied admission for a specific reason, there is no further need to progress into the pod scheduling phase.

For our example, we will focus on ValidatingAdmissionWebhook admission controllers and the steps required to write and deploy one using the Go-based controller-runtime webhook package.

An example validating admission webhook

Before diving into the code, it might be useful to explain further and visualize our example. We are going to create a validating admission webhook that will be invoked each time a new PrometheusRule is created.

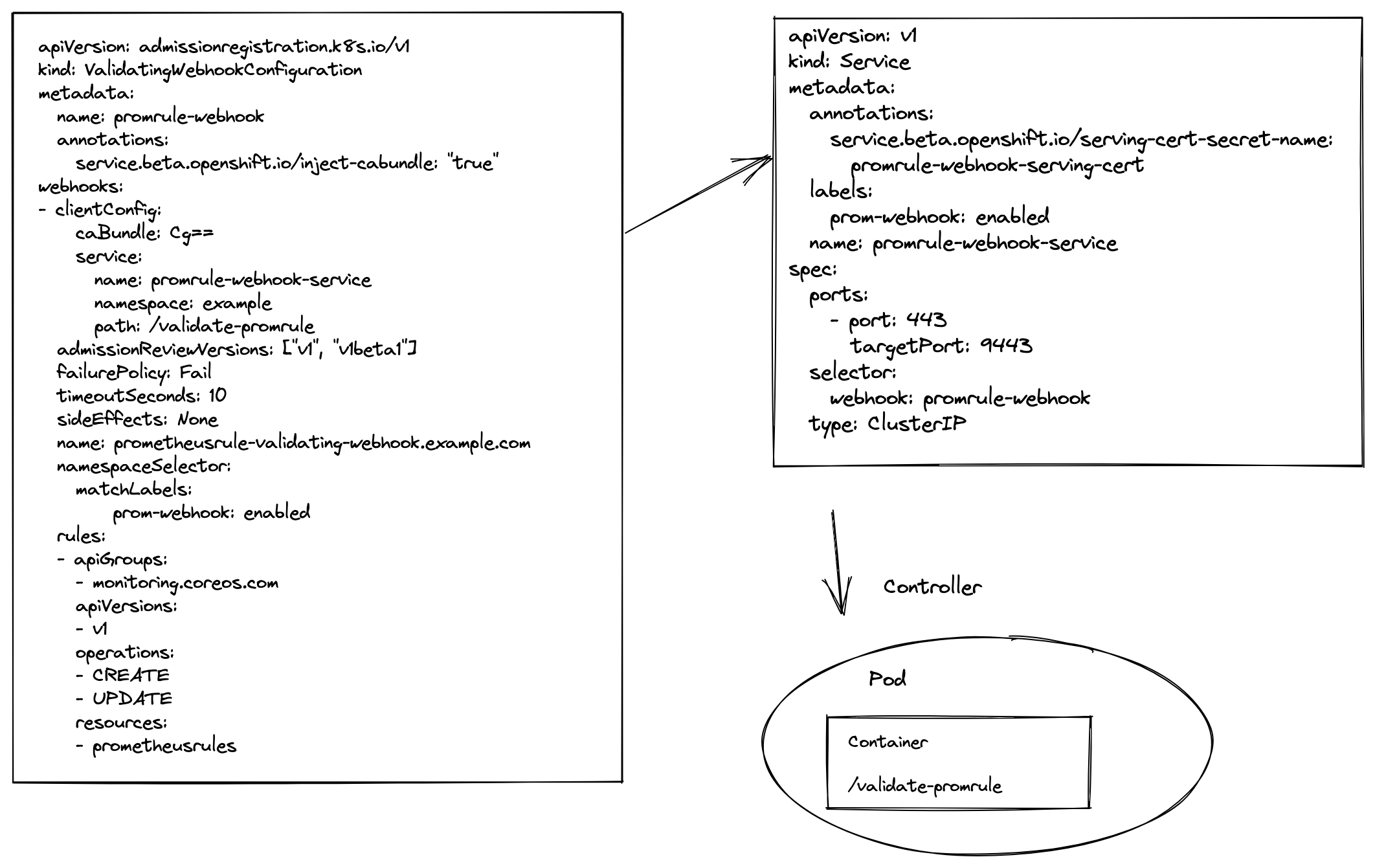

To configure the API server to forward requests to our validation code, it is necessary to create a ValidatingWebhookConfiguration with a specified service to forward requests to, as defined in webhooks.clientConfig (see Figure 1). This service will be called every time a PrometheusRule is created or updated, as seen in the rules section. Also noteworthy is the namespaceSelector, which only considers AdmissionReview objects for namespaces with the prom-webhook: enabled label.
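The selection described above can be modeled in a few lines of Go. This is only a minimal sketch of the idea, not the API server's actual matching code: the `request` type and the `shouldCallWebhook` function are hypothetical names, and the group `monitoring.coreos.com` is assumed to be the API group of PrometheusRule.

```go
package main

import "fmt"

// request is a simplified, hypothetical view of an incoming API request.
type request struct {
	Operation string // for example "CREATE", "UPDATE", or "DELETE"
	Group     string // API group of the resource
	Resource  string // plural resource name
}

// shouldCallWebhook mimics, in a very reduced form, the two checks described
// above: the rules section (operation and resource) and the
// namespaceSelector (a label on the namespace the request targets).
func shouldCallWebhook(req request, namespaceLabels map[string]string) bool {
	operationMatches := req.Operation == "CREATE" || req.Operation == "UPDATE"
	resourceMatches := req.Group == "monitoring.coreos.com" && req.Resource == "prometheusrules"
	namespaceMatches := namespaceLabels["prom-webhook"] == "enabled"
	return operationMatches && resourceMatches && namespaceMatches
}

func main() {
	req := request{Operation: "CREATE", Group: "monitoring.coreos.com", Resource: "prometheusrules"}

	// Namespace carries the label: the webhook is called.
	fmt.Println(shouldCallWebhook(req, map[string]string{"prom-webhook": "enabled"}))

	// Namespace has no label: the request skips the webhook.
	fmt.Println(shouldCallWebhook(req, map[string]string{}))
}
```

Requests that do not match never reach the webhook service, which is why labeling a namespace is enough to opt it in or out of validation.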

Validating webhooks are called in parallel and are independent of each other, even when acting on the same resource. A request is admitted only if every matching webhook allows it.

Because the test cluster has Red Hat OpenShift 4.6 installed, it is important to mention that a webhook for PrometheusRules already exists by default: validatingwebhookconfiguration/prometheusrules.openshift.io, which is part of the cluster monitoring stack. This is useful to know so that we don't choose the same REST endpoint path for our handler function. We also won't be allowed to name our webhook resource the same way.

Bootstrap with the Operator SDK

We will use the Operator SDK to initialize our project with a base structure that we can use to start coding.

To start, we can use the operator-sdk init command, passing in an example domain and a repository as parameters. The goal is to keep things as simple as possible since we won't be using much of the generated code, even though the generated manifests and scaffolding are very useful to have. A go.mod file and a go.sum file are also generated for us in the process:

    $ operator-sdk init --domain=example.com --repo=github.com/example/promrule-webhook
    Writing kustomize manifests for you to edit...
    Writing scaffold for you to edit...
    Get controller runtime:
    $ go get sigs.k8s.io/controller-runtime@v0.8.3
    go: downloading k8s.io/apimachinery v0.20.2
    go: downloading k8s.io/client-go v0.20.2
    go: downloading k8s.io/api v0.20.2
    go: downloading k8s.io/apiextensions-apiserver v0.20.1
    go: downloading k8s.io/component-base v0.20.2
    Update dependencies:
    $ go mod tidy

Given that we will be implementing a small REST handler, we don't need to generate any of the additional boilerplate code that is created by the operator-sdk create api and create webhook commands. What matters is that we now have a base starting point to implement our logic.

Set up a controller manager

The following code snippet contains the basics for setting up a controller manager, which is then used to instantiate a webhook server:

    package main

    import (
        "os"

        _ "k8s.io/client-go/plugin/pkg/client/auth/gcp"
        "sigs.k8s.io/controller-runtime/pkg/client/config"
        "sigs.k8s.io/controller-runtime/pkg/log"
        "sigs.k8s.io/controller-runtime/pkg/log/zap"
        "sigs.k8s.io/controller-runtime/pkg/manager"
        "sigs.k8s.io/controller-runtime/pkg/manager/signals"
        "sigs.k8s.io/controller-runtime/pkg/webhook"

        webhookadmission "github.com/rflorenc/prometheusrule-validating-webhook/admission"
    )

    func init() {
        log.SetLogger(zap.New())
    }

    func main() {
        setupLog := log.Log.WithName("entrypoint")

        // set up a manager
        setupLog.Info("setting up manager")
        mgr, err := manager.New(config.GetConfigOrDie(), manager.Options{})
        if err != nil {
            setupLog.Error(err, "unable to set up controller manager")
            os.Exit(1)
        }

        // +kubebuilder:scaffold:builder

        // the webhook server is owned by the manager and started with it
        setupLog.Info("setting up webhook server")
        hookServer := mgr.GetWebhookServer()

        setupLog.Info("registering PrometheusRule validating webhook endpoint")
        hookServer.Register("/validate-promerule", &webhook.Admission{Handler: &webhookadmission.PrometheusRuleValidator{Client: mgr.GetClient()}})

        setupLog.Info("starting manager")
        if err := mgr.Start(signals.SetupSignalHandler()); err != nil {
            setupLog.Error(err, "unable to run manager")
            os.Exit(1)
        }
    }

Once the hookServer is created, we just have to register the previously configured REST endpoint. We do this by passing it a Handler{} and a client, which the manager provides. The remaining code was generated from the initial bootstrap via the Operator SDK and starts the manager with the default configuration, logging, and signal handling.

A Prometheus rule validator

The PrometheusRuleValidator is a helper struct that receives a client to talk with the kube API and (more importantly) defines a pointer to an admission decoder:

    package admission

    import (
        "context"
        "fmt"
        "net/http"

        monitoringv1 "github.com/prometheus-operator/prometheus-operator/pkg/apis/monitoring/v1"
        "sigs.k8s.io/controller-runtime/pkg/client"
        "sigs.k8s.io/controller-runtime/pkg/webhook/admission"
    )

    // +kubebuilder:webhook:path=/validate-promerule,mutating=false,failurePolicy=fail,groups=monitoring.coreos.com,resources=prometheusrules,verbs=create;update,versions=v1,name=prometheusrule-validating-webhook.example.com

    // PrometheusRuleValidator validates PrometheusRules
    type PrometheusRuleValidator struct {
        Client  client.Client
        decoder *admission.Decoder
    }

    // Handle admits a PrometheusRule only if every rule carries a specific set of labels
    func (v *PrometheusRuleValidator) Handle(ctx context.Context, req admission.Request) admission.Response {
        prometheusRule := &monitoringv1.PrometheusRule{}

        err := v.decoder.Decode(req, prometheusRule)
        if err != nil {
            return admission.Errored(http.StatusBadRequest, err)
        }

        for _, group := range prometheusRule.Spec.Groups {
            for _, rule := range group.Rules {
                _, foundSeverity := rule.Labels["severity"]
                _, foundResponseCode := rule.Labels["example_response_code"]
                _, foundAlertingEmail := rule.Labels["example_alerting_email"]

                // deny on the first rule that lacks any of the required labels
                if !foundSeverity || !foundResponseCode || !foundAlertingEmail {
                    return admission.Denied(fmt.Sprintf("Missing one or more of minimum required labels. severity: %v, example_response_code: %v, example_alerting_email: %v", foundSeverity, foundResponseCode, foundAlertingEmail))
                }
            }
        }

        return admission.Allowed("Rule admitted by PrometheusRule validating webhook.")
    }

    // InjectDecoder is called by the webhook server to hand over the admission decoder
    func (v *PrometheusRuleValidator) InjectDecoder(d *admission.Decoder) error {
        v.decoder = d
        return nil
    }

As the name suggests, the InjectDecoder() function injects an admission decoder into the PrometheusRuleValidator. But why do we need decoding in the first place?

When a target object is received for admission, it arrives wrapped in an AdmissionReview object, as shown in the following example. The wrapped object has to be decoded before its fields can be inspected:

    {
      "kind": "AdmissionReview",
      "apiVersion": "admission.k8s.io/v1beta1",
      "request": {
        "uid": "800ab934-1343-01e8-b8cd-45010b400002",
        "resource": {
          "group": "monitoring.coreos.com",
          "version": "v1",
          "resource": "prometheusrules"
        },
        "name": "foo",
        "namespace": "test",
        "operation": "CREATE",
        "object": {
          "apiVersion": "monitoring.coreos.com/v1",
          "kind": "PrometheusRule",
          "metadata": {
            "annotations": {
              "abc": "[]"
            },
            "labels": {
              "def": "true"
            },
            "name": "foo",
            "namespace": "test"
          },
          "spec": {
            ...
          }
        }
      }
    }
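To make the wrapping concrete, the two-step decoding can be sketched with nothing but the Go standard library. This is an illustration under stated assumptions, not how controller-runtime implements its decoder: the `admissionReview` and `ruleObject` types are hypothetical and model only a handful of fields, and `decodeObject` is a name invented for this example.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// admissionReview models only the fields this sketch needs.
type admissionReview struct {
	Request struct {
		UID       string          `json:"uid"`
		Operation string          `json:"operation"`
		Object    json.RawMessage `json:"object"` // the target object, still encoded
	} `json:"request"`
}

// ruleObject is a hypothetical, reduced stand-in for a PrometheusRule.
type ruleObject struct {
	Kind     string `json:"kind"`
	Metadata struct {
		Name      string `json:"name"`
		Namespace string `json:"namespace"`
	} `json:"metadata"`
}

// decodeObject first unwraps the AdmissionReview and then decodes the
// object embedded in request.object.
func decodeObject(body []byte) (ruleObject, error) {
	var review admissionReview
	var obj ruleObject
	if err := json.Unmarshal(body, &review); err != nil {
		return obj, fmt.Errorf("decoding AdmissionReview: %w", err)
	}
	if len(review.Request.Object) == 0 {
		return obj, fmt.Errorf("AdmissionReview carries no object")
	}
	if err := json.Unmarshal(review.Request.Object, &obj); err != nil {
		return obj, fmt.Errorf("decoding embedded object: %w", err)
	}
	return obj, nil
}

func main() {
	body := []byte(`{"kind":"AdmissionReview","request":{"uid":"1","operation":"CREATE",
		"object":{"kind":"PrometheusRule","metadata":{"name":"foo","namespace":"test"}}}}`)

	obj, err := decodeObject(body)
	if err != nil {
		fmt.Println("error:", err)
		return
	}
	fmt.Println(obj.Kind, obj.Metadata.Namespace+"/"+obj.Metadata.Name)
}
```

The admission decoder does this job for us in the webhook, including the handling of typed Kubernetes objects, so the handler never has to touch the raw JSON.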

So, to decode a PrometheusRule from the "parent" object, we first instantiate an empty PrometheusRule and then pass it to the Decode() function together with the request that carries the AdmissionReview:

    err := v.decoder.Decode(req, prometheusRule)
    if err != nil {
        return admission.Errored(http.StatusBadRequest, err)
    }

Once this JSON body decoding step is done, we can access the PrometheusRule fields directly. We then allow or deny object admission based on simple validations. If it is allowed, the object will be persisted to etcd.
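The validation itself is easy to isolate from the webhook plumbing. The following stand-alone sketch checks the same three labels the handler looks for, but on a plain map; `requiredLabels` and `missingLabels` are hypothetical names introduced here, not part of the example project.

```go
package main

import (
	"fmt"
	"strings"
)

// requiredLabels lists the labels the validator in this article looks for.
var requiredLabels = []string{"severity", "example_response_code", "example_alerting_email"}

// missingLabels returns the required labels that are absent from a rule's
// label set, in the order they are listed in requiredLabels.
func missingLabels(labels map[string]string) []string {
	var missing []string
	for _, name := range requiredLabels {
		if _, ok := labels[name]; !ok {
			missing = append(missing, name)
		}
	}
	return missing
}

func main() {
	labels := map[string]string{"severity": "critical"}

	if missing := missingLabels(labels); len(missing) > 0 {
		fmt.Println("denied, missing labels:", strings.Join(missing, ", "))
		return
	}
	fmt.Println("allowed")
}
```

Keeping the check in a pure function like this makes it straightforward to unit test without a cluster, and a denial message that names the missing labels tells the user exactly what to fix.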

Validating webhook authorization

Because the Kubernetes API server calls a ValidatingWebhook over HTTPS, the webhook must serve a TLS certificate that the API server can verify. Certificate handling is therefore mandatory.

In Red Hat OpenShift, we can annotate a ValidatingWebhookConfiguration object with service.beta.openshift.io/inject-cabundle=true to have the webhook's clientConfig.caBundle field populated with the service CA bundle. This allows the Kubernetes API server to validate the service CA certificate used to secure the targeted endpoint.

Note that in the example ValidatingWebhookConfiguration, we specify a dummy clientConfig.caBundle. The value doesn't matter here because it will be injected based on the annotation.

For the container running our code to access the service CA certificate, it has to mount a ConfigMap object annotated with service.beta.openshift.io/inject-cabundle=true. Once annotated, the cluster automatically injects the service CA certificate into the service-ca.crt key on the ConfigMap:

    volumeMounts:
      # default path if manager.Options.CertDir is not overridden
      # /tmp/k8s-webhook-server/serving-certs/tls.{key,crt} must exist.
      - mountPath: /tmp/k8s-webhook-server/serving-certs
        name: serving-cert

You can specify an alternate path and mount point by setting the CertDir field of the controller manager Options struct in main.go.

When writing tests locally, for example, we need to either port-forward to our pod or authenticate to the API with a valid token and a valid TLS key and certificate combination before POSTing an AdmissionReview payload that wraps the JSON-encoded object.

Conclusion

Validating, mutating, and converting webhooks can be of great use in organizations looking for Kubernetes policy enforcement and object validation. On the other hand, having too many registered webhooks can become hard to manage. In edge cases, they can potentially cause scalability issues if not coded correctly, because they will be called every time a new target object is created. Therefore, webhooks should be used in moderation.

Before the operator-sdk and controller-runtime webhook packages were available, there were multiple nonstandard ways to implement such a project. Requirements such as coding the web server, REST request routing, JSON object decoding from AdmissionReview, and certificate handling could be met in all sorts of different ways. This article presented one alternative built on those packages.

See the GitHub repository associated with this learning project for a full example with a Helm installer.

Last updated: September 20, 2023