Building an oversaturation detector with iterative error analysis

Learn how we built a simple, rules-based algorithm to detect oversaturation in LLM performance benchmarks, reducing costs by more than a factor of 2.
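The summary does not spell out the detection rules. As an illustration only, here is a minimal sketch of one possible rules-based check. It assumes the benchmark records in-flight concurrency and time to first token (TTFT) over time, and it relies on the intuition that a server receiving requests faster than it can finish them shows both numbers climbing steadily. The `Sample` type, the `is_oversaturated` function, the window size, and the slope thresholds are all hypothetical. This is not the team's actual algorithm.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class Sample:
    t: float          # seconds since benchmark start (hypothetical field)
    concurrency: int  # requests in flight at time t (hypothetical field)
    ttft: float       # time to first token, in seconds, observed near t


def slope(xs, ys):
    """Ordinary least-squares slope of ys against xs."""
    mx, my = mean(xs), mean(ys)
    num = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    den = sum((x - mx) ** 2 for x in xs)
    return num / den if den else 0.0


def is_oversaturated(samples, window=20, min_conc_slope=0.5, min_ttft_slope=0.05):
    """Flag oversaturation when, over the most recent `window` samples,
    both concurrency and TTFT trend upward faster than the (assumed) thresholds."""
    if len(samples) < window:
        return False  # not enough data to judge a trend yet
    recent = samples[-window:]
    ts = [s.t for s in recent]
    conc_slope = slope(ts, [s.concurrency for s in recent])
    ttft_slope = slope(ts, [s.ttft for s in recent])
    return conc_slope > min_conc_slope and ttft_slope > min_ttft_slope


# A stable run keeps concurrency and TTFT flat, so it is not flagged.
stable = [Sample(float(i), 8, 0.2) for i in range(30)]
# An overloaded run accumulates requests and latency, so it is flagged.
growing = [Sample(float(i), 8 + i, 0.2 + 0.1 * i) for i in range(30)]
print(is_oversaturated(stable), is_oversaturated(growing))
```

Requiring both signals to rise is a deliberately conservative choice in this sketch: a brief concurrency spike with flat latency, or noisy latency with stable concurrency, does not stop the run on its own. A detector like this lets a benchmark end early once the trend is clear instead of spending GPU minutes on an overloaded server.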

Learn how the llm-d project is revolutionizing LLM inference by enabling distributed, efficient, and scalable model serving across Kubernetes clusters.

Learn how we built an algorithm to detect oversaturation in large language model (LLM) benchmarking, saving GPU minutes and reducing costs.

Simplify LLM post-training with the Training Hub library, which provides a common, Pythonic interface for running language model post-training algorithms.

Speculators standardizes speculative decoding for large language models, with a unified Hugging Face format, vLLM integration, and more.

Learn why prompt engineering is the most critical and accessible method for customizing large language models.

Oversaturation in LLM benchmarking can lead to wasted machine time and skewed performance metrics. Find out how one Red Hat team tackled the challenge.

Learn how to automatically transfer AI model metadata managed by OpenShift AI into Red Hat Developer Hub’s Software Catalog.

Integrate Red Hat OpenShift Lightspeed with a locally served large language model (LLM) for enhanced assistance within the OpenShift environment.

Explore the benefits of using Kubernetes, Context7, and GitHub MCP servers to diagnose issues, access up-to-date documentation, and interact with repositories.

Dive into LLM post-training methods, from supervised fine-tuning and continual learning to parameter-efficient and reinforcement learning approaches.

Learn how to deploy LLMs on Red Hat OpenShift AI for Ansible Lightspeed, enabling on-premise inference and optimizing resource utilization.

Discover the advantages of vLLM, an open source inference server that speeds up generative AI applications by making better use of GPU memory.

Celebrate our mascot Repo's first birthday with us as we look back on the events that shaped Red Hat Developer and the open source community from the past year.

Learn how to deploy multimodal AI models on edge devices using the RamaLama CLI, from pulling your first vision language model (VLM) to serving it via an API.

Discover SDG Hub, an open framework for building, composing, and scaling synthetic data pipelines for large language models.

Learn about the 5 common stages of the inference workflow, from initial setup to edge deployment, and how AI accelerator needs shift throughout the process.

Learn how to implement spec coding, a structured approach to AI-assisted development that combines human expertise with AI efficiency.

Get a comprehensive guide to profiling a vLLM inference server on a Red Hat Enterprise Linux system equipped with NVIDIA GPUs.

This learning path explores running AI models, specifically large language

Move larger models from code to production faster with an enterprise-grade

Learn how to scale machine learning operations (MLOps) with an assembly line approach using configuration-driven pipelines, versioned artifacts, and GitOps.

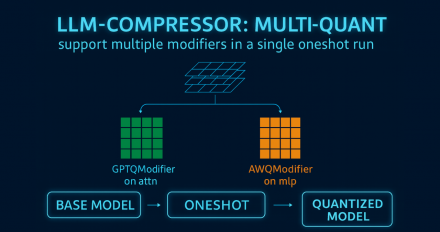

The LLM Compressor 0.8.0 release introduces quantization workflow enhancements, extended support for Qwen3 models, and improved accuracy recovery.

Learn how llm-d's KV cache aware routing reduces latency and improves throughput by directing requests to pods that already hold relevant context in GPU memory.