Prerequisites and step-by-step process

Prerequisites:

- Basic understanding of the Python programming language.

- Familiarity with containerization concepts.

- Familiarity with cloud-native concepts.

- The latest version of Podman Desktop installed on your workstation. If it is not already installed, download it from the Podman Desktop website.

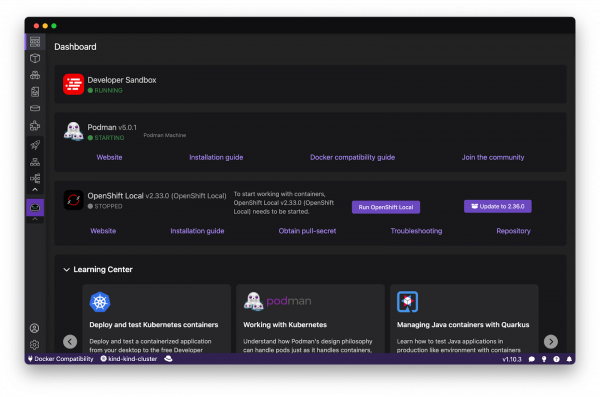

- Start Podman Machine once Podman Desktop is installed.

System and Software Prerequisites

- Software: Podman Desktop 1.10.3+ and Podman 5.0.1+.

- Hardware: At least 12 GB of RAM and 4 or more CPUs (more is better for large models).
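The software and CPU requirements above can be checked with a short script. The following is a minimal sketch, not an official tool: it assumes that `podman --version` prints a line containing a dotted version number (for example `podman version 5.0.1`), and the helper names `parse_version` and `meets_minimum` are hypothetical. It does not check RAM, because the Python standard library has no portable call for that.

```python
import os
import re
import shutil
import subprocess

# Minimum versions taken from the prerequisites listed above.
MIN_PODMAN = (5, 0, 1)
MIN_CPUS = 4


def parse_version(text):
    """Extract the first dotted version number in text as a tuple of ints."""
    match = re.search(r"(\d+)\.(\d+)\.(\d+)", text)
    if match is None:
        raise ValueError(f"no version number found in {text!r}")
    return tuple(int(part) for part in match.groups())


def meets_minimum(version_text, minimum=MIN_PODMAN):
    """Return True if the version found in version_text is at least minimum."""
    return parse_version(version_text) >= minimum


if __name__ == "__main__":
    # Assumption: the podman CLI is on PATH and supports --version.
    if shutil.which("podman") is None:
        print("podman was not found on PATH")
    else:
        result = subprocess.run(
            ["podman", "--version"], capture_output=True, text=True, check=False
        )
        print("Podman version OK:", meets_minimum(result.stdout))

    cpus = os.cpu_count() or 0  # cpu_count() may return None
    print("CPU count OK:", cpus >= MIN_CPUS)
```

Comparing tuples of integers avoids the pitfalls of comparing version strings, where `"5.10.0"` would sort before `"5.2.0"`.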

Step-by-step process

1. Setting Up Podman Desktop and the AI Lab Extension

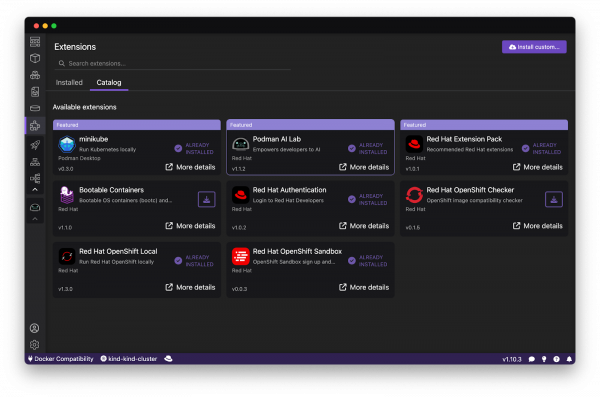

Follow the installation instructions for Podman Desktop and the AI Lab extension, then familiarize yourself with the extension's main features and interface. The extension can be installed as follows.

Directly from Podman Desktop:

- Open Podman Desktop (install it first if you have not already done so).

- Navigate to Extensions > Catalog.

- Search for Podman AI Lab and click Install. Alternatively, install Podman AI Lab through the Red Hat Extension Pack available in Podman Desktop.

Verification

After installation, the Podman AI Lab icon should appear in the navigation bar of Podman Desktop.

2. Download an AI Model

Podman AI Lab provides a catalog of recipes that showcase common AI use cases. Choose a recipe, configure it with your preferred settings, and run it to see the results. Each recipe includes detailed explanations and sample applications that can be run using different large language models (LLMs).

Podman AI Lab also includes a curated set of open-source AI models that you can use directly.

Custom Models: You can also import your own AI models to run within Podman AI Lab. For example, you can import a quantized GGUF model directly into your Podman Desktop AI Lab environment.
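Before importing a custom model, it can be useful to confirm that the file really is a GGUF file. The sketch below relies on the GGUF file layout, in which a file starts with the four magic bytes `GGUF` followed by a 32-bit format version. The function name `read_gguf_version` is hypothetical, and the code assumes the common little-endian encoding.

```python
import struct

GGUF_MAGIC = b"GGUF"


def read_gguf_version(path):
    """Return the GGUF format version of the file, or None if it is not GGUF."""
    with open(path, "rb") as handle:
        header = handle.read(8)  # 4 magic bytes + 4-byte version
    if len(header) < 8 or header[:4] != GGUF_MAGIC:
        return None
    # Assumption: little-endian file, which is the common case.
    (version,) = struct.unpack("<I", header[4:8])
    return version
```

A return value of `None` indicates that the file is in another format (or truncated) and is unlikely to load as a GGUF model.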

Select a model from the catalog and download it locally to your workstation. Models can be downloaded in widely used formats, including GGUF, PyTorch, and TensorFlow. For this example, we downloaded the Mistral-7B model from Hugging Face.

3. Start the inference server

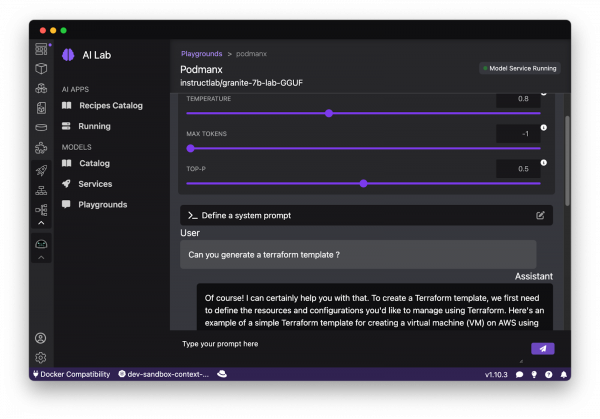

Open the Podman Desktop AI Lab interface by selecting the "Playgrounds" option from the left sidebar. In the Playgrounds area, click on "New Playground" to set up a new experimental environment. Here, you can name your new environment and choose a downloaded model to utilize.

Once configured, Podman Desktop AI Lab will initiate a containerized model server. This setup includes an interactive interface that allows you to send queries and view the responses. The Playground is housed within an isolated Podman Pod, which simplifies the underlying infrastructure, enabling straightforward model inference using an OpenAI-compatible API.
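Because the model server exposes an OpenAI-compatible API, it can also be called from your own Python code. The following is a minimal sketch using only the standard library. The base URL, port, and model name are placeholders (assumptions, not values from this walkthrough); replace them with the values shown for your running model service. The `/chat/completions` path and the response shape follow the OpenAI chat completions convention.

```python
import json
import urllib.request

# Assumption: placeholder address; use the host and port of your model service.
BASE_URL = "http://localhost:8000/v1"


def build_chat_request(prompt, model="placeholder-model", temperature=0.7,
                       max_tokens=256, top_p=0.9, base_url=BASE_URL):
    """Build (but do not send) an OpenAI-style chat completion request."""
    payload = {
        "model": model,  # hypothetical name; some servers ignore this field
        "messages": [{"role": "user", "content": prompt}],
        "temperature": temperature,
        "max_tokens": max_tokens,
        "top_p": top_p,
    }
    return urllib.request.Request(
        url=f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send_chat_request(request, timeout=60):
    """Send the request and return the text of the first completion choice."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        body = json.loads(response.read().decode("utf-8"))
    return body["choices"][0]["message"]["content"]
```

With a model service running, `send_chat_request(build_chat_request("Hello"))` would return the model's reply as a string. Separating request construction from sending keeps the payload easy to inspect and test without a live server.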

After the environment is ready, you can access the Playground dashboard. This dashboard serves as your interactive interface for sending prompts to the model and viewing the outputs.

The Playground provides several adjustable parameters designed to tailor model behavior to specific development and data science applications:

- Temperature: This setting modulates the degree of stochasticity in the model's responses. Lower temperature values result in outputs that are more deterministic and focused, which is useful for tasks requiring high accuracy. Higher values introduce more randomness, enhancing creativity and potentially leading to novel insights or solutions.

- Max Tokens: This parameter sets the upper limit on the length of the model's output, thereby controlling verbosity. It’s crucial for managing both the computational cost of generating responses and the practicality of their analysis in data-heavy environments.

- Top-p: This parameter (also called nucleus sampling) restricts token selection to the smallest set of candidate tokens whose cumulative probability reaches p. Lower values keep only the most likely tokens and yield more focused output; higher values admit less likely tokens and increase diversity, which can help in exploratory work or content generation.

Leverage these settings within the Podman AI Lab's Playground to systematically optimize your model according to specific criteria.
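To build intuition for how temperature and top-p interact, the sketch below applies both to a toy list of token scores. It illustrates the general technique (temperature-scaled softmax followed by nucleus filtering) and is not the code the model server actually runs. The function names and example values are hypothetical.

```python
import math


def softmax_with_temperature(logits, temperature):
    """Convert raw scores to probabilities; lower temperature sharpens them."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [value / temperature for value in logits]
    peak = max(scaled)  # subtract the maximum for numerical stability
    exps = [math.exp(value - peak) for value in scaled]
    total = sum(exps)
    return [value / total for value in exps]


def top_p_filter(probs, top_p):
    """Keep the most likely tokens until their cumulative probability
    reaches top_p, then renormalize the kept probabilities."""
    order = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    kept, cumulative = [], 0.0
    for index in order:
        kept.append(index)
        cumulative += probs[index]
        if cumulative >= top_p:
            break
    total = sum(probs[i] for i in kept)
    return {i: probs[i] / total for i in kept}


if __name__ == "__main__":
    logits = [2.0, 1.0, 0.1]  # toy scores for three candidate tokens
    for temperature in (0.5, 1.0, 2.0):
        probs = softmax_with_temperature(logits, temperature)
        print(temperature, [round(p, 3) for p in probs])
    print(top_p_filter([0.5, 0.3, 0.15, 0.05], top_p=0.7))
```

Running the script shows the probability of the top token rising as the temperature falls, and the top-p filter dropping the low-probability tail before sampling.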

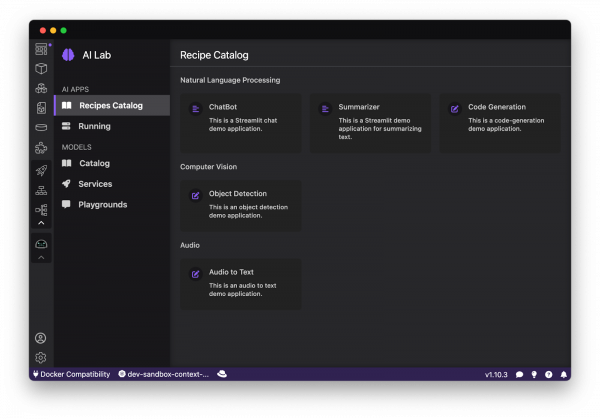

Recipes Catalog

The containerized AI recipes allow developers to quickly set up and prototype AI applications directly on their local machines. Recipes are built with at least two fundamental components: a model server and an AI application. The AI recipes cover a wide range of AI functionalities including audio processing, computer vision, and natural language processing. The sample applications rely on the llamacpp_python model server by default.

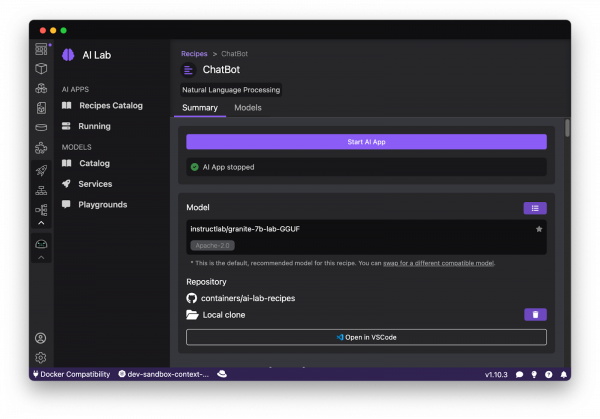

Click Start AI App to launch the selected recipe.

Summary

The Podman Desktop AI Lab extension simplifies developing with AI locally. It offers essential open-source tools for AI development and a curated selection of "recipes" that guide users through various AI use cases and models. AI Lab also includes "playgrounds" where users can experiment with and evaluate AI models, such as chatbots.