
Implementation deep dive

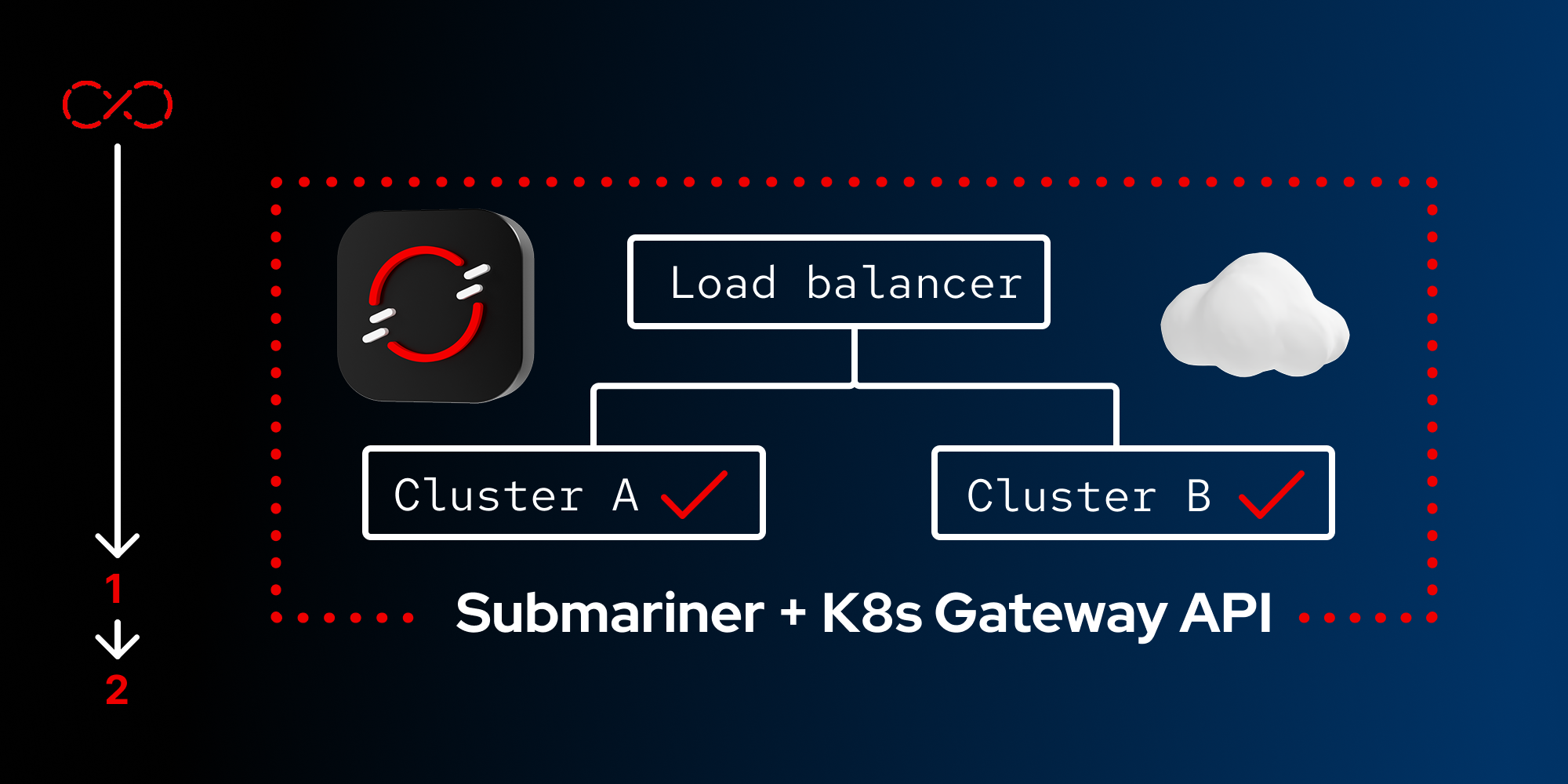

In Lesson 1, we explored the architecture for achieving robust multi-cluster application resiliency with Red Hat technologies. We discussed the common challenges organizations face and outlined how Red Hat OpenShift, Submariner, OpenShift Service Mesh, and the Gateway API integrate to create a seamless networking fabric across diverse environments.

Now, in Lesson 2, we shift from theory to practice. This installment provides a hands-on guide to implementing the solution, featuring a detailed walkthrough of the necessary Kubernetes manifests and deployment considerations. Let's dive into the specifics of bringing this powerful architecture to life in your own OpenShift environment.

Prerequisites:

- Red Hat OpenShift Container Platform: Minimum v4.18.

- Red Hat OpenShift Service Mesh: Version 3.x (OSSM3) (Technology Preview for OpenShift 4.18). Includes Istio and Gateway API components.

- Kubernetes Gateway API: Used for Ingress. "Supported Preview" in OpenShift 4.18; targeted for full general availability (GA) in later versions.

- Submariner: GA for OpenShift 4.18, providing foundational multi-cluster connectivity.

In this lesson, you will:

- Implement the solution for achieving robust multi-cluster application resiliency.

Application

We start by setting up the workload and the Services for our application. Think of it as telling the network, "Hey, this app lives here!" using Kubernetes manifests.
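Everything in this section hinges on one naming convention: Submariner's Lighthouse DNS resolves an exported Service as `<service>.<namespace>.svc.clusterset.local`, and the ExternalName Service below simply aliases that name. As a minimal sketch (standard-library Python; `clusterset_dns_name` and `external_name_service` are hypothetical helpers, and the names in the example are placeholders), the bridge Service can be rendered as JSON, a format that `oc apply -f -` also accepts:

```python
import json


def clusterset_dns_name(service: str, namespace: str) -> str:
    """Return the clusterset DNS name that Submariner Lighthouse serves for an export."""
    return f"{service}.{namespace}.svc.clusterset.local"


def external_name_service(alias: str, service: str, namespace: str) -> dict:
    """Build the local ExternalName Service that aliases the clusterset name.

    alias plays the role of {service-name-external}; service and namespace
    identify the exported ClusterIP Service ({service-name}, {namespace-name}).
    """
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": alias, "namespace": namespace},
        "spec": {
            "type": "ExternalName",
            "externalName": clusterset_dns_name(service, namespace),
        },
    }


if __name__ == "__main__":
    # Placeholder names, for illustration only.
    manifest = external_name_service("echo-external", "echo", "demo")
    print(json.dumps(manifest, indent=2))
```

Keeping the name construction in one function avoids typos such as dropping the `svc` label from the clusterset hostname.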

Deployment:

```yaml
# --- Deployment: The Application Workload ---
# This is a standard Kubernetes Deployment that runs your application pods.
# It has no direct knowledge of the multi-cluster setup.
apiVersion: apps/v1
kind: Deployment
metadata:
  name: {deployment-name}
  labels:
    app.kubernetes.io/name: {deployment-name}
spec:
  selector:
    matchLabels:
      # The Service will use this label to find the pods.
      app.kubernetes.io/name: {deployment-name}
  replicas: 1
  template:
    metadata:
      labels:
        app.kubernetes.io/name: {deployment-name}
    spec:
      terminationGracePeriodSeconds: 0
      containers:
        - name: {deployment-name}
          # Public echo server image (hashicorp/http-echo on Docker Hub).
          image: docker.io/hashicorp/http-echo:1.0
          imagePullPolicy: IfNotPresent
          args:
            - -listen=:3000
            - -text=example-service
          ports:
            - name: app-port
              containerPort: 3000
          resources:
            requests:
              cpu: 0.125
              memory: 50Mi
```

Service (ExternalName):

```yaml
# --- Service (ExternalName): The Bridge to Multi-Cluster ---
# This is the crucial "bridge" in our architecture. It's a local service
# that acts as a DNS alias. Instead of pointing to local pods, it points
# to the multi-cluster DNS name provided by Submariner.
apiVersion: v1
kind: Service
metadata:
  name: {service-name-external}
  namespace: {namespace-name}
spec:
  # This field is the key: it points to the Submariner multi-cluster service name,
  # which has the form <service>.<namespace>.svc.clusterset.local.
  externalName: {service-name}.{namespace-name}.svc.clusterset.local
  type: ExternalName
```

Service (ClusterIP):

```yaml
# --- Service (ClusterIP): The Local Entry Point ---
# This is a standard internal service that provides a stable IP address
# and DNS name for your application pods within a single cluster.
# Submariner will monitor the health of the pods behind this service.
apiVersion: v1
kind: Service
metadata:
  name: {service-name}
  namespace: {namespace-name}
  labels:
    app.kubernetes.io/name: {service-name}
spec:
  type: ClusterIP
  selector:
    # Selects the pods from the Deployment; this value must equal the
    # app.kubernetes.io/name label in the Deployment's pod template.
    app.kubernetes.io/name: {deployment-name}
  ports:
    - name: {svc-name-port}
      port: {port-number}
      targetPort: app-port
      protocol: TCP
```

Enable Service Mesh without ambient mode

For OpenShift Service Mesh in sidecar (non-ambient) mode with discovery selectors, label the application namespaces (and the Gateway namespace, if separate) with the label that the control plane's discoverySelectors match (for example, istio-discovery=enabled). istiod ignores namespaces without that label, so their services are not discovered.
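Both the Service selector above and Istio's discoverySelectors use equality-based `matchLabels`. A small standard-library Python sketch of those rules (the helper names are hypothetical, and only `matchLabels` is modeled, not `matchExpressions`) shows why an unlabeled namespace is invisible to istiod and why a Service selector must repeat the Deployment's pod label exactly:

```python
from typing import Mapping, Sequence


def matches_labels(match_labels: Mapping[str, str], labels: Mapping[str, str]) -> bool:
    """matchLabels semantics: every key/value pair must be present in labels.

    An empty selector matches everything, as in Kubernetes.
    """
    return all(labels.get(key) == value for key, value in match_labels.items())


def discovered_namespaces(
    selectors: Sequence[Mapping[str, str]],
    namespaces: Mapping[str, Mapping[str, str]],
) -> list:
    """Namespaces istiod would watch for the given discoverySelectors.

    Selectors are ORed: a namespace is discovered if any selector matches it.
    With no selectors configured, every namespace is discovered.
    """
    if not selectors:
        return sorted(namespaces)
    return sorted(
        name
        for name, labels in namespaces.items()
        if any(matches_labels(selector, labels) for selector in selectors)
    )


if __name__ == "__main__":
    # Hypothetical cluster state: only labeled namespaces are discovered.
    cluster = {
        "demo": {"istio-discovery": "enabled"},
        "istio-system": {"istio-discovery": "enabled"},
        "unrelated": {},
    }
    print(discovered_namespaces([{"istio-discovery": "enabled"}], cluster))
```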

Deploying cluster-level resources

Input parameters:

- app-name = {application name}

- env = {environment name}

Submariner

Next, we deploy Submariner to connect our clusters. This involves an operator subscription, per-cluster configuration, and making sure the clusters know about each other through a shared broker.

Red Hat Advanced Cluster Management for Kubernetes (RHACM) deploys Submariner using a feature called the Submariner add-on. When you enable this add-on for a set of managed OpenShift clusters, RHACM automates the entire complex setup process:

- RHACM intelligently analyzes your selected clusters and automatically deploys the necessary Submariner components. It designates the hub cluster to run the central Broker and places a Gateway on one or more nodes in each of the other participating clusters.

- RHACM handles the complex configuration, including generating the necessary certificates and tokens for the gateways to securely connect to the broker.

- Once deployed, RHACM ensures the Submariner gateways establish encrypted IPsec tunnels between all connected clusters, creating the unified multi-cluster network.

RHACM is responsible for managing Submariner because Submariner is a foundational piece of the multi-cluster fabric.
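The SubmarinerConfig and ManagedClusterAddOn manifests in this section are identical for every managed cluster apart from the namespace, which must equal the ManagedCluster name. A minimal Python sketch, assuming you prefer to generate them rather than copy them (the helper names are hypothetical, and only a subset of the SubmarinerConfig fields is reproduced), emits one `v1` `List` that `oc apply -f -` accepts on the hub cluster:

```python
import json


def submariner_objects(cluster: str, gateways: int = 1) -> list:
    """Return the SubmarinerConfig and ManagedClusterAddOn for one managed cluster.

    Both objects live in the hub namespace named after the ManagedCluster.
    Only a subset of the SubmarinerConfig fields is shown.
    """
    config = {
        "apiVersion": "submarineraddon.open-cluster-management.io/v1alpha1",
        "kind": "SubmarinerConfig",
        "metadata": {"name": "submariner", "namespace": cluster},
        "spec": {
            "NATTEnable": True,
            "gatewayConfig": {"gateways": gateways},
            "cableDriver": "libreswan",
            "IPSecIKEPort": 500,
            "IPSecNATTPort": 4500,
            "NATTDiscoveryPort": 4900,
        },
    }
    addon = {
        "apiVersion": "addon.open-cluster-management.io/v1alpha1",
        "kind": "ManagedClusterAddOn",
        "metadata": {"name": "submariner", "namespace": cluster},
        "spec": {"installNamespace": "submariner-operator"},
    }
    return [config, addon]


def submariner_list(clusters: list) -> dict:
    """Wrap every cluster's objects in a single v1 List."""
    items = [obj for cluster in clusters for obj in submariner_objects(cluster)]
    return {"apiVersion": "v1", "kind": "List", "items": items}


if __name__ == "__main__":
    # Placeholder cluster names; they must match your ManagedCluster names.
    print(json.dumps(submariner_list(["cluster-a", "cluster-b"]), indent=2))
```

Generating the objects from one template keeps the two clusters' settings from drifting apart as the configuration evolves.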

Subscription: {submariner}:

```yaml
# --- Subscription for the Submariner operator ---
# You do not create this Subscription manually: the Submariner add-on creates it
# on each managed cluster after you deploy the ManagedClusterAddOn resource on
# the ACM hub cluster.
apiVersion: operators.coreos.com/v1alpha1
kind: Subscription
metadata:
  labels:
    operators.coreos.com/submariner.submariner-operator: ""
  name: submariner
  namespace: submariner-operator
spec:
  channel: stable-0.18
  installPlanApproval: Automatic
  name: submariner
  source: redhat-operators
  sourceNamespace: openshift-marketplace
```

SubmarinerConfig: {submariner}:

```yaml
# --- SubmarinerConfig for Cluster A ---
# This resource provides the specific configuration for the Submariner
# deployment on the managed cluster named by its namespace.
apiVersion: submarineraddon.open-cluster-management.io/v1alpha1
kind: SubmarinerConfig
metadata:
  name: submariner
  # This namespace must match the name of the ManagedCluster in ACM.
  namespace: {cluster-a}
spec:
  # NATTEnable is crucial for environments where gateway nodes are behind a NAT,
  # which is common in public cloud and some on-premises setups.
  NATTEnable: true
  # Specifies the number of nodes in the managed cluster that are designated
  # as Submariner gateways; use more than one for high availability.
  gatewayConfig:
    gateways: 1
  # The remaining fields configure the IPsec tunnel and related deployment options.
  Debug: false
  IPSecDebug: false
  IPSecIKEPort: 500
  IPSecNATTPort: 4500
  NATTDiscoveryPort: 4900
  airGappedDeployment: true
  cableDriver: libreswan
  forceUDPEncaps: false
  globalCIDR: ""
  haltOnCertificateError: true
  insecureBrokerConnection: false
  loadBalancerEnable: false
```

ManagedClusterAddOn: {submariner}:

```yaml
# --- ManagedClusterAddOn for Cluster A ---
# This resource tells ACM to enable the "submariner" add-on for a specific
# managed cluster. This is the trigger for the installation process.
apiVersion: addon.open-cluster-management.io/v1alpha1
kind: ManagedClusterAddOn
metadata:
  # The name of the add-on to enable.
  name: submariner
  # CRITICAL: The namespace must match the name of the ManagedCluster resource
  # in ACM that represents your first managed cluster (e.g., "cluster-a").
  namespace: {cluster-a}
spec:
  # Specifies the namespace on the managed cluster where the Submariner
  # operator and its components will be installed.
  installNamespace: submariner-operator
```

SubmarinerConfig: {submariner}:

```yaml
# --- SubmarinerConfig for Cluster B ---
# This resource provides the specific configuration for the Submariner
# deployment on the managed cluster named by its namespace.
apiVersion: submarineraddon.open-cluster-management.io/v1alpha1
kind: SubmarinerConfig
metadata:
  name: submariner
  # This namespace must match the name of the ManagedCluster in ACM.
  namespace: {cluster-b}
spec:
  # NATTEnable is crucial for environments where gateway nodes are behind a NAT,
  # which is common in public cloud and some on-premises setups.
  NATTEnable: true
  # Specifies the number of nodes in the managed cluster that are designated
  # as Submariner gateways; use more than one for high availability.
  gatewayConfig:
    gateways: 1
  # The remaining fields configure the IPsec tunnel and related deployment options.
  Debug: false
  IPSecDebug: false
  IPSecIKEPort: 500
  IPSecNATTPort: 4500
  NATTDiscoveryPort: 4900
  airGappedDeployment: true
  cableDriver: libreswan
  forceUDPEncaps: false
  globalCIDR: ""
  haltOnCertificateError: true
  insecureBrokerConnection: false
  loadBalancerEnable: false
```

ManagedClusterAddOn: {submariner}:

```yaml
# --- ManagedClusterAddOn for Cluster B ---
# This resource tells ACM to enable the "submariner" add-on for a specific
# managed cluster. This is the trigger for the installation process.
apiVersion: addon.open-cluster-management.io/v1alpha1
kind: ManagedClusterAddOn
metadata:
  # The name of the add-on to enable.
  name: submariner
  # CRITICAL: The namespace must match the name of the ManagedCluster resource
  # in ACM that represents your second managed cluster (e.g., "cluster-b").
  namespace: {cluster-b}
spec:
  # Specifies the namespace on the managed cluster where the Submariner
  # operator and its components will be installed.
  installNamespace: submariner-operator
```

ManagedClusterSet: {app-name}:

```yaml
# --- ManagedClusterSet: Grouping Clusters for Submariner ---
# This ACM resource defines a logical group of clusters that will be
# connected by Submariner.
apiVersion: cluster.open-cluster-management.io/v1beta2
kind: ManagedClusterSet
metadata:
  annotations:
    cluster.open-cluster-management.io/submariner-broker-ns: {broker-namespace}
  name: {submariner}-{cluster-a-cluster-b}
spec:
  clusterSelector:
    selectorType: ExclusiveClusterSetLabel
```

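```shell
$ oc label managedclusters {cluster-a} cluster.open-cluster-management.io/clusterset={submariner}-{cluster-a-cluster-b} --overwrite
$ oc label managedclusters {cluster-b} cluster.open-cluster-management.io/clusterset={submariner}-{cluster-a-cluster-b} --overwrite
```

The commands above add both managed clusters to the ManagedClusterSet. With the ExclusiveClusterSetLabel selector, a cluster joins the set whose name matches its clusterset label value, so both clusters must carry the same value. Once they are in the set, the Submariner add-on connects them through gateway nodes that route traffic over the encrypted tunnels; the number of gateway nodes per cluster comes from gatewayConfig in each SubmarinerConfig.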
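How the label turns into set membership can be modeled in a few lines. The Python sketch below is a simplified model of the ExclusiveClusterSetLabel selector (the function name is hypothetical): clusters land in the same ManagedClusterSet, and therefore share that set's Submariner broker, only when their `cluster.open-cluster-management.io/clusterset` values are identical.

```python
from collections import defaultdict
from typing import Dict, List

CLUSTERSET_LABEL = "cluster.open-cluster-management.io/clusterset"


def group_by_clusterset(cluster_labels: Dict[str, Dict[str, str]]) -> Dict[str, List[str]]:
    """Map each ManagedClusterSet name to the clusters whose label selects it.

    Models the ExclusiveClusterSetLabel selector: a cluster belongs to the set
    named by its clusterset label value, so it is in at most one such set.
    Clusters without the label are omitted in this sketch.
    """
    sets: Dict[str, List[str]] = defaultdict(list)
    for cluster in sorted(cluster_labels):
        set_name = cluster_labels[cluster].get(CLUSTERSET_LABEL)
        if set_name is not None:
            sets[set_name].append(cluster)
    return dict(sets)


if __name__ == "__main__":
    # Hypothetical labels: both clusters point at the same set name.
    labels = {
        "cluster-a": {CLUSTERSET_LABEL: "submariner-cluster-a-cluster-b"},
        "cluster-b": {CLUSTERSET_LABEL: "submariner-cluster-a-cluster-b"},
    }
    print(group_by_clusterset(labels))
```

Labeling each cluster with its own name instead would produce two single-member sets, and the clusters would never be connected.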

Gateway: {node-name}.{domain}:

```yaml
# --- Submariner Gateway ---
# Note: This is a Submariner-specific resource, not a Kubernetes Gateway API resource.
# It represents a node that has been designated as a Submariner gateway,
# responsible for routing traffic through the encrypted tunnels. This object is
# typically managed by the Submariner operator, not created manually.
apiVersion: submariner.io/v1
kind: Gateway
metadata:
  # The name of the gateway is typically the name of the worker node.
  name: {node-name}.{domain}
  # This resource lives in the namespace where the Submariner operator is installed.
  namespace: submariner-operator
  annotations:
    # When Globalnet is enabled, this annotation shows the globally unique IP
    # assigned to this gateway.
    submariner.io/globalIp: 242.0.255.253
spec: {}
```

Broker: {submariner-broker}:

```yaml
# --- Submariner Broker ---
# This resource is automatically created by the ACM submariner-addon in a
# dedicated namespace on the hub cluster. It acts as the central meeting point
# where all connected clusters exchange connectivity and service discovery metadata.
# After the ManagedClusterSet is created, the submariner-addon creates a
# namespace called <managed-cluster-set-name>-broker and deploys the
# Submariner broker to it.
apiVersion: submariner.io/v1alpha1
kind: Broker
metadata:
  name: submariner-broker
  # This namespace is auto-generated by ACM, named after the ManagedClusterSet.
  namespace: {ManagedClusterSet-name}-broker
  labels:
    # This label is used by ACM for backup and restore purposes.
    cluster.open-cluster-management.io/backup: submariner
spec:
  # When enabled, Globalnet provides a feature to handle overlapping CIDRs
  # between the connected clusters, which is a common issue in large enterprises.
  globalnetEnabled: true
  globalnetCIDRRange: 242.0.0.0/8
```

ServiceExport: {service-name}

```yaml
# --- ServiceExport: Advertising the Service ---
# This Submariner resource "advertises" the local ClusterIP service to all
# other clusters in the clusterset, making it discoverable.
apiVersion: multicluster.x-k8s.io/v1alpha1
kind: ServiceExport
metadata:
  # The name must match the local ClusterIP service you want to export.
  name: {service-name}
  namespace: {namespace-name}
```

ServiceImport: {service-name}

```yaml
# Note: You do not create this resource manually. It is created and managed
# automatically by the Submariner Lighthouse agent in any cluster that needs
# to consume a multi-cluster service.
apiVersion: multicluster.x-k8s.io/v1alpha1
kind: ServiceImport
metadata:
  # The name of the ServiceImport will match the name of the original service
  # that was exported from another cluster.
  name: {service-name}
  # It is created in the same namespace where the service was originally defined,
  # ensuring consistent naming across the cluster set.
  namespace: {namespace-name}
spec:
  # This section lists the ports that are exposed by the multi-cluster service.
  ports:
    - port: 8080
      protocol: TCP
  # The type 'ClusterSetIP' indicates that this service represents a stable IP
  # address shared across the clusterset.
  type: ClusterSetIP
```

Gateway API

Kubernetes Gateway API is the modern and official way to manage how network traffic enters and moves within a Kubernetes cluster. It is the powerful and flexible successor to the older, more limited Ingress API.
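Listener hostnames decide which HTTPRoutes a Gateway accepts, which matters for the wildcard `*.org.domain` listener used later in this section. Per the Gateway API specification, a `*.` prefix is a suffix match that covers one or more extra labels but not the bare domain. The Python function below is a simplified sketch of that rule for precise route hostnames only (the name `listener_accepts` is hypothetical; route-side wildcards and other edge cases are not modeled):

```python
from typing import Optional


def listener_accepts(listener_hostname: Optional[str], route_hostname: str) -> bool:
    """Check whether a precise route hostname falls under a listener hostname.

    Simplified from the Gateway API rules: an unset listener hostname accepts
    everything, a precise hostname must match exactly, and a "*." wildcard is a
    suffix match that requires at least one extra label.
    """
    if listener_hostname is None:
        return True
    host = route_hostname.lower()
    pattern = listener_hostname.lower()
    if pattern.startswith("*."):
        suffix = pattern[1:]  # keeps the leading dot, e.g. ".org.domain"
        return host.endswith(suffix) and len(host) > len(suffix)
    return host == pattern


if __name__ == "__main__":
    print(listener_accepts("*.org.domain", "app.org.domain"))  # True
    print(listener_accepts("*.org.domain", "org.domain"))  # False
```

A route whose hostname is not accepted by any listener does not attach to the Gateway, so the hostnames in an HTTPRoute must fall under the listener's pattern rather than copy it.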

Deploy the Gateway API CRDs (standard channel):

```shell
$ oc apply -f https://github.com/kubernetes-sigs/gateway-api/releases/latest/download/standard-install.yaml
```

Gateway: {app-name}:

```yaml
apiVersion: gateway.networking.k8s.io/v1
kind: Gateway
# Gateway represents an instance of a service-traffic handling infrastructure
# by binding Listeners to a set of IP addresses.
metadata:
  annotations:
    networking.istio.io/service-type: NodePort
  name: {app-name-gateway}
  namespace: {gateway-namespace-name}
spec:
  gatewayClassName: istio
  listeners:
    - allowedRoutes:
        namespaces:
          from: All
      name: http
      port: {port-number}
      protocol: HTTP
```

Alternative: Gateway exposed through a LoadBalancer Service, with a fixed port and TLS termination for secure communication:

```yaml
# This Gateway API resource defines the entry point for all external traffic.
# It specifies the port, protocol, and TLS settings for the ingress listener.
apiVersion: gateway.networking.k8s.io/v1
kind: Gateway
metadata:
  name: {app-name-gateway}
  namespace: {app-namespace-name}
spec:
  gatewayClassName: istio
  listeners:
    - name: https-default
      protocol: HTTPS # Ensures secure communication
      port: 4443 # Fixed, secure port for external access
      # A wildcard hostname for all applications; it must be quoted because an
      # unquoted leading * starts a YAML alias.
      hostname: "*.org.domain"
      tls:
        mode: Terminate # Performs TLS decryption at the Gateway
        certificateRefs:
          - group: ""
            kind: Secret
            name: {wildcard-tls-secret} # Name of the TLS secret (created by cert-manager)
            namespace: {secret-namespace} # Namespace where the TLS secret resides
      allowedRoutes:
        namespaces:
          from: Selector
          selector:
            matchLabels:
              shared-gateway-access: "true" # Allows HTTPRoutes from namespaces with this label to attach
```

ReferenceGrant: {allow-http-route}:

```yaml
apiVersion: gateway.networking.k8s.io/v1beta1
kind: ReferenceGrant
# ReferenceGrant identifies kinds of resources in other namespaces that are
# trusted to reference the specified kinds of resources in the same namespace
# as the policy.
# Note: In the Gateway API specification, cross-namespace attachment of an
# HTTPRoute to a Gateway is controlled by the listener's allowedRoutes;
# ReferenceGrant covers references such as Gateway-to-Secret and Route-to-Service.
metadata:
  name: {name} # allow-http-route
  namespace: {gateway-namespace-name}
spec:
  from:
    - group: gateway.networking.k8s.io
      kind: HTTPRoute
      # HTTPRoute provides a way to route HTTP requests. This includes the capability
      # to match requests by hostname, path, header, or query param. Filters can be
      # used to specify additional processing steps. Backends specify where matching
      # requests should be routed.
      namespace: {app-namespace-name}
  to:
    - group: gateway.networking.k8s.io
      kind: Gateway
      name: {gateway-name}
```

Alternative: ReferenceGrant {allow-http-route} that supports Secret and HTTPRoute references:

```yaml
# --- ReferenceGrant: Secure Cross-Namespace References ---
# This is a critical security resource for the Gateway API. It provides a way
# for a namespace owner to explicitly trust and allow resources from another
# namespace to reference objects within it.
apiVersion: gateway.networking.k8s.io/v1beta1
kind: ReferenceGrant
metadata:
  name: allow-gateway-references
  # This ReferenceGrant must be created in the target namespace (e.g., the application's namespace).
  namespace: {application-namespace}
spec:
  # The 'from' section specifies who is allowed to make the reference.
  from:
    # This allows a Gateway from the 'gateway-namespace' to reference objects here.
    - group: gateway.networking.k8s.io
      kind: Gateway
      namespace: {gateway-namespace}
  # The 'to' section specifies what objects they are allowed to reference.
  to:
    # This rule allows the trusted Gateway to reference Secrets (for TLS).
    - group: ""
      kind: Secret
      name: {secret-name}
    # This rule allows the trusted Gateway to reference a specific HTTPRoute.
    - group: gateway.networking.k8s.io
      kind: HTTPRoute
      name: {httproute-name}
```

HTTPRoute:

```yaml
# --- HTTPRoute: The Ingress Routing Rule ---
# This Gateway API resource defines how traffic reaching our main Gateway
# should be routed. It attaches to the Gateway and directs traffic to our
# ExternalName service, triggering the multi-cluster DNS lookup.
apiVersion: gateway.networking.k8s.io/v1
kind: HTTPRoute
metadata:
  name: {app-name}-httproute
spec:
  parentRefs:
    - group: gateway.networking.k8s.io
      kind: Gateway
      # Must match the name of the Gateway resource deployed by the platform team.
      name: {app-name-gateway} # subscribes to the created Gateway
      namespace: {gateway-namespace-name}
  hostnames:
    - "my-app.apps.yourcompany.com" # The public URL for this application
  rules:
    - backendRefs:
        # This directs all matching traffic to our "bridge" service.
        - group: ""
          kind: Service
          name: {service-name-external}
          port: {port-number}
          weight: 1
      matches:
        - path:
            type: PathPrefix
            value: /
```

GatewayClass: {istio}:

```yaml
# This is a cluster-scoped resource that defines a "type" or "class" of
# gateway that can be provisioned in the cluster. It acts as a template,
# linking a Gateway resource to the controller that will manage it.
#
# Note: You typically do not create this resource manually. It is automatically
# created by the Istio/OpenShift Service Mesh operator upon installation.
apiVersion: gateway.networking.k8s.io/v1
kind: GatewayClass
metadata:
  # The name of the GatewayClass. When you create a Gateway resource, you will
  # reference this name in its 'gatewayClassName' field.
  name: istio
spec:
  # The controller that is responsible for managing any Gateway objects of this class.
  # 'istio.io/gateway-controller' is the official name for the Istio controller.
  controllerName: istio.io/gateway-controller
  # A human-readable description of what this class provides.
  description: The default Istio GatewayClass
```

Note: You do not need to create this GatewayClass yourself; it is created automatically as part of the service mesh installation.
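The path from a Gateway to its controller is a two-step lookup: `gatewayClassName` names a GatewayClass, and that class's `controllerName` names the controller that reconciles the Gateway. A simplified Python model (hypothetical function, with in-memory dictionaries standing in for API objects) shows why, in this model, a Gateway that uses the `istio-remote` class is not picked up by `istio.io/gateway-controller`:

```python
from typing import Dict, List


def gateways_for_controller(
    controller_name: str,
    gateway_classes: Dict[str, str],
    gateways: Dict[str, str],
) -> List[str]:
    """Return the Gateways that a controller should reconcile.

    gateway_classes maps GatewayClass name -> spec.controllerName.
    gateways maps Gateway name -> spec.gatewayClassName.
    """
    owned_classes = {
        name for name, controller in gateway_classes.items() if controller == controller_name
    }
    return sorted(gw for gw, cls in gateways.items() if cls in owned_classes)


if __name__ == "__main__":
    classes = {
        "istio": "istio.io/gateway-controller",
        "istio-remote": "istio.io/unmanaged-gateway",
    }
    # Hypothetical Gateways: only the one using class "istio" is selected.
    gws = {"app-gateway": "istio", "remote-gateway": "istio-remote"}
    print(gateways_for_controller("istio.io/gateway-controller", classes, gws))
```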

GatewayClass: {istio-remote}:

```yaml
apiVersion: gateway.networking.k8s.io/v1
kind: GatewayClass
metadata:
  name: istio-remote
spec:
  controllerName: istio.io/unmanaged-gateway
  description: Remote to this cluster. Does not deploy or affect configuration.
```

Note: This GatewayClass is also created automatically as part of the service mesh installation; you do not need to create it.
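ReferenceGrant appears several times in this section, so it helps to pin down its rule: a cross-namespace reference is allowed only if a ReferenceGrant in the *target* namespace lists the source's group, kind, and namespace under `from`, and the target's group and kind (and optionally name) under `to`. The Python sketch below models that check with hypothetical types; it is a simplification for reasoning about the manifests, not how Istio implements it:

```python
from dataclasses import dataclass
from typing import Iterable, Optional, Tuple


@dataclass(frozen=True)
class ObjectRef:
    group: str  # "" for the core API group (e.g., Secret, Service)
    kind: str
    namespace: str
    name: str = ""


@dataclass(frozen=True)
class Grant:
    """Simplified ReferenceGrant; it lives in the namespace of the referenced objects."""
    namespace: str
    from_: Tuple[Tuple[str, str, str], ...]  # (group, kind, namespace)
    to: Tuple[Tuple[str, str, Optional[str]], ...]  # (group, kind, name or None for any)


def reference_allowed(grants: Iterable[Grant], source: ObjectRef, target: ObjectRef) -> bool:
    """Return True if source may reference target under the given grants."""
    if source.namespace == target.namespace:
        return True  # same-namespace references need no ReferenceGrant
    for grant in grants:
        if grant.namespace != target.namespace:
            continue
        from_ok = (source.group, source.kind, source.namespace) in grant.from_
        to_ok = any(
            group == target.group and kind == target.kind and name in (None, target.name)
            for group, kind, name in grant.to
        )
        if from_ok and to_ok:
            return True
    return False


if __name__ == "__main__":
    # Hypothetical namespaces and names, mirroring the TLS Gateway example.
    gateway = ObjectRef("gateway.networking.k8s.io", "Gateway", "gateway-ns", "app-gateway")
    secret = ObjectRef("", "Secret", "certs", "wildcard-tls")
    grant = Grant(
        namespace="certs",
        from_=(("gateway.networking.k8s.io", "Gateway", "gateway-ns"),),
        to=(("", "Secret", "wildcard-tls"),),
    )
    print(reference_allowed([grant], gateway, secret))  # True
    print(reference_allowed([], gateway, secret))  # False
```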

Service Mesh 3 (OSSM)

OpenShift Service Mesh is an infrastructure layer that provides a uniform way to connect, manage, and observe applications built from multiple independent services (microservices). It adds a layer of control over the network traffic between these services without requiring any changes to the application code itself.

Service Mesh focuses on managing the communication between services. It provides advanced traffic management, security (with mutual TLS), and observability for all east-west traffic within the mesh. The multi-cluster mesh deployment models and external control plane deployment models are Generally Available (GA) in OpenShift Service Mesh 3.0.2, meaning they are fully supported and suitable for production use. Within the context of multi-cluster setups, OpenShift Service Mesh 3.0 includes Kubernetes multi-cluster Service (MCS) discovery. This feature is currently available as a Developer Preview (DP) feature.

Istio: {istio}-{env}:

```yaml
# --- Istio: The Service Mesh Control Plane ---
# This custom resource, managed by the Sail operator, deploys and configures
# the Istio control plane (istiod) for the entire cluster.
apiVersion: sailoperator.io/v1alpha1
kind: Istio
metadata:
  name: {istio}-{env}
spec:
  # The namespace where the Istio control plane components will be installed.
  namespace: istio-system
  values:
    meshConfig:
      # This tells Istio to only manage namespaces with this specific label.
      discoverySelectors:
        - matchLabels:
            istio-discovery: enabled
  version: v1.24.1
```

IstioCNI: {default}:

```yaml
# --- IstioCNI: Integrating Istio with the Network ---
# This resource is managed by the Red Hat Sail operator (for OSSM 3).
# It installs the Istio CNI plugin on all nodes, which allows Istio to
# transparently redirect pod traffic to the Envoy proxy without needing
# privileged init containers. This is a more secure and efficient setup.
apiVersion: sailoperator.io/v1alpha1
kind: IstioCNI
metadata:
  name: default
spec:
  # The namespace where the Istio CNI daemonset will be installed.
  namespace: istio-cni
  version: v1.24.1
```

IstioRevision: {istio}-{env}:

```yaml
# --- IstioRevision: A Specific Istio Control Plane Version ---
# Note: As mentioned in the learning path, you typically do not create this
# resource directly. It is created and managed automatically by the higher-level
# 'Istio' custom resource. It represents a specific, deployed version of the
# Istio control plane (istiod).
apiVersion: sailoperator.io/v1alpha1
kind: IstioRevision
metadata:
  name: {istio-revision-name} # e.g., 'default' or 'istio-test'
  ownerReferences:
    # This section shows that this object is owned and managed by an 'Istio' resource.
    - apiVersion: sailoperator.io/v1alpha1
      kind: Istio
      name: default
spec:
  namespace: istio-system
  values:
    # This section contains the detailed configuration that is passed down to
    # the istiod deployment for this specific revision.
    global:
      platform: openshift
    pilot:
      # Specifies the exact container image for the istiod pilot component.
      image: 'registry.redhat.io/openshift-service-mesh-tech-preview/istio-pilot-rhel9@sha256:...'
    meshConfig:
      # This selector is inherited from the parent 'Istio' resource and tells this
      # control plane which namespaces to watch and manage.
      discoverySelectors:
        - matchLabels:
            istio-discovery: enabled
  version: v1.24.1
```

Note: The IstioRevision object is created automatically when the Istio object is created.

Deploying application-level resources

This section details the process of deploying application-level resources, focusing on how to configure network routing and service discovery for applications in a multi-cluster environment. It outlines the use of the Kubernetes Gateway API's HTTPRoute for managing ingress traffic to applications. It also covers ServiceEntry and ServiceImport resources for cross-cluster service communication and discovery: ServiceEntry makes the clusterset service name known to the mesh, and ServiceImport allows services from other clusters to be consumed. The following manifests live in the application's namespace.
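The HTTPRoutes that follow combine a `PathPrefix` match with weighted `backendRefs`. Per the Gateway API specification, a path prefix is compared element by element (so `/abc` does not match `/abcd`), and each backend receives `weight / sum(weights)` of the matching requests. A minimal Python sketch of both rules (hypothetical helper names; it ignores filters, header matches, and match precedence):

```python
from typing import Dict


def path_prefix_matches(prefix: str, path: str) -> bool:
    """Gateway API PathPrefix: compare '/'-separated path elements.

    A trailing '/' in the prefix is ignored, so '/abc' matches '/abc',
    '/abc/' and '/abc/def' but not '/abcd'. Matching is case sensitive.
    Empty elements (e.g., from '//') are dropped in this simplified sketch.
    """
    prefix_parts = [part for part in prefix.split("/") if part]
    path_parts = [part for part in path.split("/") if part]
    return path_parts[: len(prefix_parts)] == prefix_parts


def traffic_shares(weights: Dict[str, int]) -> Dict[str, float]:
    """Share of requests per backendRef: weight divided by the sum of weights."""
    total = sum(weights.values())
    if total == 0:
        return {name: 0.0 for name in weights}  # all-zero weights send no traffic
    return {name: weight / total for name, weight in weights.items()}


if __name__ == "__main__":
    print(path_prefix_matches("/AdminConsole", "/AdminConsole/users"))  # True
    print(path_prefix_matches("/AdminConsole", "/AdminConsoleX"))  # False
    print(traffic_shares({"app-external": 1}))  # {'app-external': 1.0}
```

With a single backendRef of weight 1, as in the manifests here, all matching traffic goes to the ExternalName Service.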

Input parameters:

- app-name = {application name}

- env = {environment name}

HTTPRoute: {app-name}-{gateway}:

```yaml
apiVersion: gateway.networking.k8s.io/v1
kind: HTTPRoute
metadata:
  name: {app-name}-{gateway}
spec:
  hostnames:
    - {app-name}-{gateway}-{app-ns}.example.com
  parentRefs:
    - group: gateway.networking.k8s.io
      kind: Gateway
      name: {app-name}-{gateway} # subscribes to the created Gateway
      namespace: {gateway-namespace}
  rules:
    - backendRefs:
        - group: ''
          kind: Service
          name: {ExternalName-service-name}
          port: {service-port-number}
          weight: 1
      matches:
        - path:
            type: PathPrefix
            value: /
```

Alternative HTTPRoute: {app-name}-{gateway}:

```yaml
apiVersion: gateway.networking.k8s.io/v1
kind: HTTPRoute
metadata:
  name: {httproute-name}
  namespace: {app-namespace} # Namespace where your application service resides
spec:
  parentRefs:
    - group: gateway.networking.k8s.io
      kind: Gateway
      name: {gateway-name} # References the name of your Gateway resource
      namespace: {gateway-namespace} # References the namespace of your Gateway
  hostnames:
    # Must be accepted by the Gateway listener's hostname
    # (for example, app.org.domain under a *.org.domain listener).
    - "{app-hostname}"
  rules:
    - matches:
        - path:
            type: PathPrefix
            value: {app-path-url} # Path prefix for routing, e.g., "/AdminConsole"
      backendRefs:
        - group: ""
          kind: Service
          name: {app-svc-external-name} # Name of your backend Kubernetes ExternalName Service
          port: {port-number} # Port on your backend Service
          weight: 1
```

ServiceEntry: {app-name}-{clusterset}:

```yaml
apiVersion: networking.istio.io/v1
kind: ServiceEntry
metadata:
  name: {app-name-service-entry}
  namespace: application-submariner
spec:
  hosts:
    - {app-name}.{app-ns}.svc.clusterset.local
  location: MESH_INTERNAL
  ports:
    - name: http
      number: 8080
      protocol: HTTP
  resolution: DNS
```

Alternative ServiceEntry: {app-name}-{clusterset}:

```yaml
apiVersion: networking.istio.io/v1
kind: ServiceEntry
metadata:
  name: {app-name} # A more descriptive name to avoid conflict with Gateway name
  namespace: {app-namespace}
spec:
  hosts:
    - {svc-name}.{namespace}.svc.clusterset.local # The multi-cluster service DNS name
  location: MESH_INTERNAL # Crucial for multi-cluster discovery and mTLS expectation
  ports:
    - name: http
      number: {port-number} # The port your application service listens on
      protocol: HTTP
  resolution: DNS # Indicates resolution via DNS (e.g., Submariner Lighthouse DNS)
```

ServiceImport: {app-name}:

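```yaml
# Shown for reference: the Submariner Lighthouse agent creates ServiceImports automatically.
apiVersion: multicluster.x-k8s.io/v1alpha1
kind: ServiceImport
metadata:
  name: {app-name}
  namespace: application-submariner
spec:
  ports:
    - port: 8080
      protocol: TCP
  type: ClusterSetIP
```

ServiceExport: {app-name}: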

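```yaml
apiVersion: multicluster.x-k8s.io/v1alpha1
kind: ServiceExport
metadata:
  # Must match the name of the local Service you want to export.
  name: {app-name}
  namespace: application-submariner
```

Note: The ServiceImport object is created automatically by the Submariner Lighthouse agent when the ServiceExport object is created.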
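The export and import relationship can be modeled as an aggregation: each ServiceExport contributes its cluster to the ServiceImport for the same namespace and name, and consumers reach the result through one clusterset hostname. The Python sketch below is a simplified model under that assumption (hypothetical function; the real Lighthouse agent also tracks ports, endpoints, and health), and it shows why the exported Service must have the same name and namespace in every cluster:

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def build_imports(
    exports: Iterable[Tuple[str, str, str]],
) -> Dict[Tuple[str, str], Dict[str, object]]:
    """Aggregate ServiceExports into ServiceImport-like records.

    exports: one (cluster, namespace, service) tuple per ServiceExport.
    Returns {(namespace, service): {"clusters": [...], "host": "..."}}.
    """
    members: Dict[Tuple[str, str], set] = defaultdict(set)
    for cluster, namespace, service in exports:
        members[(namespace, service)].add(cluster)
    return {
        (namespace, service): {
            "clusters": sorted(clusters),
            "host": f"{service}.{namespace}.svc.clusterset.local",
        }
        for (namespace, service), clusters in members.items()
    }


if __name__ == "__main__":
    # Hypothetical exports from two clusters.
    imports = build_imports([
        ("cluster-a", "application-submariner", "webapp"),
        ("cluster-b", "application-submariner", "webapp"),
    ])
    for key, record in imports.items():
        print(key, record)
```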

For non-ambient (sidecar) mode, label the namespaces for Istio discovery:

```shell
$ oc label ns {app-namespace} istio-discovery=enabled
$ oc label ns istio-system istio-discovery=enabled
$ oc label ns {gateway-namespace} istio-discovery=enabled
```

Note: You can find all the manifests in the GitHub repository.

Congratulations on completing this learning path!

By working through both the architectural blueprint and the implementation deep dive, you have gained a comprehensive understanding of how to build a modern, resilient, and secure multi-cluster application architecture using Red Hat's powerful open-source-based technologies.

You have accomplished the following:

- Understood the challenges: You can now articulate the core networking challenges in a hybrid cloud world, including east-west traffic management, multi-cluster service discovery, and the limitations of traditional ingress.

- Mastered the architecture: You have learned the blueprint for a robust solution, understanding the specific roles that Submariner, OpenShift Service Mesh, and the Gateway API play, and how they integrate to create a seamless fabric across clusters.

- Explored the implementation: You have reviewed the practical Kubernetes manifests required to bring this architecture to life, from the foundational cluster-level resources for Submariner and Istio to the specific application-level Service, HTTPRoute, and ServiceEntry objects that control the traffic flow.

You are now equipped with the foundational knowledge to design and deploy applications that are not confined to a single cluster, enabling true active-active resiliency, seamless failover, and advanced traffic management for your most critical workloads.

Check out these resources to learn more about multi-cluster applications and service mesh: