
How Red Hat addresses multi-cluster challenges

Before we dive into solutions, let’s first explore the common hurdles that organizations running multi-cluster applications face in achieving resiliency. You’ll also gain an understanding of the solutions themselves before putting them into practice.

Prerequisites:

- General knowledge of cloud-native architecture and networking.

- An understanding of the differences between north-south and east-west traffic.

In this lesson, you will:

- Understand the challenges that organizations face when running multi-cluster applications across diverse environments.

- Explore the architecture for achieving robust multi-cluster application resiliency with Red Hat technologies.

Understanding multi-cluster challenges

Traditional multi-cloud solutions often rely on Global Server Load Balancers (GSLBs) for north-south traffic (external client to application) across stateless clusters. However, this falls short for east-west traffic, the vital inter-cluster communication in modern microservices. A unified mechanism integrating both traffic flows is paramount for true application resiliency.

Organizations face several key hurdles such as:

- Cross-cluster east-west communication: Microservices in one cluster need secure, efficient communication with backends in another, often requiring IPsec tunnels.

- Multi-cluster service discovery and routing: Applications demand seamless, secure discovery and communication with services across multiple Kubernetes clusters, without manual intervention.

- Achieving active-active for all applications: Many applications, including stateful services, require an active-active architecture, treating geographically distinct sites as a single, unified environment.

- Limitations of native ingress: Standard Kubernetes ingress controllers are single-cluster aware, excelling at intra-cluster load balancing but lacking built-in discovery or routing to remote clusters.

- Ensuring data consistency: Active-active deployments require near real-time data and session synchronization, often relying on specialized storage replication (e.g., Red Hat OpenShift Data Foundation (ODF), Portworx) or caching (e.g., Redis).

- Strict application governance: Service mesh architectures demand granular governance, consistent security policy enforcement, and deep observability across all interconnected services.

Red Hat's integrated approach

To address these multifaceted challenges, Red Hat offers a comprehensive solution built on open source and enterprise-grade Kubernetes with Red Hat OpenShift. By combining Submariner for multi-cluster connectivity, Red Hat OpenShift Service Mesh (based on Istio) for traffic management and security, and the Kubernetes Gateway API, we deliver full-mesh application resiliency.

The core challenge has been the limitations of Kubernetes-native ingress controllers. They inspect local service endpoints for routing to ClusterIP services and are not inherently aware of services that span multiple clusters via tools like Submariner. Red Hat engineered an elegant solution using OpenShift Service Mesh and the Gateway API to bridge this gap.

The solution: ExternalName Services as the bridge

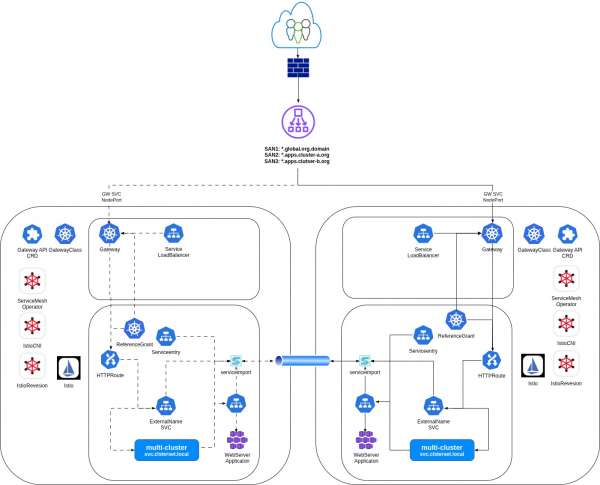

Our approach leverages an ExternalName Kubernetes Service as a crucial bridge for multi-cluster fabric. The workflow is as follows:

- Submariner identifies services for multi-cluster exposure, making them discoverable via a distinct DNS entry (e.g., my-service.my-namespace.svc.clusterset.local). This forms the backbone of inter-cluster service awareness.

- The Kubernetes Gateway API offers a standardized way to configure ingress traffic for modern cloud-native applications. It's the powerful successor to the older Ingress API, with advanced matching capabilities.

- An ExternalName Service within the local cluster acts as an alias, pointing (via its externalName field) to the DNS name of the multi-cluster service created by Submariner.

- The Gateway API (controlled by OpenShift Service Mesh) directs incoming traffic to this ExternalName Service.

- CoreDNS (integrating with Submariner Lighthouse) resolves the ExternalName. If the service has healthy endpoints across clusters (made available via Submariner), Lighthouse DNS provides an endpoint IP, often preferring a local endpoint if one is available.

- The Service Mesh intelligently routes traffic, leveraging Submariner's tunnels for remote endpoints.

This architecture, shown in Figure 1, effectively shifts routing from local Ingress to a DNS-resolved, multi-cluster aware mechanism orchestrated by Red Hat OpenShift Service Mesh and Submariner. This enables full-mesh application resiliency for both east-west and north-south traffic.
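As a rough illustration of the bridge described above, a minimal ExternalName Service might look like the following sketch. The Service name my-service-mcs and the port 8080 are hypothetical; the externalName value reuses the example clusterset DNS name from the workflow.

```yaml
# Sketch only: a local alias for a Submariner-exported service.
apiVersion: v1
kind: Service
metadata:
  name: my-service-mcs          # hypothetical name for the local alias
  namespace: my-namespace
spec:
  type: ExternalName
  # DNS name published by Submariner Lighthouse for the exported service
  externalName: my-service.my-namespace.svc.clusterset.local
  ports:
    - name: http
      port: 8080                # assumed application port
```

An ExternalName Service has no selector or endpoints of its own, so the choice of endpoint is left to DNS resolution of the clusterset name.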

Istio ServiceEntry extends your service mesh reach

To fully enable this seamless cross-cluster communication, we leverage another powerful Istio construct: the ServiceEntry. Your service mesh needs a comprehensive view of all services your applications interact with, even those not natively discovered by Kubernetes. This is where Istio's ServiceEntry resource plays a crucial role.

A ServiceEntry extends Istio's internal service registry. It brings external or specially defined services under the mesh's control for advanced traffic management, observability, and security.

The ServiceEntry resource features a crucial field: location. This dictates how Istio treats the service relative to the mesh's boundaries, offering two primary values: MESH_EXTERNAL and MESH_INTERNAL.

Connecting beyond your boundaries

By default, a ServiceEntry is MESH_EXTERNAL, indicating the service resides outside the Istio mesh's network. This is ideal for:

- Third-party SaaS APIs

- On-premise databases

- Legacy applications on virtual machines (VMs)

- Services in non-Istio-enabled clusters

For MESH_EXTERNAL services, Istio proxies (Envoy sidecars) route traffic, but do not enforce mutual TLS (mTLS). Traffic can exit through an Egress Gateway, providing centralized control and policy enforcement for secure outbound calls.
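For illustration, a MESH_EXTERNAL ServiceEntry for a third-party API could be sketched as follows. The host api.example.com and the resource name are placeholders, and the networking.istio.io/v1 API version assumes a recent Istio release (older releases serve the same resource as v1beta1).

```yaml
# Sketch only: registers an external HTTPS API in the mesh's service registry.
apiVersion: networking.istio.io/v1
kind: ServiceEntry
metadata:
  name: external-api            # hypothetical name
  namespace: my-namespace
spec:
  hosts:
    - api.example.com           # placeholder external hostname
  location: MESH_EXTERNAL       # the default, stated explicitly for clarity
  ports:
    - number: 443
      name: tls
      protocol: TLS
  resolution: DNS
```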

Seamless multi-cluster discovery and resiliency

The true power for multi-cluster application resiliency often lies with location: MESH_INTERNAL. This setting is vital in Red Hat OpenShift environments leveraging multi-cluster Istio deployments via OpenShift Service Mesh.

Declaring a ServiceEntry as MESH_INTERNAL tells Istio that this service, even if not in the immediate cluster, is a full participant within the logical boundaries of your larger, interconnected mesh.

By strategically using ServiceEntry with location: MESH_INTERNAL, you transform individual clusters into a cohesive, resilient, and observable super-mesh. This empowers applications to transparently discover and securely communicate with services regardless of their physical location, forming the bedrock for true multi-cluster application resiliency on Red Hat OpenShift.
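A minimal sketch of such a ServiceEntry, assuming the example clusterset DNS name used earlier and a hypothetical HTTP port of 8080, might look like this. The resource name is illustrative, and the API version assumes a recent Istio release.

```yaml
# Sketch only: declares the Submariner clusterset name as part of the mesh.
apiVersion: networking.istio.io/v1
kind: ServiceEntry
metadata:
  name: my-service-clusterset   # hypothetical name
  namespace: my-namespace
spec:
  hosts:
    - my-service.my-namespace.svc.clusterset.local
  location: MESH_INTERNAL       # treat the service as a mesh participant
  ports:
    - number: 8080              # assumed application port
      name: http
      protocol: HTTP
  resolution: DNS               # endpoints come from DNS (Lighthouse)
```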

Meeting real-world customer needs

This solution was designed for a key client's specific requirements but is broadly applicable to any organization facing similar multi-site deployment challenges. Key drivers included:

- Active-active application deployments: Deploying critical applications across two geographically distinct sites in a fully active-active configuration.

- Real-time data synchronization: Non-negotiable requirement for near real-time data replication and consistency between data centers.

- Future-proof governance: Plan to implement a comprehensive service mesh for strict application governance, security, and observability.

- GSLB integration: Need to work with an existing GSLB that could detect site-level ingress failures but lacked fine-grained, cross-cluster application health checking.

- Modern ingress capabilities: Desire for a Kubernetes-native ingress solution with seamless integration for authentication, authorization, certificate management, advanced DNS policies, and rate limiting, all strengths of the Gateway API with OpenShift Service Mesh.

By thoughtfully combining Submariner, Red Hat OpenShift Service Mesh, and the Gateway API, Red Hat provides a robust and flexible architecture to deliver the multi-cluster, full-mesh application resiliency that modern enterprises demand. Figure 2 depicts the flow of these technologies working together.

Understanding the network traffic flow

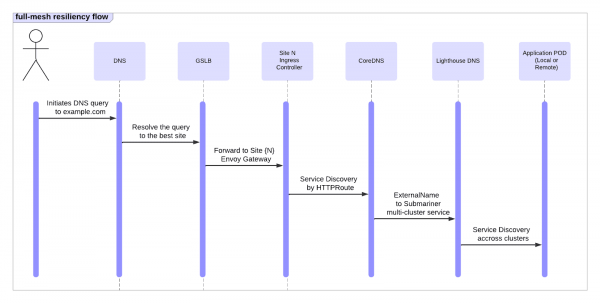

Let's trace a typical request through this architecture:

- Client access: A user accesses the application via its public URL (e.g., app-name-console-submariner.org.domain). Standard DNS resolution directs this request to the Virtual IP (VIP) of the GSLB.

- GSLB load balancing: The GSLB evaluates site health and, based on load-balancing policies, selects the optimal site. It forwards the request to the OpenShift Service Mesh Gateway (an Envoy-based proxy) at that chosen site, preserving the original hostname.

- Gateway API routing: The Gateway, configured via Gateway API resources (Gateway and HTTPRoute), inspects the request. It matches the request to the appropriate HTTPRoute defined for the application.

- ExternalName Service as alias: The HTTPRoute forwards traffic to a backend Kubernetes service of type ExternalName. This ExternalName service points to the DNS FQDN of the multi-cluster Service (MCS) exposed by Submariner (like my-app.my-namespace.svc.clusterset.local).

- Submariner DNS resolution: The cluster's CoreDNS (integrating with Submariner Lighthouse) resolves the ExternalName. If the service has healthy endpoints in multiple clusters (made available via ServiceExport and ServiceImport), Lighthouse DNS provides an endpoint IP, often preferring a local endpoint if one is available.

- ServiceEntry for mesh-internal routing: An Istio ServiceEntry (as discussed) explicitly allows traffic from within the service mesh to the Submariner-resolved DNS name. This ServiceEntry registers the MCS DNS name as MESH_INTERNAL, permitting outbound traffic. If the resolved endpoint is remote, Submariner's components (gateways and encrypted tunnels) seamlessly forward the request to the target Pod in that remote cluster.

This orchestrated flow ensures traffic reaches a healthy application instance, whether local or remote, transparently to the end user and application logic.
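The Gateway API routing step in this flow could be expressed with an HTTPRoute along these lines. This is a sketch under assumptions: the Gateway name app-gateway, its namespace istio-ingress, the backend Service name my-app-mcs, and port 8080 are all hypothetical, while the hostname reuses the example URL from the trace.

```yaml
# Sketch only: routes requests for the public hostname to the ExternalName alias.
apiVersion: gateway.networking.k8s.io/v1
kind: HTTPRoute
metadata:
  name: my-app-route            # hypothetical name
  namespace: my-namespace
spec:
  parentRefs:
    - name: app-gateway         # hypothetical Gateway this route attaches to
      namespace: istio-ingress  # hypothetical Gateway namespace
  hostnames:
    - app-name-console-submariner.org.domain
  rules:
    - matches:
        - path:
            type: PathPrefix
            value: /
      backendRefs:
        - name: my-app-mcs      # hypothetical ExternalName Service
          port: 8080            # assumed application port
```

Because the route lives in a different namespace than the Gateway in this sketch, the Gateway's listener would need to allow routes from that namespace.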

Handling failure scenarios for enhanced resiliency

A key design goal for this architecture is graceful degradation and rapid recovery:

- Entire cluster outage: If an OpenShift cluster (Site A) becomes unavailable, the GSLB detects this via health checks and automatically redirects all incoming requests to the healthy secondary site (Site B).

- Individual microservice failure: If a specific microservice on Site A fails (e.g., due to a bug or CVE), while other components remain healthy:

  - The GSLB continues sending traffic to Site A.

  - When requests for the failing microservice arrive at Site A's service mesh, and local instances are unhealthy, Submariner MCS DNS resolution (via Lighthouse) dynamically directs traffic for that specific service to a healthy instance in Site B.

  - Other healthy application components on Site A continue to be served locally. This localized failover optimizes latency while ensuring overall application functionality.

- Service mesh gateway unresponsive: If the OpenShift Service Mesh Gateway on a site becomes unresponsive, the GSLB detects this ingress-level failure, marks the site's ingress as down, and redirects all traffic to the alternate, healthy site’s Service Mesh Gateway.

- Submariner during RHACM outage: Submariner is designed for decentralized data plane operation. If the Red Hat Advanced Cluster Management for Kubernetes hub cluster becomes unavailable, existing Submariner inter-cluster connectivity (IPsec tunnels, routing, Lighthouse DNS) will continue to function normally. While the data plane remains resilient, management operations (adding/removing clusters, updating configs) will be unavailable until RHACM connectivity is restored. The existing Submariner deployment continues with its last known good configuration.

Implementation guidelines (conceptual overview)

Implementing this full-mesh resiliency architecture requires careful planning and execution across inter-cluster connectivity, traffic routing, and application deployment. It involves two phases.

Phase 1: Cluster-level setup - Building the foundation

This phase establishes connectivity and gateway infrastructure on each OpenShift cluster as follows:

- Deploy and configure Submariner: Install the Submariner operator and deploy Submariner across clusters. This establishes the secure, encrypted overlay network and configures Lighthouse for multi-cluster DNS service discovery.

- Integrate with OpenShift Service Mesh and Gateway API: Install the Red Hat OpenShift Service Mesh operator. Create necessary Istio control plane resources and define a GatewayClass for Istio. Deploy a Gateway resource in a designated namespace as the entry point for application traffic.

- Configure GSLB: Set up your GSLB for north-south traffic, distributing requests to Service Mesh Gateways on each site. Configure robust health checks and clear failover policies.
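The Gateway API portion of this phase might be sketched as follows. The resource names, the istio-ingress namespace, and the plain HTTP listener on port 80 are assumptions for illustration; istio.io/gateway-controller is the controller name Istio uses for its Gateway API implementation. Some Istio installations create a GatewayClass automatically, in which case only the Gateway would be needed.

```yaml
# Sketch only: a GatewayClass handled by Istio and a Gateway that uses it.
apiVersion: gateway.networking.k8s.io/v1
kind: GatewayClass
metadata:
  name: istio
spec:
  controllerName: istio.io/gateway-controller
---
apiVersion: gateway.networking.k8s.io/v1
kind: Gateway
metadata:
  name: app-gateway             # hypothetical name
  namespace: istio-ingress      # hypothetical designated namespace
spec:
  gatewayClassName: istio
  listeners:
    - name: http
      port: 80                  # assumed listener; production setups would likely add TLS
      protocol: HTTP
      allowedRoutes:
        namespaces:
          from: All             # permissive for illustration; restrict as needed
```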

Phase 2: Application-level setup - Deploying and exposing your services

With cluster-level infrastructure in place, deploy your applications and configure them for multi-cluster access:

- Enable multi-cluster service discovery with Submariner: For each application service that must be accessible across clusters, create a ServiceExport resource. This signals Submariner to advertise the service. A corresponding ServiceImport resource is created automatically in consuming clusters.

- Configure ingress routing via Gateway API and Service Mesh: Create a Kubernetes service of type ExternalName in the relevant namespace, pointing to the DNS FQDN of the Submariner ServiceImport. Next, create an HTTPRoute resource that attaches to your Gateway and forwards traffic to the ExternalName service.

- Service mesh enablement (for sidecar mode): If not using ambient mode, ensure application namespaces are labeled for Istio discovery (e.g., istio-discovery=enabled) so that each namespace and its services are known to the Istio control plane. This label is used with discovery selectors in modern Istio configurations to define the scope of the mesh.
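As a sketch of the first and last steps in this phase, the manifests below export a service through Submariner and label its namespace for Istio discovery. The names my-app and my-namespace are hypothetical, and the label only takes effect if the Istio control plane is configured with a matching discovery selector.

```yaml
# Sketch only: export an existing Service named my-app to the cluster set.
apiVersion: multicluster.x-k8s.io/v1alpha1
kind: ServiceExport
metadata:
  name: my-app                  # must match the name of the Service being exported
  namespace: my-namespace
---
# Sketch only: label the application namespace for Istio discovery.
apiVersion: v1
kind: Namespace
metadata:
  name: my-namespace
  labels:
    istio-discovery: enabled    # example label from the text above
```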