Understand the devfile and utility of a personal AI assistant

With the cloud development environment set up, let’s now go over some devfile basics and learn what we can do with a personal AI assistant using a few examples.

Prerequisites: To get the full benefit from this lesson, you need to:

In this lesson, you will:

- Understand the basics of the devfile.

- Discover the utility of a personal AI assistant.

The devfile and how it works

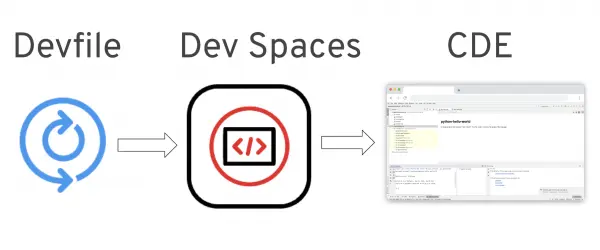

Under the hood, Red Hat OpenShift Dev Spaces uses the devfile from the root of the repository to create the CDE that contains not only the source code but also the runtime, together with predefined commands for instant development (Figure 1).

Note: Devfile is a CNCF sandbox project that provides an open standard defining containerized development environments. Learn more about devfile from the official documentation.

When a new workspace is created from the devfile, the following two containers are started as part of the CDE:

- udi: A container based on the Universal Developer Image, which hosts the Continue server and is used for main development activities.

- ollama: A container based on the official Ollama image that runs the Ollama web server.

Tip: You can also leverage GPUs by setting nvidia.com/gpu: 1 in the container’s resource request at the devfile level. With that configuration, the ollama container (and the entire pod) is deployed on an OpenShift worker node that hosts a GPU, which significantly accelerates the inference step of the local LLM and therefore the responsiveness of the personal AI assistant. Developer Sandbox clusters currently do not have GPU worker nodes available, so the nvidia.com/gpu: 1 configuration is commented out in the devfile. If you have a cluster with GPU nodes, feel free to uncomment those lines and run the activity there instead of on the Developer Sandbox.

At the bottom of the devfile, a set of postStart commands are defined; these commands are executed just after the cloud development environment starts up:

    events:
      postStart:
        - pullmodel
        - pullautocompletemodel
        - copyconfig

- pullmodel: Pulls the llama3 LLM to the CDE.

- pullautocompletemodel: Pulls the starcoder2 LLM to the CDE.

- copyconfig: Configures the AI assistant Continue to use the local LLMs by copying the continue-config.json file.
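Once the postStart commands have finished, one way to confirm that both models were pulled is to ask the Ollama server which models it holds. The following is a minimal sketch and not part of the devfile. It assumes the Ollama server is reachable from the udi container at Ollama's default address, http://localhost:11434, and it uses Ollama's /api/tags endpoint. The helper names missing_models and fetch_tags are hypothetical.

```python
import json
from urllib.request import urlopen

# Assumption: default Ollama address, reachable from the udi container.
OLLAMA_URL = "http://localhost:11434"
REQUIRED_MODELS = ("llama3", "starcoder2")


def missing_models(tags_response: dict, required=REQUIRED_MODELS) -> list[str]:
    """Return the required models that are absent from an /api/tags response."""
    # Model names come back with a tag suffix such as "llama3:latest".
    available = {m["name"].split(":")[0] for m in tags_response.get("models", [])}
    return [name for name in required if name not in available]


def fetch_tags(base_url: str = OLLAMA_URL) -> dict:
    """Query the Ollama server for its locally available models."""
    with urlopen(f"{base_url}/api/tags", timeout=10) as response:
        return json.load(response)


# Example with a hand-written response in the shape /api/tags returns.
sample = {"models": [{"name": "llama3:latest"}]}
print(missing_models(sample))  # ['starcoder2']
```

Inside the CDE terminal, calling missing_models(fetch_tags()) should return an empty list once both pulls have completed.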

What can you do with a personal AI assistant?

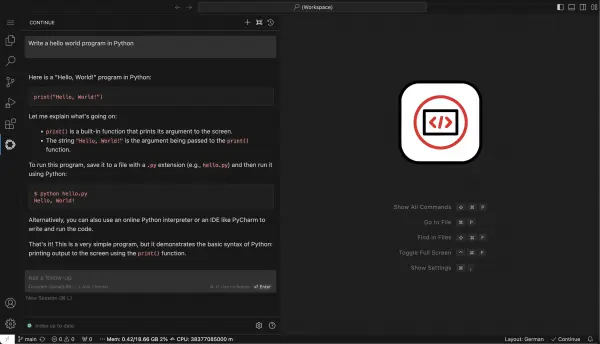

Now that we've covered devfile basics, let’s get back to the cloud development environment (CDE). Once everything is set up, we'll demonstrate the use of a private personal AI assistant for developers using some common development use cases.

Inside the CDE, click the new Continue symbol in the left sidebar. A dialog appears that you can use to communicate with the AI model. In the text box, enter "Write a hello world program in Python". You will see output similar to Figure 2.

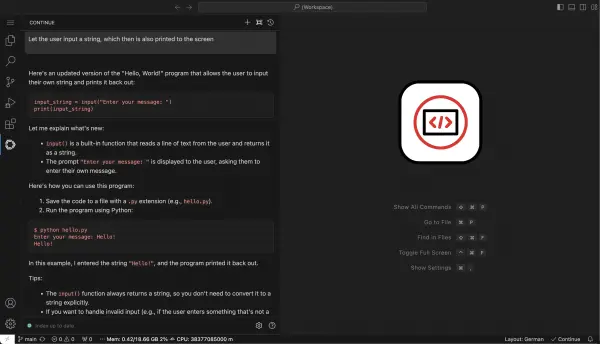

The AI model remembers your inputs, so you can ask it to modify the answer based on additional needs using the "ask a follow-up" prompt underneath the response. Enter "Let the user input a string, which then is also printed to the screen" in the text box and press Enter. The result is something like Figure 3.
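The model's answer varies from run to run, so the following is only an illustration of the kind of program the two prompts might produce, not the actual output shown in the figures. The assistant will most likely return a plain script. Here the logic is wrapped in a hypothetical function, hello, with injectable input and output so that it can also be exercised without a terminal.

```python
def hello(read=input, write=print) -> None:
    """Print a greeting, then echo a string supplied by the user."""
    write("Hello, World!")
    text = read("Enter a string: ")
    write(text)
```

Calling hello() in a terminal runs it interactively: it prints the greeting, waits for a line of input, and prints that line back.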

Besides this pure chat functionality, the personal AI assistant for developers can also directly manipulate code, make code suggestions, write documentation or tests, and analyze the code for known issues. In the next example, we'll use it to write a program that checks a given date for proper format.

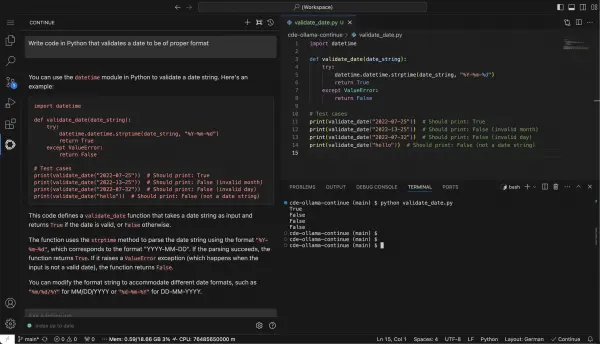

In the Continue extension, create a new session by pressing the plus sign. Now enter the text "Write the code in Python that validates a date to be of a proper format" into the text box and observe the output.

Then, create a new file named validate_date.py and add the AI-generated code by hovering over the code snippet in the Continue extension and clicking the Insert at cursor button.

Finally, click Menu → Terminal → New Terminal and execute the newly generated file by entering python validate_date.py. The output will look similar to Figure 4.
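The generated code differs between runs and models. As a rough idea of what to expect, here is a minimal sketch of a date validator. It assumes the ISO-style format YYYY-MM-DD as the "proper format"; the function name validate_date and the fmt parameter are illustrative choices, not the assistant's actual output.

```python
from datetime import datetime


def validate_date(text: str, fmt: str = "%Y-%m-%d") -> bool:
    """Return True if text is a real calendar date in the given format."""
    try:
        datetime.strptime(text, fmt)
    except ValueError:
        # Raised for a wrong layout as well as for impossible dates.
        return False
    return True


for candidate in ("2024-02-29", "2023-02-29", "29/02/2024"):
    print(candidate, validate_date(candidate))
```

Relying on datetime.strptime rather than a regular expression means impossible dates such as 2023-02-29 are rejected along with strings in the wrong layout.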

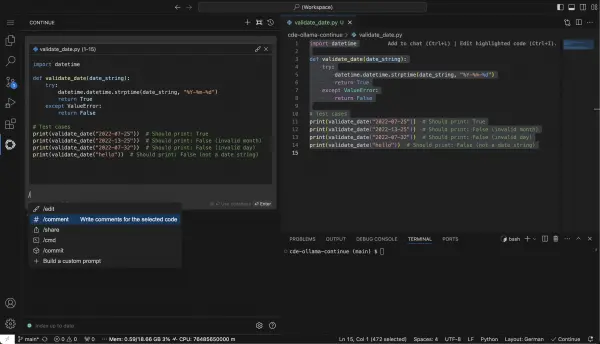

Select the entire code in the Python file validate_date.py and press Ctrl+L (or Cmd+L). You can see that the selected code is added to the Continue extension; that is, it is provided to the AI model as context. Next, type /comment into the text box (Figure 5).

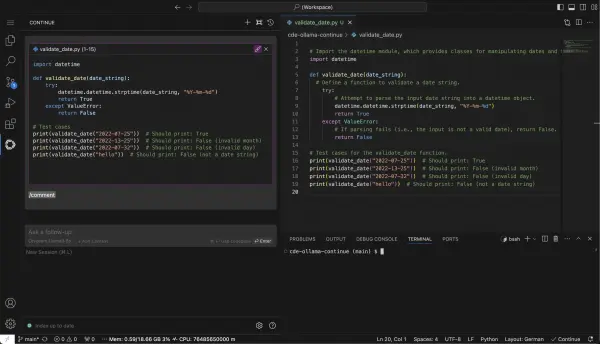

Pressing Enter after typing /comment tells the AI assistant to write documentation for the selected lines and add it directly to the code in validate_date.py. A Continue Diff tab then opens, showing the differences: in this case, the added lines of documentation. To accept the changes, press Ctrl+Enter (or Shift+Cmd+Enter), and the code is inserted into the file (Figure 6).

Tip: Hot keys might vary depending on the underlying OS; you can find them using F1 → Continue. These are just a few examples of how the AI assistant can support a developer’s everyday work and make it more productive.

Tip: More information about the usage of the Continue extension within the cloud development environment can be found on the extension’s homepage. Additional models are available on the Ollama website, and more information for configuring development environments using devfile can be found in the official devfile.io documentation.

Privacy and security

The pervasive challenge with most large language models is their availability predominantly as cloud-based services. This setup necessitates sending potentially sensitive data to external servers for processing. For developers, this raises significant privacy and security concerns, particularly when dealing with proprietary or sensitive codebases. The requirement of sending data to a remote server not only poses a risk of data exposure but can also introduce latency and dependence on internet connectivity for real-time assistance. This architecture inherently limits the use of such LLMs in environments where data governance and compliance standards restrict the transfer of data off-premises or where developers prioritize complete control over their data and intellectual property.

To address the data privacy and security challenges of cloud-based LLMs, the Continue extension is a compelling option. The extension is marketed as an “open-source autopilot for software development” and, notably, it enables the use of local LLMs.

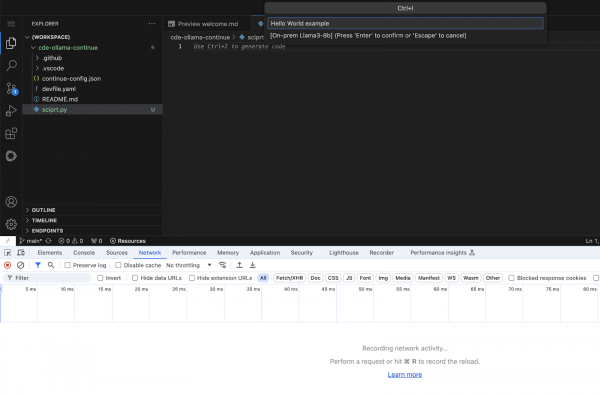

In this activity, we emulated an on-premises environment by using a locally hosted Llama3-8b model. While using the personal AI assistant, you can open the Network tab in the browser's developer tools and verify that no request is sent outside of the cluster (Figure 7).
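To make the "stays inside the cluster" point concrete, here is a hedged sketch of a request to a local model. It is not how the Continue extension is implemented. It only illustrates a prompt going to Ollama's /api/generate endpoint on the loopback interface, assuming Ollama's default port 11434 and the llama3 model. The helper names build_generate_request, is_local, and ask are hypothetical.

```python
import json
from urllib.parse import urlparse
from urllib.request import Request, urlopen

OLLAMA_URL = "http://localhost:11434"  # assumption: Ollama's default port


def build_generate_request(prompt: str, model: str = "llama3",
                           base_url: str = OLLAMA_URL) -> Request:
    """Build a non-streaming POST request for Ollama's /api/generate."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return Request(
        f"{base_url}/api/generate",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def is_local(request: Request) -> bool:
    """Check that the request targets the loopback interface."""
    return urlparse(request.full_url).hostname in {"localhost", "127.0.0.1", "::1"}


def ask(prompt: str) -> str:
    """Send the prompt to the local model and return its answer."""
    request = build_generate_request(prompt)
    if not is_local(request):
        raise ValueError("refusing to send the prompt to a non-local host")
    with urlopen(request, timeout=120) as response:
        return json.load(response)["response"]
```

Because the only host involved is localhost, the prompt and the generated answer never leave the pod, which matches what the Network tab shows.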

Conclusion

Artificial intelligence (AI) assistants have the potential to revolutionize application development by enhancing productivity and streamlining workflows. For developers, an AI sidekick can act as a coding companion, offering real-time optimizations, automating routine tasks, and debugging on the fly. By running a local instance of an LLM on an air-gapped, on-premises OpenShift cluster, developers can benefit from AI assistance without the need to transmit data externally.

When integrated within Red Hat OpenShift Dev Spaces, the solution offers a seamless and secure development experience right within Visual Studio Code - Open Source (Code - OSS). This setup ensures that sensitive data never leaves the confines of the local infrastructure, all the while providing the sophisticated assistance of an AI using the Continue extension. It is a solution that not only helps mitigate privacy concerns but also empowers developers to harness AI’s capabilities in a more controlled and compliant environment.

Read more about using Red Hat OpenShift Dev Spaces in an air-gapped, on-premises environment in this success story. Happy coding!