This guide shows you how to build a zero trust environment using Red Hat Connectivity Link and Red Hat OpenShift GitOps. By following this demonstration, you will learn how to manage connectivity between services, gateways, and identity providers. This example uses the NeuralBank application to show these security patterns in a practical context.

Quick start recommendation

While you can certainly explore this implementation directly, I strongly recommend forking the repository to your own GitHub organization or user account. This approach transforms the demo into your own customizable environment where you can experiment without affecting the original repository.

When you fork the repository, you can tailor configurations to your specific needs. You can set up your own GitOps workflows with Argo CD pointing to your fork, modify cluster-specific settings like domain names, and maintain your own version control and deployment pipeline. Owning the configuration becomes particularly valuable when you need to adapt the solution to your organization's specific requirements.

After forking, simply update the repository references in applicationset-instance.yaml to point to your fork, and you'll have a fully personalized deployment pipeline ready to go.
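If you prefer to make that change from a terminal, here's a minimal Bash sketch. The helper name point_to_fork is hypothetical. It assumes the upstream URL appears verbatim in the file, as it does in the ApplicationSet manifests shown later in this article.

```shell
#!/usr/bin/env bash
# Hypothetical helper: point every upstream repoURL in a manifest at your fork.
# Assumes the upstream URL appears verbatim in the file.

UPSTREAM_REPO='https://gitlab.com/maximilianoPizarro/connectivity-link.git'

point_to_fork() {
  local file="$1" fork_url="$2" pattern
  if [ ! -f "$file" ]; then
    echo "error: $file not found" >&2
    return 1
  fi
  # Escape the dots so sed matches the upstream URL literally.
  pattern=$(printf '%s' "$UPSTREAM_REPO" | sed 's/[.]/\\./g')
  # '|' is the sed delimiter because URLs contain '/'; a .bak copy is kept.
  # Assumes fork_url contains no '|' or '&' characters.
  sed -i.bak "s|${pattern}|${fork_url}|g" "$file"
}
```

For example, point_to_fork applicationset-instance.yaml https://github.com/<your-user>/connectivity-link.git rewrites the file in place and keeps a .bak copy. Commit the result to your fork before you apply it.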

Getting started: A quick overview

Before diving into the technical details, here's what you'll need to get started. Implementing a zero trust architecture with Red Hat Connectivity Link requires a few prerequisites. You'll need a Red Hat OpenShift 4.20+ cluster with cluster-admin privileges to install operators and manage cluster-scoped resources.

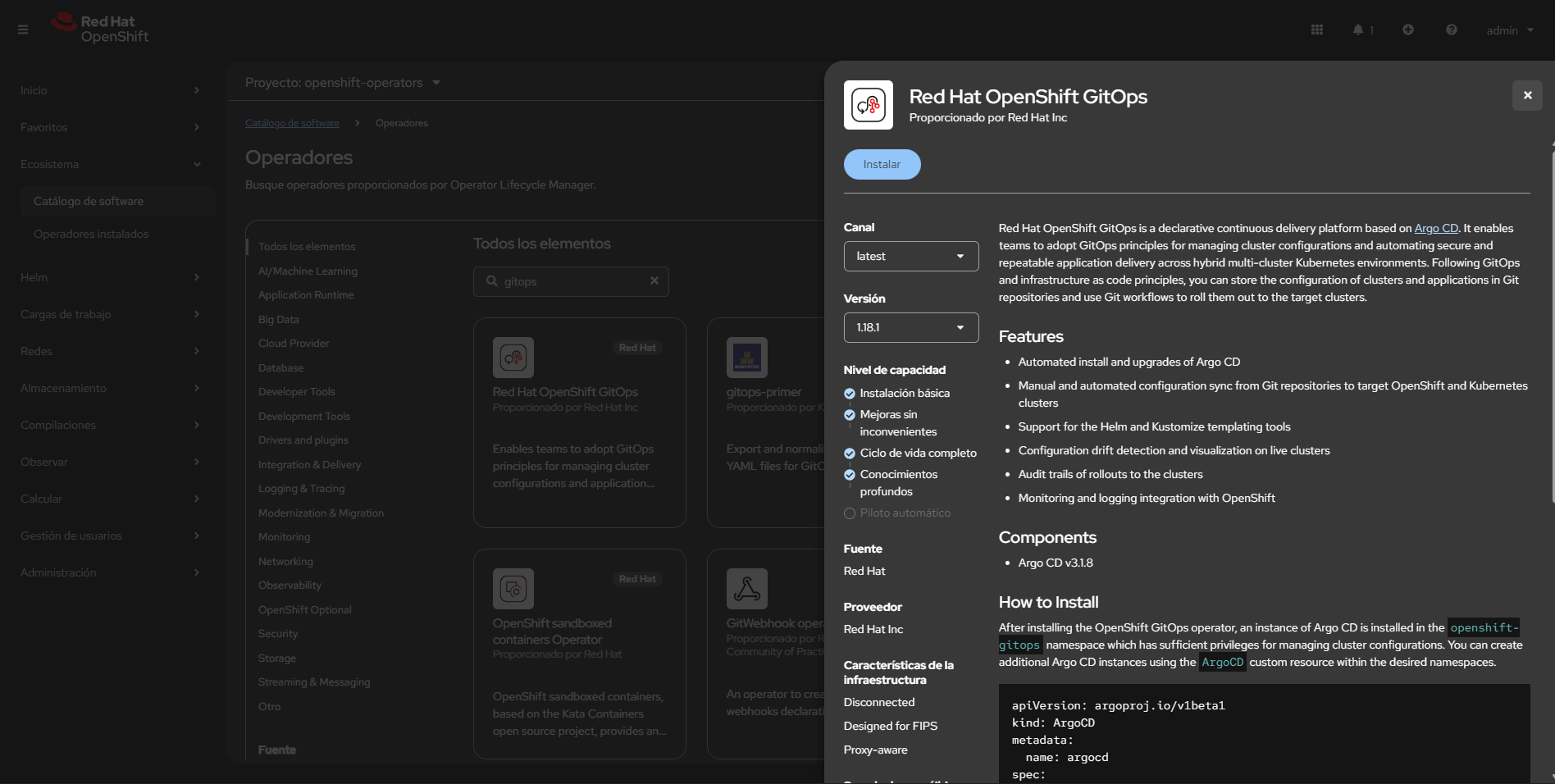

The process itself is simple: start by installing the OpenShift GitOps operator, which serves as the foundation for our GitOps workflow. Once that's in place, applying a single manifest file—applicationset-instance.yaml—triggers Argo CD to detect and manage all the resources declared in the repository. Argo CD (Red Hat OpenShift GitOps) continuously reconciles the desired state to ensure your cluster matches the configuration defined in Git.

Zero trust architecture: Automation with GitOps

At its core, this implementation demonstrates Connectivity Link using a GitOps workflow. The beauty of this approach lies in how applications and infrastructure are declared as Kubernetes and Helm manifests, which Argo CD (OpenShift GitOps) then manages. Rather than manually configuring each component, you define the desired state in code, and GitOps ensures that state is achieved and maintained.

The architecture uses several technologies to create a security-focused, scalable microservices environment. An Istio-based service mesh handles traffic management and security at the network layer, while a Kubernetes Gateway API implementation provides a modern, standards-based approach to routing external traffic.

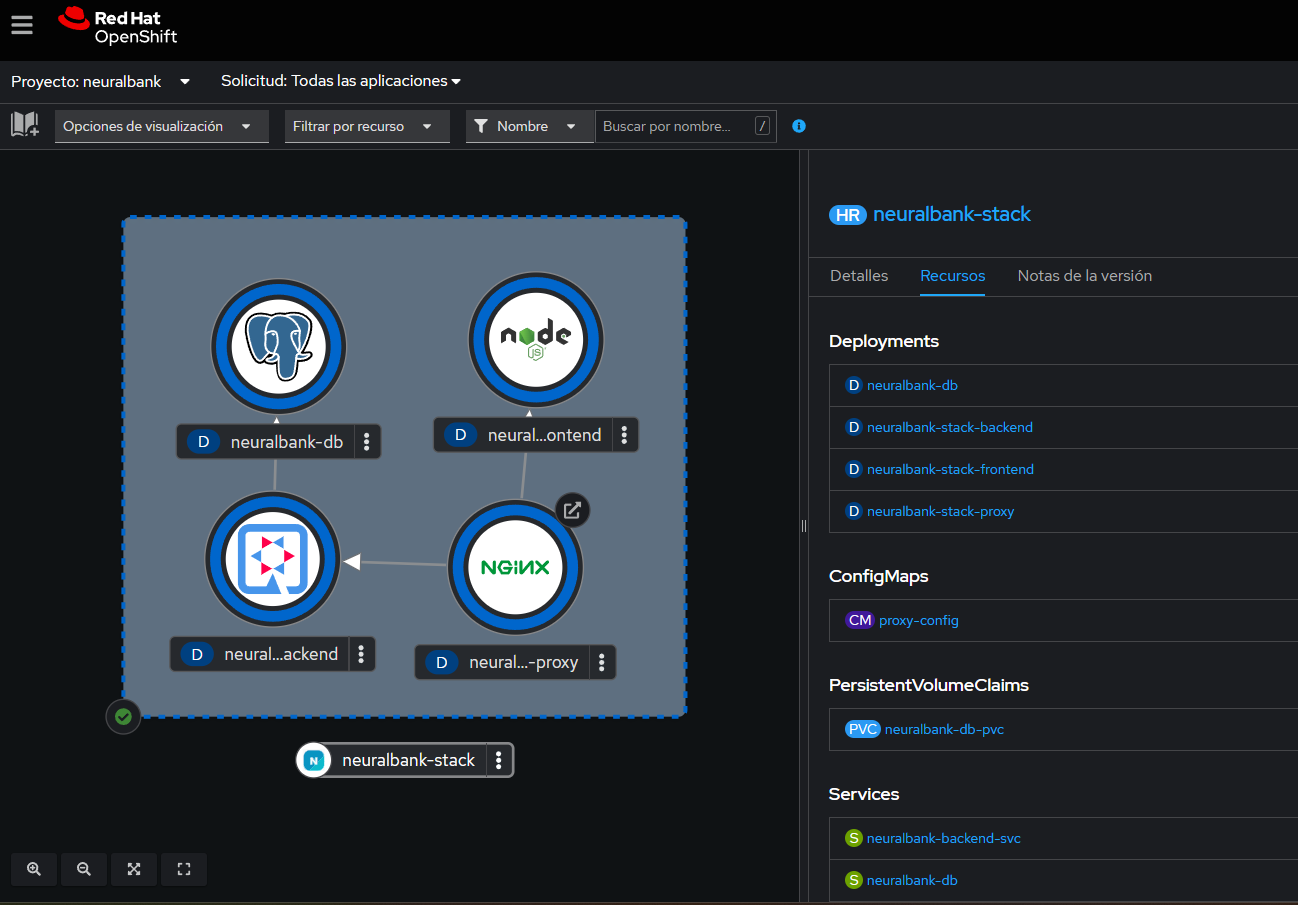

For identity and access management, we use Keycloak, which serves as our authentication provider. The authorization layer is handled by Kuadrant and Authorino, which enforce OIDC-based API protection policies. To demonstrate these capabilities in action, we deploy the NeuralBank demo application—a full-stack application with frontend, backend, and database components (Figure 1). The solution also integrates with Red Hat Developer Hub (Backstage) to provide a developer-friendly interface for managing the entire ecosystem.

Let's explore the key components that make this architecture work. Connectivity Link provides configurations and examples that demonstrate how to establish connectivity between components within an OpenShift cluster. In our GitOps context, it orchestrates the relationships between services, gateways, and authentication mechanisms to create a cohesive security framework.

OpenShift GitOps (Argo CD) acts as the orchestrator, continuously monitoring the repository and ensuring that the cluster state matches what's declared in Git. This reconciliation process happens automatically, making drift detection and correction continuous.

The Service Mesh Operator manages the Istio service mesh, handling both the control plane that makes policy decisions and the data plane that enforces those policies.

Finally, the Red Hat Connectivity Link operator manages connectivity policies and OIDC authentication, providing the declarative interface for configuring security policies.

Step 1: Install the OpenShift GitOps operator

The GitOps operator is the reconciliation engine for the entire platform. Before you can deploy anything, you need to install the OpenShift GitOps operator, which serves as the foundation for our GitOps workflow.

This is the only manual step required before applying the manifests for this demo. Navigate to the OperatorHub in the OpenShift console, search for "OpenShift GitOps," and install it. The operator handles all subsequent deployments automatically through GitOps workflows.
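If you'd rather script this step than use OperatorHub, the usual Operator Lifecycle Manager approach needs three objects: a Namespace, an OperatorGroup, and a Subscription. The sketch below prints those manifests so you can pipe them into oc apply. The namespace openshift-gitops-operator and the default channel latest are assumptions based on common OpenShift GitOps installations. Confirm the available channels with oc get packagemanifest openshift-gitops-operator -n openshift-marketplace before applying.

```shell
#!/usr/bin/env bash
# Prints OLM manifests that install the OpenShift GitOps operator.
# Assumptions: namespace "openshift-gitops-operator", default channel "latest".
# Usage: gitops_operator_manifests [channel] | oc apply -f -
gitops_operator_manifests() {
  local channel="${1:-latest}"
  cat <<EOF
apiVersion: v1
kind: Namespace
metadata:
  name: openshift-gitops-operator
---
apiVersion: operators.coreos.com/v1
kind: OperatorGroup
metadata:
  name: openshift-gitops-operator
  namespace: openshift-gitops-operator
spec: {}
---
apiVersion: operators.coreos.com/v1alpha1
kind: Subscription
metadata:
  name: openshift-gitops-operator
  namespace: openshift-gitops-operator
spec:
  channel: ${channel}
  installPlanApproval: Automatic
  name: openshift-gitops-operator
  source: redhat-operators
  sourceNamespace: openshift-marketplace
EOF
}
```

After applying, oc get csv -n openshift-gitops-operator shows the operator's ClusterServiceVersion. The installation is complete once it reports Succeeded.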

Before installing, ensure you have an OpenShift 4.20+ cluster with cluster-admin privileges. This demo and all its manifests have been validated against OpenShift version 4.20 or later. The newer versions of OpenShift bring enhanced capabilities and better integration with the operators we use. The cluster-admin privileges are necessary because the implementation requires several cluster-scoped operations:

- Installing the OpenShift GitOps operator.

- Allowing ApplicationSet instances to create and manage cluster-scoped objects.

- Installing and configuring service mesh operators.

- Setting up the necessary RBAC for Argo CD to manage resources across multiple namespaces.
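You can check both prerequisites from the command line before you continue. This Bash sketch reads the cluster version from the ClusterVersion resource. It then asks the API server whether you can perform every action in every namespace, which is effectively what cluster-admin grants. The helper names are hypothetical.

```shell
#!/usr/bin/env bash
# Returns 0 if version $1 (e.g. "4.20.3") is at least $2 (e.g. "4.20").
# Only the major and minor components are compared.
version_at_least() {
  local have_major have_minor want_major want_minor
  IFS=. read -r have_major have_minor _ <<< "$1"
  IFS=. read -r want_major want_minor _ <<< "$2"
  if (( have_major != want_major )); then
    (( have_major > want_major ))
  else
    (( have_minor >= want_minor ))
  fi
}

# Hypothetical pre-flight check for this demo's prerequisites.
preflight() {
  local version
  version=$(oc get clusterversion version -o jsonpath='{.status.desired.version}') || return 1
  if ! version_at_least "$version" 4.20; then
    echo "OpenShift ${version} is older than 4.20" >&2
    return 1
  fi
  # 'oc auth can-i' exits 0 (and prints "yes") when the action is allowed.
  if ! oc auth can-i '*' '*' --all-namespaces >/dev/null; then
    echo "the current user does not appear to have cluster-admin rights" >&2
    return 1
  fi
  echo "OpenShift ${version}; cluster-admin check passed"
}
```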

DNS configuration with ApplicationSets

One challenge in deploying this architecture across different clusters is handling DNS configuration. Manually updating cluster domain references in multiple files is tedious and error-prone. Instead, you can pre-configure DNS hostnames directly in the ApplicationSet definitions. This approach uses Kustomize patches or Helm parameters to inject your cluster domain values at deployment time. That makes the solution portable across environments, reduces configuration errors, and makes your deployment easier to maintain.

Identifying the cluster domain

The first step is to identify your OpenShift cluster's base domain. This information is used throughout the configuration for Keycloak, application routes, and OIDC endpoints. You can retrieve it using this command:

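```shell
oc get ingress.config/cluster -o jsonpath='{.spec.domain}'
```

The command prints the cluster's ingress domain, which already includes the apps. prefix (for example, apps.rm2.thpm.p1.openshiftapps.com). Alternatively, you can examine your cluster's console URL, which typically follows this pattern: console-openshift-console.apps.<your-cluster-domain>. The hostnames used throughout this article, such as rhbk.apps.<your-cluster-domain>, are formed by prepending a name to that apps. domain.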
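The keycloak_host and app_host values used later can be derived mechanically from that domain. The sketch below assumes its input is the ingress domain as printed by the oc command, which starts with apps. It also shows how to recover the domain from a console URL. Both function names are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helpers that turn the cluster's ingress domain into the
# hostnames used by the ApplicationSet generators.

# $1 is the ingress domain as printed by the oc command (it starts with "apps.").
hosts_from_ingress_domain() {
  local domain="${1%.}"   # tolerate a trailing dot
  if [ -z "$domain" ]; then
    echo "usage: hosts_from_ingress_domain <ingress-domain>" >&2
    return 1
  fi
  printf 'keycloak_host: rhbk.%s\n' "$domain"
  printf 'app_host: neuralbank.%s\n' "$domain"
}

# Extracts the ingress domain from a console URL such as
# https://console-openshift-console.apps.example.com/
ingress_domain_from_console_url() {
  local host="${1#*://}"   # drop the scheme
  host="${host%%/*}"       # drop any path
  printf '%s\n' "${host#console-openshift-console.}"
}
```

For example, hosts_from_ingress_domain "$(oc get ingress.config/cluster -o jsonpath='{.spec.domain}')" prints two lines that you can paste into the generator element.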

Using ApplicationSet with patches (recommended)

For most use cases, I recommend using Kustomize patches within ApplicationSet resources. This approach provides a clean, declarative way to update DNS hostnames across Keycloak configurations, Routes, and OIDC policies. The mechanism works by defining template variables in the ApplicationSet generator and then using Kustomize patches to inject those values into the appropriate resources.

The following manifest shows the base ApplicationSet, connectivity-infra-plain. It uses a list generator to deploy the namespaces, operators, Developer Hub, Service Mesh, and Connectivity Link operator components, and it does not carry any hostname values:

```yaml
apiVersion: argoproj.io/v1alpha1
kind: ApplicationSet
metadata:
  name: connectivity-infra-plain
  namespace: openshift-gitops
spec:
  goTemplate: true
  generators:
    - list:
        elements:
          - name: namespaces
            namespace: openshift-gitops
            path: namespaces
            sync_wave: "1"
          - name: operators
            namespace: openshift-gitops
            path: operators
            sync_wave: "2"
          - name: developer-hub
            namespace: developer-hub
            path: developer-hub
            sync_wave: "2"
          - name: servicemeshoperator3
            namespace: openshift-operators
            path: servicemeshoperator3
            sync_wave: "3"
          - name: rhcl-operator
            namespace: openshift-operators
            path: rhcl-operator
            sync_wave: "3"
  template:
    metadata:
      name: '{{.name}}'
    spec:
      project: default
      source:
        repoURL: 'https://gitlab.com/maximilianoPizarro/connectivity-link.git'
        targetRevision: main
        path: '{{.path}}'
      destination:
        server: 'https://kubernetes.default.svc'
        namespace: '{{.namespace}}'
      syncPolicy:
        automated:
          selfHeal: true
          prune: true
        syncOptions:
          - CreateNamespace=true
```

Injecting the hostnames: connectivity-infra-rhbk

The hostnames themselves are set in a second ApplicationSet, connectivity-infra-rhbk, which deploys Red Hat build of Keycloak. If your deployment strategy relies heavily on Helm charts, you could instead pass the same generator values to a chart through the template's source.helm.parameters field. The manifest below uses Kustomize patches. Update the keycloak_host and app_host values in the generator element, and the patches inject them into the Keycloak, KeycloakRealmImport, Route, and OIDCPolicy resources:

```yaml
apiVersion: argoproj.io/v1alpha1
kind: ApplicationSet
metadata:
  name: connectivity-infra-rhbk
  namespace: openshift-gitops
spec:
  goTemplate: true
  generators:
    - list:
        elements:
          - name: rhbk
            namespace: rhbk-operator
            path: rhbk
            sync_wave: "2"
            keycloak_host: rhbk.apps.rm2.thpm.p1.openshiftapps.com
            app_host: neuralbank.apps.rm2.thpm.p1.openshiftapps.com
  template:
    metadata:
      name: '{{.name}}'
    spec:
      project: default
      source:
        repoURL: 'https://gitlab.com/maximilianoPizarro/connectivity-link.git'
        targetRevision: main
        path: '{{.path}}'
        kustomize:
          patches:
            - target:
                group: k8s.keycloak.org
                kind: Keycloak
                name: rhbk
              patch: |-
                - op: replace
                  path: /spec/hostname/hostname
                  value: "{{.keycloak_host}}"
            - target:
                group: k8s.keycloak.org
                kind: KeycloakRealmImport
                name: neuralbank-full-import
              patch: |-
                - op: replace
                  path: /spec/realm/clients/0/redirectUris/0
                  value: "https://{{.app_host}}/*"
            - target:
                group: route.openshift.io
                kind: Route
                name: neuralbank-external-route
              patch: |-
                - op: replace
                  path: /spec/host
                  value: "{{.app_host}}"
            - target:
                group: extensions.kuadrant.io
                kind: OIDCPolicy
                name: neuralbank-oidc
              patch: |-
                - op: replace
                  path: /spec/provider/issuerURL
                  value: "https://{{.keycloak_host}}/realms/neuralbank"
                - op: replace
                  path: /spec/provider/authorizationEndpoint
                  value: "https://{{.keycloak_host}}/realms/neuralbank/protocol/openid-connect/auth"
                - op: replace
                  path: /spec/provider/tokenEndpoint
                  value: "https://{{.keycloak_host}}/realms/neuralbank/protocol/openid-connect/token"
                - op: replace
                  path: /spec/provider/redirectURI
                  value: "https://{{.app_host}}/auth/callback"
      destination:
        server: 'https://kubernetes.default.svc'
        namespace: '{{.namespace}}'
      syncPolicy:
        automated:
          selfHeal: true
          prune: true
        syncOptions:
          - CreateNamespace=true
```

When configuring these values, keep in mind that keycloak_host should reflect your Keycloak instance's hostname, for example rhbk.apps.<your-cluster-domain>. The app_host represents your application's hostname, such as neuralbank.apps.<your-cluster-domain>. The beauty of this approach is that once you set these values, the Kustomize patches automatically propagate them to all relevant resources, including Keycloak configurations, Routes, and OIDC policies. This ensures consistency across your entire deployment.
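After Argo CD syncs connectivity-infra-rhbk, you can confirm that the patches landed on the live objects. This sketch reads the Route and Keycloak hostnames back with oc and compares them with the generator values. The function name is hypothetical. The namespaces are parameters because the manifests above don't show where those objects live. rhbk-operator, the ApplicationSet's destination namespace, is a reasonable first guess for Keycloak.

```shell
#!/usr/bin/env bash
# Hypothetical post-sync check: compare live hostnames with generator values.
# Usage: verify_hosts <app_host> <keycloak_host> <route_namespace> <keycloak_namespace>
verify_hosts() {
  local app_host="$1" keycloak_host="$2" route_ns="$3" kc_ns="$4"
  local live_route live_kc status=0
  live_route=$(oc get route neuralbank-external-route -n "$route_ns" \
    -o jsonpath='{.spec.host}') || return 1
  live_kc=$(oc get keycloaks.k8s.keycloak.org rhbk -n "$kc_ns" \
    -o jsonpath='{.spec.hostname.hostname}') || return 1
  if [ "$live_route" != "$app_host" ]; then
    echo "Route host is '${live_route}', expected '${app_host}'" >&2
    status=1
  fi
  if [ "$live_kc" != "$keycloak_host" ]; then
    echo "Keycloak hostname is '${live_kc}', expected '${keycloak_host}'" >&2
    status=1
  fi
  return "$status"
}
```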

Exploring the architecture

To truly appreciate the value of Connectivity Link, it's helpful to understand the evolution from a basic application deployment to a more secure zero trust architecture. Let's start by examining what a typical application looks like before we add security layers. Refer back to Figure 1.

Our starting point is the NeuralBank application, a representative microservices application that demonstrates real-world patterns. At its core, the application has a React-based frontend that provides the user interface, a RESTful API backend service that handles business logic, and a PostgreSQL database that stores application data. Even at this basic level, we're already leveraging modern infrastructure: an Istio service mesh provides mutual TLS (mTLS) encryption and traffic management between services, while a Kubernetes Gateway API handles external traffic ingress.

This foundation is functional, but it lacks the security controls modern applications require. Anyone who can reach the gateway can access the application. There's no mechanism to verify user identity or control access based on roles or permissions.

Transforming to zero trust: Adding security layers

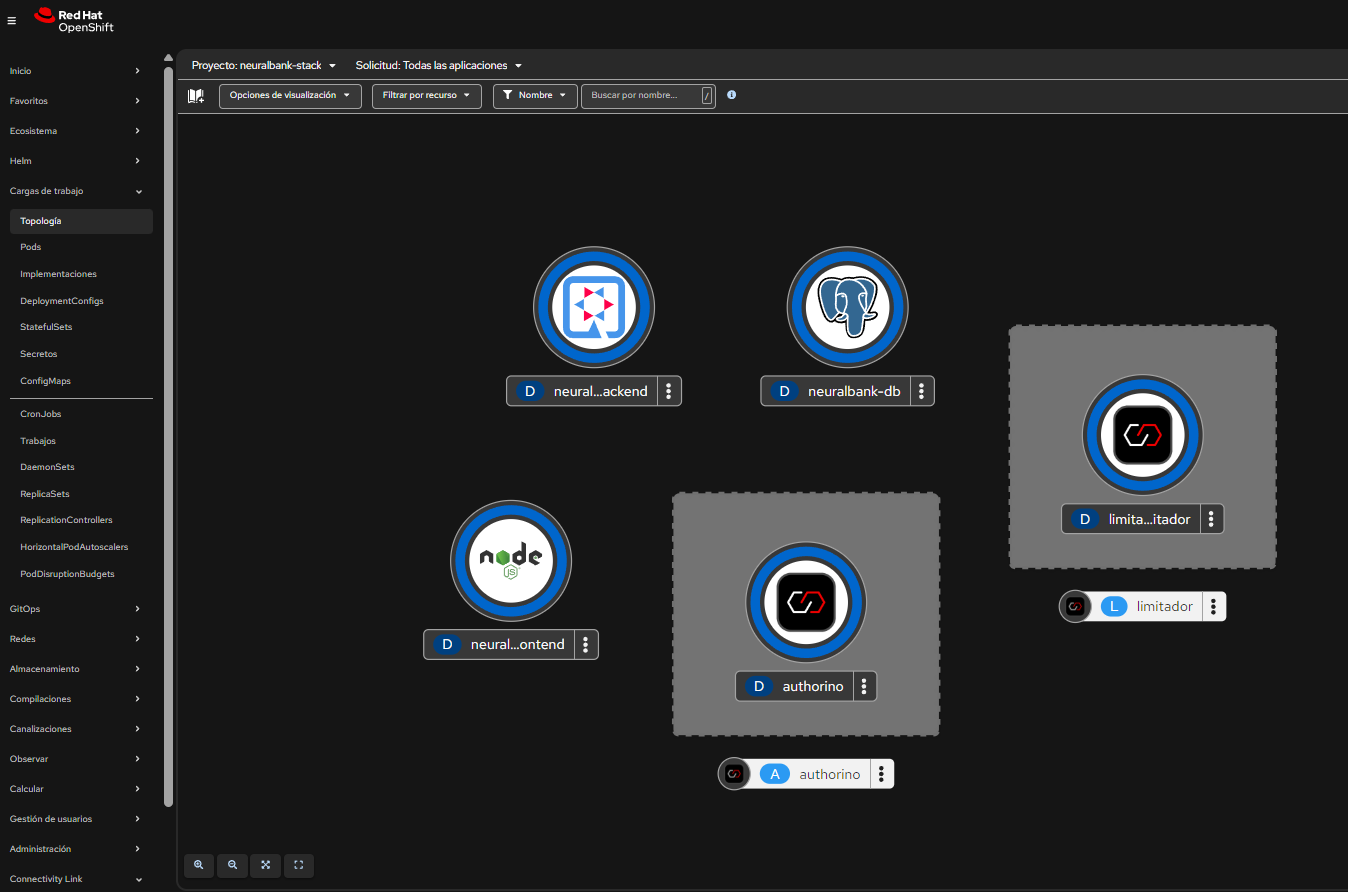

Now we transform this basic architecture into a zero trust implementation, as shown in Figure 2. The first layer we add is Keycloak, which serves as our Identity and Access Management (IAM) provider. Keycloak handles user authentication, managing identities, authentication flows, and token generation according to OIDC/OAuth2 protocols. It becomes the single source of truth for user identities and authentication status.

But authentication alone isn't enough—we also need authorization. This is where Authorino comes in. Acting as a policy-based authorization engine, Authorino enforces OIDC authentication at the API Gateway level, intercepting requests and validating tokens before allowing access to backend services. The authorization policies are defined through Kuadrant OIDCPolicy resources, which provide a declarative way to configure the authentication flow. Additionally, Kuadrant rate limiting protects APIs from abuse and prevents users from overwhelming the system with excessive requests.

Together, these components create a comprehensive security framework where every request is authenticated, authorized, and rate-limited before reaching the application.

The security stack: Understanding each component

To fully appreciate how this architecture achieves zero trust security, we need to understand how each security component works and how they integrate together. Let's examine the key players in our security stack.

Red Hat build of Keycloak: The identity foundation

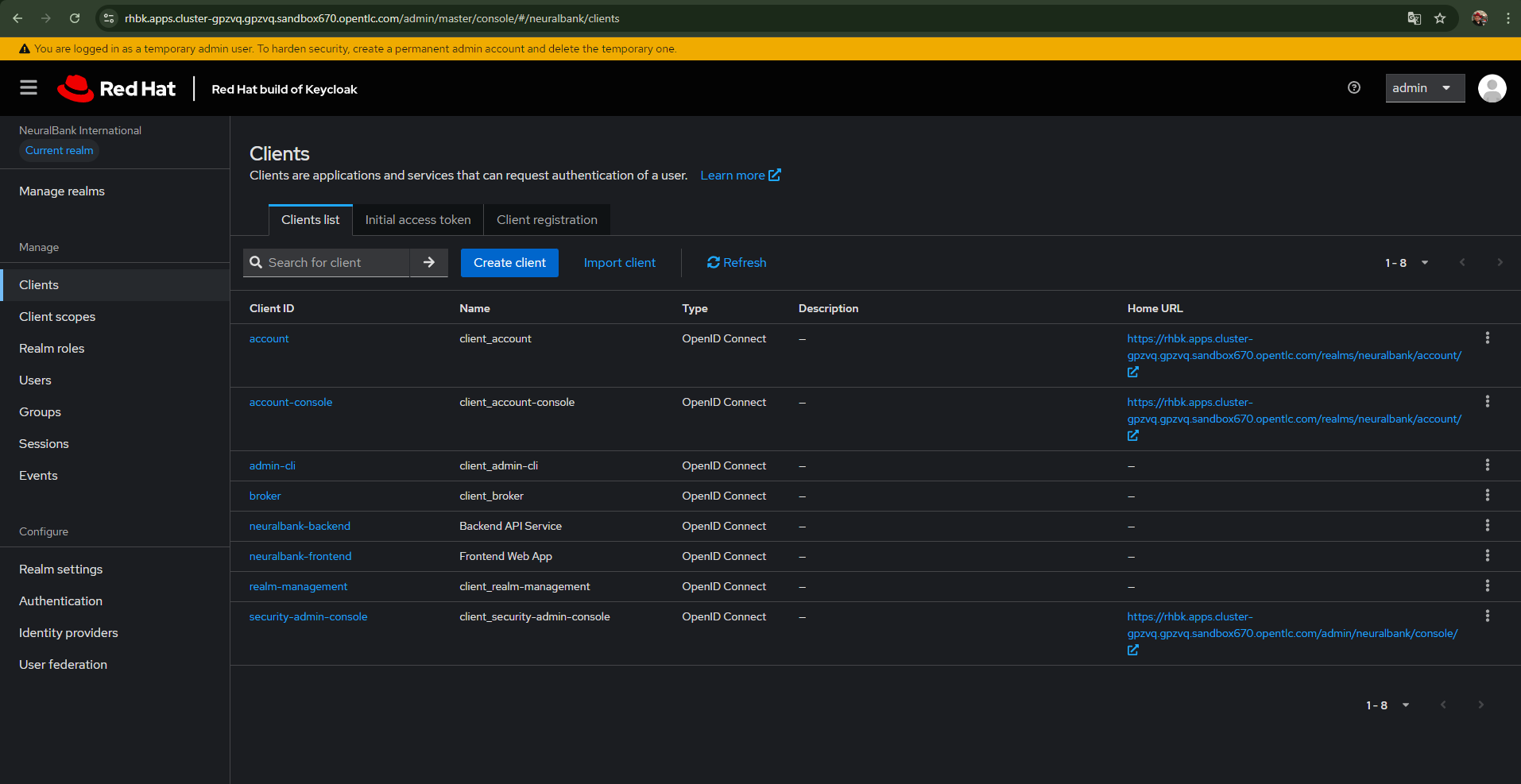

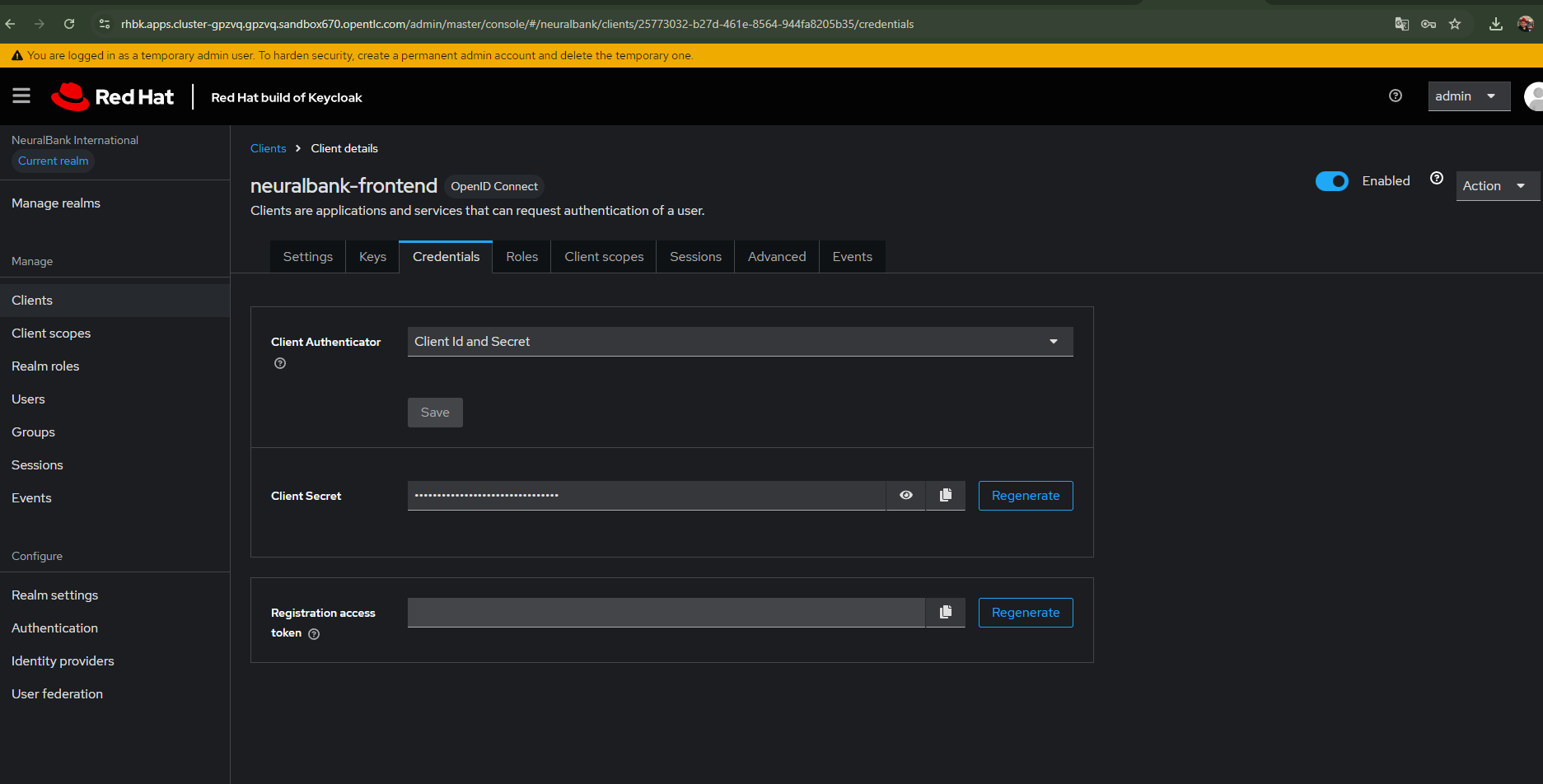

Keycloak (Figure 3) stands at the center of our authentication architecture, serving as the trusted authority that verifies user identities. When a user attempts to access the application, Keycloak is the first line of defense, managing the entire authentication process from initial login through token generation. It supports OIDC/OAuth2 protocols, which are industry standards for modern authentication, providing an interoperable way to handle identity verification.

What makes Keycloak particularly powerful in this context is its realm-based multi-tenancy, which allows you to isolate different applications or organizations while sharing the same Keycloak instance. Client authentication is handled through secrets, providing a mechanism for applications to identify themselves to Keycloak. The token-based authentication flows enable seamless single sign-on experiences, while the integration with OpenShift Service Mesh ensures that authentication works with the service mesh's security policies.

Authorino and Kuadrant: Policy enforcement at the Gateway

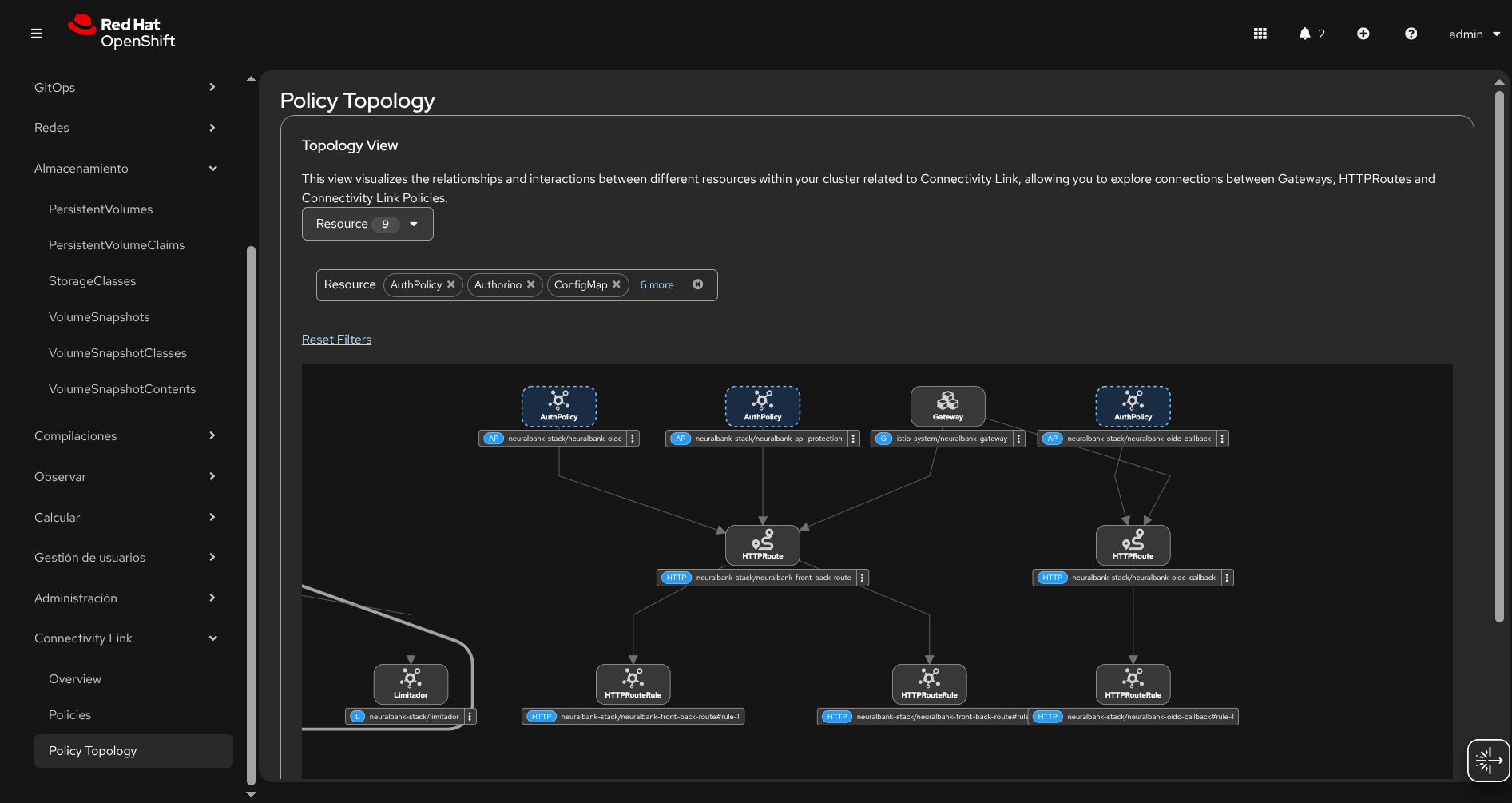

While Keycloak handles authentication (verifying identity), Authorino handles authorization (determining what authenticated users are allowed to do). Authorino operates as an external authorization service. It intercepts requests at the API Gateway level to enforce policies before traffic reaches backend services. This separation of concerns is a key principle of zero trust architecture: even if a user is authenticated, they must still be authorized for each specific action.

Kuadrant extends Authorino's capabilities with additional API management features, most notably rate limiting. This protection ensures that even legitimate users can't accidentally or intentionally overwhelm your APIs with excessive requests. The rate limiting policies are defined declaratively, making them easy to manage and version control alongside your application code.

The integration between Authorino and the Kubernetes Gateway API is particularly elegant. As requests flow through the gateway, Authorino validates OIDC tokens, checks authorization policies, and enforces rate limits—all before the request ever reaches your application. This means your application code can focus on business logic, confident that security is being handled at the infrastructure level. Additionally, Authorino supports API key management, providing flexibility for different types of clients and use cases.

Service mesh security: Protecting inter-service communication

Beyond the gateway-level security, the Istio service mesh adds another critical layer of protection: securing communication between services within your cluster. Even if an attacker somehow bypasses the gateway security, the service mesh ensures that inter-service communication is encrypted and controlled.

Mutual TLS (mTLS) encryption encrypts every connection between services, and both parties verify each other's identity. This means that even if network traffic is intercepted, it remains unreadable. Traffic policies provide fine-grained control over how services communicate, allowing you to implement security rules like "Service A can only communicate with Service B on port 8080" or "All traffic must use mTLS." Service isolation through network segmentation ensures that services can only communicate with explicitly authorized peers, following the principle of least privilege. See Figure 4.
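Istio expresses the "All traffic must use mTLS" rule mentioned above with a PeerAuthentication resource in STRICT mode. The following sketch prints one for a namespace of your choice. It is an illustration, not a manifest taken from the demo repository, and the helper name is hypothetical.

```shell
#!/usr/bin/env bash
# Prints an Istio PeerAuthentication that requires mTLS for every workload
# in the given namespace (illustrative; not part of the demo repository).
strict_mtls_manifest() {
  local namespace="$1"
  if [ -z "$namespace" ]; then
    echo "usage: strict_mtls_manifest <namespace>" >&2
    return 1
  fi
  cat <<EOF
apiVersion: security.istio.io/v1
kind: PeerAuthentication
metadata:
  name: default
  namespace: ${namespace}
spec:
  mtls:
    mode: STRICT
EOF
}
```

Pipe the output to oc apply -f - only for a namespace whose workloads are all in the mesh. STRICT mode rejects plaintext connections, so clients outside the mesh can no longer reach those workloads directly.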

Implementation deep dive: How it all comes together

Now that you understand the architecture and security components, let's explore how these pieces are actually implemented. The implementation uses Kubernetes-native resources and follows cloud-native best practices, making it both powerful and maintainable.

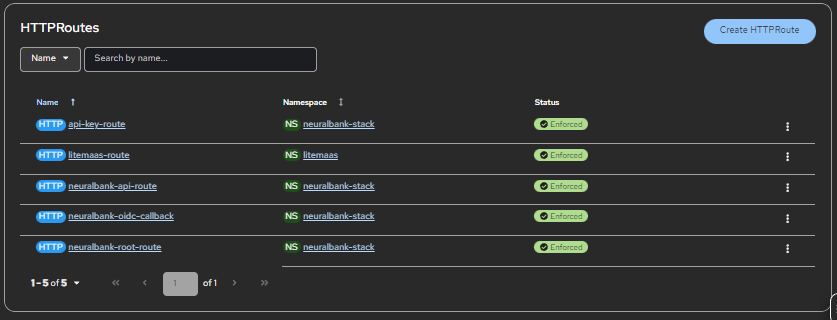

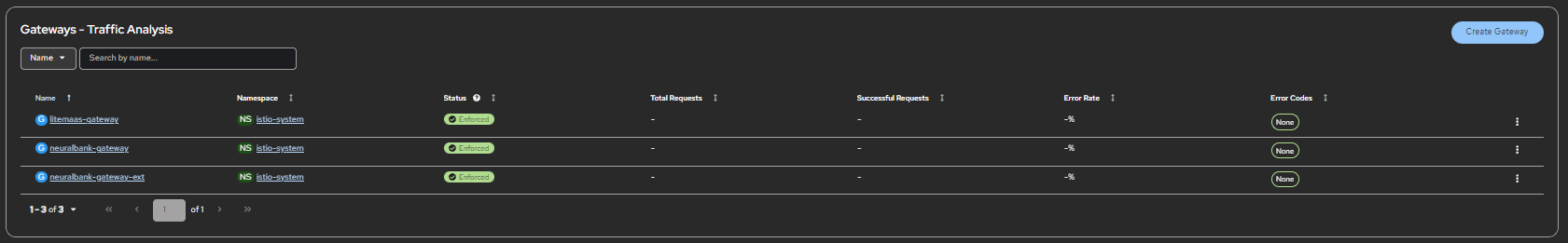

Gateway and HTTPRoute: Defining traffic entry points

In this architecture, Gateway resources serve as the entry point for all external traffic, defining listeners and protocols. HTTPRoute resources then specify the detailed routing rules that determine how traffic flows from the Gateway to backend services. This separation of concerns—gateway definition versus routing rules—is one of the elegant aspects of the Kubernetes Gateway API standard, which our implementation uses throughout.

The Gateway API represents a significant evolution from the traditional Ingress resource, providing more flexibility and better alignment with modern microservices patterns. Our implementation uses Istio's Gateway implementation, which provides full support for the Gateway API while adding Istio-specific capabilities like advanced traffic management and security policies.

When configuring an HTTPRoute, you'll work with several key elements. The parentRefs field references the Gateway resource that will handle the traffic—in our case, neuralbank-gateway in the istio-system namespace. The hostnames field specifies which hostnames this route will match, and it's crucial that these match the OpenShift Route hostname to ensure proper integration. Within the rules section, you define path-based routing: matches specify path patterns (like /api, /q, or /), while backendRefs point to the Kubernetes Services that should receive the matched traffic.

The following example shows how this works in practice. Here's the HTTPRoute configuration for neuralbank-api-route, shown in Figure 5:

```yaml
apiVersion: gateway.networking.k8s.io/v1
kind: HTTPRoute
metadata:
  name: neuralbank-api-route
spec:
  parentRefs:
    - name: neuralbank-gateway
      namespace: istio-system
  hostnames:
    - "neuralbank.apps.<your-cluster-domain>"
  rules:
    - matches:
        - path:
            type: PathPrefix
            value: /api
        - path:
            type: PathPrefix
            value: /q
      backendRefs:
        - name: neuralbank-backend-svc
          port: 8080
```

This configuration creates a route that matches requests under the /api and /q path prefixes and forwards them to the neuralbank-backend-svc service on port 8080. The beauty of this approach is its declarative nature: you describe what you want, and the system makes it happen.
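In the Gateway API, PathPrefix matching works on whole path segments, not raw string prefixes. /api matches /api and /api/accounts but not /apis, and a trailing slash on the prefix is ignored. The sketch below models that rule for the /api and /q prefixes this route serves, which can help when you're reasoning about why a request did or didn't reach the backend. It is a local illustration only (the gateway does the real matching), and the function names are hypothetical.

```shell
#!/usr/bin/env bash
# Local illustration of Gateway API PathPrefix semantics: matching is done on
# whole path segments, and a trailing '/' on the prefix is ignored.
path_prefix_matches() {
  local prefix="${1%/}" path="$2"
  [ -z "$prefix" ] && return 0   # the prefix "/" matches every path
  [ "$path" = "$prefix" ] || [ "${path#"$prefix"/}" != "$path" ]
}

# Which backend would the /api and /q matches of neuralbank-api-route pick?
# Any other path is not matched by this HTTPRoute.
backend_for_path() {
  local path="$1" prefix
  for prefix in /api /q; do
    if path_prefix_matches "$prefix" "$path"; then
      echo "neuralbank-backend-svc:8080"
      return 0
    fi
  done
  echo "no-match"
  return 1
}
```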

OIDCPolicy configuration: Securing your routes

The OIDCPolicy resource configures OIDC authentication for protected routes. It integrates with Authorino (through Kuadrant) to enforce authentication at the gateway level. When a user attempts to access a protected route, the OIDC policy orchestrates the authentication flow: it redirects unauthenticated users to Keycloak and validates tokens for authenticated requests.

Configuring an OIDC policy (Figure 6) requires attention to several key fields, each playing a specific role in the authentication process:

- provider.issuerURL: The Keycloak realm issuer URL.
  - Format: https://<keycloak-host>/realms/<realm-name>
  - Example: https://rhbk.apps.<your-cluster-domain>/realms/neuralbank

- provider.clientID: The Keycloak client ID (must match the client configured in Keycloak).
  - Example: neuralbank

- provider.clientSecret: This is a critical security credential that must be obtained from the Keycloak console after enabling client authentication. To retrieve it, log into the Keycloak console, navigate to your realm, select the Clients section, and choose your client. Then go to the Credentials tab, where you'll find the Client secret value. Once you have it, update the clientSecret field in your OIDC policy configuration. Important security note: Use a Kubernetes Secret instead of hardcoding this value in your YAML files for better security and easier rotation.

- provider.authorizationEndpoint: Keycloak authorization endpoint.
  - Format: https://<keycloak-host>/realms/<realm-name>/protocol/openid-connect/auth

- provider.redirectURI: OAuth callback URL (must match a redirect URI configured in the Keycloak client).
  - Example: https://neuralbank.apps.<your-cluster-domain>/auth/callback

- provider.tokenEndpoint: Keycloak token endpoint.
  - Format: https://<keycloak-host>/realms/<realm-name>/protocol/openid-connect/token

- targetRef: References the HTTPRoute resource that should be protected by this OIDC policy.
  - Example: neuralbank-api-route (protects the /api and /q endpoints)

- auth.tokenSource: Defines where to look for the authentication token.
  - authorizationHeader: Token in the Authorization: Bearer <token> header
  - cookie: Token stored in a cookie (for example, jwt)
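The issuer, endpoint, and redirect values all follow the formats above, so you can generate them from three inputs instead of typing each one. The function below is a hypothetical helper, and the hosts and realm in the tests are placeholders.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the OIDCPolicy provider URLs built from the
# formats listed above.
# Usage: oidc_provider_urls <keycloak-host> <realm-name> <app-host>
oidc_provider_urls() {
  if [ "$#" -ne 3 ]; then
    echo "usage: oidc_provider_urls <keycloak-host> <realm-name> <app-host>" >&2
    return 1
  fi
  local issuer="https://$1/realms/$2"
  printf 'issuerURL: %s\n' "$issuer"
  printf 'authorizationEndpoint: %s/protocol/openid-connect/auth\n' "$issuer"
  printf 'tokenEndpoint: %s/protocol/openid-connect/token\n' "$issuer"
  printf 'redirectURI: https://%s/auth/callback\n' "$3"
}
```

Keycloak also publishes the issuer and endpoints as standard OIDC discovery metadata at https://<keycloak-host>/realms/<realm-name>/.well-known/openid-configuration. That gives you a quick way to cross-check the generated values.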

Example OIDC policy:

```yaml
spec:
  provider:
    issuerURL: "https://rhbk.apps.<your-cluster-domain>/realms/neuralbank"
    clientID: neuralbank
    clientSecret: "<your-client-secret-from-keycloak>" # Update this!
    authorizationEndpoint: "https://rhbk.apps.<your-cluster-domain>/realms/neuralbank/protocol/openid-connect/auth"
    redirectURI: "https://neuralbank.apps.<your-cluster-domain>/auth/callback"
    tokenEndpoint: "https://rhbk.apps.<your-cluster-domain>/realms/neuralbank/protocol/openid-connect/token"
  targetRef:
    group: gateway.networking.k8s.io
    kind: HTTPRoute
    name: neuralbank-api-route
  auth:
    tokenSource:
      authorizationHeader:
        prefix: Bearer
        name: Authorization
      cookie:
        name: jwt
```

Important notes:

- You must update the clientSecret after generating it from the Keycloak console (see Step 3).

- The redirectURI must match one of the valid redirect URIs configured in the Keycloak client. Keycloak accepts a trailing * wildcard in those patterns, as in the https://<app-host>/* value set by the realm import.

- The hostname in HTTPRoute resources must match the hostname used in the OpenShift Route.

- All URLs (issuer, endpoints) must use HTTPS and match your cluster's domain configuration.
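Keycloak accepts a valid redirect URI pattern that ends with a * wildcard, as in the https://{{.app_host}}/* value that the realm import patch sets. The sketch below approximates that check locally so you can catch typos before syncing. It is a simplification, since Keycloak's real validation also normalizes URIs, and the function name is hypothetical.

```shell
#!/usr/bin/env bash
# Simplified approximation of Keycloak's redirect URI check: a URI is accepted
# if it equals an allowed value, or if an allowed value ends in '*' and the URI
# starts with everything before that '*'.
# Usage: redirect_uri_allowed <redirect-uri> <allowed-pattern>...
redirect_uri_allowed() {
  local uri="$1" pattern prefix
  shift
  for pattern in "$@"; do
    if [ "$uri" = "$pattern" ]; then
      return 0
    fi
    case "$pattern" in
      *'*')
        prefix="${pattern%\*}"   # strip the trailing wildcard
        if [ -z "$prefix" ] || [ "${uri#"$prefix"}" != "$uri" ]; then
          return 0
        fi
        ;;
    esac
  done
  return 1
}
```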

Service Mesh Operator 3

Service Mesh Operator (Istio) configurations for service mesh control plane and gateway:

- Service mesh control plane: Istio resource that deploys the control plane (version 1.27.3)

- Istio CNI: Istio CNI plug-in configuration

- Gateway: Kubernetes Gateway API Gateway resource with HTTP (8080) and HTTPS (443) listeners

- RBAC: ClusterRole/ClusterRoleBinding for Argo CD to manage Istio resources

Key features include:

- Istio service mesh control plane management

- Kubernetes Gateway API implementation

- Multi-protocol support (HTTP/HTTPS)

- Cross-namespace route support
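To confirm that the Gateway is in place with both listeners, you can query its spec and status. This sketch assumes the Gateway is named neuralbank-gateway in istio-system, as referenced by the HTTPRoute parentRefs earlier. It checks for the 8080 and 443 ports listed above and for the Gateway API Programmed condition. The function name is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical check: confirm the Gateway exposes listeners on 8080 and 443
# and that its Gateway API "Programmed" condition is True.
# The fully qualified resource name avoids confusion with Istio's own Gateway kind.
check_gateway() {
  local name="${1:-neuralbank-gateway}" ns="${2:-istio-system}"
  local resource="gateway.gateway.networking.k8s.io" ports programmed port
  ports=$(oc get "$resource" "$name" -n "$ns" \
    -o jsonpath='{.spec.listeners[*].port}') || return 1
  programmed=$(oc get "$resource" "$name" -n "$ns" \
    -o jsonpath='{.status.conditions[?(@.type=="Programmed")].status}') || return 1
  echo "listener ports: ${ports}"
  for port in 8080 443; do
    case " ${ports} " in
      *" ${port} "*) ;;
      *) echo "no listener on port ${port}" >&2; return 1 ;;
    esac
  done
  if [ "$programmed" != "True" ]; then
    echo "Gateway is not Programmed (status: '${programmed}')" >&2
    return 1
  fi
}
```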

Benefits of cloud-native integration

What makes this implementation particularly compelling is how it embodies cloud native principles. Integrating with a cloud native strategy and the Kuadrant project brings advantages that go beyond security. The simplicity is remarkable: by adding just a few manifest files with the appropriate configuration, you can transform your application's entire security posture. GitOps plays a crucial role here, applying these changes in a clean, reproducible way and enabling a true zero trust architecture implementation.

The power of this solution lies fundamentally in its declarative nature. Rather than writing scripts or manually configuring components, you define the desired state through Kubernetes manifests. The GitOps workflow then ensures that state is achieved and maintained automatically, continuously reconciling the actual cluster state with what's declared in Git. This approach eliminates manual configuration errors, provides complete audit trails through Git history, and enables rapid, consistent deployment across multiple environments.

The zero trust model is enforced comprehensively at every layer of the stack. Authentication happens through Keycloak, verifying user identity before any access is granted. Authorization occurs via Authorino, implementing policy-based access control that determines what authenticated users can actually do (see Figure 7). Rate limiting protects APIs from abuse, ensuring resource availability even under load. And service mesh policies help secure inter-service communication, ensuring that even internal traffic is encrypted and controlled.

Together, Connectivity Link and Kuadrant establish a security framework that scales naturally with your infrastructure. As you add new services or expand to new environments, the same patterns and policies apply, creating a robust foundation for modern microservices architectures that can grow with your organization's needs.

Key takeaways:

- No manual errors: All configuration resides in Git, eliminating manual configuration mistakes and providing complete audit trails

- Zero trust architecture: Authentication via Keycloak and fine-grained authorization via Authorino ensure that every request is verified and authorized

- Scalability: Rate limiting policies protect your APIs against traffic abuse, ensuring availability even under load

- Reproducibility: The declarative approach ensures your infrastructure is reproducible, auditable, and more secure by default

Step 2: Deploying the infrastructure

Once the hostnames are configured in your ApplicationSet definitions and the OpenShift GitOps operator is installed, you're ready to deploy the infrastructure. All you need to do is apply the top-level manifest file, and Argo CD takes over from there.

Use the following command to apply the ApplicationSet instance:

```shell
oc apply -f applicationset-instance.yaml
```

Argo CD will detect the defined resources and begin creating the namespaces, operators, and the service mesh (Red Hat OpenShift Service Mesh 3). The deployment follows a carefully orchestrated progression, with each stage building upon the previous one. The journey begins with namespace creation, where we establish the organizational boundaries for operators and applications. Next comes operator installation, where we deploy the essential operators: Service Mesh and Red Hat Connectivity Link. These operators provide the automation and management capabilities that make the rest of the deployment possible.

With operators in place, Argo CD moves to infrastructure setup, configuring the Service Mesh control plane and Gateway resources that will handle traffic routing and security policies. The authentication setup phase follows, deploying Keycloak and configuring realms and clients to establish the identity foundation. Then Argo CD deploys the NeuralBank application stack itself, bringing the actual application online. Finally, security configuration applies OIDC policies and rate limiting rules, completing the zero trust security implementation.

What's remarkable about this workflow is that it's entirely automated through GitOps. Once you apply the initial ApplicationSet, Argo CD takes over, managing each stage and ensuring that the deployment proceeds in the correct order, with proper dependencies respected. You can monitor the progress through the Argo CD console, watching as each component comes online and synchronizes with the desired state defined in Git (see Figure 9).
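If you prefer the terminal to the Argo CD console, you can list the generated Argo CD Applications and show only those that aren't ready yet. This sketch assumes the Applications live in the openshift-gitops namespace, which is where the ApplicationSets above are created. The function name is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: list Argo CD Applications that are not yet both
# Synced and Healthy. Returns 0 only when every Application is ready.
unready_apps() {
  local ns="${1:-openshift-gitops}" listing
  listing=$(oc get applications.argoproj.io -n "$ns" -o jsonpath='{range .items[*]}{.metadata.name}{" "}{.status.sync.status}{" "}{.status.health.status}{"\n"}{end}') || return 1
  if [ -z "$listing" ]; then
    echo "no Applications found in ${ns}" >&2
    return 1
  fi
  # Print each line whose sync or health status is not the ready value.
  printf '%s\n' "$listing" | awk '
    NF && !($2 == "Synced" && $3 == "Healthy") { print; bad = 1 }
    END { exit bad }'
}
```

A simple polling loop such as until unready_apps; do sleep 30; done keeps printing the stragglers until every Application reports Synced and Healthy.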

Step 3: Manual Keycloak configuration (OIDC)

After deploying Red Hat build of Keycloak, you must perform manual steps to make the OIDC flow work correctly. While most of the deployment is automated through GitOps, Keycloak requires some manual configuration to complete the authentication setup.

Accessing the Keycloak console

First, access the Keycloak console using the generated route. The route will typically follow the pattern rhbk.apps.<your-cluster-domain>, or in our example, rhbk.apps.rm2.thpm.p1.openshiftapps.com. You can find the exact route by checking the OpenShift routes in the Keycloak namespace.

Configuring the client

In the neuralbank realm, you need to configure the client that will be used for OIDC authentication. Navigate to the Clients section and select your client (typically named neuralbank). Here are the key configuration steps:

- Activate client authentication: Enable this setting to require the client to authenticate itself when making requests. This is important for secure OIDC flows, and it is what makes the Credentials tab (and therefore the client secret) available for the client.

- Enable direct access grants: This enables the resource owner password credentials grant, which lets the client obtain tokens directly with a user's credentials. It is convenient for command-line testing; the browser login itself uses the authorization code flow.

- Configure PKCE: Set the code challenge method to S256. PKCE (Proof Key for Code Exchange) adds an extra layer of security to the OAuth flow, particularly important for public clients.

- Set redirect URIs: Ensure that the redirect URIs match your application's callback URLs. These should follow the pattern https://neuralbank.apps.<your-cluster-domain>/*.
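To make the S256 setting concrete, this sketch computes a PKCE code challenge the way RFC 7636 defines it: the unpadded base64url encoding of the SHA-256 hash of the code verifier. Normally the OIDC client that starts the login redirect does this for you. The function names are hypothetical, and the sketch assumes openssl is installed.

```shell
#!/usr/bin/env bash
# PKCE S256 (RFC 7636): challenge = BASE64URL(SHA256(code_verifier)),
# with '=' padding removed. Requires openssl.

# Random code verifier: 32 random bytes, base64url-encoded (43 characters,
# all from the unreserved set RFC 7636 allows).
new_code_verifier() {
  openssl rand -base64 32 | tr '+/' '-_' | tr -d '=\n'
}

code_challenge_s256() {
  printf '%s' "$1" | openssl dgst -sha256 -binary | base64 | tr '+/' '-_' | tr -d '=\n'
}
```

The client sends the challenge with code_challenge_method=S256 in the authorization request and the original verifier in the token request. Keycloak recomputes the hash and rejects the exchange if the two don't match.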

Retrieving and configuring secrets

After configuring the client, you need to copy the Client Secret from the Credentials tab (Figure 10). This secret is critical for the OIDC policy configuration. Once you have the secret, update it in your rhcl-operator/oidc-policy.yaml file in Git. This ensures that Authorino can properly authenticate with Keycloak when validating tokens.

Security note: Consider using a Kubernetes Secret to store the client secret instead of hardcoding it in the YAML file. This provides better security and easier rotation capabilities.
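The sketch below stores the client secret in a Kubernetes Secret and reads the value from standard input so it doesn't land in shell history. Whether the OIDCPolicy can reference a Secret directly depends on its CRD schema, which this article doesn't show, so check with oc explain oidcpolicy.spec.provider. The function name, Secret name, namespace, and clientSecret key are all hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: store the Keycloak client secret in a Kubernetes Secret.
# Reads the value from stdin so it doesn't end up in shell history.
# Usage: store_client_secret <secret-name> <namespace> < secret.txt
store_client_secret() {
  local name="$1" namespace="$2" value
  IFS= read -r value || true
  if [ -z "$value" ]; then
    echo "error: no client secret provided on stdin" >&2
    return 1
  fi
  # Render the Secret client-side, then apply it so reruns update it in place.
  # Note: --from-literal briefly exposes the value in the process list.
  oc create secret generic "$name" -n "$namespace" \
    --from-literal=clientSecret="$value" \
    --dry-run=client -o yaml | oc apply -f -
}
```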

After completing these manual steps, the OIDC authentication flow will be fully functional. Users attempting to access protected routes will be redirected to Keycloak for authentication, and Authorino will validate the tokens before allowing access to backend services.
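When you debug this flow, it helps to look inside an access token and confirm that its iss claim equals the issuerURL in your OIDC policy. A JWT's payload is unpadded base64url-encoded JSON, so standard shell tools can decode it. Both function names are hypothetical. The claim extraction uses sed and assumes compact JSON, so use jq instead if you have it.

```shell
#!/usr/bin/env bash
# Decode the payload (second segment) of a JWT. JWTs use unpadded base64url,
# so translate the alphabet and restore padding before decoding.
jwt_payload() {
  local payload
  payload=$(printf '%s' "$1" | cut -d. -f2 | tr '_-' '/+')
  case $(( ${#payload} % 4 )) in
    2) payload="${payload}==" ;;
    3) payload="${payload}=" ;;
  esac
  printf '%s' "$payload" | base64 -d
}

# Print a top-level string claim such as "iss" (assumes compact JSON).
jwt_string_claim() {
  jwt_payload "$1" | sed -n "s/.*\"$2\":\"\([^\"]*\)\".*/\1/p"
}
```

For a token issued by the neuralbank realm, jwt_string_claim "$TOKEN" iss should print the same https://rhbk.apps.<your-cluster-domain>/realms/neuralbank value that the policy uses as its issuerURL.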

Making API calls: Testing the implementation

Once your deployment is complete and Keycloak is configured, you'll want to test the implementation by making API calls. Understanding how to interact with the secured endpoints is crucial for validating that everything works correctly.

Accessing unprotected endpoints

Some endpoints in your application may be intentionally left unprotected for public access. These can be accessed directly using curl or any HTTP client:

```shell
curl https://neuralbank.apps.rm2.thpm.p1.openshiftapps.com/
```

This should return the frontend application or a public endpoint without requiring authentication.

Accessing protected endpoints

Protected endpoints require authentication. When you attempt to access them without proper credentials, you'll be redirected to Keycloak for authentication. Here's how the flow works:

- Initial request: Make a request to a protected endpoint (for example, /api/accounts).

- Redirect to Keycloak: Authorino intercepts the request and redirects you to the Keycloak login page.

- Authentication: Enter your credentials in Keycloak.

- Token exchange: After successful authentication, Keycloak issues an OIDC token.

- Authorized request: The token is included in subsequent requests, and Authorino validates it before allowing access.
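To observe this behavior from a terminal, look at the raw status code and redirect target without following redirects. This sketch assumes an unauthenticated request to a protected path is answered either with a redirect (3xx) toward Keycloak, as the flow above describes, or with a 401/403. The function name and its output labels are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: classify how the gateway answers one request without
# following redirects. Prints "allowed <code>", "redirect <location>",
# "denied <code>", "rate-limited", or "other <code>".
probe_endpoint() {
  local url="$1" token="${2:-}" result code location
  local -a auth=()
  if [ -n "$token" ]; then
    auth=(-H "Authorization: Bearer ${token}")
  fi
  # %{redirect_url} is the Location target of a 3xx response (empty otherwise).
  result=$(curl -s -o /dev/null -w '%{http_code} %{redirect_url}' "${auth[@]}" "$url") || return 1
  code="${result%% *}"
  location="${result#* }"
  case "$code" in
    2??) echo "allowed ${code}" ;;
    3??) echo "redirect ${location}" ;;
    401|403) echo "denied ${code}" ;;
    429) echo "rate-limited" ;;
    *) echo "other ${code}" ;;
  esac
}
```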

Using Bearer tokens for API calls

Once authenticated, you can make API calls using the Bearer token in the Authorization header:

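```shell
curl -H "Authorization: Bearer <your-access-token>" \
  https://neuralbank.apps.rm2.thpm.p1.openshiftapps.com/api/accounts
```

You can obtain the access token through the OIDC flow. When using a browser, the token is typically stored in a cookie after the initial authentication. For programmatic access, you can use the OIDC token endpoint:

```shell
curl -X POST https://rhbk.apps.rm2.thpm.p1.openshiftapps.com/realms/neuralbank/protocol/openid-connect/token \
  -d "client_id=neuralbank" \
  -d "client_secret=<your-client-secret>" \
  -d "grant_type=client_credentials" \
  -d "scope=openid"
```

The client_credentials grant only works if service accounts are enabled for the neuralbank client in Keycloak (the Service accounts roles setting). The resulting token represents the client's service account rather than an end user. The access_token field of the JSON response is the value to use in the Authorization header.

Testing rate limiting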

To verify that rate limiting is working correctly, you can make multiple rapid requests to a protected endpoint:

for i in {1..20}; do

curl -H "Authorization: Bearer <your-access-token>" \

https://neuralbank.apps.rm2.thpm.p1.openshiftapps.com/api/accounts

echo "Request $i completed"

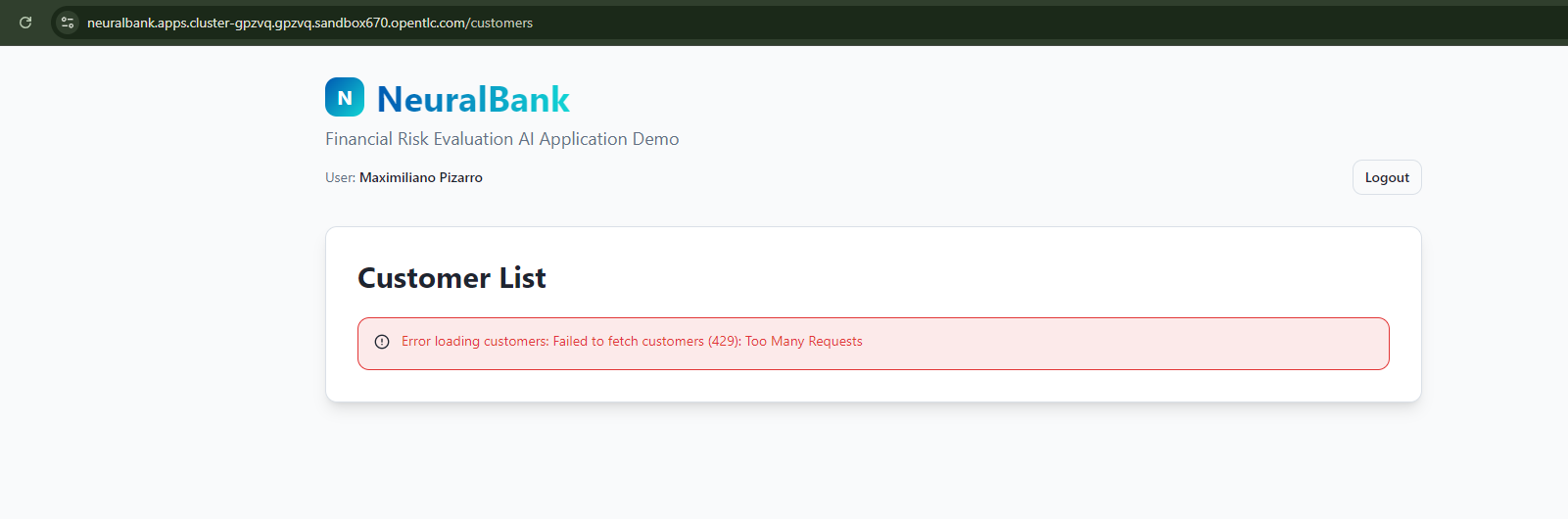

doneIf rate limiting is properly configured, you should see some requests return HTTP 429 (Too Many Requests) after exceeding the configured rate limit (Figure 10).
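To summarize a burst instead of reading each line, the following sketch sends a number of requests and counts the responses per status code. The function name and the default of 20 requests are hypothetical. The point at which 429 responses start depends on the rate limit policy in your repository, which this article doesn't show.

```shell
#!/usr/bin/env bash
# Hypothetical helper: send N requests and count responses by HTTP status.
# Prints one "<status> <count>" line per status code, sorted by status.
# Usage: burst <url> <token> [count]
burst() {
  local url="$1" token="$2" count="${3:-20}" i
  for (( i = 1; i <= count; i++ )); do
    curl -s -o /dev/null -w '%{http_code}\n' \
      -H "Authorization: Bearer ${token}" "$url"
  done | sort | uniq -c | awk '{ print $2, $1 }'
}
```

A result such as a few 200 lines followed by a 429 count confirms that the limit is being enforced at the gateway rather than by the backend.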

Conclusion

Red Hat Connectivity Link and Kuadrant provide a security framework that scales with your infrastructure. As you add services or expand to new environments, these patterns and policies provide a foundation for security-focused microservices architectures that grow with your organization. In the next article in this series, we will cover advanced policy configurations and multi-cluster connectivity. Read it here: Manage AI resource use with TokenRateLimitPolicy

Last updated: February 19, 2026