In the previous article, "How to import provider network routes to OpenShift via BGP," we demonstrated how to import provider network routes into Red Hat OpenShift Virtualization with the Border Gateway Protocol (BGP). This article demonstrates how to expose OpenShift networks with BGP to provide additional features to your virtual machines (VMs). This integration, primarily through FRRouting (FRR) and user-defined networks (UDNs), brings advanced routing and network segmentation benefits directly to virtual machines and containers on the same platform.

The benefits of BGP for OpenShift networking

As cloud-native and hybrid environments continue to grow, so do their networking demands. OpenShift needs robust, dynamic networking, which BGP provides. This integration overcomes traditional Kubernetes networking limits by enabling dynamic routing: routes are configured and updated automatically, and VMs migrated from other virtualization platforms are easier to accommodate, which improves flexibility and performance.

Exposing external IP addresses for OpenShift workloads provides direct access to pod IP subnets and enables the dynamic import and export of cluster routes. Furthermore, BGP's support for advanced features, such as Bidirectional Forwarding Detection (BFD), enables rapid failover and high availability, which are essential for maintaining application uptime.

Finally, BGP integration paves the way for EVPN and "no-overlay mode", which can increase throughput by removing encapsulation overhead. That efficiency gain is a good fit for virtualized workloads.

These BGP capabilities also let us migrate VMs from other virtualization platforms while keeping their original routing configuration.

Exporting network routes

The problem we address in this article is exporting network routes from OpenShift to a BGP router running on a customer's provider network, a typical scenario for hypervisors. With OpenShift (and OpenShift Virtualization), the solution is to dynamically expose the VM networks.

As usual in the OpenShift world, all the discussed configurations are declarative, utilizing YAML files, and are ready for use with the GitOps paradigm.

Other important features provided by BGP (not covered in this article) are:

- Enhanced connectivity for workloads (dynamic routing and reachability info)

- Advanced network segmentation and isolation

- High availability and fast convergence for VM networks

Prerequisites:

- OpenShift cluster (version 4.20 or later)

- Enable OpenShift's additional routing capabilities.

- Install and configure OpenShift Virtualization. Refer to the OpenShift documentation for instructions.

To enable the additional routing capabilities for our OpenShift cluster, we need to patch the network operator:

    oc patch Network.operator.openshift.io cluster --type=merge -p='{"spec":{"additionalRoutingCapabilities": {"providers": ["FRR"]}, "defaultNetwork":{"ovnKubernetesConfig":{"routeAdvertisements":"Enabled"}}}}'

Exposing the VM networks

To expose a network using BGP outside the OpenShift cluster, we need to follow these overall steps:

- Create the FRRConfiguration custom resource to peer with the provider's edge router.

- Create one or more Kubernetes namespaces for our VMs.

- Assign a dedicated primary network to those Kubernetes namespaces.

- Advertise the namespace's primary network to the external BGP router.

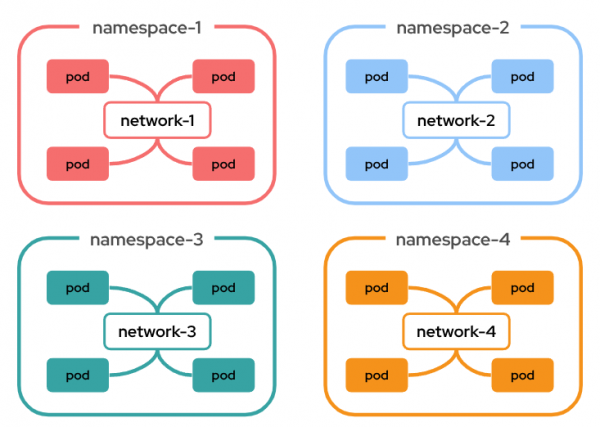

In OpenShift (using the default OVN-Kubernetes CNI plug-in), it is possible to assign a dedicated network to our namespaces (Figure 1).

Each namespace can have its own unique network that is isolated by default, as if the namespaces were disconnected network islands.

Thanks to the BGP integration, we can expose those networks to external BGP routers and let external entities directly connect to our VMs running in those namespaces.

For this example, the external BGP router has an IPv4 address of 172.22.0.1:

    enp3s0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq state UP group default qlen 1000
        link/ether 58:47:ca:7a:79:c2 brd ff:ff:ff:ff:ff:ff
        inet 172.22.0.1/24 brd 172.22.0.255 scope global noprefixroute enp3s0

This is the starting routing table of the external BGP router:

    default via 192.168.0.1 dev enp2s0 proto dhcp src 192.168.0.19 metric 100
    172.22.0.0/24 dev enp3s0 proto kernel scope link src 172.22.0.1 metric 101

Create the FRRConfiguration custom resource

Let's create the FRRConfiguration in OpenShift. As you can see, we are using 64512 as the autonomous system number (ASN) and the external BGP router's IP address as the BGP neighbor:

    apiVersion: frrk8s.metallb.io/v1beta1
    kind: FRRConfiguration
    metadata:
      name: receive-all
      namespace: openshift-frr-k8s
    spec:
      bgp:
        routers:
        - asn: 64512
          neighbors:
          - address: 172.22.0.1 # external BGP router IP
            asn: 64512
            disableMP: true
            toReceive:
              allowed:
                mode: all

Create Kubernetes namespaces for our VMs

To assign a dedicated network to our namespace, we need to add a special label at creation time. As of OpenShift 4.20, it is not possible to assign a dedicated primary network to a namespace after it has been created:

    apiVersion: v1
    kind: Namespace
    metadata:
      labels:
        k8s.ovn.org/primary-user-defined-network: ""
        cluster-udn: prod
      name: cudn-bgp-test

The label cluster-udn is a custom label, useful for namespace(s) selection.

Assign a dedicated primary network to the namespace

To assign a dedicated primary network, we must create a ClusterUserDefinedNetwork:

    apiVersion: k8s.ovn.org/v1
    kind: ClusterUserDefinedNetwork
    metadata:
      name: cluster-udn-prod
      labels:
        advertise: "true"
    spec:
      namespaceSelector:
        matchLabels:
          cluster-udn: prod
      network:
        layer2:
          ipam:
            lifecycle: Persistent
          role: Primary
          subnets:
          - 10.100.0.0/16
        topology: Layer2

We have assigned a 10.100.0.0/16 primary subnet (using the Layer 2 topology, which is a requirement for virtualization workloads) to namespaces that carry the label cluster-udn with a value of prod. In a nutshell, we are selecting the previously created namespace named cudn-bgp-test.
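The namespace selection described above can be illustrated with a minimal Python sketch. It assumes the usual Kubernetes matchLabels behavior, where a resource matches when every key/value pair in the selector is present in its labels. The helper `matches` and the namespace `other-namespace` are hypothetical and exist only for illustration. The other labels are copied from the manifests in this article.

```python
def matches(match_labels: dict[str, str], labels: dict[str, str]) -> bool:
    """Return True when every key/value pair in match_labels is present in labels.

    An empty match_labels dict matches everything, which mirrors how an
    empty Kubernetes label selector behaves.
    """
    return all(labels.get(key) == value for key, value in match_labels.items())


# Selector copied from the ClusterUserDefinedNetwork manifest.
selector = {"cluster-udn": "prod"}

# Labels of the namespace created earlier, plus a hypothetical unlabeled one.
namespaces = {
    "cudn-bgp-test": {
        "k8s.ovn.org/primary-user-defined-network": "",
        "cluster-udn": "prod",
    },
    "other-namespace": {},  # hypothetical, for contrast only
}

selected = [name for name, labels in namespaces.items() if matches(selector, labels)]
print(selected)  # ['cudn-bgp-test']
```

Only namespaces carrying cluster-udn: prod are attached to the network, so adding the same label to further namespaces at creation time would place them on the same 10.100.0.0/16 subnet.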

Advertise the namespace's primary network

The final step is advertising the namespace's primary network to the external BGP router. A dedicated custom resource, RouteAdvertisements, does this:

    apiVersion: k8s.ovn.org/v1
    kind: RouteAdvertisements
    metadata:
      name: default
    spec:
      nodeSelector: {}
      frrConfigurationSelector: {}
      networkSelectors:
      - networkSelectionType: ClusterUserDefinedNetworks
        clusterUserDefinedNetworkSelector:
          networkSelector:
            matchLabels:
              advertise: "true"
      advertisements:
      - "PodNetwork"

The RouteAdvertisements custom resource allows us to select the OpenShift nodes we want to use to advertise the route, the FRRConfiguration, and the actual primary network (ClusterUserDefinedNetwork) to advertise (again using labels).

Let's see it in action.

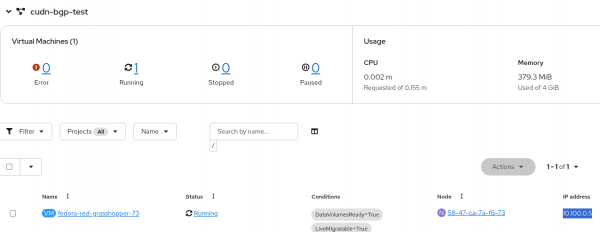

In OpenShift Virtualization, we need to create a VM in the namespace cudn-bgp-test (Figure 2).

The assigned IP address is 10.100.0.5, which is part of the configured network for the namespace.
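As a quick sanity check, the relationship between the VM address and the configured subnet can be verified with Python's standard ipaddress module. This is a minimal sketch using only values that appear in this article. The overlap check against the provider network is an added assumption about good practice, not a requirement stated here.

```python
import ipaddress

cudn_subnet = ipaddress.ip_network("10.100.0.0/16")      # from the ClusterUserDefinedNetwork
provider_subnet = ipaddress.ip_network("172.22.0.0/24")  # network of the external BGP router
vm_ip = ipaddress.ip_address("10.100.0.5")               # address assigned to the VM

vm_in_cudn = vm_ip in cudn_subnet
# Assumption: the advertised subnet should not collide with the provider network.
subnets_overlap = cudn_subnet.overlaps(provider_subnet)

print(f"{vm_ip} in {cudn_subnet}: {vm_in_cudn}")                          # True
print(f"{cudn_subnet} overlaps {provider_subnet}: {subnets_overlap}")    # False
print(f"addresses in {cudn_subnet}: {cudn_subnet.num_addresses}")        # 65536
```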

To confirm, we can check that the RouteAdvertisements resource named default has been created and accepted:

    oc get routeadvertisements.k8s.ovn.org default
    NAME      STATUS
    default   Accepted

Hint: We could also use ra as the short name for routeadvertisements.k8s.ovn.org.

On the external BGP router, we should now see a new route for 10.100.0.0/16, which is the network assigned to the cudn-bgp-test namespace.
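To check for this route from a script rather than by eye, one option is to filter `ip route` output for entries marked `proto bgp`. The sketch below assumes the iproute2 text format and uses the route lines reported for the external router in this article as sample input. The helper `bgp_routes` is hypothetical and is not part of any OpenShift or FRR tooling.

```python
import ipaddress

# Sample `ip route` output, copied from the external router's routing table in this article.
ROUTES = """\
default via 192.168.0.1 dev enp2s0 proto dhcp src 192.168.0.19 metric 100
10.100.0.0/16 nhid 18 via 172.22.0.11 dev enp3s0 proto bgp metric 20
172.22.0.0/24 dev enp3s0 proto kernel scope link src 172.22.0.1 metric 10
"""


def bgp_routes(text):
    """Return {prefix: next_hop} for every route line marked `proto bgp`."""
    routes = {}
    for line in text.splitlines():
        fields = line.split()
        # Skip blank lines and routes without a protocol or a next hop.
        if not fields or "proto" not in fields or "via" not in fields:
            continue
        if fields[fields.index("proto") + 1] != "bgp":
            continue
        prefix = ipaddress.ip_network(fields[0])
        routes[prefix] = ipaddress.ip_address(fields[fields.index("via") + 1])
    return routes


for prefix, next_hop in bgp_routes(ROUTES).items():
    print(f"{prefix} via {next_hop}")  # 10.100.0.0/16 via 172.22.0.11
```

On a live router, the same function could be fed the real output of `ip route`, for example captured with `subprocess.run(["ip", "route"], capture_output=True, text=True).stdout`.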

External BGP router routing table:

    default via 192.168.0.1 dev enp2s0 proto dhcp src 192.168.0.19 metric 100
    10.100.0.0/16 nhid 18 via 172.22.0.11 dev enp3s0 proto bgp metric 20
    172.22.0.0/24 dev enp3s0 proto kernel scope link src 172.22.0.1 metric 10

The ICMP protocol is enabled in the VM, so we should be able to ping the VM from the external router (the VM's IP is 10.100.0.5):

External BGP router:

    ping -c 3 10.100.0.5
    PING 10.100.0.5 (10.100.0.5) 56(84) bytes of data.
    64 bytes from 10.100.0.5: icmp_seq=1 ttl=63 time=2.87 ms
    64 bytes from 10.100.0.5: icmp_seq=2 ttl=63 time=1.14 ms
    64 bytes from 10.100.0.5: icmp_seq=3 ttl=63 time=0.519 ms

    --- 10.100.0.5 ping statistics ---
    3 packets transmitted, 3 received, 0% packet loss, time 2003ms
    rtt min/avg/max/mdev = 0.519/1.507/2.867/0.993 ms

In the VM, there is a running web server that we can reach from the external BGP router:

External BGP router:

    curl -v 10.100.0.5:80
    * Trying 10.100.0.5:80...
    * Connected to 10.100.0.5 (10.100.0.5) port 80
    > GET / HTTP/1.1
    > Host: 10.100.0.5
    > User-Agent: curl/8.9.1
    > Accept: */*
    >
    * Request completely sent off
    < HTTP/1.1 200 OK
    < Date: Thu, 11 Sep 2025 08:39:42 GMT
    < Server: Apache/2.4.64 (Fedora Linux)
    < Last-Modified: Thu, 11 Sep 2025 08:37:03 GMT
    < ETag: "b-63e827103b393"
    < Accept-Ranges: bytes
    < Content-Length: 11
    < Content-Type: text/html; charset=UTF-8
    <
    BGP Rules!
    * Connection #0 to host 10.100.0.5 left intact

Wrap up

Integrating BGP with OpenShift Virtualization via FRRouting and user-defined networks unlocks a powerful set of capabilities for modern cloud-native environments. By exposing VM networks outside the cluster, we align the OpenShift user experience with what a traditional virtualization user expects: direct ingress using the VM's IP addresses, which simplifies the networking configuration. The demonstrated use case highlights how BGP enhances flexibility, scalability, and performance, while features like fast failover with BFD and loop prevention support robust, high-availability operations.

For those looking to implement these features, start by ensuring your OpenShift cluster meets the prerequisites, and refer to the official documentation for deeper customization. Experimenting in a lab environment, as outlined here, can provide hands-on insight into the tangible benefits for your virtualized and containerized applications.