This article demonstrates how to import provider network routes into Red Hat OpenShift Virtualization with the Border Gateway Protocol (BGP). As cloud-native and hybrid environments grow, so do their networking demands. OpenShift needs robust, dynamic networks, which BGP provides: by enabling dynamic routing, this integration overcomes the limits of traditional Kubernetes networking.

This integration also automates route configuration, supports dynamic route updates, and simplifies virtual machine (VM) migration from platforms like VMware, which enhances flexibility and performance. Furthermore, BGP's support for Bidirectional Forwarding Detection (BFD) enables rapid failure detection and failover, which is essential for high availability and application uptime.

Kubernetes networking limitations

Traditional Kubernetes networking often has limitations, particularly in how it interacts with external network environments. Manual route configuration and the lack of native routing protocol support can hinder network flexibility and performance. BGP directly addresses these challenges by enabling the dynamic exchange of routing and reachability information, streamlining operations for containerized applications and VMs.

As organizations increasingly embrace cloud-native architectures and hybrid environments, networking demands grow more complex. Platforms like Red Hat OpenShift require robust, dynamic, and efficient networks. This is where integrating BGP and its ecosystem (for example, BFD) with cluster user-defined networks (UDNs) excels, because it provides:

- Enhanced connectivity for workloads (dynamic exchange of routing and reachability information)

- Advanced network segmentation and isolation

- Fast convergence for VM networks

Prerequisites

The following are the requirements for importing provider network routes into OpenShift:

- OpenShift cluster (version 4.20 or later)

- Enable OpenShift's additional routing capabilities.

- Install and configure OpenShift Virtualization. Please refer to the OpenShift documentation for instructions.
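The second prerequisite is applied with an oc patch --type=merge command. That command uses JSON Merge Patch (RFC 7386) semantics: nested objects are merged key by key, so fields already set in the cluster's Network operator configuration are preserved. The following Python sketch models that merge locally. The existing spec is hypothetical (the mtu value is illustrative only), and the patch body is the one used in this step. The function only mirrors the RFC 7386 algorithm and is not how oc is implemented.

```python
import copy
import json


def merge_patch(target, patch):
    """Apply a JSON Merge Patch (RFC 7386) to target and return a new result."""
    if not isinstance(patch, dict):
        # Non-object patch values (strings, numbers, lists) replace the target wholesale.
        return patch
    result = copy.deepcopy(target) if isinstance(target, dict) else {}
    for name, value in patch.items():
        if value is None:
            # A null value in the patch deletes the key from the target.
            result.pop(name, None)
        else:
            # Objects are merged recursively, key by key.
            result[name] = merge_patch(result.get(name), value)
    return result


# Hypothetical existing Network.operator.openshift.io spec (values are illustrative).
existing = {
    "spec": {
        "defaultNetwork": {
            "type": "OVNKubernetes",
            "ovnKubernetesConfig": {"mtu": 1400},
        }
    }
}

# Same patch body as the oc patch command in this step.
patch = json.loads(
    '{"spec":{"additionalRoutingCapabilities": {"providers": ["FRR"]}, '
    '"defaultNetwork":{"ovnKubernetesConfig":{"routeAdvertisements":"Enabled"}}}}'
)

patched = merge_patch(existing, patch)
print(json.dumps(patched, indent=2, sort_keys=True))
```

RFC 7386 replaces arrays wholesale. As a result, the providers list in the patch replaces any existing list rather than appending to it.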

To enable the additional routing capabilities (the FRR provider) and route advertisements on our OpenShift cluster, patch the cluster network operator configuration as follows:

```bash
oc patch Network.operator.openshift.io cluster --type=merge -p='{"spec":{"additionalRoutingCapabilities": {"providers": ["FRR"]}, "defaultNetwork":{"ovnKubernetesConfig":{"routeAdvertisements":"Enabled"}}}}'
```

How to import provider networks to the cluster default network

OpenShift clusters can dynamically import routes from external provider networks into Open Virtual Network (OVN), eliminating the need for manual, host-based route configuration. Routes then update automatically as the provider’s network changes, which is crucial for maintaining flexible and responsive network environments.

This dynamic routing mechanism can maintain connectivity through backup paths and traffic balancing, rerouting quickly upon failures to minimize downtime. BGP also prevents routing loops by carrying path information in its updates. Each route lists the autonomous systems it has traversed (its AS path), and a router rejects any route whose path already contains its own autonomous system number.
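The loop-prevention rule can be illustrated with a small Python model. This is a simplified sketch, not FRR's implementation: it ignores iBGP, path attributes other than the AS path, and options that relax loop detection. The Route type and the function names are hypothetical, and the re-advertisement back to the provider is a hypothetical step that the configuration in this article does not perform.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Route:
    prefix: str
    as_path: tuple  # ASNs the route has traversed, most recent first


def advertise(route: Route, sender_asn: int) -> Route:
    """An eBGP speaker prepends its own ASN before sending a route to a peer."""
    return Route(route.prefix, (sender_asn,) + route.as_path)


def accept(route: Route, local_asn: int) -> bool:
    """Loop detection: reject any route whose AS path already contains our ASN."""
    return local_asn not in route.as_path


PROVIDER_ASN = 64600  # provider edge router, as in this article
CLUSTER_ASN = 64512   # OpenShift nodes, as in this article

# The provider originates 172.26.0.0/16 and advertises it to the cluster.
learned = advertise(Route("172.26.0.0/16", ()), PROVIDER_ASN)
print(accept(learned, CLUSTER_ASN))   # True: the AS path is (64600,)

# Hypothetical: the cluster re-advertises the route back to the provider.
echoed = advertise(learned, CLUSTER_ASN)
print(accept(echoed, PROVIDER_ASN))   # False: 64600 is already in (64512, 64600)
```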

The following scenario demonstrates this feature and how to configure OpenShift to import routes from the provider network into the cluster.

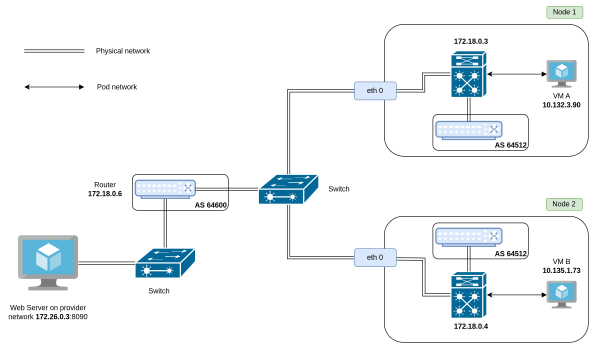

Our OpenShift cluster contains two schedulable nodes, interconnected by a top-of-rack switch and connected to a BGP speaker router. This router advertises one subnet to the OpenShift cluster, enabling a VM running in the cluster to reach a web server running in the provider network. Figure 1 shows the full addressing details: the router IP addresses, the autonomous system numbers (ASNs), and the web server's IP address and port.

We will now demonstrate the following two methods of importing routes from the provider network:

- Import to the default cluster network.

- Import to a cluster-wide UDN.

Both options require creating an FRRConfiguration custom resource (CR). The CR specifies which BGP speakers to peer with and, optionally, which prefixes to accept from the provider’s network. In both options, the provider’s edge router uses ASN 64600.
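The following Python sketch models what the optional prefix filter does to the routes a neighbor advertises. It is a simplification under stated assumptions. The function name is hypothetical. The sketch assumes that mode: filtered keeps only prefixes that exactly match an allowed entry, and that mode: all accepts everything. Any range matching the real frr-k8s API may offer is not modeled, and this is not the frr-k8s implementation.

```python
import ipaddress


def filter_received(advertised, allowed_prefixes, mode="filtered"):
    """Simplified model of a neighbor's toReceive.allowed settings.

    mode="all" accepts every advertised prefix (assumed semantics);
    mode="filtered" keeps only prefixes that exactly match an allowed entry.
    """
    if mode == "all":
        return list(advertised)
    allowed = {ipaddress.ip_network(p) for p in allowed_prefixes}
    return [p for p in advertised if ipaddress.ip_network(p) in allowed]


# Hypothetical advertisement: only 172.26.0.0/16 comes from this article.
advertised = ["172.26.0.0/16", "172.26.1.0/24", "10.10.0.0/16"]
print(filter_received(advertised, ["172.26.0.0/16"]))  # ['172.26.0.0/16']
print(filter_received(advertised, [], mode="all"))
```

Under exact matching, the more specific 172.26.1.0/24 is rejected even though it falls inside 172.26.0.0/16.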

Import provider routes to the default cluster network

Let’s start with the simpler configuration: importing provider routes to the default cluster network. In this scenario, we want to import only a subset of the provider’s prefixes, which requires a receive filter on the BGP neighbor. Here is the FRRConfiguration CR we need to provision for this use case.

FRRConfiguration is a custom resource, defined by a custom resource definition (CRD), that configures the FRRouting (FRR) instances running on the cluster nodes for BGP routing. Its primary purpose is to define and manage the BGP configuration of the nodes in a Kubernetes cluster, enabling dynamic, automated network routing for workloads such as VMs and containers.

```yaml
apiVersion: frrk8s.metallb.io/v1beta1
kind: FRRConfiguration
metadata:
  name: receive-filtered-default-network
  namespace: openshift-frr-k8s
spec:
  nodeSelector: {}
  bgp:
    bfdProfiles:
    - detectMultiplier: 4
      name: bfd-default
      receiveInterval: 200
      transmitInterval: 200
    routers:
    - asn: 64512
      neighbors:
      - address: 172.18.0.6
        asn: 64600
        bfdProfile: bfd-default
        toReceive:
          allowed:
            mode: filtered
            prefixes:
            - prefix: 172.26.0.0/16
```

NOTE: Because only a single neighbor is configured, this BFD configuration does not provide rerouting. It does, however, provide faster failure detection and convergence.
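To put the bfd-default profile in perspective: in asynchronous mode, RFC 5880 defines the detection time as the remote system's detect multiplier times the agreed transmit interval. The agreed interval is the larger of the local required minimum receive interval and the remote desired minimum transmit interval. The following Python sketch computes it. It assumes the provider's router is configured with the same values as bfd-default (multiplier 4, 200 ms intervals), and the function name is hypothetical.

```python
def bfd_detection_time_ms(remote_detect_mult: int,
                          local_required_min_rx_ms: int,
                          remote_desired_min_tx_ms: int) -> int:
    """Asynchronous-mode BFD detection time, following RFC 5880.

    The peer transmits at the slower of what we can receive (our required
    minimum RX interval) and what it wants to send (its desired minimum TX
    interval). The session is declared down after remote_detect_mult such
    intervals pass without a control packet.
    """
    agreed_interval_ms = max(local_required_min_rx_ms, remote_desired_min_tx_ms)
    return remote_detect_mult * agreed_interval_ms


# Assumes the provider router mirrors bfd-default: multiplier 4, 200 ms intervals.
print(bfd_detection_time_ms(4, 200, 200))  # 800
```

With these values, a failed link or peer is detected after about 800 ms instead of only when the BGP hold timer expires.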

After provisioning the CR, we can connect to one of the nodes and confirm that it has received the filtered prefix:

```
root@ovn-worker:/# ip route show proto bgp
172.26.0.0/16 nhid 38 via 172.18.0.6 dev breth0 metric 20
```

There is also one FRRNodeState object per OpenShift node. This CR holds the configuration of the FRR router running on that node.
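When you repeat the route check on several nodes, it can help to script it. The following Python sketch is a hypothetical helper, not part of OpenShift or frr-k8s. It parses the text output of ip route show proto bgp into prefix-to-next-hop pairs, using the line from this article as sample input. It assumes IPv4 and single-path routes, and it skips multipath (ECMP) entries rather than parsing them.

```python
import ipaddress


def parse_bgp_routes(output: str) -> dict:
    """Map each prefix to (next hop, device) from ip route text output.

    Hypothetical helper: assumes IPv4 and single-path routes. Multipath (ECMP)
    routes, printed as a header line plus indented "nexthop" lines, are skipped.
    """
    routes = {}
    for line in output.splitlines():
        tokens = line.split()
        # Skip blank lines, ECMP "nexthop" continuation lines, and gateway-less routes.
        if not tokens or tokens[0] == "nexthop" or "via" not in tokens or "dev" not in tokens:
            continue
        destination = "0.0.0.0/0" if tokens[0] == "default" else tokens[0]
        prefix = ipaddress.ip_network(destination)
        next_hop = ipaddress.ip_address(tokens[tokens.index("via") + 1])
        device = tokens[tokens.index("dev") + 1]
        routes[prefix] = (next_hop, device)
    return routes


# Sample output copied from the node in this article.
sample = "172.26.0.0/16 nhid 38 via 172.18.0.6 dev breth0 metric 20"
routes = parse_bgp_routes(sample)
print(routes)
```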

Now let's confirm that a VM attached to the cluster default network can reach the server, which exposes a web application on IP 172.26.0.3, port 8080.

```
# First, show the routes known to the guest
[fedora@red ~]$ ip r
default via 10.0.2.1 dev eth0 proto dhcp metric 100
10.0.2.0/24 dev eth0 proto kernel scope link src 10.0.2.2 metric 100

# Then, check the HTTP response headers
[fedora@red ~]$ curl -I 172.26.0.3:8080
HTTP/1.1 200 OK
Date: Tue, 09 Sep 2025 14:33:13 GMT
Content-Length: 16
Content-Type: text/plain; charset=utf-8
```

As the output shows, the guest has no route for 172.26.0.0/16; it only has its default gateway. The cluster infrastructure learns the BGP routes and forwards the packets accordingly.
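The forwarding decision the cluster makes for the guest's packet is a longest-prefix match. The following Python sketch is a simplified model of that lookup. It does not reproduce the OVN logical router pipeline or the node's kernel routing. The BGP route comes from this article, while the default route's next hop is a hypothetical placeholder.

```python
import ipaddress


def lookup(table: dict, destination: str):
    """Return the next hop of the most specific route containing destination."""
    dst = ipaddress.ip_address(destination)
    candidates = [net for net in table if dst in net]
    if not candidates:
        return None
    # The longest prefix (largest prefix length) wins.
    return table[max(candidates, key=lambda net: net.prefixlen)]


node_table = {
    ipaddress.ip_network("0.0.0.0/0"): "default-gateway",  # hypothetical placeholder
    ipaddress.ip_network("172.26.0.0/16"): "172.18.0.6",   # learned via BGP
}
print(lookup(node_table, "172.26.0.3"))  # 172.18.0.6
print(lookup(node_table, "8.8.8.8"))     # default-gateway
```

Without the BGP-learned /16 route, traffic to 172.26.0.3 would follow the default route instead of going to the provider's edge router.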

Import provider routes to a cluster UDN

Now let's demonstrate the more elaborate use case: importing provider routes into a cluster UDN. This requires a cluster UDN, which in turn requires at least one namespace. Both are defined in the following manifest:

```yaml
---
apiVersion: v1
kind: Namespace
metadata:
  name: blue
  labels:
    k8s.ovn.org/primary-user-defined-network: ""
---
apiVersion: k8s.ovn.org/v1
kind: ClusterUserDefinedNetwork
metadata:
  name: happy-tenant
spec:
  namespaceSelector:
    matchExpressions:
    - key: kubernetes.io/metadata.name
      operator: In
      values:
      - blue
  network:
    topology: Layer2
    layer2:
      role: Primary
      ipam:
        lifecycle: Persistent
      subnets:
      - 200.200.0.0/16
```

To import routes into a cluster UDN, you must first import them into the default virtual routing and forwarding (VRF) instance. Only then can you leak them from the default VRF into the VRF of the cluster UDN.
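The two-step import can be pictured with a toy model of per-VRF routing tables. The following Python sketch is a simplification with hypothetical function names, not FRR's route-leaking implementation. It shows that the tenant VRF, which has no BGP neighbor of its own, only sees the provider prefix after the routes are leaked from the default VRF. It also shows that a filtered-out prefix (a hypothetical 172.27.0.0/16) never reaches either table.

```python
import ipaddress

# Simplified model: one BGP routing table (prefix -> next hop) per VRF.
vrfs = {"default": {}, "happy-tenant": {}}


def receive(table: dict, advertised: dict, allowed: set) -> None:
    """Step 1: install filtered routes learned from the BGP peer into a VRF."""
    for prefix, next_hop in advertised.items():
        if prefix in allowed:
            table[prefix] = next_hop


def leak(source: dict, target: dict) -> None:
    """Step 2: copy (leak) the source VRF's routes into the target VRF.

    Routes already present in the target are kept (a simplifying assumption).
    """
    for prefix, next_hop in source.items():
        target.setdefault(prefix, next_hop)


provider_net = ipaddress.ip_network("172.26.0.0/16")  # from the article
other_net = ipaddress.ip_network("172.27.0.0/16")     # hypothetical, filtered out
gateway = ipaddress.ip_address("172.18.0.6")          # provider edge router

# The peering lives in the default VRF only; the tenant VRF has no BGP neighbor.
receive(vrfs["default"], {provider_net: gateway, other_net: gateway}, {provider_net})
print(vrfs["happy-tenant"])  # {} until the leak happens
leak(vrfs["default"], vrfs["happy-tenant"])
print(vrfs["happy-tenant"])
```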

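We will use the following configuration. The first router entry peers with the provider’s edge router in the default VRF and filters the received prefixes. The second entry imports the routes from the default VRF into the happy-tenant VRF.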

```yaml
apiVersion: frrk8s.metallb.io/v1beta1
kind: FRRConfiguration
metadata:
  name: receive-filtered-blue-cudn
  namespace: openshift-frr-k8s
spec:
  bgp:
    bfdProfiles:
    - detectMultiplier: 4
      name: bfd-default
      receiveInterval: 200
      transmitInterval: 200
    routers:
    - asn: 64512
      neighbors:
      - address: 172.18.0.6
        asn: 64600
        bfdProfile: bfd-default
        toReceive:
          allowed:
            mode: filtered
            prefixes:
            - prefix: 172.26.0.0/16
    - asn: 64512
      imports:
      - vrf: default
      vrf: happy-tenant
```

After provisioning the CR, we can connect to one of the nodes and confirm that the filtered prefix is present in the VRF created for the happy-tenant cluster UDN:

```
root@ovn-worker:/# ip route show proto bgp vrf happy-tenant
172.26.0.0/16 nhid 38 via 172.18.0.6 dev breth0 metric 20
```

Now let's confirm that a VM whose primary network is the happy-tenant cluster UDN, rather than the cluster default network, can reach the web server.

```
# First, show the routes known to the guest
[fedora@blue ~]$ ip r
default via 192.168.0.1 dev eth0 proto dhcp metric 100
192.168.0.0/16 dev eth0 proto kernel scope link src 192.168.0.6 metric 100

# Then, check the HTTP response headers
[fedora@blue ~]$ curl -I 172.26.0.3:8080
HTTP/1.1 200 OK
Date: Tue, 09 Sep 2025 14:33:13 GMT
Content-Length: 16
Content-Type: text/plain; charset=utf-8
```

Conclusion

Integrating BGP with OpenShift Virtualization through FRRouting and user-defined networks unlocks a powerful set of capabilities for modern cloud-native environments. Dynamic route import from provider networks addresses a key limitation of traditional Kubernetes networking by removing the need for manual route configuration. This greatly simplifies network configuration and enhances the flexibility and scalability of the overall solution. Features such as BFD-based fast failure detection and BGP loop prevention support robust, highly available operations.

If you are considering implementing these features, start by ensuring that your OpenShift cluster meets the requirements, and refer to the official documentation for deeper customization. Experimenting in a lab environment can provide hands-on insight into the tangible benefits for your virtualized and containerized applications.

Last updated: October 23, 2025