If you're running AI and machine learning workloads on Kubernetes, you're likely facing a familiar problem: resource management.

How do you stop critical jobs from being starved of GPUs by less urgent workloads? How do you ensure your production inference cluster always has the resources it needs? How do you fairly share expensive hardware between multiple teams running essential jobs?

This is a major challenge for any enterprise AI platform, and it's one that Red Hat OpenShift AI 3 is built to solve. The solution is a new integration for efficient resource control: KubeRay and Kueue on OpenShift AI.

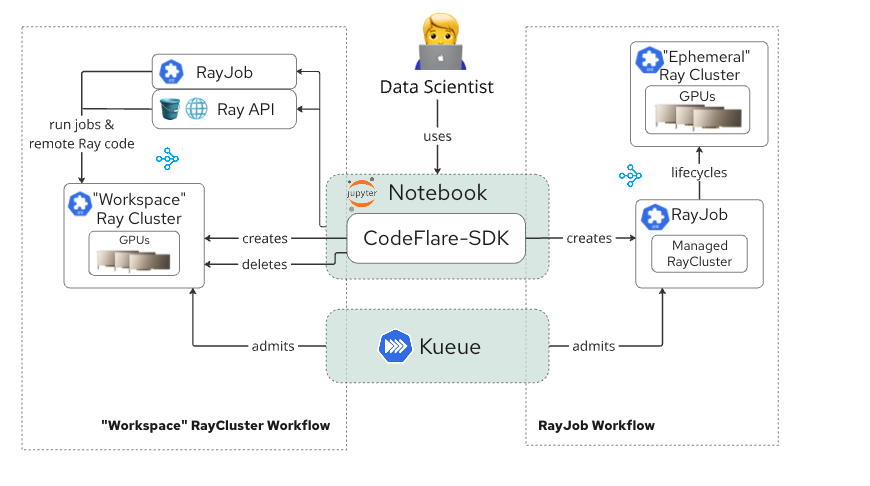

Kueue, the Kubernetes-native job queueing system, integrates directly with KubeRay. The new integration uses a built-in SDK on OpenShift AI to let you manage the entire lifecycle of RayCluster resources. This capability provides quota-aware, priority-driven scheduling for your complex Ray workloads.

Let's walk through how it works.

What you'll learn

This guide shows you how OpenShift AI 3 resolves resource contention for Ray workloads using three workflows:

- Long-running RayCluster: Your personal Ray laboratory
- Quick-iteration jobs: Fast feedback loops on your long-running RayCluster
- Ephemeral Ray clusters: Automated lifecycle management for self-cleaning jobs

These features are accessible to data scientists through a simple SDK integrated into Red Hat OpenShift AI 3 (Technology Preview).

Background: What are we working with?

First, let's briefly explain the key components:

- Ray: An open source framework for scaling Python workloads across multiple machines. Think of it as a way to turn your laptop code into distributed computing code with minimal changes.

- KubeRay: The Kubernetes operator that makes it possible to run and manage Ray on Kubernetes.

- Kueue: A Kubernetes-native job queuing system. It acts as a traffic controller for your cluster resources, ensuring fair sharing, priorities, and quotas are enforced.

- CodeFlare SDK: A Python SDK that lets data scientists interact with Ray on OpenShift AI without writing any Kubernetes YAML or learning kubectl commands.

KubeRay custom resource definitions (CRDs):

- RayCluster: A Kubernetes resource that represents a Ray cluster (one head node plus multiple worker nodes). Managing these on Kubernetes traditionally requires writing complex YAML manifests.
- RayJob: A higher-level resource that manages the lifecycle of a Ray workload. It can spin up a temporary RayCluster to run your code and automatically remove the infrastructure when it finishes, or submit against an existing RayCluster.
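To give a sense of what the SDK spares you from writing, here is a minimal sketch of a RayCluster resource expressed as a Python dictionary, roughly matching the two-worker, two-GPU workspace cluster used later in this guide. It assumes the KubeRay ray.io/v1 API. The image name and the CPU and memory values are placeholders, and the manifest the CodeFlare SDK actually generates contains more fields.

```python
import json

# Hypothetical placeholder image; substitute the Ray image your platform provides.
RAY_IMAGE = "example.com/ray:latest"


def ray_container(name: str, cpu: str, memory: str, gpus: int = 0) -> dict:
    """Build a container spec whose requests and limits are identical."""
    resources = {"cpu": cpu, "memory": memory}
    if gpus:
        resources["nvidia.com/gpu"] = str(gpus)
    return {
        "name": name,
        "image": RAY_IMAGE,
        "resources": {"requests": dict(resources), "limits": dict(resources)},
    }


def raycluster_manifest(name: str, queue: str, priority: str,
                        workers: int, gpus_per_worker: int) -> dict:
    """Sketch of a KubeRay RayCluster (ray.io/v1) labeled for Kueue."""
    return {
        "apiVersion": "ray.io/v1",
        "kind": "RayCluster",
        "metadata": {
            "name": name,
            "labels": {
                "kueue.x-k8s.io/queue-name": queue,  # LocalQueue used for admission
                "kueue.x-k8s.io/priority-class": priority,  # WorkloadPriorityClass
            },
        },
        "spec": {
            "headGroupSpec": {
                "rayStartParams": {},
                "template": {"spec": {"containers": [ray_container("ray-head", "2", "8Gi")]}},
            },
            "workerGroupSpecs": [{
                "groupName": "workers",
                "replicas": workers,
                "minReplicas": workers,
                "maxReplicas": workers,
                "rayStartParams": {},
                "template": {"spec": {"containers": [
                    ray_container("ray-worker", "2", "8Gi", gpus_per_worker)
                ]}},
            }],
        },
    }


manifest = raycluster_manifest("my-workspace-cluster", "ds-workspace-queue",
                               "rd-priority", workers=2, gpus_per_worker=1)
print(json.dumps(manifest, indent=2))
```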

Kueue CRDs:

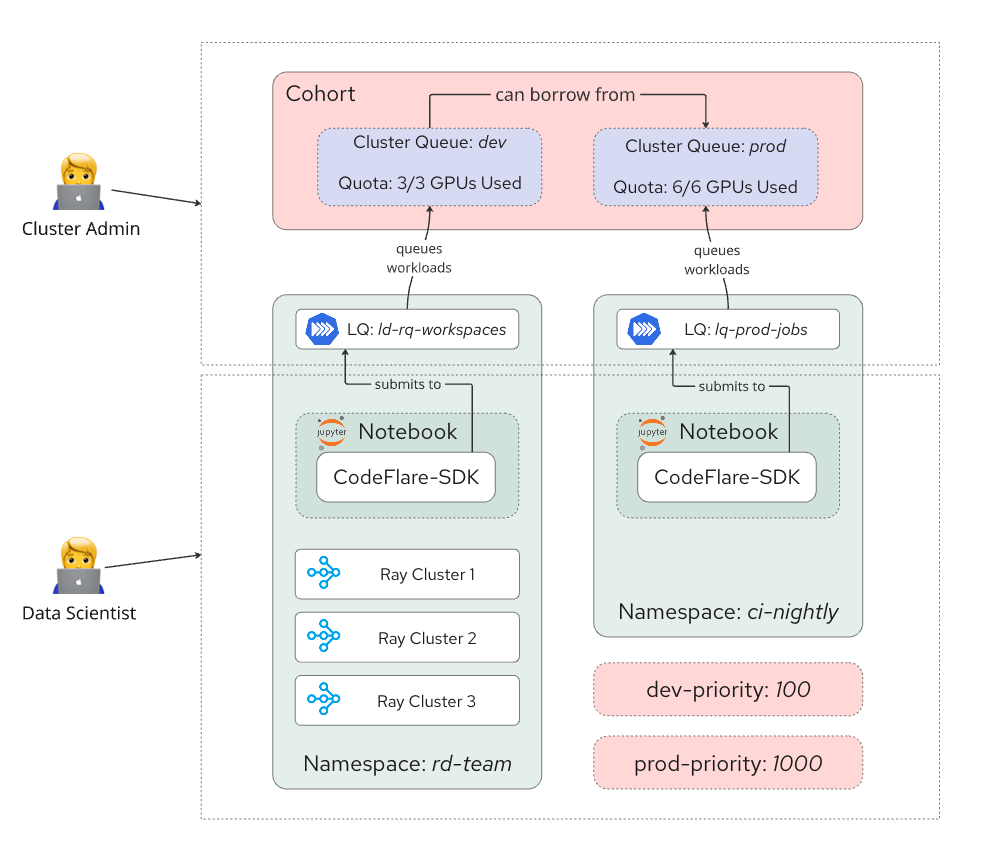

- LocalQueue: A namespaced entry point where teams submit their jobs. It aggregates a specific team's workloads and routes them to a ClusterQueue for admission.
- ClusterQueue: A global pool of resources that enforces usage limits and quotas. It governs admission and fair sharing for workloads funneled from multiple LocalQueue resources.
- Cohort: A grouping of ClusterQueue resources that enables sharing of unused quota. Busier queues can borrow excess capacity from idle queues.
- ResourceFlavor: Defines the hardware variations available to the cluster.
- WorkloadPriorityClass: Defines the importance of a job. This influences scheduling order and allows critical workloads to evict less important jobs.
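As a rough sketch of how these objects reference each other, the snippet below builds a ResourceFlavor and two LocalQueue resources as Python dictionaries. It assumes the kueue.x-k8s.io/v1beta1 API. The flavor name and the namespaces (rd-team, prod-team) are hypothetical; the queue names are the ones used in the scenario later in this guide.

```python
import json

KUEUE_API = "kueue.x-k8s.io/v1beta1"


def resource_flavor(name: str) -> dict:
    """ResourceFlavor with no node labels: it adds no node selectors to workloads."""
    return {"apiVersion": KUEUE_API, "kind": "ResourceFlavor", "metadata": {"name": name}}


def local_queue(name: str, namespace: str, cluster_queue: str) -> dict:
    """Namespaced LocalQueue that forwards its workloads to a ClusterQueue."""
    return {
        "apiVersion": KUEUE_API,
        "kind": "LocalQueue",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {"clusterQueue": cluster_queue},
    }


objects = [
    resource_flavor("default-flavor"),  # hypothetical flavor name
    local_queue("lq-rd-workspace", "rd-team", "cq-development"),  # hypothetical namespace
    local_queue("lq-prod-batch", "prod-team", "cq-production"),  # hypothetical namespace
]
for obj in objects:
    print(json.dumps(obj, indent=2))
```

Workloads opt in by carrying the kueue.x-k8s.io/queue-name label set to a LocalQueue name in their own namespace; the ClusterQueue behind that LocalQueue then decides when they are admitted.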

The following sections describe the workflows available in Ray and Kueue, as shown in Figure 1.

Long-running RayCluster: Your personal Ray workspace

Use case: A data scientist needs a persistent environment for interactive development. This environment supports prototyping models, running exploratory analysis with Ray Data, or connecting to live systems for tasks like Feast feature engineering.

The problem

You can't prototype a 4-GPU model on your laptop. You need to run interactive, exploratory code, like a Feast feature engineering task or a Ray Data exploration, on a powerful, multinode cluster. But you don't want to start a new cluster for every notebook cell you execute.

The solution: A long-lived workspace cluster

The CodeFlare SDK allows you to define and manage this persistent cluster with a few lines of Python:

```python
from codeflare_sdk import Cluster, ClusterConfiguration, TokenAuthentication

# Authenticate to RHOAI (replace the placeholders with your token and API server URL)
auth = TokenAuthentication(
    token="XXXXX",
    server="XXXXX",
    skip_tls=False,
)
auth.login()

# Define your workspace cluster
workspace_cluster = Cluster(
    ClusterConfiguration(
        name="my-workspace-cluster",
        num_workers=2,
        worker_cpu_requests=2,
        worker_memory_requests=8,
        worker_extended_resource_requests={"nvidia.com/gpu": 1},  # 1 GPU per worker, 2 GPUs total
        local_queue="ds-workspace-queue",  # The team's development queue
        labels={"kueue.x-k8s.io/priority-class": "rd-priority"},  # The R&D priority
    )
)

# Start your cluster and wait until it is ready
workspace_cluster.apply()
workspace_cluster.wait_ready()
```

You now have a personal Ray cluster that runs as long as you need. You can connect to it from your notebook by explicitly pointing to its address. This is ideal for any task where you use ray.init() to connect your local notebook directly to the cluster for interactive exploration:

```python
# Connect to Ray for interactive work
import ray

ray.init(workspace_cluster.cluster_uri())

# Now you can use Ray interactively
@ray.remote
def train_model(batch):
    # Your ML code here; this placeholder just returns the batch size
    return len(batch)

data = [[1, 2, 3], [4, 5, 6]]  # Placeholder batches; use your own data
results = ray.get([train_model.remote(batch) for batch in data])
```

When you're done for the day (or week), clean up:

```python
workspace_cluster.down()  # Resources immediately returned to the queue
```

The quick iteration loop: Running jobs on your workspace

Use case: You moved your code from a notebook into a .py script and want to test it as a self-contained job (for example, on a data sample) before running it at scale. Note: This workflow requires the Red Hat build of Kueue 1.4.

The problem

Your code has moved from a notebook into a test_model.py script. You are now in the "code-run-check" loop, and startup latency is your enemy. Starting an ephemeral cluster for every test is too slow: you might wait up to five minutes just for the cluster to pull its container images, only to discover a typo. You need subsecond submission times to iterate quickly.

The solution: Submit jobs to your existing workspace cluster

The CodeFlare SDK provides the RayJob object to submit a job to your existing cluster for quick iteration:

```python
from codeflare_sdk import RayJob

# Define a job that runs on your EXISTING workspace cluster
quick_job = RayJob(
    job_name="quick-dev-test",
    entrypoint="python test_model.py",
    cluster_name="my-workspace-cluster",  # Points to your running cluster
    namespace="your-namespace",
    runtime_env={
        "working_dir": ".",  # Uses your current directory
        "pip": "requirements.txt",  # Installs dependencies
    },
)

# Submit the job (instant - no cluster startup wait)
quick_job.submit()

# Check status
quick_job.status()

# Get logs
quick_job.logs()
```

This workflow is the bridge between interactive prototyping and an automated job. It lets you test your full script (test_model.py), including its runtime_env and dependencies, without waiting for a new cluster to spin up.
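At the Kubernetes level, KubeRay lets a RayJob target an already-running cluster through its clusterSelector field instead of embedding a cluster definition, which is why no new pods have to start. The sketch below shows that shape as a Python dictionary. It assumes the KubeRay ray.io/v1 API and is not necessarily the exact resource the CodeFlare SDK creates.

```python
import json


def rayjob_on_existing_cluster(job_name: str, cluster_name: str, entrypoint: str) -> dict:
    """Sketch of a KubeRay RayJob (ray.io/v1) that reuses a running RayCluster."""
    return {
        "apiVersion": "ray.io/v1",
        "kind": "RayJob",
        "metadata": {"name": job_name},
        "spec": {
            "entrypoint": entrypoint,
            # clusterSelector names an existing RayCluster, so there is no
            # rayClusterSpec and no head or worker pods to wait for.
            "clusterSelector": {"ray.io/cluster": cluster_name},
        },
    }


job = rayjob_on_existing_cluster("quick-dev-test", "my-workspace-cluster",
                                 "python test_model.py")
print(json.dumps(job, indent=2))
```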

The ephemeral cluster: Self-service, automated jobs

Use case: Your code is tested and ready for an automated, "fire-and-forget" run. This could be a 12-hour Ray Train job, a nightly Ray Data batch inference job, or a large-scale experiment. The cluster can be created for the job and automatically deleted on completion.

The problem

You have two new challenges:

- Resource management: You need 8 GPUs, but so do other teams. How do you submit your job without starving their critical workloads? And how does the platform ensure your job gets the resources it needs without manual intervention?

- Resource waste: You don't want to manually manage infrastructure. You need a cluster that is created for your job and automatically destroyed the second it finishes, ensuring zero idle time and zero wasted cost.

The solution: Job-managed, ephemeral clusters

This is the most powerful workflow. Instead of creating a cluster and submitting jobs to it, you define the resources your job needs, and the platform handles everything else.

```python
from codeflare_sdk import RayJob, ManagedClusterConfig

# Define a job with embedded cluster requirements
production_job = RayJob(
    job_name="final-training-run",
    local_queue="batch-jobs-queue",  # Production team's queue
    cluster_config=ManagedClusterConfig(  # Define cluster inline
        num_workers=4,
        worker_cpu_requests=8,
        worker_cpu_limits=8,
        worker_memory_requests=16,
        worker_memory_limits=16,
        worker_accelerators={"nvidia.com/gpu": 2},  # 2 GPUs per worker, 8 GPUs total
    ),
    entrypoint="python train_model_full.py",
    runtime_env={
        "working_dir": "https://github.com/your-org/your-repo/archive/main.zip",
        "pip": "./path/to/requirements.txt",
    },
    labels={"kueue.x-k8s.io/priority-class": "prod-priority"},
)

# Submit and forget
production_job.submit()
```

The data scientist never touches infrastructure. They just define what they need and let the platform handle the lifecycle.
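For comparison, the sketch below shows roughly what a self-contained, ephemeral RayJob looks like as a KubeRay resource: the cluster definition is embedded in rayClusterSpec, and shutdownAfterJobFinishes tells KubeRay to delete that cluster when the job ends. It assumes the ray.io/v1 API and Kueue's queue-name and priority-class labels; the image name and head resources are placeholders, and the resource the CodeFlare SDK actually submits may differ.

```python
import json

RAY_IMAGE = "example.com/ray:latest"  # hypothetical placeholder image


def worker_container(cpu: str, memory: str, gpus: int) -> dict:
    """Worker container spec with identical requests and limits."""
    resources = {"cpu": cpu, "memory": memory, "nvidia.com/gpu": str(gpus)}
    return {"name": "ray-worker", "image": RAY_IMAGE,
            "resources": {"requests": dict(resources), "limits": dict(resources)}}


def ephemeral_rayjob(name: str, queue: str, priority_class: str, entrypoint: str,
                     workers: int, gpus_per_worker: int) -> dict:
    """Sketch of a KubeRay RayJob (ray.io/v1) that brings its own RayCluster."""
    head = {"name": "ray-head", "image": RAY_IMAGE,
            "resources": {"requests": {"cpu": "2", "memory": "8Gi"}}}
    return {
        "apiVersion": "ray.io/v1",
        "kind": "RayJob",
        "metadata": {
            "name": name,
            "labels": {
                "kueue.x-k8s.io/queue-name": queue,  # LocalQueue to submit to
                "kueue.x-k8s.io/priority-class": priority_class,  # WorkloadPriorityClass
            },
        },
        "spec": {
            "entrypoint": entrypoint,
            "suspend": True,  # created suspended; Kueue unsuspends it on admission
            "shutdownAfterJobFinishes": True,  # delete the RayCluster when the job ends
            "rayClusterSpec": {
                "headGroupSpec": {"rayStartParams": {},
                                  "template": {"spec": {"containers": [head]}}},
                "workerGroupSpecs": [{
                    "groupName": "gpu-workers",
                    "replicas": workers,
                    "minReplicas": workers,
                    "maxReplicas": workers,
                    "rayStartParams": {},
                    "template": {"spec": {"containers": [
                        worker_container("8", "16Gi", gpus_per_worker)
                    ]}},
                }],
            },
        },
    }


def total_gpus(job: dict) -> int:
    """Sum GPU limits across all worker replicas in the embedded cluster."""
    total = 0
    for group in job["spec"]["rayClusterSpec"]["workerGroupSpecs"]:
        for container in group["template"]["spec"]["containers"]:
            gpus = int(container["resources"]["limits"].get("nvidia.com/gpu", "0"))
            total += group["replicas"] * gpus
    return total


job = ephemeral_rayjob("final-training-run", "batch-jobs-queue", "prod-priority",
                       "python train_model_full.py", workers=4, gpus_per_worker=2)
print(total_gpus(job))  # 8
print(json.dumps(job, indent=2))
```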

Why this matters: Real-world scenarios

Let's compare two setups for the same common scenario: operating without Kueue, and using Kueue and Ray together.

Without Kueue: The manual admin gatekeeper

This is a standard Kubernetes or OpenShift AI cluster with the KubeRay operator, but no Kueue. To prevent users from creating clusters that conflict, the company has made the platform admin the manual gatekeeper for all resources.

The problem: High cost and difficulty

This manual approach is a drain on the organization.

- High cost (idle waste): If a data scientist finishes at 3 PM but forgets to file a deletion ticket, a 2-GPU cluster—which can cost $3-10 per hour—sits idle for five hours. This idle waste, scaled across a whole team, costs the company.

- Resource hoarding: Data scientists know the request process is slow. When they finally get a 6-GPU cluster, they might keep it "just in case" they need it, even if they aren't actively using it. Utilization plummets.

- Zero efficiency (no borrowing): The 12 GPUs reserved for the production job sit 100% idle for 16 hours a day. There is no mechanism to safely lend this massive, expensive block of capacity to the R&D team. The cluster's maximum possible utilization is permanently capped.

- Admin as bottleneck: The administrator is a human queue. Every request is blocked on one person's availability.

- Productivity loss: Data scientists spend their time waiting in queues and filing tickets instead of doing research.
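The idle-waste figure is easy to quantify. The calculation below uses the article's numbers ($3 to $10 per hour for a 2-GPU cluster, five idle hours); the team size of 10 data scientists and 20 workdays a month are hypothetical values chosen only to illustrate how the waste scales.

```python
def idle_cost(hourly_rate: float, idle_hours: float, clusters: int = 1) -> float:
    """Cost of idle clusters: hourly rate x idle hours x number of clusters."""
    return hourly_rate * idle_hours * clusters


low = idle_cost(3, 5)    # one 2-GPU cluster at $3/hour, idle for five hours
high = idle_cost(10, 5)  # the same cluster at $10/hour
print(f"One forgotten cluster: ${low:.0f} to ${high:.0f}")  # One forgotten cluster: $15 to $50

# Hypothetical: 10 data scientists, each leaving one cluster idle, 20 workdays a month.
occurrences = 10 * 20
print(f"Team, per month: ${idle_cost(3, 5, occurrences):,.0f} "
      f"to ${idle_cost(10, 5, occurrences):,.0f}")  # Team, per month: $3,000 to $10,000
```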

With Kueue: The automated platform

The platform admin's job is no longer to act as a manual gatekeeper, but to design the platform's resource management policies. They configure Kueue once to create a self-service, automated, and efficient system.

The admin's setup

The admin defines the resource management policies (Figure 2):

- cq-production: 12-GPU guarantee, prod-priority (1000).
- cq-development: 8-GPU guarantee, dev-priority (100).
- all-gpus Cohort: Both queues are added to a cohort, allowing them to borrow from each other. The reclaimWithinCohort policy is set, allowing production to preempt R&D.
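The admin setup can be sketched as Kueue resources built as Python dictionaries: two ClusterQueues in the all-gpus cohort, each with reclaimWithinCohort set so it can win back lent-out quota from lower-priority workloads, plus the two WorkloadPriorityClass objects. Field names follow the kueue.x-k8s.io/v1beta1 API and should be checked against the Kueue version your cluster runs. The CPU and memory quotas and the default-flavor ResourceFlavor name are hypothetical placeholders; only the GPU quotas and priority values come from the setup described here.

```python
import json

KUEUE_API = "kueue.x-k8s.io/v1beta1"


def cluster_queue(name: str, gpus: int, cpus: int, memory: str, cohort: str) -> dict:
    """ClusterQueue with a nominal (guaranteed) quota inside a shared cohort."""
    return {
        "apiVersion": KUEUE_API,
        "kind": "ClusterQueue",
        "metadata": {"name": name},
        "spec": {
            "namespaceSelector": {},  # empty selector: accept workloads from all namespaces
            "cohort": cohort,  # ClusterQueues in the same cohort share unused quota
            "resourceGroups": [{
                "coveredResources": ["cpu", "memory", "nvidia.com/gpu"],
                "flavors": [{
                    "name": "default-flavor",  # hypothetical ResourceFlavor name
                    "resources": [
                        {"name": "cpu", "nominalQuota": cpus},
                        {"name": "memory", "nominalQuota": memory},
                        {"name": "nvidia.com/gpu", "nominalQuota": gpus},
                    ],
                }],
            }],
            # Preempt lower-priority workloads in other queues of the cohort
            # to reclaim quota that this queue lent out.
            "preemption": {"reclaimWithinCohort": "LowerPriority"},
        },
    }


def priority_class(name: str, value: int) -> dict:
    """WorkloadPriorityClass: higher values rank ahead in admission and preemption."""
    return {"apiVersion": KUEUE_API, "kind": "WorkloadPriorityClass",
            "metadata": {"name": name}, "value": value}


# CPU and memory quotas are hypothetical placeholders.
cq_production = cluster_queue("cq-production", gpus=12, cpus=96, memory="384Gi", cohort="all-gpus")
cq_development = cluster_queue("cq-development", gpus=8, cpus=64, memory="256Gi", cohort="all-gpus")
prod_priority = priority_class("prod-priority", 1000)
dev_priority = priority_class("dev-priority", 100)

for obj in (cq_production, cq_development, prod_priority, dev_priority):
    print(json.dumps(obj, indent=2))
```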

How the scenario plays out

Here's what happens to the exact same R&D and production requests from the "before" scenario.

- 1 PM (R&D team self-service):
  - A data scientist needs their 2-GPU workspace RayCluster. They don't file a ticket. They just run their workspace_cluster.apply() script, which submits their RayCluster to the lq-rd-workspace queue.
  - Kueue's action: Kueue intercepts the request. It checks the 8-GPU quota for cq-development, sees that the 2 requested GPUs fit within it, and instantly admits the cluster.
  - Two other data scientists do the same. Kueue admits their clusters, using the remaining 6 guaranteed R&D GPUs and borrowing 4 GPUs from the idle production quota.
  - Result: All R&D clusters are running, and the admin wasn't involved. GPUs that would otherwise sit idle in the production quota are doing useful work.

- 8 PM (Production job automated):
  - A CI/CD pipeline (not an admin) automatically submits the high-priority RayJob to the lq-prod-batch queue.
  - Kueue's action:
    - Intercept: Kueue sees the high-priority (value: 1000) production job arrive.
    - Check quota: It checks cq-production and sees that part of its 12-GPU guarantee is currently being borrowed by the R&D queue.
    - Trigger preemption: Kueue's reclaimWithinCohort rule is activated. It identifies the lower-priority (value: 100) R&D clusters that are using the borrowed resources.
    - Clean eviction: Kueue cleanly preempts the R&D clusters. It doesn't just kill random pods; it suspends the RayCluster, and the system removes the associated pods. This is a safe, orderly shutdown.
    - Admit: The 12 GPUs are now free. Kueue instantly admits the high-priority production job.
  - Result: The production job runs on time, every time, with zero manual intervention.

- The next morning (automatic cleanup and recovery):
  - The nightly production job (workflow 3) finishes. Because it runs on an ephemeral cluster, the system automatically deletes that cluster as part of the RayJob lifecycle and returns its 12 GPUs to the queue.
  - Now that 12 GPUs are available, Kueue unsuspends the preempted RayClusters.
  - The data scientist arrives and doesn't need to rerun the workspace_cluster.apply() script, because their cluster is already running again.
  - Result: The admin is still not involved. The R&D team is back up and running in minutes.
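To see why the evening preemption frees exactly the borrowed GPUs, here is a deliberately simplified toy model of one cohort with two ClusterQueues. It is not Kueue's real admission or preemption algorithm, which also weighs CPU, memory, flavors, borrowing limits, and other policies; it only mimics the borrow, reclaim, and requeue behavior described in this scenario. The workload names and the 6-GPU and 4-GPU sizes of the two later R&D clusters are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Workload:
    name: str
    queue: str
    gpus: int
    priority: int
    suspended: bool = True  # workloads wait suspended until admitted


@dataclass
class Cohort:
    """Toy cohort of ClusterQueues sharing GPUs (not Kueue's real scheduler)."""
    nominal: dict  # ClusterQueue name -> guaranteed GPUs
    admitted: list = field(default_factory=list)  # kept in admission order

    def used(self, queue=None) -> int:
        return sum(w.gpus for w in self.admitted if queue is None or w.queue == queue)

    def free(self) -> int:
        return sum(self.nominal.values()) - self.used()

    def borrowing(self, queue: str) -> bool:
        return self.used(queue) > self.nominal[queue]

    def submit(self, wl: Workload) -> bool:
        """Admit wl if possible, reclaiming lent-out quota first; True if admitted."""
        within_guarantee = self.used(wl.queue) + wl.gpus <= self.nominal[wl.queue]
        # Toy reclaim rule: while wl fits its own guarantee but the cohort is full,
        # evict the newest lower-priority workload from a queue that is borrowing.
        while within_guarantee and self.free() < wl.gpus:
            victims = [w for w in self.admitted
                       if w.queue != wl.queue and self.borrowing(w.queue)
                       and w.priority < wl.priority]
            if not victims:
                break
            victim = victims[-1]
            self.admitted.remove(victim)
            victim.suspended = True  # preempted: suspended, not deleted
        if self.free() >= wl.gpus:
            self.admitted.append(wl)
            wl.suspended = False
            return True
        return False

    def finish(self, wl: Workload) -> None:
        self.admitted.remove(wl)

    def requeue(self, workloads: list) -> list:
        """Retry suspended workloads in order; return the names that were admitted."""
        return [w.name for w in workloads if w.suspended and self.submit(w)]


cohort = Cohort(nominal={"cq-production": 12, "cq-development": 8})

# 1 PM: three R&D workspaces; the last one borrows 4 GPUs from cq-production.
rd = [Workload("ds-1", "cq-development", 2, 100),
      Workload("ds-2", "cq-development", 6, 100),
      Workload("ds-3", "cq-development", 4, 100)]
for w in rd:
    cohort.submit(w)
print("1 PM R&D GPUs:", cohort.used("cq-development"))  # 1 PM R&D GPUs: 12

# 8 PM: the production job reclaims its guarantee.
prod = Workload("nightly-train", "cq-production", 12, 1000)
cohort.submit(prod)
print("8 PM suspended:", [w.name for w in rd if w.suspended])  # 8 PM suspended: ['ds-3']

# Next morning: the ephemeral job finishes and the preempted workspace returns.
cohort.finish(prod)
print("Morning readmitted:", cohort.requeue(rd))  # Morning readmitted: ['ds-3']
```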

The problem: Solved

This automated approach addresses every problem from the manual "before" scenario:

- High costs: Reduced. Ephemeral Ray clusters are deleted automatically, which eliminates idle waste, and hoarding is prevented, because a data scientist's long-running Ray cluster is cleanly preempted whenever a high-priority job needs its resources.

- Resource efficiency: Solved. The R&D team automatically borrows idle production GPUs during the day. The production job automatically reclaims them at night. The cluster is always doing useful work.

- Admin as bottleneck: Solved. The admin is no longer a human scheduler. The platform is fully self-service. The admin's job is to monitor and tweak the resource management policies, not manage individual requests.

- Productivity loss: Solved. Data scientists get their resources in seconds via the queue, not in hours via a ticket. They can focus on their work.

Conclusion: From manual gatekeeping to automated platform

The days of manually managing RayCluster YAML files, wasting money on idle GPUs, and making data scientists wait in ticket queues are over. The integration of Ray and Kueue on Red Hat OpenShift AI 3 transforms this process into a fully automated, self-service platform.

This solution provides benefits for two key roles:

- For data scientists: You get a simple, powerful set of Python-native workflows. Whether you need an interactive workspace, a fast-iteration loop, or a fire-and-forget ephemeral job, you can access the resources you need without ever writing a line of Kubernetes YAML.

- For platform administrators: You move from being a human bottleneck to a platform architect. You can now design sophisticated, fair, and efficient policies. You can automatically manage quotas, priorities, and resource sharing across the entire organization, ensuring high-priority jobs always run and cluster utilization remains high.

What's next

Explore OpenShift AI learning paths and try OpenShift AI in our no-cost Developer Sandbox.