The Model Context Protocol (MCP) server extension for Kubernetes and OpenShift enables AI assistants like Visual Studio Code (VS Code), Microsoft Copilot, and Cursor to safely and intelligently interact with your Red Hat OpenShift and Kubernetes clusters. This guide walks through how to set up the Kubernetes MCP server, configure secure access with least-privilege ServiceAccounts, and leverage its capabilities to streamline cluster inquiries and troubleshooting through natural language commands.

What is MCP?

The Model Context Protocol is an open protocol that connects large language model (LLM) applications with external data and tools. It provides LLMs with the specific context they need to perform tasks, from enhancing customer service chatbots to powering coding assistants and custom AI workflows. By using MCP, you can give LLMs a standard way to access the information and functions they need.
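To make the protocol more concrete: MCP messages are JSON-RPC 2.0 objects, and `tools/list` is the method a client uses to discover what a server offers. The snippet below is only an illustrative sketch. The helper name `mcp_request` is hypothetical, and a real session starts with an initialization handshake that your MCP host performs for you.

```shell
# Hypothetical helper: print a minimal JSON-RPC 2.0 request envelope
# of the kind MCP clients send. $1 = numeric request id, $2 = method name.
mcp_request() {
  printf '{"jsonrpc":"2.0","id":%s,"method":"%s"}\n' "$1" "$2"
}

# Ask a server which tools it exposes.
mcp_request 1 tools/list
```

In practice you never write these messages by hand; the AI assistant builds and sends them when it decides a tool is needed.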

What is the Kubernetes MCP server?

The Kubernetes MCP server allows tools like VS Code, Microsoft Copilot, and Cursor to manage your cluster via the Model Context Protocol. It lets large language models (LLMs) interact with Kubernetes and OpenShift clusters, significantly enhancing their capabilities with real-time data and actionable insights. This is achieved through several key features:

- No external dependencies: The server is a single binary with no external dependencies, such as kubectl, Helm, Node.js, or Python.

- Kubernetes and OpenShift support: Connects to both Kubernetes and OpenShift clusters.

- Generic Kubernetes resources: Enables access and CRUD operations on any Kubernetes resource, including custom resources.

- High performance: Direct API calls with no command-line tool overhead.

- Safety modes: Configurable modes to control the level of access and actions allowed (read-only, non-destructive, and so on).

- Extended Pod operations: Advanced capabilities for Pods, including event and log retrieval, command execution, and top.

- Flexible deployment: Run locally (stdio) for single admins or in-cluster (Streamable HTTP or SSE) for teams.

The MCP server defaults to full cluster control, but it offers configurable modes for restricted access, including read-only, non-destructive, or fully unprotected operations.

For OpenShift environments, this feature is currently in a developer preview phase, which is ideal for initial trials and gathering valuable feedback.

We recommend running the MCP server with a dedicated ServiceAccount bound to a read-only role (for example, cluster-reader). Optionally, start the server with the --read-only option as an extra safeguard in case RBAC isn’t already tightly scoped.

For cluster administrators, the Kubernetes MCP server offers many advantages:

- Intuitive cluster inquiry: Pose natural language questions about your cluster's state, such as "Show me all pods in CrashLoopBackOff in the last 24 hours."

- Streamlined troubleshooting: Diagnose and resolve issues with queries like "Why is my deployment not scaling?"

- Consistent and auditable access: Benefit from consistent, auditable access that seamlessly adheres to your OpenShift RBAC and organizational policies.

Using the Kubernetes MCP Server with OpenShift 4.19 and VS Code

We’ll walk through integrating an MCP host (AI assistant) with OpenShift, running the MCP server locally with secure, limited credentials. We’ll explore the cluster using natural language and troubleshoot issues through chained tool calls, such as logs, events, and exec.

Prerequisites

To get started, you’ll need the following:

- oc logged into your OpenShift 4.19 cluster (you can use oc whoami to check this)

- Node.js and npm available (run node -v and npm -v to check)

- VS Code installed
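The command-line prerequisites can be checked in one pass. This is a minimal sketch; the function name `missing_tools` is made up for illustration, and it only verifies that each command is on your PATH, not that `oc` is logged in.

```shell
# Hypothetical helper: print the name of every listed command
# that cannot be found on PATH.
missing_tools() {
  for tool in "$@"; do
    command -v "$tool" >/dev/null 2>&1 || printf '%s\n' "$tool"
  done
}

# Check the command-line tools this walkthrough relies on.
missing="$(missing_tools oc node npm)"
if [ -n "$missing" ]; then
  echo "Missing tools:" $missing
else
  echo "All command-line prerequisites found"
fi
```

If `oc` is present, `oc whoami` still needs to succeed before you continue.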

If you need Node on macOS (the Intel path is shown here), run:

brew install node@20

export PATH="/usr/local/opt/node@20/bin:$PATH"

node -v

npm -v

1. Create a read-only ServiceAccount and RBAC (OpenShift)

A ServiceAccount represents a non-human identity. Binding it to a read-only role lets tools safely query the cluster.

Create or pick a namespace for the ServiceAccount (if you already have a suitable ServiceAccount, use it and its namespace instead):

oc new-project mcp

Create the ServiceAccount:

oc create sa mcp-viewer -n mcp

Grant read-only access:

Cluster-wide read-only (most common):

oc adm policy add-cluster-role-to-user cluster-reader system:serviceaccount:mcp:mcp-viewer

This binds the ServiceAccount to the built-in cluster-reader role (which can read across the whole cluster).

If you prefer namespace-scoped only (for a tighter scope):

oc -n mcp adm policy add-role-to-user view system:serviceaccount:mcp:mcp-viewer

This limits read access to the mcp namespace.

Quick verification (optional):

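oc auth can-i --as=system:serviceaccount:mcp:mcp-viewer list pods --all-namespaces

Expect yes if you used cluster-reader. If you used view, access is limited to the mcp namespace, so this cluster-wide check returns no; replace --all-namespaces with -n mcp to confirm access there.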
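Beyond confirming that reads work, it can be reassuring to confirm that writes are denied. The following is a sketch under the assumptions of this guide (namespace `mcp`, ServiceAccount `mcp-viewer`, cluster-reader binding); the function names `sa_subject` and `check_read_only` are hypothetical, and the impersonation flag requires that your own user is allowed to impersonate ServiceAccounts.

```shell
# Hypothetical helper: build the RBAC subject name for a ServiceAccount.
# $1 = namespace, $2 = ServiceAccount name.
sa_subject() {
  printf 'system:serviceaccount:%s:%s\n' "$1" "$2"
}

# Hypothetical helper: print yes/no for a few read and write verbs on pods.
# Needs a logged-in oc session; nothing runs until you call it.
check_read_only() {
  subject="$(sa_subject "$1" "$2")"
  for verb in get list watch create delete patch; do
    printf '%s pods: ' "$verb"
    # can-i exits non-zero on "no", so do not treat that as a failure.
    oc auth can-i --as="$subject" "$verb" pods --all-namespaces || true
  done
}

# Usage (requires cluster access):
#   check_read_only mcp mcp-viewer
```

With a read-only binding you would expect yes for get, list, and watch, and no for create, delete, and patch.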

2. Mint a ServiceAccount token

Tools authenticate via a bearer token. With Red Hat OpenShift 4.19+, we prefer using a short-lived, bound token. If that is not available, use the secret-based fallback.

Preferred path: TokenRequest API

Create a time-bound token (choose a duration to test, for example, 2 hours):

TOKEN="$(oc -n mcp create token mcp-viewer --duration=2h)"

3. Build a dedicated kubeconfig that uses the token

A separate kubeconfig isolates this ServiceAccount’s credentials from your admin kubeconfig and is easy to point tools at.

Get your cluster API URL (if you don’t already have it):

API="$(oc whoami --show-server)"

oc login --server="$API" --token="$TOKEN" --kubeconfig="$HOME/.kube/mcp-viewer.kubeconfig"

chmod 600 "$HOME/.kube/mcp-viewer.kubeconfig"
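Because this kubeconfig embeds a short-lived token, it helps to know when the token expires. Bound ServiceAccount tokens are JWTs, so the expiry can be read from the `exp` claim in the payload. The sketch below assumes the `TOKEN` variable from the previous step and a `base64` that accepts `--decode`; the function names are hypothetical, and the token signature is not verified here.

```shell
# Hypothetical helper: decode the payload (second dot-separated part) of a JWT.
jwt_payload() {
  # Convert base64url to standard base64.
  payload="$(printf '%s' "$1" | cut -d. -f2 | tr '_-' '/+')"
  # Restore the padding that JWTs strip.
  while [ $(( ${#payload} % 4 )) -ne 0 ]; do
    payload="${payload}="
  done
  printf '%s' "$payload" | base64 --decode
}

# Hypothetical helper: print the "exp" claim (seconds since the epoch).
jwt_exp() {
  jwt_payload "$1" | sed -n 's/.*"exp":[[:space:]]*\([0-9][0-9]*\).*/\1/p'
}

# Usage (after minting TOKEN):
#   echo "Token expires in $(( $(jwt_exp "$TOKEN") - $(date +%s) )) seconds"
```

When the token expires, mint a new one and repeat the `oc login` step to refresh the kubeconfig.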

Quick sanity checks

oc --kubeconfig="$HOME/.kube/mcp-viewer.kubeconfig" whoami

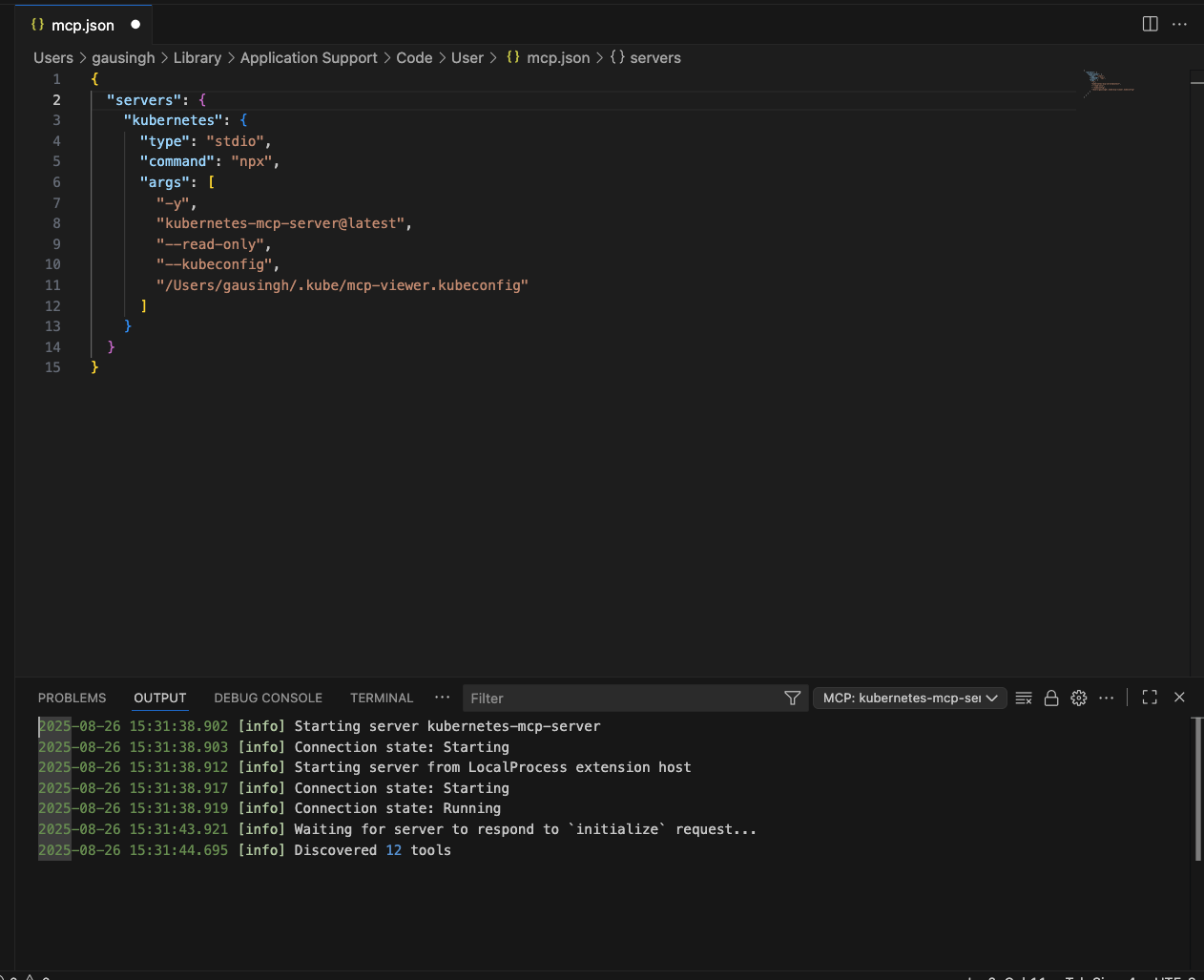

oc auth can-i --as=system:serviceaccount:mcp:mcp-viewer list pods --all-namespaces

4. Add the MCP server to VS Code (local/stdio)

- Open the Command Palette (Cmd+Shift+P).

- Go to MCP: Open User Configuration or use a repo-local config: MCP: Open Workspace Folder Configuration to create the .vscode/mcp.json file.

- Copy and paste this JSON (update the kubeconfig path if needed):

{
  "servers": {
    "kubernetes": {
      "type": "stdio",
      "command": "npx",
      "args": [
        "-y",
        "kubernetes-mcp-server@latest",
        "--read-only",
        "--kubeconfig",
        "$HOME/.kube/mcp-viewer.kubeconfig"
      ]
    }
  }
}

- Save. If it doesn’t auto-start: Open the Command Palette and go to MCP: Show Installed Servers → kubernetes. Click the gear symbol and select Restart. See Figure 1.
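If the server fails to find the kubeconfig, one possible cause is that `$HOME` inside the JSON is passed to the process literally rather than expanded. This is an assumption about how the command is launched, not documented behavior. A cautious workaround is to generate the file from the shell so the path is already absolute. The function name `render_mcp_config` is hypothetical, and the sketch does not escape quotes or backslashes in the path.

```shell
# Hypothetical helper: print an mcp.json body with the kubeconfig path
# filled in. $1 = absolute path to the kubeconfig.
render_mcp_config() {
  cat <<EOF
{
  "servers": {
    "kubernetes": {
      "type": "stdio",
      "command": "npx",
      "args": [
        "-y",
        "kubernetes-mcp-server@latest",
        "--read-only",
        "--kubeconfig",
        "$1"
      ]
    }
  }
}
EOF
}

# Usage (writes a workspace-level config; the shell expands $HOME here):
#   mkdir -p .vscode
#   render_mcp_config "$HOME/.kube/mcp-viewer.kubeconfig" > .vscode/mcp.json
```

After writing the file, restart the server from MCP: Show Installed Servers so the new path takes effect.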

5. Use it in the VS Code chat

- Open Chat using agent mode. If this is your first time using VS Code, sign in to your GitHub account to make the agent option available.

- In the chat header, open Tools and toggle kubernetes ON.

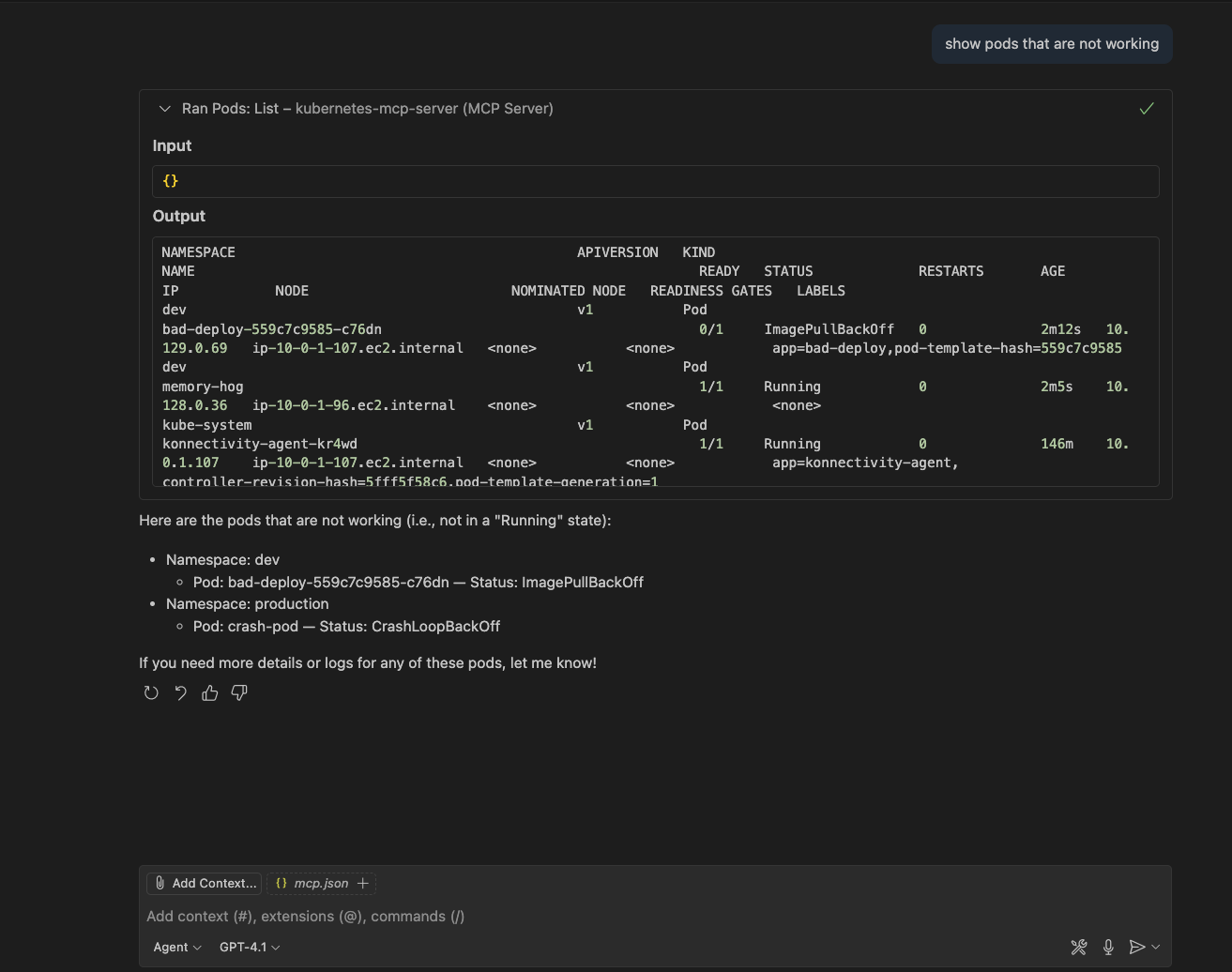

Enter the following prompt:

show pods that are not working

The output is shown in Figure 3.
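To cross-check the assistant's answer, you can run a rough equivalent from the terminal with the same read-only kubeconfig. This sketch treats any pod whose STATUS column is neither Running nor Completed as "not working", which is an assumption and only an approximation of what the assistant might consider unhealthy. The function name `unhealthy_pods` is hypothetical.

```shell
# Hypothetical helper: read `oc get pods -A --no-headers` output on stdin
# (columns: NAMESPACE NAME READY STATUS ...) and print pods whose status
# is neither Running nor Completed.
unhealthy_pods() {
  awk '$4 != "Running" && $4 != "Completed" { print $1 "/" $2 ": " $4 }'
}

# Usage (requires cluster access):
#   oc --kubeconfig="$HOME/.kube/mcp-viewer.kubeconfig" get pods -A --no-headers | unhealthy_pods
```

Note that a pod can report Running while its containers are not ready, so an empty result here does not guarantee a healthy cluster.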

6. Try these additional prompts

Try experimenting with these prompts or your own:

- show node

- show pods that are not working

- list namespaces

- get customresourcedefinitions

- get the events of a pod in the default namespace

- describe pod <name> in namespace <ns>

- get deployments in namespace mcp

- show nodes

- help me diagnose the pod <my-app-123>

7. Clean up resources

To clean up the resources used, run the following commands to remove the ServiceAccount and the associated kubeconfig file. The optional commented lines will completely remove the mcp project and delete the MCP configuration files from VS Code.

oc adm policy remove-cluster-role-from-user cluster-reader system:serviceaccount:mcp:mcp-viewer || true

oc -n mcp delete sa mcp-viewer --ignore-not-found || true

rm -f "$HOME/.kube/mcp-viewer.kubeconfig"

# optional:

# oc delete project mcp

# rm -f ~/Library/Application\ Support/Code/User/mcp.json

# rm -f .vscode/mcp.json

Experience the Kubernetes MCP server for yourself

The Kubernetes MCP server gives you an AI assistant with safe, RBAC-respecting access to your OpenShift cluster. By configuring it with a least-privilege ServiceAccount and using oc create token, you keep your credentials clean and auditable while enabling powerful workflows inside VS Code.

Head over to the Kubernetes MCP server GitHub project to give this developer preview a try! We’re keen to hear your feedback: reach us through your Red Hat contacts, message us on the OpenShift Commons Slack, or create an issue on GitHub.