In this article, we will look at confidential virtual machines (CVMs) and the key role they play in confidential containers (CoCo). Confidential computing is a set of security technologies that protect the confidentiality of workloads. This means that the running workload and, more importantly, its data in transit, at rest, and in use are isolated from the environment where the workload runs.

CVMs and confidential containers are often presented as separate projects targeting different use cases, but we will show that the two technologies work better when integrated. Another goal of this article is to show how Red Hat Enterprise Linux (RHEL) CVMs support Red Hat OpenShift confidential containers: what they bring, and which customizations are added to the guest operating system (OS) to meet the highest security standards for protecting data in use.

Confidential VMs

A confidential VM (CVM) is a VM that uses hardware and software capabilities to provide data confidentiality guarantees. Asking about the differences between a VM and a CVM is a bit misleading, because a CVM is an extension of a VM.

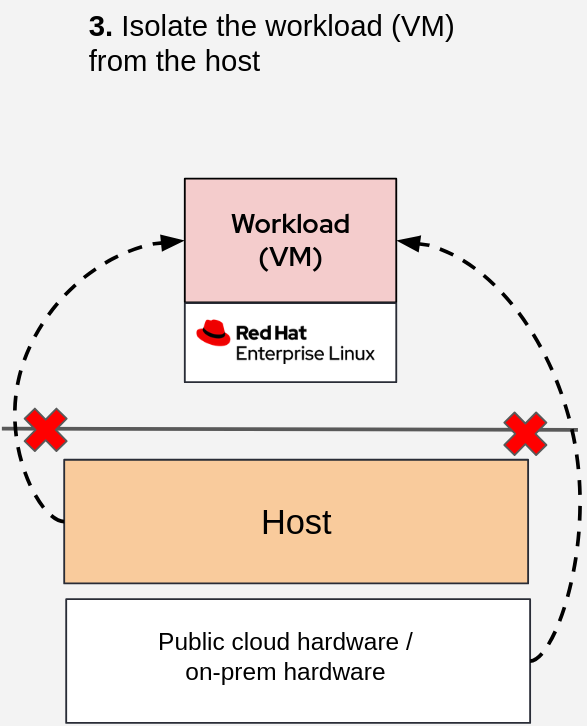

A CVM extends the traditional VM concept to also protect the workload from the host/platform. The trust model is inverted: the workload is trusted, the host is not. Because a CVM is an extension of a VM, it keeps the isolation benefits of a regular VM and adds isolation of the workload from the host.

Figure 1 illustrates this additional protection.

To summarize, a CVM provides the following isolation benefits. It prevents:

- The workload from accessing other workloads running on the same host.

- The workload from obtaining control of the host where it is running.

- The host/platform from accessing the workload running inside the CVM.

We will focus on the following benefits RHEL CVMs provide:

- Support for confidential hardware: The RHEL kernel supports Trusted Execution Environment (TEE) hardware, which encrypts the VM memory to ensure the confidentiality of data in use.

- Full boot chain protection: RHEL supports Unified Kernel Images (UKIs), meaning that the kernel, initramfs, and kernel command line are shipped in a single, signed binary, which enables Secure Boot to cover all three. In other words, using a UKI with Secure Boot, we can restrict the system to boot only a kernel, initramfs, and kernel command line signed by an entity we trust.

- Remote attestation capabilities: Support for the trustee-guest-components package, which performs attestation and secure retrieval of keys and secrets.

OpenShift confidential containers

As the name suggests, CoCo applies the same confidentiality idea to containers and brings it to Red Hat OpenShift, allowing the user to run container workloads in a confidential environment.

But how does it work internally? The answer is simple: a confidential container is a container running inside a CVM. The architectural difference between CoCo and general-purpose CVMs is that in CoCo the CVM is placed transparently between the container and the platform, while with general-purpose CVMs the user configures the CVM directly.

From the user’s perspective, a confidential container is no different from any other container: it is managed and orchestrated by OpenShift, and deployed and deallocated just like any other container.

OpenShift confidential containers provides two deployment modes:

- Kata local-hypervisor: Deploy OpenShift confidential containers on bare metal.

- Kata remote-hypervisor (peer pods): Deploy OpenShift confidential containers on public clouds.

We will focus on the peer pods solution and on the features that the RHEL guest OS (running inside the CVM) brings.

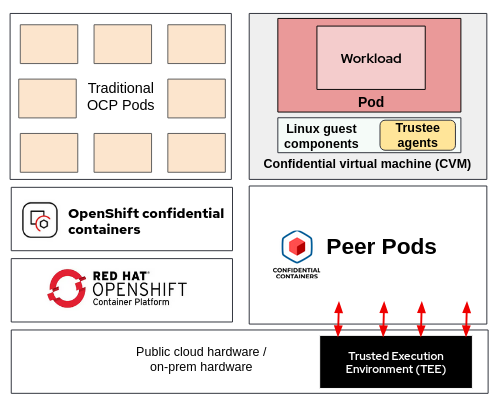

With peer pods, the CVM runs at the same level as the OpenShift worker node VM, making it completely independent of the worker node, as shown in the diagram in Figure 2.

The confidentiality of the container workload is provided by the CVM, which for OpenShift is a dedicated Red Hat build of a CVM with some key OS-level features. Shipped with OpenShift sandboxed containers, the following features are available for peer-pods-based deployments:

- Kernel support for TEE (same as general-purpose CVMs).

- Unified Kernel Image support (same as general-purpose CVMs).

- Linux guest components: These components allow OpenShift to communicate with the container workload, making the CVM layer completely transparent to the user.

- Attestation (Trustee) agents: Similar to the trustee-guest-components package, but preinstalled in the RHEL guest OS.

- Root disk integrity verification.

- Encrypted container storage.

There are other high-level features available in CoCo, such as sealed secret support, pod bootstrap configuration, and attestation metrics. These are CoCo-specific features that are not related to its CVM side, so we do not cover them here.

Next, let’s go deeper into some of these features.

UKI support

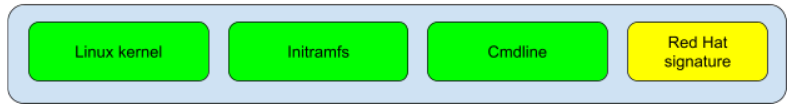

UKIs have been generally available since RHEL 9.6 and 10.0. But what are they, and what exactly do they bring?

The main advantage of UKIs is that they tie the kernel together with the initramfs and kernel command line in a single, versioned binary. This is a considerable security benefit, for several reasons:

- In the past, the initramfs was not shipped together with the kernel and could easily be regenerated and replaced. Because it was not covered by Secure Boot, an attacker could inject malicious code through the initramfs.

- The same applies to the kernel command line: an attacker could add parameters that disable security mechanisms in the kernel.

- A UKI can easily be measured by systemd components, which is very useful for attestation (Figure 3).
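To make the measurement idea concrete, here is a minimal Python sketch of a TPM-style extend operation, where each measured component is folded into a register as `new = SHA-256(old || SHA-256(data))`. It is a simplified model under our own assumptions: `.linux`, `.initrd`, and `.cmdline` are the UKI section names, but the contents below are placeholders, and real measurements (for example, systemd-stub measuring UKI sections into TPM PCR 11) also record section names and event log entries that we omit. The point is only that changing any single component, here the command line, changes the final value the attester sees.

```python
import hashlib

# UKI section names, measured in a fixed order; the byte contents below are placeholders.
SECTION_ORDER = (".linux", ".initrd", ".cmdline")


def extend(register: bytes, data: bytes) -> bytes:
    """TPM-style extend: new_value = SHA-256(old_value || SHA-256(data))."""
    return hashlib.sha256(register + hashlib.sha256(data).digest()).digest()


def measure_uki(sections: dict[str, bytes]) -> bytes:
    """Fold every UKI section into one register, in a fixed order, starting from zeros."""
    register = bytes(32)
    for name in SECTION_ORDER:
        register = extend(register, sections[name])
    return register


expected = {
    ".linux": b"<kernel image>",
    ".initrd": b"<initramfs>",
    ".cmdline": b"root=/dev/vda2 ro",
}
# Same kernel and initramfs, but an attacker appended a parameter that disables SELinux.
tampered = {**expected, ".cmdline": b"root=/dev/vda2 ro selinux=0"}

print(measure_uki(expected) == measure_uki(tampered))  # prints: False
```

Because the extend operation is order-dependent and one-way, an attacker cannot choose a new command line and then "fix up" the register to match the expected value.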

Red Hat builds and signs its own UKI and ships it via the kernel-uki-virt package.

However, CoCo does not yet support Secure Boot. This does not lower the security level, because attestation does the same job.

Attestation uses the UKI measurements produced by the RHEL systemd components to do work very similar to what Secure Boot (SB) does, but remotely and in a more customizable way. Through attestation we can check:

- Whether the booting UKI is exactly the binary we expect (as opposed to SB, which checks the signature).

- The actual kernel command line, since it could also have been extended (not covered by SB).

By attesting these parameters, CoCo can perform a verification similar to what SB does. It is even safer, because these checks are done by the remote attester, which runs in a secure environment.

Root disk integrity verification

Another often-overlooked aspect of confidential environments is storage. As we said before, confidentiality means that the host or platform is not trusted. But if the platform is not trusted, how can we (the workload owners) upload a root disk image for our CVM without letting the platform access or modify it?

While attestation does check that the UKI, the CPU, and some other components have not been tampered with, it cannot check the whole root filesystem. An attacker could simply add a script or program to the OS, and it would go unnoticed by attestation. In this scenario we can already assume that memory is encrypted, so the host cannot read whatever lies in memory, including passwords loaded for imminent use.

As a reminder, a virtual machine can be deployed in two ways: from an OS installation image (e.g., an .iso) that gets installed at deployment time, or from a pre-uploaded disk containing an OS that is already installed and ready to boot. The first option is not feasible in a confidential environment, because:

- The host/platform could modify the image or customize the installation before or during deployment.

- The host/platform could pretend to have installed the provided image but actually run something else.

The second option, uploading a disk, is more interesting, because one could upload an encrypted disk and, at deployment time, provide the password to decrypt it without the host/platform having access to it. But how do we provide the password to the encrypted disk without the host potentially seeing it? While the password cannot be read once it is inside the system (memory is encrypted), the service used to connect to the virtual machine is often provided by the host. This means that the user still has to trust the host when providing the password. In addition, there are two more problems:

- The root disk is unlocked very early in the boot process, when SSH is not yet running, which makes it even harder to provide the password without relying on the host.

- If the password somehow leaks, the host gains access to the disk used by all CVM deployments, since the same disk is used every time.

To solve this problem, two solutions have been proposed, depending on the use case (CoCo or general-purpose CVMs): root disk integrity verification for confidential containers, and a self-encrypting root disk for general-purpose CVMs.

The CoCo solution

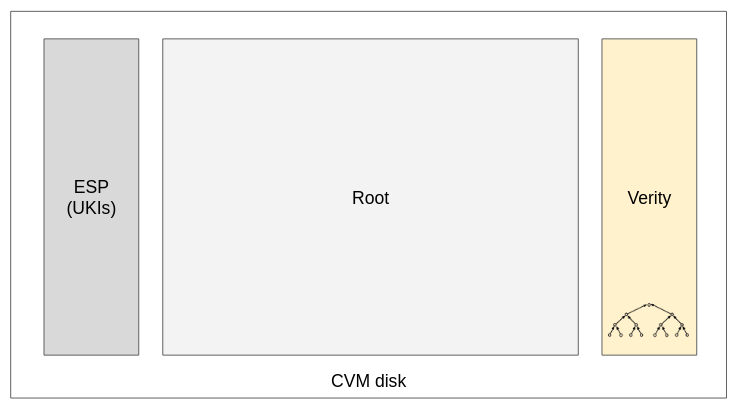

Root disk integrity verification is unique to CoCo because, unlike general-purpose CVMs, the CoCo CVM layer does not need to store any data persistently. It does not even need to support rebooting, because a CoCo CVM is deployed together with the container workload and follows the same lifecycle as the workload.

Whatever the container needs to store permanently does not stay on the CVM root disk: it is either stored in external storage, such as an S3 bucket, or lost once the container terminates.

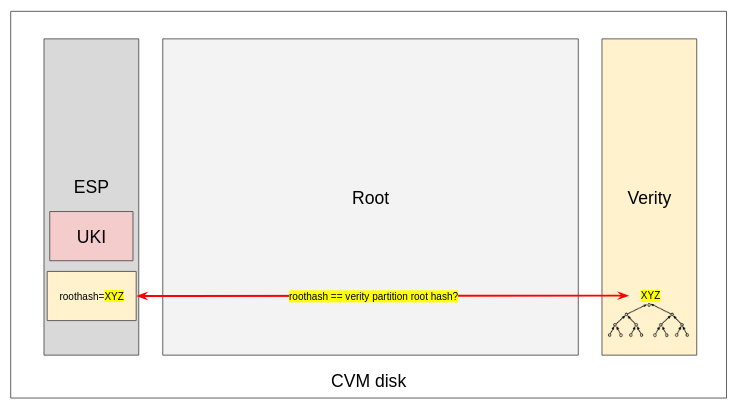

Therefore, the CoCo CVM root disk is protected by dm-verity, a kernel-enforced feature that checks that the disk has not been tampered with (Figure 4).

Looking at Figure 4, we see that a new verity partition is added to the disk.

dm-verity works like this: the verity partition contains a hash tree built over all the blocks of the disk. Each block is hashed, and the block hashes are in turn hashed together, level by level, up to a single root hash. If an attacker injects or modifies a file on the disk, the affected block produces a hash that no longer matches the one stored in the hash tree, so the kernel detects the modification and the boot fails, making the image unusable.

In slightly more detail, dm-verity relies on two elements:

- The kernel command line, which contains the expected root hash of the hash tree stored in the verity partition.

- The verity partition containing the hash tree.

The kernel command line is provided by whoever provides the disk, and it carries the root hash that the hash tree in the verity partition is expected to produce. The verity partition is shipped with the disk, so in theory it can be modified, but any modification propagates up the tree and results in a root hash that differs from the expected one.

In addition, on every disk read, the kernel computes the hash of the block being read and compares it with the corresponding hash in the verity partition, whose path up to the root hash is itself verified. This way, neither the data block nor its stored hash can be silently modified (Figure 5).
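The hash tree and the read-time check can be sketched in a few lines of Python. This is a simplified model, not the kernel implementation: it builds a binary tree with no salt, whereas real dm-verity uses a salt and packs many hashes into each 4 KiB hash block. `build_tree` and `verify_read` are hypothetical names; `verify_read` hashes the block being read and walks the stored, untrusted tree up to the trusted root hash that would come from the kernel command line.

```python
import hashlib

BLOCK_SIZE = 4096  # 4 KiB data blocks, a common dm-verity block size


def h(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()


def build_tree(blocks: list[bytes]) -> list[list[bytes]]:
    """Hash every data block, then hash pairs level by level up to one root hash.

    Returns all levels: levels[0] holds the block hashes, levels[-1] == [root_hash].
    """
    level = [h(block) for block in blocks]
    levels = [level]
    while len(level) > 1:
        if len(level) % 2:  # odd level: pair the last hash with itself
            level = level + [level[-1]]
        level = [h(level[i] + level[i + 1]) for i in range(0, len(level), 2)]
        levels.append(level)
    return levels


def verify_read(block: bytes, index: int, stored_levels: list[list[bytes]], root_hash: bytes) -> bytes:
    """Check one block on read by recomputing its path through the stored tree to the root."""
    node, i = h(block), index
    for level in stored_levels[:-1]:
        sibling_index = i ^ 1
        sibling = level[sibling_index] if sibling_index < len(level) else level[i]
        node = h(node + sibling) if i % 2 == 0 else h(sibling + node)
        i //= 2
    if node != root_hash:
        raise OSError(f"verification failed for block {index}")  # dm-verity reports an I/O error
    return block


disk = [bytes([n]) * BLOCK_SIZE for n in range(5)]    # five toy data blocks
verity_partition = build_tree(disk)                     # shipped alongside the disk
root_hash = verity_partition[-1][0]                     # what the kernel command line would carry

verify_read(disk[3], 3, verity_partition, root_hash)    # untampered block: passes
tampered = b"evil" + disk[3][4:]
try:
    verify_read(tampered, 3, verity_partition, root_hash)
except OSError as err:
    print(err)  # prints: verification failed for block 3
```

Rebuilding the whole verity partition from a tampered disk does not help an attacker either: the new tree has a different root hash, which no longer matches the one on the (attested) kernel command line.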

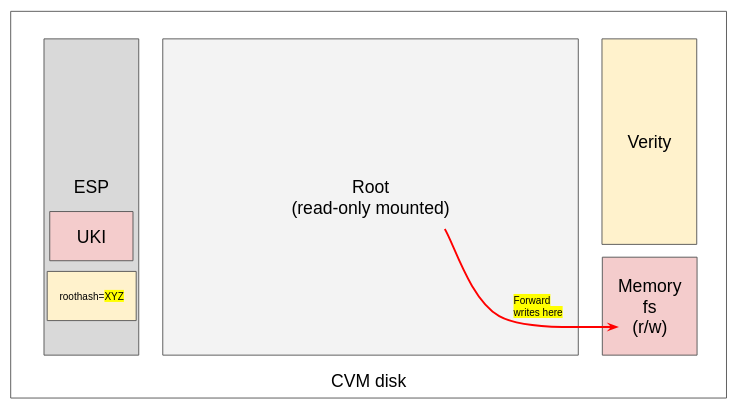

The only drawback of this approach is that dm-verity forces the disk to be read-only, preventing any writes so that the hash tree stays valid. Because containers do not need to store anything on the CVM root disk, which is destroyed when the container lifecycle ends, it is fine to keep the disk ephemeral. To give the illusion of a writable disk and allow all RHEL services (custom or not) to boot correctly, a CoCo CVM overlays the read-only root disk with an ephemeral in-memory filesystem, as illustrated in Figure 6.
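The overlay behavior can be modeled as a copy-on-write lookup: reads fall through to the read-only lower layer unless the in-memory upper layer holds a newer version, and writes and deletions only touch the upper layer. The sketch below is a toy with hypothetical names (`OverlayRoot`, `WHITEOUT`); on Linux this is typically done with overlayfs and a tmpfs upper directory, but we are not claiming that is exactly how the CoCo image sets it up.

```python
from types import MappingProxyType

WHITEOUT = object()  # marker for files deleted through the overlay


class OverlayRoot:
    """Toy overlay: a read-only lower layer (the dm-verity root disk) under an in-memory upper layer."""

    def __init__(self, lower: dict[str, bytes]):
        self.lower = MappingProxyType(dict(lower))  # read-only view: item assignment raises TypeError
        self.upper: dict[str, object] = {}          # ephemeral, lost when the CVM is destroyed

    def read(self, path: str) -> bytes:
        if path in self.upper:
            value = self.upper[path]
            if value is WHITEOUT:
                raise FileNotFoundError(path)
            return value
        if path in self.lower:
            return self.lower[path]
        raise FileNotFoundError(path)

    def write(self, path: str, data: bytes) -> None:
        self.upper[path] = data  # copy-on-write: the lower layer is never touched

    def delete(self, path: str) -> None:
        self.read(path)  # raises FileNotFoundError if the file is not visible
        self.upper[path] = WHITEOUT


root = OverlayRoot({"/etc/hostname": b"coco-cvm\n"})
root.write("/etc/hostname", b"my-pod\n")
print(root.read("/etc/hostname"))   # prints: b'my-pod\n'
print(root.lower["/etc/hostname"])  # prints: b'coco-cvm\n'
```

Because every change lands in the upper layer, the blocks that dm-verity checks are never written, and the hash tree stays valid for the whole lifetime of the CVM.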

How do we make sure this mechanism is in place, and that the hash provided in the command line is actually the correct one? This is where remote attestation comes in. Because dm-verity is enabled and configured through the kernel command line, and the command line is part of the attestation measurements, we can simply configure the remote attester to check that the expected dm-verity command line is present. If the attester receives measurements confirming that the command line is there, and the CoCo CVM boots correctly, then we can be sure that the disk has not been tampered with.
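On the attester side, this check boils down to comparing attested values against reference values. The following is a hypothetical Python policy function, not Trustee's actual policy format. It assumes that the evidence has already been cryptographically verified and parsed into a UKI digest and a kernel command line, and that the dm-verity root hash is passed as `roothash=`, the parameter read by systemd-veritysetup-generator. The reference values are placeholders.

```python
import shlex

# Hypothetical reference values registered with the remote attester (placeholders).
REFERENCE_VALUES = {
    "uki_sha256": "ab" * 32,  # digest of the exact UKI binary we expect
    "roothash": "cd" * 32,    # dm-verity root hash of the expected root disk
}


def parse_cmdline(cmdline: str) -> dict[str, str]:
    """Split a kernel command line into key/value pairs (bare flags map to an empty string)."""
    params: dict[str, str] = {}
    for token in shlex.split(cmdline):
        key, _, value = token.partition("=")
        params[key] = value  # this sketch keeps the last occurrence of a repeated key
    return params


def appraise(evidence: dict[str, str], reference: dict[str, str]) -> bool:
    """Accept only the expected UKI, booted with the expected dm-verity root hash."""
    if evidence.get("uki_sha256") != reference["uki_sha256"]:
        return False
    params = parse_cmdline(evidence.get("cmdline", ""))
    return params.get("roothash") == reference["roothash"]


good = {"uki_sha256": "ab" * 32, "cmdline": "console=ttyS0 roothash=" + "cd" * 32}
bad = {**good, "cmdline": "console=ttyS0 roothash=" + "00" * 32}
print(appraise(good, REFERENCE_VALUES), appraise(bad, REFERENCE_VALUES))  # prints: True False
```

A stricter policy could require the whole command line to match exactly, since, as noted earlier, extra parameters can disable kernel security mechanisms.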

What about container images? A line of reasoning similar to the one for root disks applies to container images.

Currently (1.10.1), CoCo can only verify container image signatures, but support for encrypted images is coming in future releases.

What about local container storage? What if the container downloads an AI model much larger than the memory available in the CVM? The next section explains how encrypted container storage solves this.

Encrypted container storage

As previously mentioned, a container workload might need to download large amounts of data, such as an AI model. Such data does not necessarily need to be stored persistently, but it has to be kept in storage that is both large and secure.

A simple solution used in previous CoCo releases was to rely on the in-memory filesystem overlaid on the CoCo CVM root disk, which stores everything in encrypted memory. However, this has major drawbacks:

- It reduces the memory available for computation.

- When downloading large amounts of data, it forces the user to pick VM instance sizes with a lot of memory, which on cloud providers usually also means a lot of unneeded compute capacity and, of course, a much higher cost.

A much more robust solution arrives with CoCo 1.10.1, which adds encrypted container storage. In practice, this means that at configuration or deployment time, the user can choose the size of the instance disk.

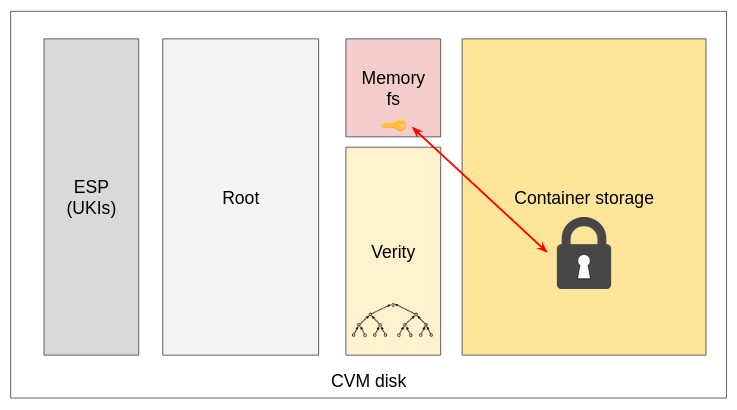

When deployed, the CoCo CVM gets a disk of the user-specified size (say, 100 GB). This space is split into the following partitions:

- (500 MB) EFI system partition (ESP), containing the UKI and the dm-verity command line: unprotected, but attested, so the remote attester will detect tampering.

- (5 GB) Root disk: protected by dm-verity, so the kernel prevents booting if tampering occurs.

- (500 MB) Verity partition: unprotected, but checked at boot against the root hash in the command line, so the kernel detects whether it has been compromised.

- The remaining space is left unallocated.
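With the example sizes above, the space left for encrypted container storage is simple arithmetic. The sketch assumes decimal units (1 GB = 1000 MB) and ignores the small overhead of the partition table and of the encryption metadata, so real numbers will differ slightly. `leftover_mb` is a hypothetical helper.

```python
# Example layout from the text, in MB (decimal units assumed: 1 GB = 1000 MB).
DISK_MB = 100 * 1000
FIXED_PARTITIONS_MB = {
    "ESP (UKI + dm-verity command line)": 500,
    "root disk (dm-verity protected)": 5 * 1000,
    "verity partition (hash tree)": 500,
}


def leftover_mb(disk_mb: int, fixed: dict[str, int]) -> int:
    """Space left after the fixed partitions, which becomes the encrypted container storage."""
    used = sum(fixed.values())
    if used > disk_mb:
        raise ValueError(f"disk too small: need {used} MB, have {disk_mb} MB")
    return disk_mb - used


print(f"{leftover_mb(DISK_MB, FIXED_PARTITIONS_MB)} MB left for encrypted container storage")
# prints: 94000 MB left for encrypted container storage
```

In other words, with a 100 GB disk about 94% of the space ends up available to the container, and the fixed overhead stays the same as the disk grows.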

This remaining storage is then used at boot by a custom CoCo component that takes care of:

- Creating a new partition using all leftover space.

- Generating a random key and storing it in memory.

- Formatting the partition as encrypted storage using the random key.

- Mounting the newly created partition so that the container runs on it: anything stored in the container root filesystem, or in emptyDir volumes that are not explicitly memory-backed, ends up in the encrypted storage.

Note that the key is randomly generated at boot and kept only in memory. Once the confidential container terminates, the CVM is destroyed; even if an attacker manages to obtain the disk, the container storage is encrypted with a random key that was lost when the CVM terminated, making data retrieval impossible (Figure 7).
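Why a random, memory-only key makes leftover data useless can be shown with a toy model. The sketch draws a 256-bit key from Python's `secrets` module and encrypts a block by XOR with an HMAC-SHA256 counter-mode keystream. It is purely illustrative and not a secure disk encryption scheme (there is no integrity protection, and rewriting a block would reuse its keystream); a real implementation would rely on kernel disk encryption such as dm-crypt, and none of the names here come from CoCo.

```python
import hashlib
import hmac
import secrets

BLOCK_SIZE = 4096


def keystream(key: bytes, block_index: int, length: int) -> bytes:
    """Derive `length` pseudo-random bytes for one block (HMAC-SHA256 in counter mode)."""
    out = bytearray()
    counter = 0
    while len(out) < length:
        message = block_index.to_bytes(8, "little") + counter.to_bytes(8, "little")
        out += hmac.new(key, message, hashlib.sha256).digest()
        counter += 1
    return bytes(out[:length])


def xor_block(key: bytes, block_index: int, data: bytes) -> bytes:
    """Toy cipher: XOR with the keystream, so the same call encrypts and decrypts."""
    stream = keystream(key, block_index, len(data))
    return bytes(a ^ b for a, b in zip(data, stream))


# At boot: a fresh 256-bit key that only ever lives in the CVM's encrypted memory.
boot_key = secrets.token_bytes(32)
plaintext = b"model weights".ljust(BLOCK_SIZE, b"\0")
on_disk = xor_block(boot_key, 0, plaintext)  # what an attacker could copy off the disk

print(xor_block(boot_key, 0, on_disk) == plaintext)  # prints: True (key still in memory)

# After the CVM is destroyed the key is gone; any other key just yields unrelated bytes.
wrong_key = secrets.token_bytes(32)
print(xor_block(wrong_key, 0, on_disk) == plaintext)  # False, barring an astronomically unlikely collision
```

Since nothing ever writes the key to disk, the only copy disappears with the CVM memory, and brute-forcing a 256-bit key is not practical.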

How can we be sure the storage encryption mechanism has not been tampered with? How do we know the key is really random, and not replaced with “12345” by an attacker? The scripts that set up the storage are part of the root disk, which is integrity-verified by the kernel, which in turn is part of the UKI and therefore attested. We also know that this verification is enabled and configured correctly because the kernel command line is attested too. This is an example of how all the pieces fit together.

Final thoughts

In this article, we have discussed the importance of CVMs, the key role they play in projects like confidential containers, and the mechanisms they use to guarantee confidentiality. We also showed the differences and similarities between CoCo and general-purpose CVMs, and the new features introduced in the latest CoCo and RHEL releases.

In the future, confidential containers will probably switch to RHEL image mode, as it fits the container use case well. Image mode does not yet support some of the confidential computing features introduced here, but there are plans to catch up. At that point the implementation details will likely change, but the underlying concepts will remain the same and provide the same confidentiality guarantees.