According to the Britannica Dictionary, ethics is an area of study that deals with ideas about what is good and bad behavior. It is a branch of philosophy concerned with what is morally right or wrong, and its principles are applied to various fields of knowledge, such as engineering. Ethics rests on pillars such as integrity, responsibility, objectivity, fairness, confidentiality, honesty, and veracity.

As intelligent systems exert increasing influence over critical decisions across many domains, transparency and fairness become paramount. Applying ethical principles to artificial intelligence (AI) is therefore essential: it helps protect against discrimination and makes decisions explainable, promoting a more inclusive, reliable, and socially responsible ecosystem.

AI ethics encompasses the principles and guidelines that govern the responsible development, deployment, and use of artificial intelligence applications. It involves ensuring fairness and mitigating bias in machine learning algorithms, promoting transparency and explainability in decision-making processes, safeguarding privacy rights, establishing accountability for results and predictions, ensuring security, and maximizing the beneficial impact of AI on society while minimizing potential harm.

Implementing these principles poses several challenges, such as algorithmic bias (language bias, gender bias, stereotyping, etc.), open source, transparency, accountability, and regulation.

Responsible AI

Responsible AI refers to the practice of designing, developing, deploying, and using artificial intelligence systems in alignment with ethical principles, social values, and legal standards. It involves developing and implementing AI technologies so that they maximize their benefits while minimizing risks and negative impacts on individuals, communities, and society as a whole.

The implementation of Responsible AI is concerned with aspects such as fairness, transparency, accountability, privacy, and security.
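Fairness, the first of these aspects, can be made measurable. One common group-fairness metric is statistical parity difference (SPD): the rate of favorable outcomes for an unprivileged group minus the rate for a privileged group, where a value near 0 suggests both groups are treated similarly. The minimal sketch below computes it in plain Python; the predictions and group labels are hypothetical, and the function is an illustration, not part of any library mentioned in this article.

```python
def favorable_rate(outcomes, favorable=1):
    """Share of outcomes equal to the favorable value."""
    return sum(1 for o in outcomes if o == favorable) / len(outcomes)


def statistical_parity_difference(predictions, groups, privileged, favorable=1):
    """P(y_hat = favorable | unprivileged) - P(y_hat = favorable | privileged)."""
    priv = [p for p, g in zip(predictions, groups) if g == privileged]
    unpriv = [p for p, g in zip(predictions, groups) if g != privileged]
    if not priv or not unpriv:
        raise ValueError("both groups need at least one prediction")
    return favorable_rate(unpriv, favorable) - favorable_rate(priv, favorable)


# Hypothetical loan decisions (1 = approved) and applicant groups, for illustration only.
preds = [1, 1, 0, 1, 0, 0, 1, 0]
gender = ["M", "M", "M", "M", "F", "F", "F", "F"]
spd = statistical_parity_difference(preds, gender, privileged="M")
print(spd)  # -0.5: group F is approved 25% of the time versus 75% for group M
```

A negative value means the unprivileged group receives the favorable outcome less often; what threshold counts as acceptable is a policy decision, not something the metric decides.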

Explainable AI

Explainable AI refers to the ability of artificial intelligence systems to explain their decisions to humans in a clear, interpretable way. Its goal is to increase the transparency, accountability, and trustworthiness of AI technologies, especially in critical domains where decisions affect people's lives, such as healthcare, finance, and law enforcement.

Explainable AI techniques vary depending on the type of AI model and application. They include feature importance analysis, implemented by algorithms such as Deep Learning Important FeaTures (DeepLIFT), and model-agnostic approaches such as Local Interpretable Model-Agnostic Explanations (LIME) and SHapley Additive exPlanations (SHAP), which explain individual predictions by attributing them to the input features.
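As an illustration of feature importance analysis, the sketch below implements permutation feature importance, a simple model-agnostic technique (not DeepLIFT, which works on the internals of neural networks): shuffle one feature column at a time and measure how much accuracy drops. The function name, the toy model, and the random data are hypothetical; only NumPy is assumed.

```python
import numpy as np


def permutation_importance(predict, X, y, n_repeats=10, seed=0):
    """Mean drop in accuracy when each feature column is shuffled independently."""
    rng = np.random.default_rng(seed)
    baseline = np.mean(predict(X) == y)
    importances = np.zeros(X.shape[1])
    for j in range(X.shape[1]):
        drops = []
        for _ in range(n_repeats):
            X_perm = X.copy()
            # Shuffling column j breaks its link with the labels but keeps its distribution.
            X_perm[:, j] = rng.permutation(X_perm[:, j])
            drops.append(baseline - np.mean(predict(X_perm) == y))
        importances[j] = np.mean(drops)
    return importances


def toy_predict(X):
    """Hypothetical model: approves (1) when feature 0 equals 1 and ignores feature 1."""
    return (X[:, 0] == 1).astype(int)


data_rng = np.random.default_rng(42)
X = data_rng.integers(0, 2, size=(200, 2))
y = toy_predict(X)
print(permutation_importance(toy_predict, X, y))
```

Feature 1 gets an importance of exactly 0 because the toy model never looks at it. For real scikit-learn-compatible models, `sklearn.inspection.permutation_importance` provides a maintained implementation of the same idea.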

Responsible AI and Explainable AI

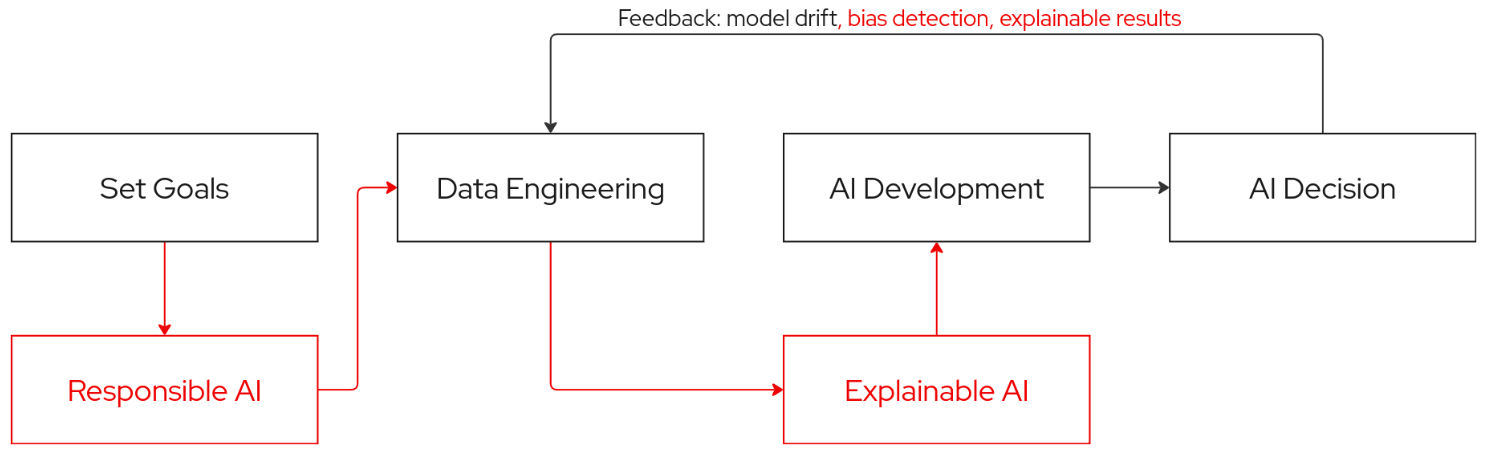

Responsible AI addresses AI during the planning and development stages, while Explainable AI examines a model's results after they are computed. Used together, Responsible AI and Explainable AI lead to better AI.

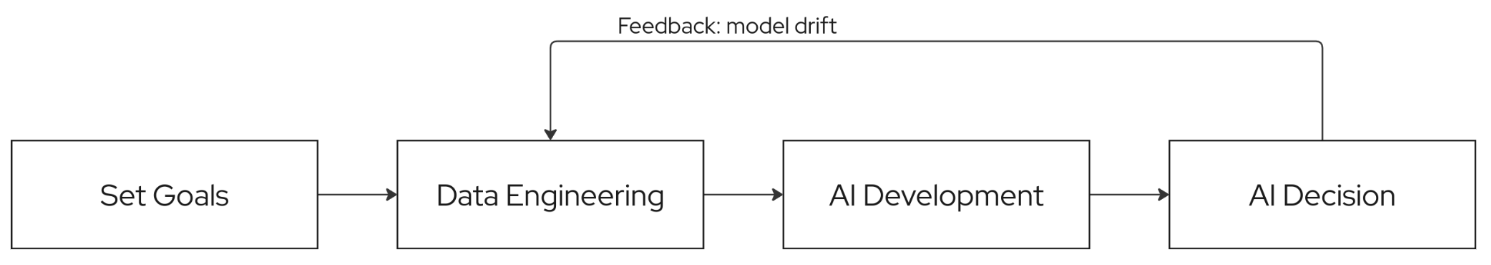

Figure 1 shows a simplified example of an AI model development pipeline. In general, we can improve results and detect drift only once the model is running in production, and we often cannot know why the model made its decisions, predictions, or recommendations.

Figure 2 shows the same pipeline with two added stages: Responsible AI, which applies ethical principles, and Explainable AI, which seeks to understand and explain the model's decisions.

TrustyAI

TrustyAI is a versatile tool that provides explanations of decision-making services and predictive models through the following capabilities:

- Explainability: Enrich model execution information through Explainable AI (XAI) algorithms, such as LIME and SHAP.

- Tracing and accountability: Extract, collect, and publish metadata for auditing and compliance.

- Runtime monitoring: Expose services in dashboards to assess data from both a business and an operational perspective.
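To make the tracing and accountability idea more concrete, here is a hypothetical sketch of the kind of metadata an auditing pipeline might record for each prediction. The PredictionTrace class and its fields are assumptions for illustration only; they are not TrustyAI's data format or API.

```python
import hashlib
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone


@dataclass
class PredictionTrace:
    """Hypothetical audit record for a single model decision."""
    model_name: str
    model_version: str
    inputs: dict
    output: int
    timestamp: str = field(default_factory=lambda: datetime.now(timezone.utc).isoformat())

    def input_fingerprint(self) -> str:
        """SHA-256 of the inputs (keys sorted), so a decision can be matched to its data."""
        payload = json.dumps(self.inputs, sort_keys=True).encode("utf-8")
        return hashlib.sha256(payload).hexdigest()

    def to_json(self) -> str:
        """Serialize the record together with its input fingerprint."""
        record = asdict(self)
        record["input_sha256"] = self.input_fingerprint()
        return json.dumps(record, sort_keys=True)


trace = PredictionTrace("loan-classifier", "1.0", {"Credit_History": 0, "Gender": 0}, output=0)
print(trace.to_json())
```

Sorting the keys before hashing makes the fingerprint independent of dictionary order, so the same inputs always map to the same hash.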

Show me the code!

The best way to introduce TrustyAI is through a demonstration. The code for this implementation is available in the tarcis-io/demo_trustyai repository, in the Jupyter notebook trustyai.ipynb.

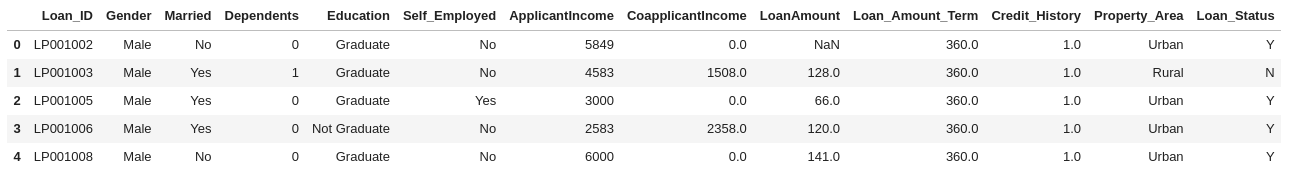

For this demo, we will use the loan dataset available on Kaggle. After downloading it, we can create the dataset object using the pandas library:

from pandas import read_csv

dataset = read_csv('loan_data_set.csv')

dataset.head()

The head method returns the first five rows of the dataset, as shown in Figure 3.

The next step is to prepare the dataset, creating the features and labels that will be used to create the machine learning classification model. To do this, we will use the scikit-learn library:

from sklearn.model_selection import train_test_split

from sklearn.preprocessing import LabelEncoder

label_encoder = LabelEncoder()

features = dataset.drop('Loan_ID', axis = 1).drop('Loan_Status', axis = 1)

features = features.apply(label_encoder.fit_transform)

labels = dataset[['Loan_Status']]

labels = labels.apply(label_encoder.fit_transform)

features_train, features_test, labels_train, labels_test = train_test_split(features, labels)

Classification is a supervised machine learning method in which the algorithm tries to predict the correct label for given input data. In this case, the model will try to predict whether the loan was approved (column Loan_Status of the dataset) based on the applicant's attributes (columns Gender, Married, Dependents, Education, etc.). To create and train the model, we will use the xgboost library:

from xgboost import XGBClassifier

model = XGBClassifier()

model.fit(features_train, labels_train)

To check the model's accuracy, we can use the score method, which returns the mean accuracy on the test data as a value between 0 and 1:

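model.score(features_test, labels_test)

0.7012987012987013

The model correctly classifies about 70% of the test examples. Because train_test_split shuffles the data randomly, the exact value varies between runs unless a random_state is set.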
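The score method of a scikit-learn-compatible classifier such as XGBClassifier reports mean accuracy: the fraction of predictions that match the true labels. A minimal plain-Python equivalent, using a hypothetical accuracy helper, looks like this:

```python
def accuracy(y_true, y_pred):
    """Fraction of predictions that match the true labels."""
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")
    if len(y_true) == 0:
        raise ValueError("at least one label is required")
    correct = sum(1 for t, p in zip(y_true, y_pred) if t == p)
    return correct / len(y_true)


print(accuracy([1, 0, 1, 1], [1, 0, 0, 1]))  # 0.75
print(108 / 154)  # 0.7012987012987013
```

As a sanity check on the value above, 108/154 evaluates to 0.7012987012987013, so the reported score is consistent with 108 correct predictions out of 154 test rows. This is an inference from the number, not a figure taken from the notebook.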

The model's accuracy can likely be improved by applying data preparation techniques such as treating missing values and feature engineering.
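One way to treat missing values is to fill numeric columns with their median and categorical columns with their most frequent value before encoding. The sketch below assumes pandas and uses a small hypothetical sample shaped like a few columns of the loan dataset; the fill_missing helper is illustrative, and the right strategy for each column (for example, whether Credit_History should be treated as categorical) is a modeling decision.

```python
import pandas as pd


def fill_missing(df: pd.DataFrame) -> pd.DataFrame:
    """Fill numeric columns with their median and other columns with their mode."""
    filled = df.copy()
    for column in filled.columns:
        if pd.api.types.is_numeric_dtype(filled[column]):
            filled[column] = filled[column].fillna(filled[column].median())
        else:
            filled[column] = filled[column].fillna(filled[column].mode().iloc[0])
    return filled


# Small hypothetical sample, not rows from the real dataset.
sample = pd.DataFrame({
    "Gender": ["Male", None, "Male", "Female"],
    "LoanAmount": [120.0, None, 100.0, 140.0],
    "Credit_History": [1.0, 0.0, None, 1.0],
})
clean = fill_missing(sample)
print(clean)
```

In the demo, a helper like this would run on the dataset before the label encoding step, so that missing values do not end up as a separate encoded category.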

Now, we will run predictions on the first two examples of the test set, which train_test_split has already shuffled randomly. The output is the classification as loan not approved or loan approved (values 0 and 1, respectively, with the LabelEncoder used above, which sorts the original labels N and Y alphabetically):

example_0 = features_test.iloc[0]

example_1 = features_test.iloc[1]

predictions = model.predict(features_test.iloc[[0, 1]])

example_0

Gender               0
Married              0
Dependents           0
Education            0
Self_Employed        0
ApplicantIncome    290
CoapplicantIncome    0
LoanAmount          89
Loan_Amount_Term     8
Credit_History       0
Property_Area        1
Name: 69, dtype: int64

example_1

Gender               1
Married              1
Dependents           3
Education            1
Self_Employed        0
ApplicantIncome     96
CoapplicantIncome   66
LoanAmount         122
Loan_Amount_Term     8
Credit_History       1
Property_Area        0
Name: 340, dtype: int64

predictions

array([0, 1])

We can see that the model classified applicant example_0 as loan not approved and applicant example_1 as loan approved.
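Note that LabelEncoder was applied to every column, so each value is replaced by its position among the column's sorted unique values. This is why Loan_Status N and Y become 0 and 1, and why numeric attributes such as ApplicantIncome show ranks (for example, 290) rather than amounts. The NumPy sketch below reproduces that mapping with np.unique; the label_encode helper and the sample values are hypothetical.

```python
import numpy as np


def label_encode(values):
    """Return the sorted unique values and each value's index among them."""
    classes, codes = np.unique(np.asarray(values), return_inverse=True)
    return classes, codes


classes, codes = label_encode(["Y", "N", "Y", "Y", "N"])
print(classes, codes)  # ['N' 'Y'] [1 0 1 1 0]

# Numeric columns are encoded the same way, so amounts turn into ranks.
incomes = [5000, 4500, 3000, 2500, 6000]
_, income_codes = label_encode(incomes)
print(income_codes)  # [3 2 1 0 4]
```

Ranks preserve order, which tree-based models such as XGBoost can still split on, but they discard the distances between amounts and make the explanations below refer to codes rather than currency values.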

However, why did the model classify applicant example_0 as loan not approved and example_1 as loan approved? Which attributes did it take into consideration, and which were decisive? Was Gender decisive for these two applicants? Is Gender a decisive parameter for the model in general?

To try to explain the predictions of this model, we will apply Explainable AI techniques with the trustyai package. First, we need to wrap the previous model in a TrustyAI Model:

from trustyai.model import Model

trustyai_model = Model(

fn = model.predict,

feature_names = list(features_train),

output_names = list(labels_train),

dataframe_input = True

)

Next, we need to create an explainer to explain the predictions made by the model. To use the LIME algorithm, we can create a LimeExplainer and use it as follows:

from trustyai.explainers import LimeExplainer

lime_explainer = LimeExplainer()

lime_explanation_0 = lime_explainer.explain(

model = trustyai_model,

inputs = example_0.astype(float),

outputs = predictions[0]

)

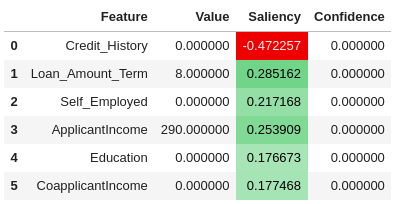

lime_explanation_0.as_html()['Loan_Status']

The LIME explanation of the model's prediction for example_0 is presented in Figure 4. For this example, the attribute Credit_History (column Saliency, in red) strongly influenced the model's decision not to approve the loan.
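Under the hood, LIME explains one prediction by sampling points around the instance, querying the model on them, weighting each sample by its proximity to the instance, and fitting a weighted linear surrogate whose coefficients play the role of saliencies. The NumPy sketch below is a simplified version of that idea, assuming continuous features, Gaussian perturbations, and an exponential kernel; it is not TrustyAI's implementation, and the lime_weights and black_box names are hypothetical.

```python
import numpy as np


def lime_weights(predict, x, n_samples=2000, scale=1.0, kernel_width=1.0, seed=0):
    """Coefficients of a locally weighted linear surrogate fitted around x."""
    rng = np.random.default_rng(seed)
    x = np.asarray(x, dtype=float)
    # 1. Perturb the instance with Gaussian noise.
    samples = x + rng.normal(0.0, scale, size=(n_samples, x.size))
    # 2. Query the black-box model on every perturbed point.
    targets = predict(samples)
    # 3. Weight each sample by its proximity to x (exponential kernel).
    distances = np.linalg.norm(samples - x, axis=1)
    weights = np.exp(-(distances ** 2) / kernel_width ** 2)
    # 4. Weighted least squares: scale rows by sqrt(weight), then solve.
    design = np.hstack([np.ones((n_samples, 1)), samples - x])
    sqrt_w = np.sqrt(weights)
    coef, *_ = np.linalg.lstsq(design * sqrt_w[:, None], targets * sqrt_w, rcond=None)
    return coef[1:]  # drop the intercept, keep one weight per feature


def black_box(z):
    """Hypothetical model: probability rises with feature 0 and ignores feature 1."""
    return 1.0 / (1.0 + np.exp(-3.0 * z[:, 0]))


print(lime_weights(black_box, [0.0, 0.0]))
```

Because black_box depends only on feature 0, the first weight comes out clearly positive and the second close to zero. Real LIME implementations add refinements such as feature selection and dedicated sampling for categorical data.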

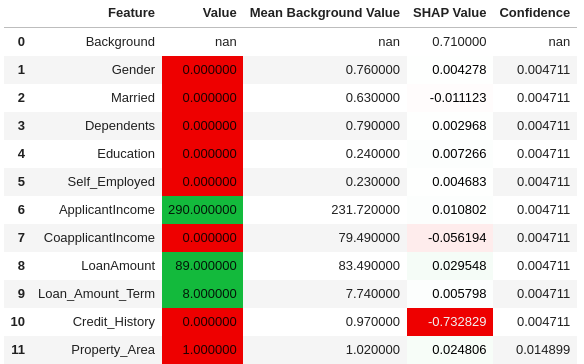

Similarly, to use the SHAP algorithm, we can create a SHAPExplainer and use it as follows:

from trustyai.explainers import SHAPExplainer

shap_explainer = SHAPExplainer(background = features_train[:100].astype(float))

shap_explanation_0 = shap_explainer.explain(

model = trustyai_model,

inputs = example_0.astype(float),

outputs = predictions[0]

)

shap_explanation_0.as_html()['Loan_Status']

The SHAP explanation of the model's prediction for example_0 is presented in Figure 5. Like LIME, SHAP identifies Credit_History as the attribute with the strongest influence on the model's decision (column SHAP Value, in red).
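The background argument passed to SHAPExplainer matters because SHAP needs a way to represent features that are absent from a coalition. A common choice, sketched below in NumPy, fills absent features with values from background rows and averages the predictions; exact Shapley values then follow by enumerating every coalition, and they add up to the prediction minus the average background prediction. This brute-force version is exponential in the number of features, which is why Kernel SHAP-style explainers approximate the values instead; shapley_values and the toy linear model are hypothetical, not TrustyAI's code.

```python
import itertools
import math

import numpy as np


def shapley_values(predict, x, background):
    """Exact Shapley values of predict at x; absent features take background values."""
    x = np.asarray(x, dtype=float)
    background = np.asarray(background, dtype=float)
    n = x.size

    def coalition_value(coalition):
        # Present features take x's values; absent ones keep each background row's values.
        data = background.copy()
        idx = list(coalition)
        if idx:
            data[:, idx] = x[idx]
        return float(np.mean(predict(data)))

    phi = np.zeros(n)
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):
            weight = math.factorial(size) * math.factorial(n - size - 1) / math.factorial(n)
            for subset in itertools.combinations(others, size):
                phi[i] += weight * (coalition_value(subset + (i,)) - coalition_value(subset))
    return phi


def linear_model(z):
    """Hypothetical model used only to check the sketch."""
    return 2.0 * z[:, 0] + 3.0 * z[:, 1]


x = [1.0, 1.0]
background = [[0.0, 0.0], [2.0, 0.0]]
phi = shapley_values(linear_model, x, background)
print(phi)  # [0. 3.]
```

For a linear model, each value reduces to the weight times the difference between the instance and the background mean: here 2 × (1 − 1) = 0 and 3 × (1 − 0) = 3, which sum to the prediction 5 minus the average background prediction 2.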

Conclusion

Increasingly, decisions that affect our lives will be made by intelligent systems, such as whether we are approved for a loan or whether we are a good fit for a job vacancy. Being able to explain this decision-making is extremely important, especially from an ethical perspective.

Explainable AI brings together a series of algorithms, such as LIME and SHAP, that can help produce these explanations. TrustyAI is an open source tool that lets us apply these algorithms to our models and can be integrated into the model development lifecycle.

Responsible AI practices such as ethical guidelines, transparency, and bias mitigation are essential to promoting trustworthy AI systems that benefit society. By prioritizing these principles, we can build AI that is not only technically advanced, but also safe, fair, and aligned with human values and needs.