Kafka ecosystem

Kafka is a mature ecosystem, and its brokers and client APIs are not the only tools it offers to developers. Here are a few additional tools that help you write and deploy message-passing applications at scale.

What is a service registry?

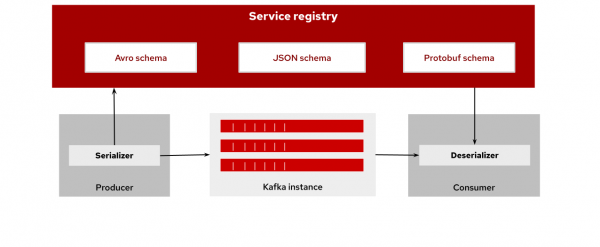

A service registry is a datastore for sharing standard event schemas and API designs across an organization. A service registry lets you decouple the structure of your data from your client applications. You share and manage your data types and API descriptions at runtime using a REST interface.

Development teams can search the registry for existing schemas and register new ones. The stored schemas can be used to serialize and deserialize messages (Figure 7). Before producing or consuming messages, client applications can check the schema to ensure that the messages they send and receive are compatible with it and can therefore be parsed correctly.
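The check described above can be illustrated with a toy model. This is a minimal sketch, not the API of any real registry: `REGISTRY`, `register_schema`, `conforms`, and the `order-created` schema are all hypothetical names, and the registry is an in-memory dictionary rather than a service reached over REST.

```python
from typing import Any

# Hypothetical in-memory stand-in for a service registry. A real registry
# is a separate service that clients query over REST.
REGISTRY: dict[str, dict[str, type]] = {}


def register_schema(name: str, fields: dict[str, type]) -> None:
    """Store a schema as a mapping of field name to expected Python type."""
    REGISTRY[name] = dict(fields)


def conforms(name: str, message: dict[str, Any]) -> bool:
    """Return True if the message has exactly the schema's fields, with matching types."""
    schema = REGISTRY[name]
    if set(message) != set(schema):
        return False
    return all(isinstance(message[field], expected) for field, expected in schema.items())


# One team registers the schema once; every client can then look it up by name.
register_schema("order-created", {"order_id": int, "customer": str, "total": float})

good = {"order_id": 42, "customer": "ada", "total": 19.99}
bad = {"order_id": "42", "customer": "ada"}  # wrong type for order_id, missing total

for candidate in (good, bad):
    verdict = "send" if conforms("order-created", candidate) else "reject"
    print(verdict, candidate)
```

A producer would run a check like `conforms` before sending, and a consumer before parsing, so that incompatible messages are caught at the edge rather than downstream.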

What are connectors?

A connector is a tool that understands the data format of a particular source or sink (such as a specific database engine) and can move data quickly into and out of Kafka.

The Apache Kafka project includes Kafka Connect, a tool to manage data integration using an Apache Kafka cluster as the hub. Kafka Connect provides prebuilt connectors for databases, services, and other technologies. These connectors can be used to move data into a Kafka cluster from a given source, and out of a Kafka cluster to a given sink. The Kafka project has also created tools that make it easy to define your own connectors.
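As a rough illustration of what defining a connector looks like, the sketch below builds the JSON document that a Kafka Connect worker in distributed mode accepts on its REST interface (`POST /connectors`). It uses the `FileStreamSourceConnector` example class from the Apache Kafka project. The helper name `file_source_connector`, the connector name, the file path, and the topic are assumptions made for this example, and the code only prints the payload rather than contacting a cluster.

```python
import json


def file_source_connector(name: str, path: str, topic: str) -> dict:
    """Build a connector definition that streams lines of a file into a topic."""
    return {
        "name": name,
        "config": {
            # Example source connector shipped with the Apache Kafka project.
            "connector.class": "org.apache.kafka.connect.file.FileStreamSourceConnector",
            "tasks.max": "1",
            "file": path,    # source: file to read lines from
            "topic": topic,  # destination topic in the Kafka cluster
        },
    }


# Hypothetical values, chosen only for illustration.
payload = file_source_connector("local-file-source", "/tmp/input.txt", "file-lines")
print(json.dumps(payload, indent=2))
```

A sink connector is defined the same way, with a sink connector class and the topics to read from in place of the source settings.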

What is change data capture?

Change data capture is a software design pattern that allows a system such as Debezium to monitor data changes made in a database and then deliver those changes in real time to a downstream process or system. The goal is to keep data in sync on all data sources in real time or near real time, by moving and processing data continuously as new database events occur.
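To make the pattern concrete, here is a minimal sketch of a downstream consumer that keeps a replica in sync by applying change events in order. The events are simplified versions of the Debezium envelope, keeping only its `op`, `before`, and `after` fields. The `apply_change` function, the `id` primary key, and the sample rows are assumptions made for this example.

```python
from typing import Any

Row = dict[str, Any]


def apply_change(replica: dict[int, Row], event: dict[str, Any]) -> None:
    """Apply one change event to a replica keyed by the (assumed) primary key 'id'."""
    op = event["op"]
    if op in ("c", "r", "u"):  # create, snapshot read, update: take the new row state
        row = event["after"]
        replica[row["id"]] = row
    elif op == "d":  # delete: only the old row state is present
        replica.pop(event["before"]["id"], None)
    else:
        raise ValueError(f"unsupported operation: {op!r}")


# Hypothetical change stream for a 'customers' table.
events = [
    {"op": "c", "before": None, "after": {"id": 1, "email": "a@example.com"}},
    {"op": "c", "before": None, "after": {"id": 2, "email": "b@example.com"}},
    {"op": "u", "before": {"id": 1, "email": "a@example.com"},
     "after": {"id": 1, "email": "a2@example.com"}},
    {"op": "d", "before": {"id": 2, "email": "b@example.com"}, "after": None},
]

replica: dict[int, Row] = {}
for event in events:
    apply_change(replica, event)

print(replica)
```

Because every change carries the row state before and after the operation, the consumer never has to query the source database to stay current.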

What is kcat?

kcat is an open source tool created by the Kafka community to let you connect to Kafka directly from the command line, where you can consume and produce messages as well as list topic and partition information. kcat was formerly known as kafkacat.
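The three tasks mentioned above map onto kcat's `-L` (list metadata), `-C` (consume), and `-P` (produce) modes. The sketch below only assembles and prints the command lines so that it runs without a cluster. The broker address `localhost:9092`, the topic `demo-topic`, and the helper function names are assumptions made for this example.

```python
import shlex

BROKER = "localhost:9092"  # assumed broker address
TOPIC = "demo-topic"       # hypothetical topic name


def list_metadata_cmd(broker: str) -> list[str]:
    """List brokers, topics, and partitions known to the cluster."""
    return ["kcat", "-b", broker, "-L"]


def consume_cmd(broker: str, topic: str) -> list[str]:
    """Consume a topic from the beginning and exit after the last message."""
    return ["kcat", "-b", broker, "-t", topic, "-C", "-o", "beginning", "-e"]


def produce_cmd(broker: str, topic: str) -> list[str]:
    """Produce to a topic; kcat reads one message per line from standard input."""
    return ["kcat", "-b", broker, "-t", topic, "-P"]


for cmd in (list_metadata_cmd(BROKER), consume_cmd(BROKER, TOPIC), produce_cmd(BROKER, TOPIC)):
    print(shlex.join(cmd))
```

Each printed line can be pasted into a shell, or the argument lists can be passed to `subprocess.run` when kcat is installed and a broker is reachable.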

What is Kafka Streams?

Kafka Streams provides a domain-specific language (DSL) for building scalable stream-processing pipelines with minimal code. The DSL supports stateless operations, such as filtering an existing topic to create a new topic that contains only the messages matching specific criteria. It also supports stateful operations, such as computing aggregates and joining messages from multiple input streams (topics).
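The difference between stateless and stateful operations can be shown without Kafka at all. The sketch below is a plain-Python analogy, not the Kafka Streams API (which is a Java library): `filter_stream`, `running_totals`, and the sample `payments` records are hypothetical, and an in-memory list stands in for a topic.

```python
from collections import defaultdict
from typing import Callable, Iterable, Iterator

Record = tuple[str, int]  # (key, value), for example (customer, amount)


def filter_stream(records: Iterable[Record],
                  predicate: Callable[[Record], bool]) -> Iterator[Record]:
    """Stateless: each record is kept or dropped on its own merits."""
    return (record for record in records if predicate(record))


def running_totals(records: Iterable[Record]) -> Iterator[Record]:
    """Stateful: keeps a per-key sum and emits the updated total for each record."""
    totals: dict[str, int] = defaultdict(int)
    for key, value in records:
        totals[key] += value
        yield key, totals[key]


# Stand-in for an input topic.
payments = [("alice", 30), ("bob", 5), ("alice", 70), ("bob", 200)]

large = list(filter_stream(payments, lambda record: record[1] >= 50))
totals = list(running_totals(payments))

print(large)
print(totals)
```

The filter needs no memory of earlier records, whereas the aggregate must hold per-key state between records. In Kafka Streams that state is managed by the library on behalf of the application.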

Want to see a practical example?

Try our Develop consumers and producers in Java learning path.