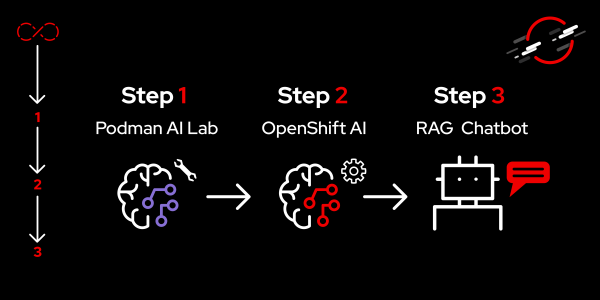

Overview: From Podman AI Lab to OpenShift AI

Many people today say, "I want the ability to chat with my documents." They may know that large language models (LLMs) make this possible, but the implementation details and the possible risks are unclear to them, which makes it hard to know where to start.

Thankfully, there is a path forward with the newly released Podman AI Lab extension. Podman AI Lab lets you pull down models and test them locally to see how they perform and to determine which ones work best for your use cases. The chatbot recipe within Podman AI Lab makes integrating LLMs with applications as easy as the click of a button.

Podman AI Lab is an excellent place to evaluate and test models, but you'll eventually want to see how a chatbot like this would be deployed in your enterprise. For that, we can use Red Hat OpenShift and Red Hat OpenShift AI along with the Elasticsearch vector database to create a retrieval-augmented generation (RAG) chatbot.

This learning path will walk you through how to go from a chatbot recipe in the Podman AI Lab extension to a RAG chatbot deployed on OpenShift and OpenShift AI.

Prerequisites:

- Admin access to an OpenShift 4.12+ cluster. The following code was tested with an OpenShift 4.15 cluster and OpenShift AI 2.9.

- OpenShift AI 2.10

- OpenShift 4.12 - 4.15

In this learning path, you will:

- Download, run, and test LLMs in Podman AI Lab.

- Find out how easy it is to start a chatbot recipe in Podman AI Lab with the downloaded model.

- Set up OpenShift AI and the Elasticsearch vector database.

- Run a notebook in OpenShift AI that ingests PDFs and URLs into a vector database.

- Configure an OpenShift AI project and its LLM model serving platform, and upload a custom model serving runtime.

- Update the chatbot recipe code from Podman AI Lab to use LangChain to connect to the Elasticsearch vector database and the OpenShift AI model serving endpoint, then deploy it on OpenShift.
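
Conceptually, the last few steps form a RAG pipeline: document chunks are stored in a vector database, the chunks closest to a question are retrieved, and they are passed to the model as context. The following is a minimal sketch of that flow in plain Python, not the code used in this learning path. It assumes a toy word-count similarity in place of a real embedding model and Elasticsearch, and the `CHUNKS` list, `retrieve`, and `build_prompt` are hypothetical names chosen for illustration.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy 'embedding': lowercase word counts (stand-in for a real embedding model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[w] * b[w] for w in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(question: str, chunks: list[str], k: int = 2) -> list[str]:
    """Return the k chunks most similar to the question (stand-in for a vector search)."""
    q = embed(question)
    return sorted(chunks, key=lambda c: cosine(q, embed(c)), reverse=True)[:k]


def build_prompt(question: str, context: list[str]) -> str:
    """Assemble the prompt that would be sent to a model serving endpoint."""
    joined = "\n".join(f"- {c}" for c in context)
    return f"Answer using only this context:\n{joined}\n\nQuestion: {question}"


# Hypothetical document chunks; the learning path ingests PDFs and URLs instead.
CHUNKS = [
    "Podman AI Lab lets you download and test models locally.",
    "OpenShift AI serves models behind an inference endpoint.",
    "Elasticsearch stores document embeddings for similarity search.",
]

if __name__ == "__main__":
    question = "Where are document embeddings stored?"
    print(build_prompt(question, retrieve(question, CHUNKS, k=1)))
```

In the deployed chatbot, the retrieval step is handled by the Elasticsearch vector database through LangChain, and the assembled prompt goes to the OpenShift AI model serving endpoint rather than being printed.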