Red Hat OpenShift AI learning

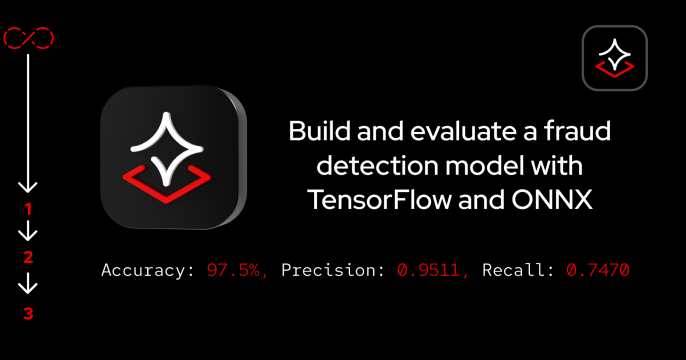

OpenShift AI gives data scientists and developers a powerful AI/ML platform for building AI-enabled applications. Working in one consistent environment, they can collaborate to move quickly from experiment to production.

Build smarter with Red Hat OpenShift AI

OpenShift AI is available either as an add-on cloud service for Red Hat OpenShift Service on AWS or Red Hat OpenShift Dedicated, or as a self-managed software product. It provides an AI platform built on popular open source tooling. Familiar tools and libraries such as Jupyter, TensorFlow, and PyTorch, along with MLOps components for model serving and data science pipelines, are integrated into a flexible UI.