In a previous article, I showed you how to customize Red Hat OpenShift software-defined networking (SDN) for your organization's requirements and restrictions. In this article, we'll look at using the Kuryr SDN instead. Using Kuryr with OpenShift 3.11 on Red Hat OpenStack Platform 13 changes the customization requirements because Kuryr works directly with OpenStack Neutron and Octavia.

Note: This article builds on the discussion and examples from my previous one. I recommend reading the previous one first.

Background

Traditional OpenShift installations leverage openshift-sdn, which is specific to OpenShift. Using openshift-sdn means that your containers run on a network within a network. This setup, known as double encapsulation, introduces an additional layer of complexity, which becomes apparent when troubleshooting network issues. Double encapsulation also affects network performance due to the overhead of running a network within a network.

If you are running OpenShift on OpenStack, then you have the option to use the Kuryr SDN, which allows you to directly access OpenStack's Neutron services and avoid double encapsulation. Using the kuryr-cni means that all of your OpenShift components—networks, subnets, load balancers, ports, and so on—are, in fact, OpenStack resources. This setup reduces the complexity of the networking layer. It also improves network performance. As another benefit, you can assign floating IPs to any of the OpenShift services, which allows traffic directly into the service without having to go through the OpenShift router.

In some cases, you might need to customize Kuryr's network-range defaults, for example, if the defaults overlap with your organization’s network. Let's look at the requirements for customizing the Kuryr network.

Customizing the Kuryr network

We'll use the same base address range from the customization example in my previous article: 192.168.0.0/16. In this case, we'll divide the range between the service network (192.168.128.0/18), pod network (192.168.0.0/17), and docker-bridge network. For this specific example, we intend to configure Kuryr with namespace isolation, similar to ovs-multitenant when using openshift-sdn.

Note: The openshift-sdn configuration with OpenShift-Ansible, which was featured in my last article, requires significantly more parameters to deliver the same network IP range customizations that we can achieve with the Kuryr Container Network Interface (CNI).

Kuryr service network

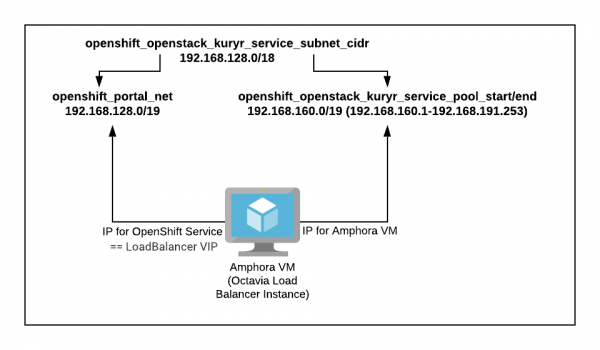

Because Kuryr uses Octavia load balancers, each of which runs as an Amphora VM in OpenStack, every OpenShift service is an instance of an Octavia load balancer. The openshift_portal_net parameter defines the address range that OpenShift uses to assign IP addresses to OpenShift (Kubernetes) services. Each of these IP addresses is associated with an Octavia load balancer as its VIP (virtual IP, or floating port in OpenStack), so that the service address survives a failover. Additionally, because each Octavia load balancer runs as an Amphora VM, it also requires its own port and IP address, which must not collide with the openshift_portal_net range.

Using the following ranges ensures a clear distinction between the Amphora VM IP range and the OpenShift service IP range:

- openshift_openstack_kuryr_service_subnet_cidr: The range used by both OpenShift service IPs and the Octavia load balancer Amphora VM IPs. In this case, the range is 192.168.128.0/18, which spans 192.168.128.0 to 192.168.191.255.

- openshift_portal_net: The range dedicated to OpenShift service IPs. In this case, the range is 192.168.128.0/19, the first half of the service subnet, which spans 192.168.128.0 to 192.168.159.255.

- openshift_openstack_kuryr_service_pool_start: The start of the range used by the Octavia load balancer Amphora VM IPs. This range is the second half (192.168.160.0/19) of the range set by the first parameter (openshift_openstack_kuryr_service_subnet_cidr); in this case, it starts at 192.168.160.1.

- openshift_openstack_kuryr_service_pool_end: The end of the range used by the Octavia load balancer Amphora VM IPs. In this case, it is 192.168.191.253.
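The split described above is plain CIDR arithmetic. As a minimal sketch, Python's standard ipaddress module can derive the two /19 halves of the /18 service subnet; the helper name split_service_subnet is hypothetical and not part of openshift-ansible or Kuryr.

```python
import ipaddress
from typing import Tuple


def split_service_subnet(cidr: str) -> Tuple[ipaddress.IPv4Network, ipaddress.IPv4Network]:
    """Split the Kuryr service subnet into two equal halves.

    The first half is meant for openshift_portal_net (service IPs) and the
    second half for the Octavia Amphora VM pool.
    """
    network = ipaddress.IPv4Network(cidr)
    # prefixlen_diff=1 yields exactly two subnets, in address order.
    portal_net, amphora_half = network.subnets(prefixlen_diff=1)
    return portal_net, amphora_half


if __name__ == "__main__":
    portal_net, amphora_half = split_service_subnet("192.168.128.0/18")
    print("openshift_portal_net:", portal_net)
    print("Amphora half:", amphora_half, "spans", amphora_half[0], "to", amphora_half[-1])
```

For this article's values, the first half is 192.168.128.0/19 and the second half is 192.168.160.0/19, which contains the pool start and end values used in the inventory.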

Figure 1 shows how Amphora VM IPs are allocated when an OpenShift service is created.

Kuryr pod network

The pod network is straightforward: only its range needs to be set. In our case, it's:

openshift_openstack_kuryr_pod_subnet_cidr: 192.168.0.0/17

Customizing the Kuryr inventory parameters

Next, we'll look at all of the inventory parameters required to successfully configure a customized OpenShift IP range.

Enabling namespace isolation

These parameters are needed to enable namespace isolation in Kuryr:

openshift_use_kuryr: True
openshift_use_openshift_sdn: False
use_trunk_ports: True
os_sdn_network_plugin_name: cni
openshift_node_proxy_mode: userspace
kuryr_openstack_pool_driver: nested

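You must ensure that os_sdn_network_plugin_name is set to cni, as shown above, and not to an openshift-sdn plugin value such as redhat/openshift-ovs-multitenant.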

IP range

If the IP ranges are not clearly separated, the kuryr-controller can suffer performance problems, and IP conflicts can occur. We use the following values:

#SERVICE
openshift_portal_net: 192.168.128.0/19
openshift_openstack_kuryr_service_subnet_cidr: 192.168.128.0/18
openshift_openstack_kuryr_service_pool_start: 192.168.160.1
openshift_openstack_kuryr_service_pool_end: 192.168.191.253
#POD
openshift_openstack_kuryr_pod_subnet_cidr: 192.168.0.0/17

The OpenShift service IP range is from 192.168.128.1 to 192.168.159.254; the Amphora VM IP range is from 192.168.160.1 to 192.168.191.253; and the pod IP range is from 192.168.0.1 to 192.168.127.254.

If the OpenShift service IP range conflicts with the Amphora VM IP range, you will see errors like the following:

ERROR kuryr_kubernetes.controller.drivers.lbaasv2 [-] Error when creating loadbalancer: {"debuginfo": null, "faultcode": "Client", "faultstring": "IP address 192.168.123.123 already allocated in subnet
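Conflicts like this can be caught before deployment by checking the inventory values against each other. The following is a minimal sketch using Python's standard ipaddress module; check_kuryr_ranges is a hypothetical helper, not part of openshift-ansible or Kuryr, and it covers only the overlap rules described in this article.

```python
import ipaddress
from typing import List


def check_kuryr_ranges(portal_net: str, service_subnet: str, pool_start: str,
                       pool_end: str, pod_subnet: str) -> List[str]:
    """Return a list of problems in the Kuryr range settings (empty if none)."""
    portal = ipaddress.ip_network(portal_net)
    service = ipaddress.ip_network(service_subnet)
    start = ipaddress.ip_address(pool_start)
    end = ipaddress.ip_address(pool_end)
    pod = ipaddress.ip_network(pod_subnet)

    problems = []
    if not portal.subnet_of(service):
        problems.append("openshift_portal_net is not inside the Kuryr service subnet")
    if start not in service or end not in service:
        problems.append("Amphora pool is not inside the Kuryr service subnet")
    if start > end:
        problems.append("Amphora pool start is after pool end")
    # Two contiguous address ranges overlap when each starts before the other ends.
    if start <= portal[-1] and end >= portal[0]:
        problems.append("Amphora pool overlaps openshift_portal_net")
    if pod.overlaps(service):
        problems.append("pod subnet overlaps the service subnet")
    return problems


if __name__ == "__main__":
    problems = check_kuryr_ranges(
        portal_net="192.168.128.0/19",
        service_subnet="192.168.128.0/18",
        pool_start="192.168.160.1",
        pool_end="192.168.191.253",
        pod_subnet="192.168.0.0/17",
    )
    print(problems or "no conflicts found")
```

With this article's values the function reports no conflicts. Moving the pool start into the service range, for example to 192.168.150.1, makes it report that the Amphora pool overlaps openshift_portal_net.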

Pre-creating subports

This value is generally relevant when you have a flat network, where pre-creating subports contributes to the overall deployment speed for applications that require network resources. In most cases, you can set this value to false:

openshift_kuryr_precreate_subports: false

Cluster sizing

This parameter is important because it changes the way that you size a cluster. Each namespace gets its own subnet with this prefix length, so the prefix length determines the number of pods per namespace:

openshift_openstack_kuryr_pod_subnet_prefixlen

Where:

- /24 = 256 pods per namespace

- /25 = 128 pods per namespace

- /26 = 64 pods per namespace
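The prefix length also works in the other direction: the smaller each namespace subnet is, the more namespace subnets fit inside the pod network. The following Python sketch (the helper namespace_capacity is hypothetical) computes both numbers for the 192.168.0.0/17 pod subnet used in this article. It counts raw addresses; assuming Neutron keeps a few addresses per subnet (such as the gateway) out of the allocation pool, the number of usable pod IPs per namespace is slightly lower.

```python
import ipaddress
from typing import Tuple


def namespace_capacity(pod_subnet: str, prefixlen: int) -> Tuple[int, int]:
    """Return (addresses per namespace subnet, namespace subnets that fit in pod_subnet)."""
    network = ipaddress.ip_network(pod_subnet)
    if prefixlen < network.prefixlen:
        raise ValueError("namespace prefix must be at least as long as the pod subnet prefix")
    addresses_per_namespace = 2 ** (network.max_prefixlen - prefixlen)
    namespace_count = 2 ** (prefixlen - network.prefixlen)
    return addresses_per_namespace, namespace_count


if __name__ == "__main__":
    # openshift_openstack_kuryr_pod_subnet_cidr from this article's example.
    for prefixlen in (24, 25, 26):
        per_ns, count = namespace_capacity("192.168.0.0/17", prefixlen)
        print(f"/{prefixlen}: {per_ns} addresses per namespace, room for {count} namespaces")
```

For the 192.168.0.0/17 pod subnet, this gives room for 128 namespaces at /24, 256 at /25, and 512 at /26, so choosing a prefix length trades pods per namespace against the total number of namespaces.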

Pool batch

The kuryr_openstack_pool_batch, kuryr_openstack_pool_max, and kuryr_openstack_pool_min values need to be set based on openshift_openstack_kuryr_pod_subnet_prefixlen:

kuryr_openstack_pool_batch:
kuryr_openstack_pool_max:
kuryr_openstack_pool_min:

Here are some suggestions for the following values of openshift_openstack_kuryr_pod_subnet_prefixlen:

24: batch: 5, max: 10, min: 1

25: batch: 4, max: 7, min: 1

26: batch: 3, max: 5, min: 1

Also note that each OpenShift node keeps its own pool of ports for each namespace, so if you have three worker nodes, that namespace has three dedicated pools. Because all of these pools draw their ports from the namespace's subnet, the subnet size set by openshift_openstack_kuryr_pod_subnet_prefixlen limits how many worker nodes you can use.
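To see why, consider a rough worst case in which every worker node holds a full pool of idle ports for the same namespace. The following Python sketch is a back-of-the-envelope estimate under stated assumptions, not Kuryr's actual allocation logic: it assumes each pool can hold up to kuryr_openstack_pool_max ports and that three addresses per subnet are unavailable. The helper name and the reserved count are hypothetical.

```python
# Suggested kuryr_openstack_pool_max values from this article, keyed by
# openshift_openstack_kuryr_pod_subnet_prefixlen.
SUGGESTED_POOL_MAX = {24: 10, 25: 7, 26: 5}

# Assumption: addresses per subnet that cannot be used for ports
# (for example, network, broadcast, and gateway addresses).
RESERVED_ADDRESSES = 3


def max_nodes_for_namespace(prefixlen: int, pool_max: int,
                            reserved: int = RESERVED_ADDRESSES) -> int:
    """Rough count of nodes whose full per-namespace pools fit in one IPv4 namespace subnet.

    Worst case: every node holds pool_max idle ports for the namespace.
    """
    usable = 2 ** (32 - prefixlen) - reserved
    return usable // pool_max


if __name__ == "__main__":
    for prefixlen, pool_max in SUGGESTED_POOL_MAX.items():
        print(f"/{prefixlen} with pool max {pool_max}: "
              f"about {max_nodes_for_namespace(prefixlen, pool_max)} nodes")
```

With the suggested values, the estimate is about 25 nodes for /24, 17 for /25, and 12 for /26. At those node counts, idle pool ports alone could fill the namespace subnet, so a real deployment has less headroom for pods than these numbers suggest.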

kuryr_openstack_ca: "MYORG_CA_Bundle.txt"

If you do not set this value, Kuryr will fail.

Images

Providing specific values for the images rules out the risk of default images being incorrect:

openshift_openstack_kuryr_controller_image
openshift_openstack_kuryr_cni_image

kuryr_openstack_public_net_id

Set this to the ID of your external (public) network. You can get this value from openstack network list --external.

Global namespaces

By default, these are the only namespaces that are considered global, meaning that Kuryr allows other namespaces to reach these namespaces despite namespace isolation:

kuryr_openstack_global_namespaces: default,openshift-monitoring

Namespace isolation

The following settings are required for namespace isolation:

openshift_kuryr_subnet_driver: namespace
openshift_kuryr_sg_driver: namespace

DNS lookup

The values here allow DNS lookup:

openshift_master_open_ports:
- service: dns tcp
  port: 53/tcp
- service: dns udp
  port: 53/udp
openshift_node_open_ports:
- service: dns tcp
  port: 53/tcp
- service: dns udp
  port: 53/udp

openshift_openstack_node_secgroup_rules:
  # NOTE(shadower): the 53 rules are needed for Kuryr
  - direction: ingress
    protocol: tcp
    port_range_min: 53
    port_range_max: 53
  - direction: ingress
    protocol: udp
    port_range_min: 53
    port_range_max: 53
  - direction: ingress
    protocol: tcp
    port_range_min: 10250
    port_range_max: 10250
    remote_ip_prefix: "{{ openshift_openstack_kuryr_pod_subnet_cidr }}"
  - direction: ingress
    protocol: tcp
    port_range_min: 10250
    port_range_max: 10250
    remote_ip_prefix: "{{ openshift_openstack_subnet_cidr }}"
  - direction: ingress
    protocol: udp
    port_range_min: 4789
    port_range_max: 4789
    remote_ip_prefix: "{{ openshift_openstack_kuryr_pod_subnet_cidr }}"
  - direction: ingress
    protocol: udp
    port_range_min: 4789
    port_range_max: 4789
    remote_ip_prefix: "{{ openshift_openstack_subnet_cidr }}"
  - direction: ingress
    protocol: tcp
    port_range_min: 9100
    port_range_max: 9100
    remote_ip_prefix: "{{ openshift_openstack_kuryr_pod_subnet_cidr }}"
  - direction: ingress
    protocol: tcp
    port_range_min: 9100
    port_range_max: 9100
    remote_ip_prefix: "{{ openshift_openstack_subnet_cidr }}"
  - direction: ingress
    protocol: tcp
    port_range_min: 8444
    port_range_max: 8444
    remote_ip_prefix: "{{ openshift_openstack_kuryr_pod_subnet_cidr }}"
  - direction: ingress
    protocol: tcp
    port_range_min: 8444
    port_range_max: 8444
    remote_ip_prefix: "{{ openshift_openstack_subnet_cidr }}"

Most importantly, never use remote_group_id in these rules; use only remote_ip_prefix.
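The node security group rules follow a regular pattern: DNS is open on TCP and UDP, and each remaining port is opened twice, once for the pod subnet and once for the node subnet, always with remote_ip_prefix. The following Python sketch rebuilds the same ten rules as plain dictionaries and checks that none uses remote_group_id. The function names are hypothetical, and the Jinja-style strings are kept as literal placeholders rather than rendered values.

```python
from typing import Dict, List, Union

Rule = Dict[str, Union[str, int]]

# Literal placeholders for the Ansible variables used in the inventory;
# they are not rendered here.
SOURCE_CIDRS = [
    "{{ openshift_openstack_kuryr_pod_subnet_cidr }}",
    "{{ openshift_openstack_subnet_cidr }}",
]

# (protocol, port) pairs that are opened once per source range.
PER_CIDR_PORTS = [("tcp", 10250), ("udp", 4789), ("tcp", 9100), ("tcp", 8444)]


def build_node_secgroup_rules() -> List[Rule]:
    """Rebuild the openshift_openstack_node_secgroup_rules entries from this article."""
    # DNS on TCP and UDP, without a source restriction.
    rules: List[Rule] = [
        {"direction": "ingress", "protocol": proto,
         "port_range_min": 53, "port_range_max": 53}
        for proto in ("tcp", "udp")
    ]
    for proto, port in PER_CIDR_PORTS:
        for cidr in SOURCE_CIDRS:
            rules.append({"direction": "ingress", "protocol": proto,
                          "port_range_min": port, "port_range_max": port,
                          "remote_ip_prefix": cidr})
    return rules


def uses_remote_group_id(rules: List[Rule]) -> bool:
    """Return True if any rule references remote_group_id."""
    return any("remote_group_id" in rule for rule in rules)


if __name__ == "__main__":
    rules = build_node_secgroup_rules()
    print(len(rules), "rules; remote_group_id used:", uses_remote_group_id(rules))
```

A check like uses_remote_group_id could be run against a generated or hand-edited rule list before it goes into the inventory.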

Conclusion

Using Kuryr with OpenShift 3.11 on OpenStack 13 provides the benefits of using OpenStack's Neutron networking directly for OpenShift pods and services, instead of the OpenShift SDN. Avoiding double encapsulation improves performance and reduces troubleshooting complexity, and you also get the benefits of directly associating floating IPs to OpenShift services, which is useful in several applications.

There are, however, parameters that must be configured correctly in order to get an optimally running OpenShift cluster with Kuryr. This article identified all of the important parameters and the recommended values that are required to configure a cluster that suits your needs.

Acknowledgments

I would like to acknowledge Luis Tomas Bolivar as my co-author and Phuong Nguyen as our peer reviewer.

References

For additional information:

- See "How to customize the Red Hat OpenShift 3.11 SDN" (Mohammad Ahmad, 2019) for the original discussion and example that are the basis for this article.

- See "Accelerate your OpenShift Network Performance on OpenStack with Kuryr" (Rodriguez, Malleni, and Bolivar, 2019) for an architectural overview and performance comparison of the Kuryr SDN versus OpenShift SDN.

- See the Kuryr SDN and OpenShift Container Platform (OCP 3.11) documentation for detailed information about configuring the Kuryr SDN.

- See Configuring for OpenStack (OCP 3.11) for more about configuring OCP to access the OpenStack infrastructure.

- See Kuryr SDN Administration (OCP 3.11) for more about configuring the Kuryr SDN.