Update August 25, 2020: The Louketo Proxy team has announced that it is sunsetting the Louketo project. Read the link for more information, and watch our site for a new article detailing how to authorize multi-language microservices using a different method.

What if you needed to provide authentication to several microservices that were written in different languages? You could use Red Hat Single Sign-On (SSO) to handle the authentication, but then you would still need to integrate each microservice with Keycloak. Wouldn't it be great if a service could just handle the authentication flow and pass the user's details directly to your microservices? In this article, I introduce a service that does just that!

Louketo Proxy

Louketo Proxy (previously Keycloak-Gatekeeper) integrates with OpenID Connect (OIDC)-compliant providers like Keycloak. Louketo Proxy hands off the authentication to Keycloak, and then passes the authorization and user details to a microservice as header attributes. The diagram in Figure 1 illustrates the authentication flow between Louketo Proxy, Keycloak, and a microservice.
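To make the header hand-off concrete, here is a minimal Python sketch of how a microservice might turn those headers into a user object. The header names (X-Auth-Username, X-Auth-Email, X-Auth-Groups) and the comma-separated group format are assumptions based on the proxy's usual behavior, not something this article defines. The ProxyUser class and user_from_headers function are hypothetical names used for illustration only.

```python
from dataclasses import dataclass, field
from typing import List, Mapping


@dataclass
class ProxyUser:
    """Identity details read from headers set by the proxy (header names assumed)."""
    username: str
    email: str
    groups: List[str] = field(default_factory=list)


def user_from_headers(headers: Mapping[str, str]) -> ProxyUser:
    # HTTP header names are case-insensitive, so normalize before lookup.
    lowered = {name.lower(): value for name, value in headers.items()}
    # Assumption: groups arrive as a single comma-separated header value.
    raw_groups = lowered.get("x-auth-groups", "")
    groups = [g.strip() for g in raw_groups.split(",") if g.strip()]
    return ProxyUser(
        username=lowered.get("x-auth-username", ""),
        email=lowered.get("x-auth-email", ""),
        groups=groups,
    )
```

Because the function only needs a mapping of header names to values, the same idea carries over to any language or web framework that exposes request headers.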

With Louketo Proxy, you don't need to worry about supporting the authentication flow across the different languages used for your microservices. As an added benefit, Louketo Proxy makes it easy to provide authentication to legacy off-the-shelf applications that do not support OIDC.

Louketo Proxy in OpenShift

Before getting started, it is worthwhile to explore the architecture that is required to run Louketo Proxy and a microservice together. In a Red Hat OpenShift deployment, the usual pattern is for a single pod to run a single container, as shown in Figure 2.

For our Louketo Proxy pattern to work, we need one pod to run two containers. Louketo Proxy and the microservice will reside within the same pod and share the resources associated with that pod, including networking. To prevent conflict, Louketo Proxy and the microservice will need to listen on different network ports. The Louketo Proxy instance also must be able to communicate with the microservice without traversing any network links. This setup reduces latency and makes it difficult to execute a man-in-the-middle (MITM) attack, which improves the security model. Figure 3 shows the OpenShift deployment pattern for Louketo Proxy with microservices.

Normally, we would create a service bound to the microservice and expose it via a route. In this case, we only create a service bound to the Louketo Proxy instance and expose it via a route. Consumers will only ever access the microservice via the Louketo Proxy instance. This arrangement enforces the security model.

Getting started with Louketo Proxy

For this example, we deploy a simple Flask application that exposes the authentication headers passed along by Louketo Proxy. If you wish, you can run the example code on Red Hat CodeReady Containers (CRC). Otherwise, you will need to update the code to run on your OpenShift cluster.

Step 1: Deploy Keycloak

The first step is to deploy Keycloak on CodeReady Containers. To fast-track the process, run the following commands from a terminal. These will create a project on OpenShift, deploy Keycloak, and create a route:

    $ oc new-project sso-test
    $ oc new-app --name sso --docker-image=quay.io/keycloak/keycloak -e KEYCLOAK_USER='admin' -e KEYCLOAK_PASSWORD='louketo-demo' -e PROXY_ADDRESS_FORWARDING='true'
    $ oc create route edge --service=sso --hostname=sso.apps-crc.testing

Create and configure the Flask client

Browse to https://sso.apps-crc.testing and log in with the username admin and the password louketo-demo. Once there, select Clients from the left-hand menu and create a new client with the fields shown in Figure 4.

After you have created the client, you will have the option to switch the client access type from public to confidential, as shown in Figure 5.

Keycloak will generate a client secret, which you can view under the Credentials tab shown in Figure 6. You will need the secret when we configure Louketo Proxy, so make a note of it.

Configure the mappers

At this point, we are still configuring the Flask client. Select the Mappers tab and add two mappers:

- Groups:
  - Name: groups
  - Mapper type: Group Membership
  - Token claim name: groups

- Audience:
  - Name: audience
  - Mapper type: Audience
  - Included client audience: flask

Figure 7 shows the configuration for the Audience mapper.

Figure 8 shows the configuration for the Groups mapper.
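The two mappers add a groups claim and an audience (aud) claim to the token. As a rough way to confirm that both claims are present, the following Python sketch decodes a token's payload and checks them. It deliberately does not verify the signature, so it is suitable only for inspection and never for making access decisions. The function names are hypothetical, and the handling of a leading "/" in group names is an assumption about how the Group Membership mapper may be configured.

```python
import base64
import json
from typing import Any, Dict


def decode_claims_unverified(token: str) -> Dict[str, Any]:
    """Return the JWT payload WITHOUT verifying the signature (inspection only)."""
    parts = token.split(".")
    if len(parts) != 3:
        raise ValueError("expected a JWT with three dot-separated parts")
    payload = parts[1]
    # JWTs use unpadded base64url; restore the padding before decoding.
    payload += "=" * (-len(payload) % 4)
    return json.loads(base64.urlsafe_b64decode(payload))


def has_group_and_audience(claims: Dict[str, Any], group: str, audience: str) -> bool:
    groups = claims.get("groups", [])
    aud = claims.get("aud", [])
    # The "aud" claim may be a single string or a list of strings.
    audiences = [aud] if isinstance(aud, str) else list(aud)
    # Assumption: group names may carry a leading "/" (full group path).
    names = {g.lstrip("/") for g in groups}
    return group in names and audience in audiences
```

For example, has_group_and_audience(claims, "basic_user", "flask") should be true for a user who belongs to the basic_user group once both mappers are in place.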

Configure the user groups

Now select Groups from the left-hand menu and add two groups:

- admin

- basic_user

Configure the user

Again from the left-hand menu, select Users and add a user. Be sure to enter an email and set a password for the user, then add the user to the basic_user and admin groups that you just created. Next, we'll configure Louketo Proxy and the example application.

Step 2: Configure Louketo Proxy and the application

We will need the following details from our Keycloak server (note that you will enter the client secret that you saved in Step 1):

- Client ID: flask

- Client secret

- Discovery URL: https://sso.apps-crc.testing/auth/realms/master

- Groups: basic_user
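The discovery URL is the base from which an OIDC client locates the provider's metadata document. Under the OpenID Connect Discovery convention, that document lives at a well-known path below the issuer URL. The short Python sketch below builds that address; the function name is hypothetical, and it assumes the proxy follows the standard convention.

```python
def openid_configuration_url(discovery_url: str) -> str:
    """Build the standard OIDC metadata URL from an issuer/discovery URL."""
    # Strip any trailing slash so the result never contains "//".
    return discovery_url.rstrip("/") + "/.well-known/openid-configuration"
```

Opening the resulting URL in a browser is a quick way to check that the realm is reachable before deploying the proxy.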

Create the ConfigMaps

First, we'll use a ConfigMap to configure Louketo Proxy. Louketo Proxy supports presenting custom pages (sign-in pages, forbidden pages, and so on) that can match the look-and-feel of the associated microservice. For our use case, we will redirect all unauthenticated requests to Keycloak. Make sure that you replace the client-secret in the following ConfigMap with the one generated by Keycloak:

    apiVersion: v1
    kind: ConfigMap
    metadata:
      name: gatekeeper-config
    data:
      keycloak-gatekeeper.conf: |+
        # The URL for retrieving the OpenID configuration - normally the /auth/realms/
        discovery-url: https://sso.apps-crc.testing/auth/realms/master
        # skip tls verify
        skip-openid-provider-tls-verify: true
        # the client ID for the 'client' application
        client-id: flask
        # the secret associated with the 'client' application
        client-secret: < Paste Client Secret here >
        # the interface definition you wish the proxy to listen to, all interfaces are specified as ':', unix sockets as unix://|
        listen: :3000
        # whether to enable refresh-tokens
        enable-refresh-tokens: true
        # the location of a certificate you wish the proxy to use for TLS support
        tls-cert:
        # the location of a private key for TLS
        tls-private-key:
        # the redirection URL, essentially the site url, note: /oauth/callback is added at the end
        redirection-url: https://flask.apps-crc.testing
        secure-cookie: false
        # the encryption key used to encode the session state
        encryption-key: nkOfcT6jYCsXFuV5YRkt3OvY9dy1c0ck
        # the upstream endpoint which we should proxy request
        upstream-url: http://127.0.0.1:8080/
        resources:
        - uri: /*
          groups:
          - basic_user
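Two values in this configuration are worth deriving rather than copying. The encryption-key shown above is a sample, so you may prefer to generate your own, and the callback address that Keycloak redirects to is the redirection-url with /oauth/callback appended. The Python sketch below covers both. The function names are hypothetical, and the restriction to 16 or 32 characters is an assumption that matches the 32-character sample key, so check it against the proxy version you deploy.

```python
import secrets
import string


def generate_encryption_key(length: int = 32) -> str:
    """Generate a random alphanumeric key for the encryption-key setting."""
    # Assumption: the proxy expects a key of 16 or 32 characters.
    if length not in (16, 32):
        raise ValueError("key length must be 16 or 32 characters")
    alphabet = string.ascii_letters + string.digits
    # secrets.choice draws from a cryptographically secure random source.
    return "".join(secrets.choice(alphabet) for _ in range(length))


def callback_url(redirection_url: str) -> str:
    """Return the OAuth callback address derived from redirection-url."""
    return redirection_url.rstrip("/") + "/oauth/callback"
```

With the values used in this article, callback_url("https://flask.apps-crc.testing") yields https://flask.apps-crc.testing/oauth/callback.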

Next, we'll create a ConfigMap for the Flask application. Before we can create the ConfigMap, we need to obtain the public key for Keycloak. To get the public key, log in to the Keycloak admin page and go to Realm Settings > Keys. From there, select the RS256 public key, as shown in Figure 9.

The Flask application uses this public key to check the validity of the JSON Web Token (JWT) that Louketo Proxy passes. Here is the ConfigMap for the Flask application:

    kind: ConfigMap
    apiVersion: v1
    metadata:
      name: sso-public-key
    data:
      PUBLIC_KEY: |
        -----BEGIN PUBLIC KEY-----
        < Paste in the Keycloak public key here >
        -----END PUBLIC KEY-----

Bind the service

Now we need to bind a service to the Louketo Proxy listening port. We defined the listening port in Louketo Proxy's ConfigMap under the listen parameter. Here is the service binding:

    apiVersion: v1
    kind: Service
    metadata:
      name: flask
    spec:
      ports:
      - port: 3000
        protocol: TCP
        targetPort: 3000
      selector:
        app: flask

Configure the route

We use routes to expose our services to consumers. In this case, we are exposing the Louketo Proxy service and terminating TLS on the router:

    kind: Route
    apiVersion: route.openshift.io/v1
    metadata:
      name: flask
    spec:
      host: flask.apps-crc.testing
      to:
        kind: Service
        name: flask
        weight: 100
      port:
        targetPort: 3000
      tls:
        termination: edge
        insecureEdgeTerminationPolicy: Redirect
      wildcardPolicy: None

Apply the DeploymentConfig

Now that we have created the ConfigMaps, service, and route, we can apply our OpenShift DeploymentConfig. If you look carefully at the configuration below, you will see that we are deploying two containers within the same DeploymentConfig. As I said at the beginning of this article, our architecture calls for deploying two containers within the same pod:

    kind: DeploymentConfig
    apiVersion: apps.openshift.io/v1
    metadata:
      name: flask
      labels:
        app: flask
    spec:
      strategy:
        type: Rolling
      replicas: 1
      selector:
        app: flask
      template:
        metadata:
          labels:
            app: flask
        spec:
          containers:
          - name: flask
            image: quay.io/rarm_sa/flask-sso-gatekeeper
            ports:
            - containerPort: 8080
              protocol: TCP
            envFrom:
            - configMapRef:
                name: sso-public-key
            imagePullPolicy: IfNotPresent
          - name: gatekeeper
            image: 'quay.io/louketo/louketo-proxy'
            args:
            - --config=/etc/keycloak-gatekeeper.conf
            ports:
            - containerPort: 3000
              name: gatekeeper
            volumeMounts:
            - name: gatekeeper-config
              mountPath: /etc/keycloak-gatekeeper.conf
              subPath: keycloak-gatekeeper.conf
          volumes:
          - name: gatekeeper-config
            configMap:
              name: gatekeeper-config
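The pattern only works if the port numbers line up across the proxy configuration, the service, and the two containers. The Python sketch below is one way to sanity-check that wiring before you apply the manifests. The function name and its parameters are hypothetical, and you pass the values in by hand rather than having them parsed from the YAML.

```python
from typing import List
from urllib.parse import urlparse


def check_port_wiring(
    listen: str,
    upstream_url: str,
    service_target_port: int,
    proxy_container_port: int,
    app_container_port: int,
) -> List[str]:
    """Return a list of wiring problems; an empty list means the ports agree."""
    problems = []
    # The listen setting looks like ":3000"; take the part after the last colon.
    listen_port = int(listen.rsplit(":", 1)[1])
    upstream = urlparse(upstream_url)

    if listen_port != proxy_container_port:
        problems.append("proxy containerPort does not match the listen port")
    if service_target_port != listen_port:
        problems.append("service targetPort does not point at the proxy")
    if upstream.port != app_container_port:
        problems.append("upstream-url port does not match the application port")
    if upstream.hostname not in ("127.0.0.1", "localhost"):
        problems.append("upstream-url should stay on the pod's loopback interface")
    if listen_port == app_container_port:
        problems.append("proxy and application must listen on different ports")
    return problems
```

With the values from this article, check_port_wiring(":3000", "http://127.0.0.1:8080/", 3000, 3000, 8080) returns an empty list.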

Test the configuration

To test your configuration, browse to the example application at https://flask.apps-crc.testing. You will be redirected to Keycloak and presented with a login screen like the one in Figure 10. Enter the username and password for your application user.

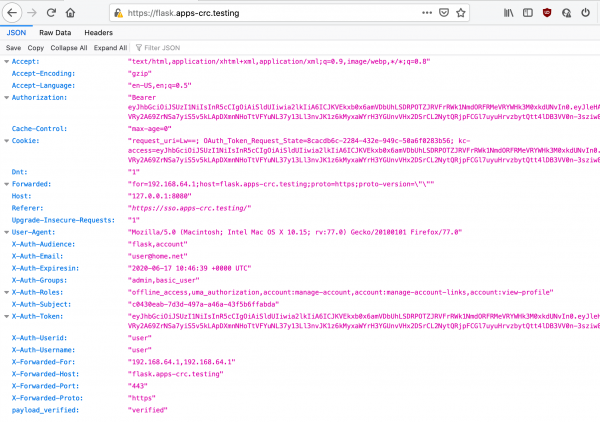

Once you have authenticated the user, you will be redirected to an application page that returns a JSON file. The file exposes the headers passed along by Louketo Proxy, as shown in Figure 11.
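The source of the example image is not shown in this article, but the behavior it demonstrates is simple to approximate. The following Python sketch is a stand-in, not the actual application: it uses a plain WSGI callable from the standard library instead of Flask, and it assumes the proxy's identity headers all begin with X-Auth-. The names app and auth_headers_from_environ are hypothetical.

```python
import json
from typing import Callable, Dict, List


def auth_headers_from_environ(environ: Dict[str, object]) -> Dict[str, str]:
    """Collect the X-Auth-* request headers from a WSGI environ."""
    # WSGI exposes the header "X-Auth-Email" as the key "HTTP_X_AUTH_EMAIL".
    prefix = "HTTP_X_AUTH_"
    return {
        "X-Auth-" + key[len(prefix):].replace("_", "-").title(): str(value)
        for key, value in environ.items()
        if key.startswith(prefix)
    }


def app(environ: Dict[str, object], start_response: Callable) -> List[bytes]:
    """WSGI application that echoes the proxy's identity headers as JSON."""
    body = json.dumps(auth_headers_from_environ(environ), indent=2).encode("utf-8")
    start_response(
        "200 OK",
        [("Content-Type", "application/json"), ("Content-Length", str(len(body)))],
    )
    return [body]
```

To try it locally, you could serve app on port 8080 with wsgiref.simple_server.make_server from the standard library and send requests that carry the headers yourself.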

Conclusion

This article introduced you to Louketo Proxy, a simple way to provide authentication for your applications without having to code your own OpenID Connect clients within your microservices. As always, I welcome your questions and feedback in the comments.

Last updated: August 25, 2020