In this article, I will introduce helpful, common tips for managing reliable builds and deployments on Red Hat OpenShift. If builds and deployments on your OpenShift cluster have suddenly slowed down, these tips can also help you troubleshoot the cause. We will start by reviewing the whole process, from build to deployment, and then cover each aspect in more detail. The examples use Red Hat OpenShift 4.2 (Kubernetes 1.14).

Processes from build to deployment

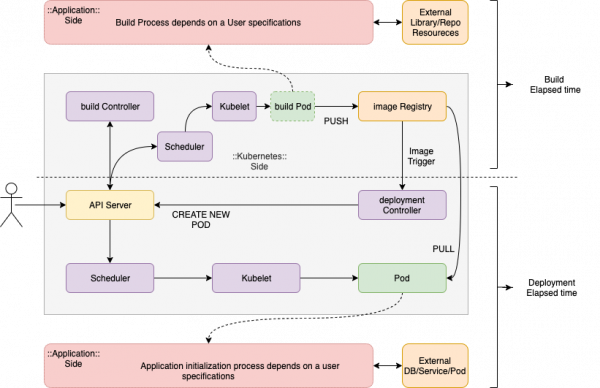

When a CI/CD pipeline or a user triggers a build for a pod deployment, the process proceeds as shown in Figure 1 (simplified for readability):

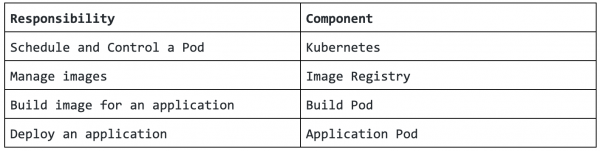

As you can see, Kubernetes does not control all of these processes. The main build and deployment steps depend on the user's application build configuration and on external systems. We can group the processes by the component responsible for managing each one, as shown in Figure 2:

Kubernetes

In this stage, Kubernetes allocates resources based on the BuildConfig and DeploymentConfig objects. If there is any trouble handling resources, such as a volume mount failure or a pod stuck in Pending because it cannot be scheduled, the build or deployment usually fails before it starts its work. You can usually find the reason for such a failure in the events (for example, with oc get events).
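On a busy cluster the event list can be long. The following Python sketch filters the JSON printed by oc get events -o json down to the Warning events that usually explain why a pod never started. The function names are hypothetical, and the set of reasons is an illustrative assumption rather than an exhaustive list.

```python
import json

# Illustrative (not exhaustive) event reasons that usually mean a build or
# deployment pod could not start; adjust this set for your cluster.
STARTUP_FAILURE_REASONS = {"FailedScheduling", "FailedMount", "FailedAttachVolume"}


def startup_failures(events: dict) -> list:
    """Return 'object: reason: message' strings for matching Warning events."""
    results = []
    for event in events.get("items", []):
        if event.get("type") != "Warning":
            continue  # Normal events are informational only
        reason = event.get("reason", "")
        if reason in STARTUP_FAILURE_REASONS:
            name = event.get("involvedObject", {}).get("name", "<unknown>")
            results.append(f"{name}: {reason}: {event.get('message', '')}")
    return results


def startup_failures_from_json(text: str) -> list:
    """Parse the text printed by `oc get events -o json` and filter it."""
    return startup_failures(json.loads(text))
```

For example, you could save the events with oc get events -o json > events.json and pass the file contents to startup_failures_from_json.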

So, if both the build and the deployment succeed but take longer than usual, the cause is probably not Kubernetes scheduling or control. To rule out resource starvation as the cause of the slowdown, make sure that the BuildConfig and DeploymentConfig specify sufficient resource requests, as in the following examples.

Here is a quick sample BuildConfig for this scenario:

```yaml
apiVersion: build.openshift.io/v1
kind: BuildConfig
metadata:
  name: sample-build
spec:
  # source, strategy, and output are omitted for brevity
  resources:
    requests:
      cpu: 500m
      memory: 256Mi
```

Here is a quick sample DeploymentConfig for this scenario:

```yaml
apiVersion: apps.openshift.io/v1
kind: DeploymentConfig
metadata:
  name: sample-deployment
spec:
  template:
    spec:
      containers:
      - image: test-image
        name: container1
        resources:
          requests:
            cpu: 100m
            memory: 200Mi
```

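In general, determine through testing how many resources your builds and deployments need, and request enough for them to run reliably. Keep in mind that a CPU request is not only a scheduling minimum: Kubernetes also translates it into the cpu.shares control group parameter, which sets the container's relative weight for CPU time when the node is busy. For instance, if you set resources.requests.cpu: 32m, the container's cpu.shares value becomes 32, as follows: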

```
# oc describe pod test-1-abcde | grep -E 'Node:|Container ID:|cpu:'
Node:          worker-0.ocp.example.local/x.x.x.x
Container ID:  cri-o://XXX... Container ID ...XXX
cpu:           32m

# oc get pod test-5-8z5lq -o yaml | grep -E '^  uid:'
  uid: YYYYYYYY-YYYY-YYYY-YYYY-YYYYYYYYYYYY

worker-0 ~# cat \
/sys/fs/cgroup/cpu,cpuacct/kubepods.slice/kubepods-burstable.slice/kubepods-burstable-podYYYYYYYY_YYYY_YYYY_YYYY_YYYYYYYYYYYY.slice/crio-XXX... Container ID ...XXX.scope/cpu.shares
32
```
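The value 32 follows from a fixed conversion. The Python sketch below approximates how the kubelet turns a CPU request into cpu.shares on cgroup v1 nodes: 1024 shares per CPU, integer division, and a floor of 2. The helper names are hypothetical, and the upper clamp reflects the cgroup v1 kernel maximum that newer kubelets apply, so treat this as an approximation rather than the exact kubelet code for OpenShift 4.2.

```python
from decimal import Decimal, ROUND_CEILING

MIN_SHARES = 2           # floor used for tiny or missing CPU requests
SHARES_PER_CPU = 1024    # one full CPU maps to 1024 shares
MILLI_CPU_TO_CPU = 1000
MAX_SHARES = 262144      # cgroup v1 maximum (assumed clamp, as in newer kubelets)


def cpu_quantity_to_millicores(quantity: str) -> int:
    """Convert a CPU quantity such as '32m', '0.5', or '2' to millicores, rounding up."""
    if quantity.endswith("m"):
        millis = Decimal(quantity[:-1])
    else:
        millis = Decimal(quantity) * MILLI_CPU_TO_CPU
    return int(millis.to_integral_value(rounding=ROUND_CEILING))


def millicores_to_cpu_shares(milli_cpu: int) -> int:
    """shares = milliCPU * 1024 / 1000 (integer division), clamped to [2, MAX_SHARES]."""
    if milli_cpu == 0:
        return MIN_SHARES
    shares = milli_cpu * SHARES_PER_CPU // MILLI_CPU_TO_CPU
    return max(MIN_SHARES, min(shares, MAX_SHARES))
```

Because cpu.shares is a relative weight, a container requesting 32m gets roughly 1/32 of the CPU time of a container requesting one full CPU when both compete on a busy node. On an idle node it can still use more.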

As of OpenShift 4.1, you can manage cluster-wide build defaults including the above resource requests through the Build resource. Refer to Build configuration resources for more details:

```yaml
apiVersion: config.openshift.io/v1
kind: Build
metadata:
  name: cluster
spec:
  buildDefaults:
    defaultProxy:
      httpProxy: http://proxy.com
      httpsProxy: https://proxy.com
      noProxy: internal.com
    env:
    - name: envkey
      value: envvalue
    resources:
      limits:
        cpu: 100m
        memory: 50Mi
      requests:
        cpu: 10m
        memory: 10Mi
  buildOverrides:
    nodeSelector:
      selectorkey: selectorvalue
    tolerations:
    # With no key, operator: Exists tolerates every taint; add key and effect to narrow it
    - operator: Exists
```

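Additionally, you should set kube-reserved and system-reserved on all nodes. These settings reserve CPU and memory for Kubernetes components and operating system daemons, which makes scheduling more reliable and minimizes node resource overcommitment. The following KubeletConfig applies only to machine config pools that carry the label custom-kubelet: small-pods, so label the target pool first (for example, oc label machineconfigpool worker custom-kubelet=small-pods). Refer to Allocating resources for nodes in an OpenShift Container Platform cluster for more details: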

```yaml
apiVersion: machineconfiguration.openshift.io/v1
kind: KubeletConfig
metadata:
  name: set-allocatable
spec:
  machineConfigPoolSelector:
    matchLabels:
      custom-kubelet: small-pods
  kubeletConfig:
    systemReserved:
      cpu: 500m
      memory: 512Mi
    kubeReserved:
      cpu: 500m
      memory: 512Mi
```
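With these settings, the capacity the scheduler can hand out to pods shrinks by the reserved amounts. The Python sketch below applies the upstream Kubernetes formula, allocatable = capacity - kube-reserved - system-reserved - hard eviction threshold, to a hypothetical node with 4 CPUs and 16 GiB of memory. The node size and function name are assumptions, and the 100Mi memory eviction threshold is the upstream kubelet default, which your cluster may override.

```python
def allocatable(capacity: dict, kube_reserved: dict, system_reserved: dict,
                eviction_hard: dict = None) -> dict:
    """Subtract reservations and the hard eviction threshold from node capacity.

    All dicts use the same units per resource: millicores for 'cpu_m',
    MiB for 'memory_mib'.
    """
    eviction_hard = eviction_hard or {}
    return {
        resource: amount
        - kube_reserved.get(resource, 0)
        - system_reserved.get(resource, 0)
        - eviction_hard.get(resource, 0)
        for resource, amount in capacity.items()
    }


# Hypothetical 4-CPU, 16 GiB worker with the KubeletConfig values above.
node = allocatable(
    capacity={"cpu_m": 4000, "memory_mib": 16384},
    kube_reserved={"cpu_m": 500, "memory_mib": 512},
    system_reserved={"cpu_m": 500, "memory_mib": 512},
    eviction_hard={"memory_mib": 100},  # upstream default memory.available<100Mi
)
```

On this hypothetical node, pods can request at most 3000m of CPU and 15260 MiB of memory in total.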

If you have more than 1,000 nodes, consider tuning percentageOfNodesToScore, which limits how many feasible nodes the scheduler looks for before it scores them and picks one. This feature is in beta as of Kubernetes 1.14 (OpenShift 4.2).
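To see what the setting changes, the Python sketch below models how many feasible nodes the scheduler searches for, following the logic described in the upstream Kubernetes scheduler performance tuning documentation: clusters under 100 nodes are always fully searched, at least 100 nodes are considered otherwise, and an unset value falls back to an adaptive percentage (50% minus 1% per 125 nodes, never below 5%). Whether your Kubernetes 1.14 build uses this adaptive default or a fixed percentage is an assumption to verify; the constant and function names are hypothetical.

```python
MIN_FEASIBLE_NODES_TO_FIND = 100   # the scheduler never stops below this many nodes
MIN_FEASIBLE_NODES_PERCENTAGE = 5  # floor for the adaptive percentage
ADAPTIVE_BASE_PERCENTAGE = 50      # adaptive starting point when the setting is unset


def num_feasible_nodes_to_find(num_all_nodes: int,
                               percentage_of_nodes_to_score: int = 0) -> int:
    """Approximate number of feasible nodes the scheduler searches before scoring."""
    if num_all_nodes < MIN_FEASIBLE_NODES_TO_FIND or percentage_of_nodes_to_score >= 100:
        return num_all_nodes
    percentage = percentage_of_nodes_to_score
    if percentage <= 0:
        # Adaptive default: shrink by 1% for every 125 nodes, floored at 5%.
        percentage = max(ADAPTIVE_BASE_PERCENTAGE - num_all_nodes // 125,
                         MIN_FEASIBLE_NODES_PERCENTAGE)
    return max(num_all_nodes * percentage // 100, MIN_FEASIBLE_NODES_TO_FIND)
```

For example, a 1,000-node cluster with the setting unset would search 420 nodes under this model, while percentageOfNodesToScore: 30 would lower that to 300.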

Image registry

This component handles image pushes and pulls for both builds and deployments. First, provision storage and network resources that can handle the I/O and traffic generated by the maximum number of concurrent image pulls and pushes you expect. Object storage is usually recommended because it is atomic: data is either written completely or not at all, even if a failure occurs during the write. Object storage can also be shared by multiple registry replicas, much like a volume in ReadWriteMany (RWX) mode. Further information can be found in the Recommended configurable storage technology documentation.

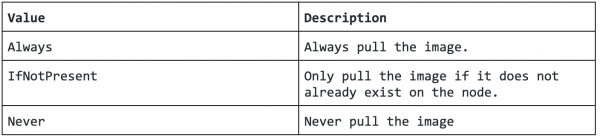
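A rough way to size registry throughput is to multiply the number of concurrent transfers by the average amount of image data each one moves, then divide by how long you are willing to wait. The Python sketch below does that arithmetic. The function name and all input numbers are hypothetical, and real transfers are usually smaller than full image sizes because layers that already exist are skipped.

```python
def required_throughput_mb_s(concurrent_pulls: int, concurrent_pushes: int,
                             avg_transfer_mb: float, target_seconds: float) -> float:
    """Aggregate registry throughput (MB/s) needed for all transfers to finish in time."""
    if target_seconds <= 0:
        raise ValueError("target_seconds must be positive")
    total_mb = (concurrent_pulls + concurrent_pushes) * avg_transfer_mb
    return total_mb / target_seconds


# Hypothetical peak: 30 pulls and 10 pushes of ~150 MB each, finished within 60 seconds.
peak = required_throughput_mb_s(30, 10, 150, 60)
```

Here the registry storage and network path would need to sustain about 100 MB/s at peak.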

You can also set imagePullPolicy: IfNotPresent on your containers so that nodes reuse images they have already pulled, which reduces the pull workload on the registry.
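If you omit imagePullPolicy, Kubernetes defaults it to Always for images tagged :latest or without a tag, and to IfNotPresent otherwise. The sample DeploymentConfig above (image: test-image, no tag) would therefore pull on every container start. The simplified Python model below illustrates that defaulting and which policies contact the registry. It ignores image-name normalization, and the function names are hypothetical.

```python
def default_pull_policy(image: str) -> str:
    """Approximate Kubernetes defaulting for an omitted imagePullPolicy."""
    name, _, digest = image.partition("@")
    last_segment = name.rsplit("/", 1)[-1]  # a registry port ("host:5000/") is not a tag
    if ":" in last_segment:
        tag = last_segment.split(":", 1)[1]
    elif digest:
        tag = ""          # pinned by digest only: no implicit tag
    else:
        tag = "latest"    # no tag and no digest means ':latest'
    return "Always" if tag == "latest" else "IfNotPresent"


def contacts_registry(policy: str, image_cached_on_node: bool) -> bool:
    """Whether the kubelet asks the runtime to pull when the container starts."""
    if policy == "Always":
        return True  # cached layers may still be reused by the runtime
    if policy == "IfNotPresent":
        return not image_cached_on_node
    if policy == "Never":
        return False
    raise ValueError(f"unknown imagePullPolicy: {policy}")
```

Pinning a specific tag or digest, or setting IfNotPresent explicitly, keeps restarts and scale-outs on nodes that already have the image from going back to the registry.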

Build pod

Once a build pod is created and starts building an application, control passes to the build pod, which does its work according to the parameters defined in the BuildConfig. How you create your application and how you provide the source content to build both affect build performance.

For instance, if you build a Java or Node.js application using Maven or npm, you might download many libraries from their respective external repositories. If those repositories, or the network path to them, have performance issues at that time, the build can fail or take longer than usual. In other words, a build that depends on an external service or resource can suffer regardless of the state of your own systems. So, consider creating a local repository (Maven, npm, Git, and so on) to keep build performance reliable and stable. Alternatively, you can reuse previously downloaded dependencies and previously built artifacts with Source-to-Image (S2I) incremental builds, provided your image registry performs well enough to pull the previously built image for every incremental build:

```yaml
strategy:
  sourceStrategy:
    from:
      kind: "ImageStreamTag"
      name: "incremental-image:latest"
    incremental: true
```

With a Dockerfile build, you can structure the image layers to make better use of the layer cache and reduce layer pull and push times. In the following example, splitting the content into layers according to how often each part changes reduced the size of each update, because unchanged layers are cached, as shown in Figure 4:

Let's look at an example. This image has only a few layers, and its 35.7 MB layer is replaced on every build, so the whole layer has to be pushed again:

```
# podman history 31952aa275b8
ID            CREATED         CREATED BY                                     SIZE     COMMENT
31952aa275b8  52 seconds ago  /bin/sh -c #(nop) ENTRYPOINT ["tail","-f",...  0B
<missing>     57 seconds ago  /bin/sh -c #(nop) COPY resources /resources    35.7MB
<missing>     5 weeks ago                                                    20.48kB
<missing>     5 weeks ago
# podman push docker-registry.default.svc:5000/openshift/test:latest
Getting image source signatures
Copying blob 3698255bccdb done
Copying blob a066f3d73913 skipped: already exists
Copying blob 26b543be03e2 skipped: already exists
Copying config 31952aa275 done
Writing manifest to image destination
Copying config 31952aa275 done
Writing manifest to image destination
Storing signatures
```

The following image has more layers than the one above, but only its 5.245 MB layer changes between builds. The other, unchanged layers come from the build cache and are skipped on push:

```
# buildah bud --layers .
STEP 1: FROM registry.redhat.io/ubi8/ubi-minimal:latest
STEP 2: COPY resources/base /resources/base
--> Using cache da63ee05ff89cbec02e8a6ac89c287f550337121b8b401752e98c53b22e4fea7
STEP 3: COPY resources/extra /resources/extra
--> Using cache 9abc7eee3e705e4999a7f2ffed09d388798d21d1584a5f025153174f1fa161b3
STEP 4: COPY resources/user /resources/user
b9cef39450b5e373bd4da14f446b6522e0b46f2aabac2756ae9ce726d240e011
STEP 5: ENTRYPOINT ["tail","-f","/dev/null"]
STEP 6: COMMIT
72cc8f59b6cd546d8fb5c3c5d82321b6d14bf66b91367bc5ca403eb33cfcdb15
# podman tag 72cc8f59b6cd docker-registry.default.svc:5000/openshift/test:latest
# podman history 72cc8f59b6cd
ID            CREATED             CREATED BY                                     SIZE     COMMENT
72cc8f59b6cd  About a minute ago  /bin/sh -c #(nop) ENTRYPOINT ["tail","-f",...  0B
<missing>     About a minute ago  /bin/sh -c #(nop) COPY resources/user /res...  5.245MB
<missing>     2 minutes ago       /bin/sh -c #(nop) COPY resources/extra /re...  20.07MB
<missing>     2 minutes ago       /bin/sh -c #(nop) COPY resources/base /res...  10.49MB
<missing>     5 weeks ago                                                        20.48kB
<missing>     5 weeks ago                                                        107.1MB  Imported from -
# podman push docker-registry.default.svc:5000/openshift/test:latest
Getting image source signatures
Copying blob aa6eb6fda701 done
Copying blob 26b543be03e2 skipped: already exists
Copying blob a066f3d73913 skipped: already exists
Copying blob 822ae69b69df skipped: already exists
Copying blob 7c5c2aefa536 skipped: already exists
Copying config 72cc8f59b6 done
Writing manifest to image destination
Copying config 72cc8f59b6 done
Writing manifest to image destination
Storing signatures
```
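The push sizes in the two outputs above can be reproduced with a simplified model: a build step's layer is reused only while that step and every step before it are unchanged, because a changed step invalidates the cache for all later steps. The Python sketch below applies that rule, using the layer sizes from the podman history output (the base image is collapsed into one 107.1 MB step). The step keys, such as "COPY user@v2", are hypothetical labels for "this step's input changed".

```python
def mb_to_push(previous: list, current: list) -> float:
    """Estimate MB uploaded when pushing `current` after `previous` is in the registry.

    Each list holds (step_key, size_mb) tuples in Dockerfile order. Simplified model:
    once a step differs, it and every later step produce new layers that must be pushed.
    """
    pushed = 0.0
    cache_still_valid = True
    for index, (step_key, size_mb) in enumerate(current):
        unchanged = index < len(previous) and previous[index][0] == step_key
        if cache_still_valid and unchanged:
            continue  # cached layer: the registry already has this blob
        cache_still_valid = False
        pushed += size_mb
    return pushed


# Single COPY layer: any change to resources/ re-pushes all 35.7 MB.
mono_v1 = [("FROM ubi-minimal", 107.1), ("COPY resources@v1", 35.7), ("ENTRYPOINT", 0.0)]
mono_v2 = [("FROM ubi-minimal", 107.1), ("COPY resources@v2", 35.7), ("ENTRYPOINT", 0.0)]

# Split by change frequency: base and extra rarely change, user changes often.
split_v1 = [("FROM ubi-minimal", 107.1), ("COPY base@v1", 10.49), ("COPY extra@v1", 20.07),
            ("COPY user@v1", 5.245), ("ENTRYPOINT", 0.0)]
split_v2 = split_v1[:3] + [("COPY user@v2", 5.245), ("ENTRYPOINT", 0.0)]
```

The model also shows why order matters: if the rarely changed base content were copied last, every update to the user content would invalidate it and push it again.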

When troubleshooting the build process, check your build logs (for example, with oc logs -f bc/sample-build for the sample BuildConfig above), because Kubernetes can only detect whether a build pod completed successfully.

Application pod

As with the build pod, control passes to the application pod once it is created. From then on, startup time depends mostly on the application's own implementation, especially when the application relies on external services and resources to initialize, such as database connection pools, key-value stores (KVS), and other API connections. Also check whether security software is running on your hosts, because it can affect every process on the host, not only deployments.

For instance, if the database server has performance issues or reaches its maximum connection count while the application pod is starting and filling its connection pool, the pod's initialization can take longer than expected. So, if your application has external dependencies, also check that they are healthy. And if readiness and liveness probes are configured for your application pod, set initialDelaySeconds and periodSeconds large enough for the pod to finish initializing. If they are too short, the liveness probe can fail before the application is ready, and the container will be restarted repeatedly, which can delay or break the deployment (you can find more on this issue in Monitoring container health):

```yaml
apiVersion: v1
kind: Pod
metadata:
  labels:
    test: liveness
  name: liveness-http
spec:
  containers:
  - name: liveness-http
    image: k8s.gcr.io/liveness
    args:
    - /server
    livenessProbe:
      httpGet:
        # host: my-host
        # scheme: HTTPS
        path: /healthz
        port: 8080
        httpHeaders:
        - name: X-Custom-Header
          value: Awesome
      initialDelaySeconds: 15
      periodSeconds: 10   # the default; raise it with initialDelaySeconds for slow starters
      timeoutSeconds: 1
```
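To check whether probe settings leave enough time, estimate when the kubelet could first restart the container: the liveness probe must fail failureThreshold times in a row, and the first probe runs no earlier than initialDelaySeconds. The Python sketch below computes that conservative lower bound using the Kubernetes defaults periodSeconds: 10 and failureThreshold: 3. It is approximate because it ignores probe jitter and timeouts, and the function names and startup times are hypothetical.

```python
DEFAULT_PERIOD_SECONDS = 10     # Kubernetes default for periodSeconds
DEFAULT_FAILURE_THRESHOLD = 3   # Kubernetes default for failureThreshold


def earliest_liveness_restart(initial_delay_seconds: int,
                              period_seconds: int = DEFAULT_PERIOD_SECONDS,
                              failure_threshold: int = DEFAULT_FAILURE_THRESHOLD) -> int:
    """Earliest time (seconds after container start) at which the last of
    failure_threshold consecutive failed liveness probes can occur."""
    return initial_delay_seconds + (failure_threshold - 1) * period_seconds


def probe_settings_fit(startup_seconds: int, initial_delay_seconds: int,
                       period_seconds: int = DEFAULT_PERIOD_SECONDS,
                       failure_threshold: int = DEFAULT_FAILURE_THRESHOLD) -> bool:
    """True if an app needing startup_seconds to become healthy should not be
    restarted by its liveness probe."""
    return startup_seconds < earliest_liveness_restart(
        initial_delay_seconds, period_seconds, failure_threshold)
```

With the sample's initialDelaySeconds: 15, a restart can happen about 35 seconds after start, so an application that needs 60 seconds to connect to its database would need a longer initial delay or period.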

Build and deployment in parallel

Lastly, I recommend limiting the number of builds and deployments that run concurrently in one cycle to avoid resource contention. As shown above, these tasks are chained to other tasks and iterate automatically through their life cycles, so they can generate more workload than you expect. Compiling (build) and application initialization (deployment) are usually CPU-intensive, so evaluate how many concurrent builds and deployments your cluster can handle while staying stable before scheduling new ones.
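A first estimate of that number can come from CPU requests alone. The Python sketch below counts how many pods with a given CPU request fit on each node's allocatable CPU after subtracting what running pods already request and an optional headroom percentage; pods cannot span nodes, so it rounds down per node. The function name, node figures, and headroom are hypothetical, and a real estimate should also consider memory and actual CPU usage.

```python
def max_concurrent_pods(nodes: list, request_millicores: int,
                        headroom_percent: int = 0) -> int:
    """Count pods of `request_millicores` that fit across `nodes`.

    nodes: (allocatable_millicores, already_requested_millicores) per schedulable node.
    headroom_percent: share of allocatable CPU deliberately kept free.
    """
    if request_millicores <= 0:
        raise ValueError("request_millicores must be positive")
    total = 0
    for allocatable_m, requested_m in nodes:
        usable = allocatable_m * (100 - headroom_percent) // 100 - requested_m
        if usable > 0:
            total += usable // request_millicores  # whole pods only, per node
    return total


# Hypothetical: three workers with 3000m allocatable, builds requesting 500m each.
idle_cluster = max_concurrent_pods([(3000, 0)] * 3, 500)
busy_cluster = max_concurrent_pods([(3000, 1000), (3000, 0)], 500, headroom_percent=20)
```

Under these assumptions, three idle workers fit 18 such builds, while two workers with 20% headroom and 1000m already requested on one of them fit only 6.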

Conclusion

We looked at how the components involved in the build and deployment processes affect each other. I focused only on configuration and information related to build and deployment performance, in the hope that it helps you understand how the build and deployment phases of applications interact on OpenShift and keep your systems stable. Thank you for reading.

References

Two additional references you might find useful are OpenShift Container Platform 4.2 Documentation - Understanding image builds and Scheduler Performance Tuning.

Last updated: June 12, 2023