Case management applications are designed to handle a complex combination of human and automated tasks. All case updates and case data are captured in a case file, which is the pivot around which the case is managed and which also serves as a system of record for future audits and tracking. The key characteristic of these workflows is that they are ad hoc: there is no single path to resolution, and one size rarely fits all.

Case management does not have structured time bounds, and cases typically don't all resolve at the same time. Consider client onboarding, dispute resolution, and fraud investigations: each, by its nature, calls for a solution customized to the specific case. Modern technological frameworks and practices such as microservices and event-driven processing open up the potential of case management solutions even further. This article describes how you can use case management for dynamic workflow processing in this modern era, with components such as Red Hat OpenShift, Red Hat AMQ Streams, Red Hat Fuse, and Red Hat Process Automation Manager.

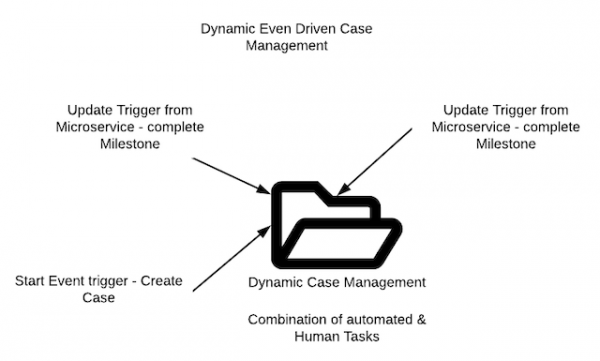

Dynamic event-driven case management

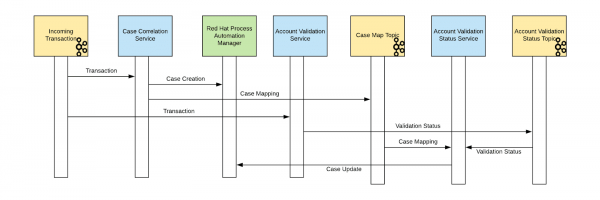

This approach keeps the case management ethos intact: the case still orchestrates both automated and human tasks. As Figure 1 shows, however, the case management layer does not invoke services directly; the direction of communication is reversed. Each service helper performing a specific task on the dynamic case communicates with the case over an event stream.

This approach has several advantages:

- The case doesn't need to wait for these asynchronous tasks to be completed; instead, it just waits for a signal.

- Since there is no direct coupling, failures can be handled more gracefully.

- Case management not only orchestrates the various microservices performing tasks for the case but also provides for human intervention on the case and the ability to create ad hoc tasks and case data on the fly.
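To make the inversion concrete, here is a minimal in-memory sketch of the case side. `DynamicCase` and its methods are hypothetical names, not Red Hat Process Automation Manager APIs: the case never calls a service, it only records the completion signals delivered to it, and it ignores duplicates so that events redelivered by the stream are harmless.

```python
from dataclasses import dataclass, field


@dataclass
class DynamicCase:
    """Hypothetical in-memory stand-in for a case instance (not a PAM API).

    The case never calls a validation service; it only records the
    completion signals that are delivered to it.
    """

    case_id: str
    pending: set[str]
    completed: dict[str, str] = field(default_factory=dict)

    def __post_init__(self) -> None:
        # Copy so the caller's set is not mutated as signals arrive.
        self.pending = set(self.pending)

    def signal(self, milestone: str, status: str) -> bool:
        """Record a completion signal; return False if it was ignored."""
        if milestone not in self.pending:
            # Unknown or duplicate (redelivered) signal: ignore it so that
            # at-least-once delivery on the stream cannot corrupt the case.
            return False
        self.pending.discard(milestone)
        self.completed[milestone] = status
        return True

    @property
    def waiting(self) -> bool:
        # "Waiting" is just state; no thread blocks on a remote call.
        return bool(self.pending)


case = DynamicCase("CASE-1", {"AccountValidation", "FraudValidation"})
case.signal("FraudValidation", "PASSED")
case.signal("FraudValidation", "PASSED")    # redelivered event, ignored
print(case.waiting)                          # True
case.signal("AccountValidation", "FAILED")
print(case.waiting)                          # False
```

Treating `waiting` as plain state rather than a blocking call is what lets the case sit idle until every helper reports back.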

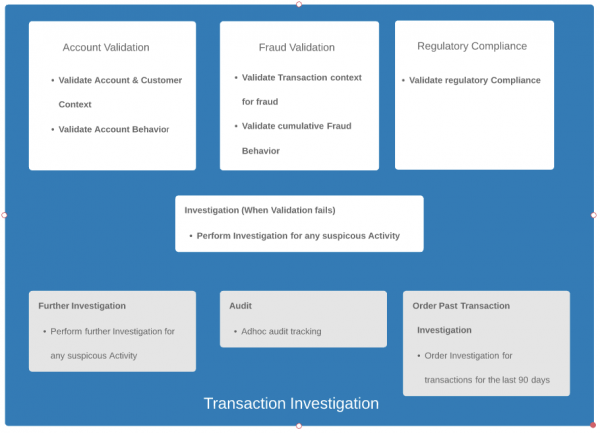

Transaction investigation overview

Let's consider a transaction investigation use case. The Account Validation, Fraud Validation, and Regulatory Compliance steps are mandatory and are invoked for every case, in no specific order. An investigation is ordered if any validation fails. Other steps could also be involved in the process, such as audits or a secondary-level investigation of historical data, as shown in Figure 2:

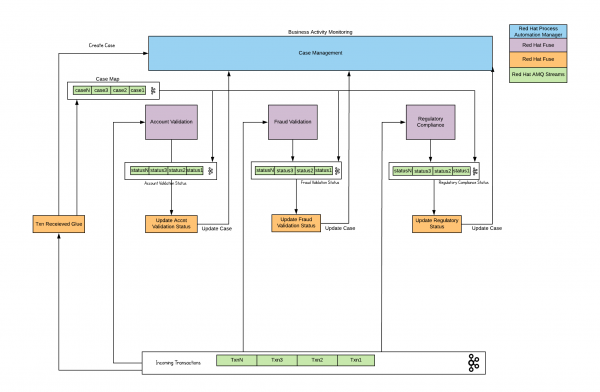
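The rule for ordering an investigation can be expressed as a small, order-independent function. This is a sketch under one stated assumption: the investigation is ordered as soon as any mandatory check fails, rather than after all three have reported. The check names mirror the use case; `evaluate` and `Outcome` are hypothetical.

```python
from enum import Enum

# The three mandatory checks named in the use case.
MANDATORY_CHECKS = ("AccountValidation", "FraudValidation", "RegulatoryCompliance")


class Outcome(Enum):
    PENDING = "pending"          # some checks have not reported yet
    CLEARED = "cleared"          # every mandatory check passed
    INVESTIGATE = "investigate"  # at least one check failed


def evaluate(results: dict[str, bool]) -> Outcome:
    """Decide the case outcome from the check results received so far.

    `results` maps a check name to True (passed) or False (failed); a missing
    key means the check has not reported yet. Only the set of results
    matters, so the order in which checks complete is irrelevant.
    """
    # Assumption: an investigation is ordered as soon as any check fails,
    # without waiting for the remaining checks.
    if any(results.get(check) is False for check in MANDATORY_CHECKS):
        return Outcome.INVESTIGATE
    if all(results.get(check) is True for check in MANDATORY_CHECKS):
        return Outcome.CLEARED
    return Outcome.PENDING


print(evaluate({"FraudValidation": True}))
print(evaluate({"RegulatoryCompliance": True, "AccountValidation": False}))
```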

Dynamic case management architecture overview

Events are continuously created and pushed onto an event stream. The mandatory steps of the case evaluation (Account Validation, Fraud Validation, and Regulatory Compliance) are modeled as microservices that pick up new transactions as they appear on the stream. Once a microservice completes its evaluation, the status is updated in the case management layer through a case correlation service, as shown in Figure 3.
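A validation microservice in this design is a pure stream transformer: transaction in, status out. The sketch below models the topics as plain Python iterables so that it runs without a broker; in a real deployment the loop would be driven by a Kafka consumer and the results sent with a Kafka producer. The function name, the JSON field names (`id`, `amount`, `transactionId`, `check`, `passed`), and the amount-limit rule are all hypothetical.

```python
import json
from typing import Callable, Iterable, Iterator


def validation_service(
    check_name: str,
    rule: Callable[[dict], bool],
    incoming: Iterable[str],
) -> Iterator[str]:
    """Hypothetical validation microservice.

    Consumes JSON transactions from the Incoming Transaction topic (modeled
    here as any iterable of strings) and yields JSON status events destined
    for the Status topic. It knows nothing about cases or case IDs.
    """
    for raw in incoming:
        txn = json.loads(raw)
        yield json.dumps({
            "transactionId": txn["id"],
            "check": check_name,
            "passed": bool(rule(txn)),
        })


# Hypothetical rule: flag transactions above an illustrative amount limit.
def under_limit(txn: dict) -> bool:
    return txn["amount"] <= 10_000


incoming = [
    json.dumps({"id": "T1", "amount": 250}),
    json.dumps({"id": "T2", "amount": 50_000}),
]
for event in validation_service("FraudValidation", under_limit, incoming):
    print(event)
```

Because the service emits only a transaction ID and a result, it can be scaled or replaced without touching the case definition.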

Let us now look at each of these pieces in a little more detail.

Red Hat OpenShift

Red Hat OpenShift enables efficient container orchestration with rapid container provisioning, deployment, scaling, auto-healing, and management.

Red Hat AMQ Streams

AMQ Streams provides distributed messaging capabilities that can meet demanding scale and performance requirements. It takes an innovative approach by integrating the features of Apache Kafka into Red Hat OpenShift Container Platform, the market-leading comprehensive enterprise Kubernetes platform.

Red Hat Fuse

Red Hat Fuse enables developers to take an Agile Integration approach when developing solutions. It supports a distributed integration model in which integrations are built and composed as microservices. With over 200 out-of-the-box connectors, it makes it easy to create APIs and integration solutions rapidly.

Red Hat Process Automation Manager

Red Hat Process Automation Manager (formerly Red Hat JBoss BPM Suite) is a platform for developing containerized microservices and applications that automate business decisions and processes. It includes a comprehensive case management platform that can orchestrate both static and dynamic tasks.

Dynamic case management in action

If you look closely, you will see that none of the systems communicate directly with each other. The stream is the communication channel between every entity in the system. You might also notice that we separated the validation logic from the status update logic; each runs as an individual microservice.

This overall architecture provides a number of advantages:

- Loose coupling means more resilience. Kafka provides fault tolerance, so an individual failure does not bring the whole system down.

- Separating microservice concerns means that each individual unit can scale with its own load.

- Clean separation of topic data means that more than one consumer can work on the same data simultaneously (for example, a reporting service).

- Bringing case management into the architecture makes it possible to work on the case dynamically.

- Business activity monitoring provides insights into the orchestration of these disparate microservices and allows for key performance indicator (KPI) metrics reporting and tracking.
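The points about several consumers reading the same topic data, and about individual failures not bringing the system down, follow from how Kafka tracks progress: each consumer group keeps its own committed offset into the log. The toy `InMemoryTopic` below is a hypothetical class, not a Kafka client, and it ignores partitions; it only illustrates those two properties.

```python
from collections import defaultdict


class InMemoryTopic:
    """Toy model of a Kafka-style topic: an append-only log plus one
    committed offset per consumer group. Partitions are ignored."""

    def __init__(self) -> None:
        self._log: list[str] = []
        self._offsets: dict[str, int] = defaultdict(int)

    def publish(self, event: str) -> None:
        self._log.append(event)

    def poll(self, group: str, max_records: int = 10) -> list[str]:
        # Each group reads from its own position; groups never interfere.
        start = self._offsets[group]
        return self._log[start:start + max_records]

    def commit(self, group: str, count: int) -> None:
        # Advance the offset only after processing. A consumer that crashes
        # before committing re-reads the same records when it restarts.
        self._offsets[group] += count


topic = InMemoryTopic()
for txn_id in ("T1", "T2", "T3"):
    topic.publish(txn_id)

batch = topic.poll("fraud-validation")
topic.commit("fraud-validation", len(batch))   # validation group is caught up
print(topic.poll("fraud-validation"))          # []
print(topic.poll("reporting", max_records=2))  # ['T1', 'T2']
```

Committing only after processing gives at-least-once delivery, which is why the case side should tolerate duplicate signals.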

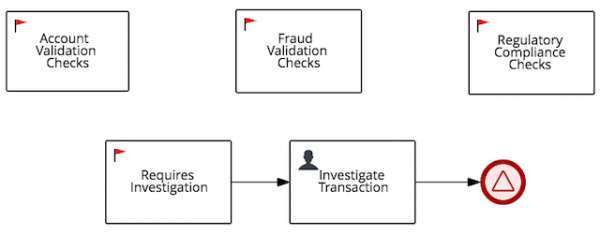

Transaction investigation case: Milestones to the rescue

The mandatory steps for the case, including the Account Validation, Fraud Validation, and Regulatory Compliance checks (as shown in Figure 4), are represented as milestones on the case. A milestone is a special kind of service task that you configure in the case definition designer by adding a milestone node from the process designer palette. Case management milestones generally occur at the end of a stage, but they can also result from achieving other milestones. In this example, we use this functionality to signal task completion by an external microservice.
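Condition-driven milestones can be modeled as predicates that are re-evaluated whenever the case file changes. The following is a simplified sketch of that idea, not the Process Automation Manager engine; `CaseInstance`, `Milestone`, `put_data`, and the case file keys are hypothetical. The third milestone shows a milestone that results from achieving other milestones.

```python
from dataclasses import dataclass, field
from typing import Any, Callable

# A condition sees the case file and the names of milestones already achieved.
Condition = Callable[[dict[str, Any], set[str]], bool]


@dataclass
class Milestone:
    name: str
    condition: Condition


@dataclass
class CaseInstance:
    """Simplified model of condition-driven milestones (not the PAM engine)."""

    milestones: list[Milestone]
    case_file: dict[str, Any] = field(default_factory=dict)
    achieved: set[str] = field(default_factory=set)

    def put_data(self, key: str, value: Any) -> list[str]:
        """Update the case file and return milestones achieved as a result."""
        self.case_file[key] = value
        newly: list[str] = []
        changed = True
        # Re-evaluate until nothing changes, so a milestone that depends on
        # other milestones can fire in the same update.
        while changed:
            changed = False
            for m in self.milestones:
                if m.name not in self.achieved and m.condition(self.case_file, self.achieved):
                    self.achieved.add(m.name)
                    newly.append(m.name)
                    changed = True
        return newly


checks = ("Account Validation", "Fraud Validation")
case = CaseInstance([
    Milestone("Account Validation", lambda data, done: "accountStatus" in data),
    Milestone("Fraud Validation", lambda data, done: "fraudStatus" in data),
    Milestone("Checks Complete", lambda data, done: all(c in done for c in checks)),
])
print(case.put_data("fraudStatus", "PASSED"))    # ['Fraud Validation']
print(case.put_data("accountStatus", "PASSED"))  # ['Account Validation', 'Checks Complete']
```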

Let's look at the Account Validation check as an example. Figure 5 shows a typical sequence of steps.

These steps break down as follows:

- A case correlation service reads the incoming transaction from the Incoming Transaction topic, creates a case with the transaction payload, and pushes a record to a Case Map topic (a map of the incoming transaction to its case ID).

- The validation microservice reads the transaction from the Incoming Transaction topic and pushes the result of its evaluation to a Status topic.

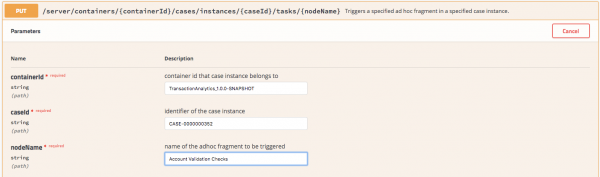

- The correlation microservice reads from the Status topic and the Case Map topic and updates the milestone for the corresponding case over the REST API.
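The correlation step joins the two topics: the Case Map gives the case ID for a transaction, and the Status topic gives the result to apply to that case. In the sketch below, the `case_map` dictionary plays the role of a locally materialized Case Map topic, and `start_case` and `update_milestone` are injected callables standing in for the KIE Server REST calls. All of these names, and the JSON field names, are hypothetical.

```python
import json
from typing import Callable


class CorrelationService:
    """Hypothetical correlation microservice joining Case Map and Status data."""

    def __init__(
        self,
        start_case: Callable[[dict], str],
        update_milestone: Callable[[str, str, bool], None],
    ) -> None:
        # Stand-ins for REST calls to the case management layer.
        self._start_case = start_case
        self._update_milestone = update_milestone
        # transaction ID -> case ID; a locally materialized Case Map topic.
        self.case_map: dict[str, str] = {}

    def on_transaction(self, raw: str) -> str:
        """Handle a record from the Incoming Transaction topic."""
        txn = json.loads(raw)
        case_id = self._start_case(txn)
        self.case_map[txn["id"]] = case_id
        return case_id

    def on_status(self, raw: str) -> bool:
        """Handle a record from the Status topic; False if not yet correlatable."""
        status = json.loads(raw)
        case_id = self.case_map.get(status["transactionId"])
        if case_id is None:
            return False
        self._update_milestone(case_id, status["check"], status["passed"])
        return True


updates = []
svc = CorrelationService(
    start_case=lambda txn: "CASE-" + txn["id"],
    update_milestone=lambda case_id, check, passed: updates.append((case_id, check, passed)),
)
svc.on_transaction(json.dumps({"id": "T1", "amount": 250}))
svc.on_status(json.dumps({"transactionId": "T1", "check": "AccountValidation", "passed": True}))
print(updates)  # [('CASE-T1', 'AccountValidation', True)]
```

Returning `False` when the mapping is not yet known makes the ordering race between the two topics explicit; a production service would buffer or retry such status events rather than drop them.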

This sequence is repeated for all three validation checks. Case milestones can also be triggered by a matching condition. The investigation, as we defined earlier, is triggered when any one of the validation checks fails; the process then assigns it to an investigator for review.

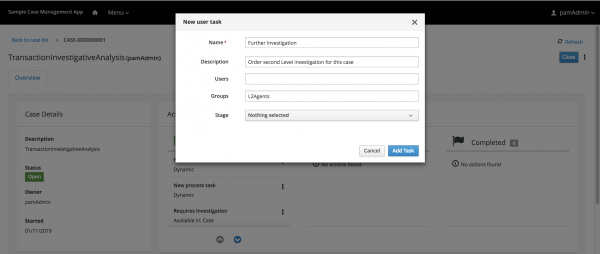

Notice that we haven't defined the Audit, Order Past Transaction, and Further Investigation milestones as part of the regular case. That is because we can add these, and any other tasks or variables, to the case on the fly as needed. Red Hat Process Automation Manager provides the APIs that make this possible.

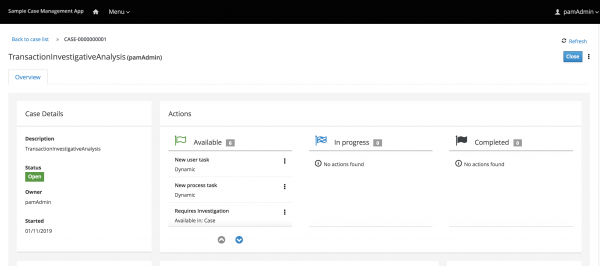

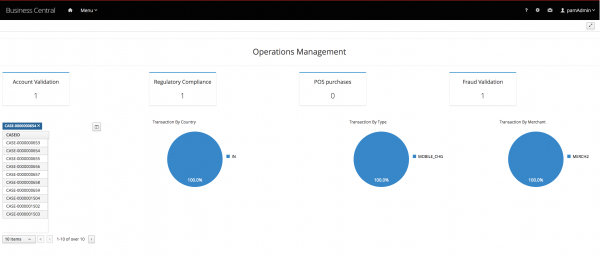

Figure 7 shows a sample case management application.

As described earlier, the application supports both the static set of milestones that must be completed for the case and the ad hoc tasks that can be spun up on demand, as shown in Figure 8:

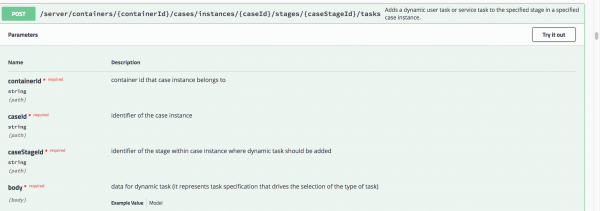

The case management Showcase uses REST API methods exposed by the KIE Server to add these dynamic tasks, as shown in Figure 9.
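For the REST side, the following builds (but does not send) an HTTP request that adds a dynamic task to a running case, using only the Python standard library. Treat the URL path and the JSON body fields as assumptions to check against the KIE Server case REST API documentation for your Process Automation Manager version; the function name, container ID, case ID, and credentials are hypothetical.

```python
import base64
import json
import urllib.parse
import urllib.request


def build_add_dynamic_task_request(
    base_url: str,
    container_id: str,
    case_id: str,
    task_name: str,
    actors: list[str],
    data: dict,
    user: str,
    password: str,
) -> urllib.request.Request:
    """Build a POST request that adds a dynamic task to a case instance."""
    # ASSUMPTION: path shape of the KIE Server case REST API; verify it
    # against the documentation for your product version.
    path = "/containers/{}/cases/instances/{}/tasks".format(
        urllib.parse.quote(container_id, safe=""),
        urllib.parse.quote(case_id, safe=""),
    )
    # ASSUMPTION: body field names for the dynamic task definition.
    body = json.dumps({"name": task_name, "actors": actors, "data": data}).encode("utf-8")
    token = base64.b64encode(f"{user}:{password}".encode("utf-8")).decode("ascii")
    return urllib.request.Request(
        base_url.rstrip("/") + path,
        data=body,
        method="POST",
        headers={
            "Content-Type": "application/json",
            "Accept": "application/json",
            "Authorization": f"Basic {token}",
        },
    )


req = build_add_dynamic_task_request(
    "http://localhost:8080/kie-server/services/rest/server",
    "txn-investigation", "CASE-0000000001",
    "Audit", ["investigator"], {"reason": "manual review"},
    "user", "pass",
)
print(req.get_method(), req.full_url)
```

Passing the returned request to `urllib.request.urlopen` would send it; basic authentication is shown only for brevity.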

Business activity monitoring

A critical piece of a complex system of this nature is the ability to gain insight into the status of these disparate microservices and human tasks. Monitoring KPI metrics and responding to problem trends is an integral part of a case management operations manager's job. Red Hat Process Automation Manager provides a dashboard view of the running instances and lets you manage and track SLAs.

A combination of process- and task-level SLA metrics plus a case-related breakdown can help you identify trends and reorganize the workforce as necessary. Figure 10 shows a typical example of such a dashboard.

This dashboard provides high-level insight into the case data metrics. We can also create drill-down reports that focus on a specific instance for traceability, as shown in Figure 11.
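The kind of breakdown such a dashboard shows can be approximated from closed-case records. The sketch below computes, per case type, the number of cases, the average duration in hours, and the percentage of cases that breached an SLA. `ClosedCase`, `sla_report`, the 24-hour SLA, and the sample durations are invented for illustration and are not taken from the product.

```python
from dataclasses import dataclass
from datetime import timedelta
from statistics import mean
from typing import Iterable


@dataclass(frozen=True)
class ClosedCase:
    case_type: str        # hypothetical grouping key, e.g. the failing check
    duration: timedelta   # time from case start to case close


def sla_report(cases: Iterable[ClosedCase], sla: timedelta) -> dict[str, dict[str, float]]:
    """Per case type: count, average duration in hours, and % of SLA breaches."""
    by_type: dict[str, list[timedelta]] = {}
    for c in cases:
        by_type.setdefault(c.case_type, []).append(c.duration)
    report: dict[str, dict[str, float]] = {}
    for case_type, durations in sorted(by_type.items()):
        breaches = sum(1 for d in durations if d > sla)
        report[case_type] = {
            "count": len(durations),
            "avg_hours": round(mean(d.total_seconds() / 3600 for d in durations), 2),
            "breach_pct": round(100 * breaches / len(durations), 1),
        }
    return report


# Invented sample data for illustration only.
sample = [
    ClosedCase("FraudValidation", timedelta(hours=2)),
    ClosedCase("FraudValidation", timedelta(hours=30)),
    ClosedCase("AccountValidation", timedelta(hours=1)),
]
print(sla_report(sample, sla=timedelta(hours=24)))
```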

Summary

By combining the power of dynamic case management, distributed enterprise integration, and event streaming, we can create a completely event-sourced system that is cloud-native, resilient, scalable, and adaptive. Red Hat Process Automation Manager and Red Hat Integration work together to make this possible.