ASP.NET Core is the web framework for .NET Core. Performance is a key feature. The stack is heavily optimized and continuously benchmarked. Kestrel is the name of the HTTP server. In this blog post, we'll replace Kestrel's networking layer with a Linux-specific implementation and benchmark it against the default out-of-the-box implementations. The TechEmpower web framework benchmarks are used to compare the network layer performance.

Transport abstraction

Kestrel supports replacing the network implementation thanks to the Transport abstraction. ASP.NET Core 1.x uses libuv for its network implementation. libuv is the asynchronous I/O library that underpins Node.js. The use of libuv predates .NET Core, when cross-platform ASP.NET was called ASP.NET 5. The scope then broadened to the cross-platform .NET implementation that we now know as .NET Core. As part of .NET Core, a network implementation became available (using the Socket class). ASP.NET Core 2.0 introduced the Transport abstraction in Kestrel to make it possible to switch from libuv to a Socket-based implementation. For version 2.1, many optimizations were made to the Socket implementation, and the Sockets transport has become the default in Kestrel.

The Transport abstraction allows other network implementations to be plugged in. For example, you could leverage the Windows RIO socket API or user-space network stacks. In this blog post, we'll look at a Linux-specific transport. The implementation can be used as a direct replacement for the libuv/Sockets transport. It doesn't need privileged capabilities and it works in constrained containers, for example, when running on Red Hat OpenShift.

For future versions, Kestrel aims to become more usable as a basis for non-HTTP servers. The Transport and related abstractions will still change as part of that project.

Benchmark introduction

Microsoft is continuously benchmarking the ASP.NET Core stack. The results can be seen at https://aka.ms/aspnet/benchmarks. The benchmarks include scenarios from the TechEmpower web framework benchmarks.

It is easy to get lost watching the benchmark results, so let me give a short overview of the TechEmpower benchmarks.

There are a number of scenarios (also called test types). The Fortunes test type is the most interesting, because it includes using an object-relational mapper (ORM) and a database. This is a common use-case in a web application/service. Previous versions of ASP.NET Core did not perform well in this scenario. ASP.NET Core 2.1 improved it significantly thanks to optimizations in the stack and also in the PostgreSQL driver.

The other scenarios are less representative of a typical application. They stress particular aspects of the stack. They may be interesting to look at if they match your use-case closely. For framework developers, they help identify opportunities to optimize the stack further.

For example, consider the Plaintext scenario. This scenario involves a client sending 16 requests back-to-back (pipelined) for which the server knows the response without needing to perform I/O operations or computation. This is not representative of a typical request, but it is a good stress test for parsing HTTP requests.
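To make the pipelining concrete, here is a minimal sketch of what such a batch looks like on the wire. It is not taken from the TechEmpower client; the request path, the Host header, and the names PipeliningSketch, BuildBatch, and CountRequests are assumptions made for illustration. It builds 16 requests into one buffer (what a pipelining client hands to a single send) and counts them back by finding the blank line that ends each request's headers.

```csharp
using System;
using System.Text;

static class PipeliningSketch
{
    // One minimal HTTP/1.1 GET request. Path and host are placeholders.
    const string Request = "GET /plaintext HTTP/1.1\r\nHost: localhost\r\n\r\n";

    // Concatenate `count` requests into a single buffer, as a pipelining
    // client would before issuing one send call.
    public static byte[] BuildBatch(int count)
    {
        var builder = new StringBuilder();
        for (int i = 0; i < count; i++)
        {
            builder.Append(Request);
        }
        return Encoding.ASCII.GetBytes(builder.ToString());
    }

    // Count complete requests in a buffer by looking for the blank line
    // ("\r\n\r\n") that terminates each header block. These requests have
    // no body, so that marker is enough for this sketch.
    public static int CountRequests(byte[] buffer)
    {
        string text = Encoding.ASCII.GetString(buffer);
        int count = 0;
        int index = 0;
        while ((index = text.IndexOf("\r\n\r\n", index, StringComparison.Ordinal)) >= 0)
        {
            count++;
            index += 4; // skip past the terminator we just found
        }
        return count;
    }

    public static void Main()
    {
        byte[] batch = BuildBatch(16);
        Console.WriteLine($"{CountRequests(batch)} requests arrived in one buffer of {batch.Length} bytes");
    }
}
```

The server side sees one receive for the whole batch, which is why this scenario mostly exercises the HTTP parser rather than the network layer.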

Each implementation has a class. For example, ASP.NET Core Plaintext has a platform, micro, and full implementation. The full implementation uses the MVC middleware. The micro implementation is written at the pipeline level, and the platform implementation builds directly on top of Kestrel. While the platform class gives an idea of how powerful the engine is, it is not an API that application developers program against.

The benchmark results include a Latency tab. Some implementations achieve a very high number of requests per second but at a considerable latency cost.

Linux transport

Similar to the other implementations, the Linux transport makes use of non-blocking sockets and epoll. Like .NET Core's Socket, the event loop is implemented in managed (C#) code. This is different from the libuv loop, which is part of the native libuv library.

Two Linux-specific features are used: SO_REUSEPORT lets the kernel load-balance accepted connections over a number of threads, and the Linux AIO API is used to batch send and receive calls.
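As an illustration of the first feature, the sketch below binds two listening sockets to the same port with SO_REUSEPORT set. It is not the transport's own code. It assumes .NET Core 3.0 or later (for Socket.SetRawSocketOption) running on Linux, and it hard-codes the numeric values of SOL_SOCKET and SO_REUSEPORT as found on x86-64 and ARM Linux; ReusePortSketch and Listen are hypothetical names.

```csharp
using System;
using System.Net;
using System.Net.Sockets;

static class ReusePortSketch
{
    // Assumed Linux values (x86-64 and ARM); other platforms differ.
    const int SOL_SOCKET = 1;
    const int SO_REUSEPORT = 15;

    // Create a TCP listener on the loopback address with SO_REUSEPORT enabled.
    public static Socket Listen(int port)
    {
        var socket = new Socket(AddressFamily.InterNetwork, SocketType.Stream, ProtocolType.Tcp);
        // The option must be set before Bind for the kernel to allow sharing.
        socket.SetRawSocketOption(SOL_SOCKET, SO_REUSEPORT, BitConverter.GetBytes(1));
        socket.Bind(new IPEndPoint(IPAddress.Loopback, port));
        socket.Listen(128);
        return socket;
    }

    public static void Main()
    {
        // Port 0 asks the kernel for a free port; the second listener reuses it.
        using (Socket first = Listen(0))
        {
            int port = ((IPEndPoint)first.LocalEndPoint).Port;
            using (Socket second = Listen(port))
            {
                Console.WriteLine($"Two listeners share port {port}");
            }
        }
    }
}
```

With one such listener per transport thread, each thread can accept on its own socket and the kernel distributes incoming connections among them.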

Benchmark

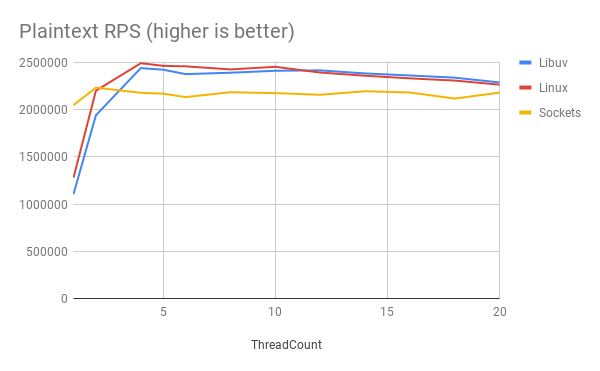

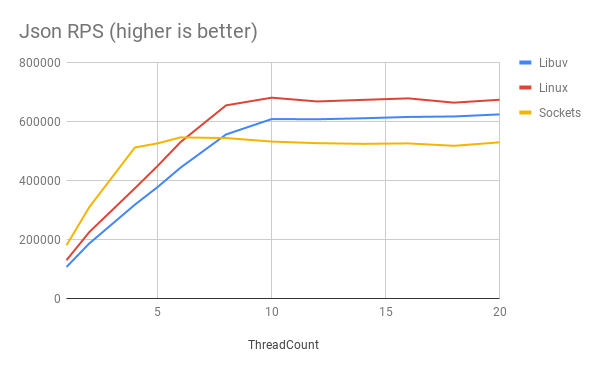

For our benchmark, we'll use the JSON and Plaintext scenarios at the micro class. For the JSON benchmark, the web server responds with a simple JSON object that is serialized for each request. Each request therefore does only a tiny amount of useful work, so the cost of the transport weighs heavily in the result. For the Plaintext scenario, the server responds with a fixed string. Because the client pipelines 16 requests at a time, network I/O is needed only once per 16 requests.

Each transport has a number of settings. Both the libuv and Linux transport have a property to set the number of threads for receiving/sending messages. The Sockets transport performs sends and receives on the ThreadPool. It has an IOQueueCount setting that we'll set instead.
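As a rough sketch of where these settings live, the fragment below sets ThreadCount on the libuv transport and IOQueueCount on the Sockets transport. It assumes an ASP.NET Core 2.1 project that references the libuv and Sockets transport packages; the class name and the threadCount and queueCount parameters are placeholders, not the values used in the benchmark. The Linux transport's equivalent is left out because its options overload is not shown in this post.

```csharp
using Microsoft.AspNetCore;
using Microsoft.AspNetCore.Hosting;

public static class TransportSettingsSketch
{
    // libuv: a fixed number of event loop threads handles sends and receives.
    public static IWebHostBuilder WithLibuv(string[] args, int threadCount) =>
        WebHost.CreateDefaultBuilder(args)
            .UseLibuv(options => options.ThreadCount = threadCount);

    // Sockets: sends and receives run on the ThreadPool, spread over I/O queues.
    public static IWebHostBuilder WithSockets(string[] args, int queueCount) =>
        WebHost.CreateDefaultBuilder(args)
            .UseSockets(options => options.IOQueueCount = queueCount);
}
```

Either builder still needs a startup class and a call to Build before it can serve requests.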

The graphs above show the HTTP requests per second (RPS) for varying ThreadCount/IOQueueCount settings.

We can see that each transport is initially limited by the number of allocated threads. The actual handling happens on the ThreadPool, which is not fully loaded yet. We see Sockets has a higher RPS because it is also using the ThreadPool for network sends/receives. We can’t compare it with the other transports because it is constrained in a different way (it can use more threads for transporting).

| | Linux vs libuv |
|---|---|
| Plaintext | +15% |
| JSON | +20% |

When we increase the ThreadCount sufficiently, the transport is no longer the limiting factor. Now the constraint becomes either the CPU or network bandwidth.

The TechEmpower Round 16 benchmark hit the network bandwidth for the Plaintext scenario. If you look at the benchmark results, you see the top results are all about the same value. These benchmarks indicate underutilized CPU.

For our benchmark, the CPU is fully loaded. The processor is busy sending/receiving and handling the requests. The difference we see between the scenarios is due to the different workload per network request. For Plaintext, we receive 16 pipelined HTTP requests with a single network request. For JSON, there is one HTTP request per network request. This makes the transport weigh much more heavily in the JSON scenario than in the Plaintext scenario.

| | libuv vs Sockets | Linux vs libuv | Linux vs Sockets |
|---|---|---|---|
| Plaintext | +9% | +0% | +9% |
| JSON | +17% | +10% | +28% |
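The three columns are related: a gain of Linux over Sockets is the gain of libuv over Sockets compounded with the gain of Linux over libuv. The small sketch below checks that arithmetic using the rounded percentages from the rows above; GainSketch and Compose are names made up for this example.

```csharp
using System;

static class GainSketch
{
    // Compound two relative gains: (1 + a) * (1 + b) - 1.
    public static decimal Compose(decimal first, decimal second) =>
        (1 + first) * (1 + second) - 1;

    public static void Main()
    {
        // JSON: +17% compounded with +10% gives about +28.7%,
        // close to the measured +28% given that the inputs are rounded.
        Console.WriteLine(Compose(0.17m, 0.10m));

        // Plaintext: +9% compounded with +0% stays at +9%.
        Console.WriteLine(Compose(0.09m, 0.00m));
    }
}
```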

Using the Linux transport

The Kestrel Linux transport is an experimental implementation. You can try it by using the 2.1.0-preview1 package published on myget.org. If you try this package, you can use this GitHub issue to give feedback and to be informed of (security) issues. Based on your feedback, we'll see if it makes sense to maintain a supported 2.1 version published on nuget.org.

Add the myget feed to a NuGet.Config file:

    <?xml version="1.0" encoding="utf-8"?>
    <configuration>
      <packageSources>
        <add key="rh" value="https://www.myget.org/F/redhat-dotnet/api/v3/index.json" />
      </packageSources>
    </configuration>

And add a package reference in your csproj file:

<PackageReference Include="RedHat.AspNetCore.Server.Kestrel.Transport.Linux" Version="2.1.0-preview1" />

Then we call UseLinuxTransport when creating the WebHost in Program.cs:

    public static IWebHost BuildWebHost(string[] args) =>
        WebHost.CreateDefaultBuilder(args)
            .UseLinuxTransport()
            .UseStartup<Startup>()
            .Build();

It is safe to call UseLinuxTransport on non-Linux platforms. The method will change the transport only when the application runs on a Linux system.
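A guard of this kind can be written with the RuntimeInformation API. The sketch below is only an illustration of the idea, not the package's actual implementation; PlatformGuardSketch and ChooseTransport are hypothetical names, and the returned strings are labels for this example only.

```csharp
using System;
using System.Runtime.InteropServices;

static class PlatformGuardSketch
{
    // Hypothetical helper: name the transport this sketch would select.
    public static string ChooseTransport(bool isLinux) =>
        isLinux ? "Linux transport" : "default transport";

    public static void Main()
    {
        // True only when the process is running on a Linux system.
        bool isLinux = RuntimeInformation.IsOSPlatform(OSPlatform.Linux);
        Console.WriteLine($"Selected: {ChooseTransport(isLinux)}");
    }
}
```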

Conclusion

In this blog post, you've learned about Kestrel and how its Transport abstraction supports replacing the network implementation. We took a closer look at the TechEmpower benchmarks and explored how CPU and network limits affect benchmark results. We’ve seen that a Linux-specific transport can give a measurable gain compared to the default out-of-the-box implementations.

For information about running .NET Core on Red Hat Enterprise Linux and OpenShift, see the .NET Core Getting Started Guide.

Last updated: November 2, 2023