This is part III of a three-part series describing a proposed approach for an agile API lifecycle: from ideation to production deployment. If you missed the earlier parts or need a refresher, please take some time to read part I and part II.

This series is coauthored with Nicolas Massé, also a Red Hatter, and it is based on our own real-life experiences from our work with the Red Hat customers we’ve met.

In part II, we discovered how ACME Inc. is taking an agile API journey for its new Beer Catalog API deployment. ACME set up modern techniques for continuously testing its API implementation within the continuous integration/continuous delivery (CI/CD) pipeline. Let's now move on to exposing the API securely.

Milestone 6: API Exposition to the Outer World

The final milestone of our journey is here. The purpose of this stage is to securely expose our API to the outer world. This is the typical use case for API management and its associated gateway solutions. Applying API management when exposing the API to the outer world allows a better separation of concerns.

The API gateway is typically there to:

- Identify consumers by issuing API keys

- Enforce security policies using standards such as OAuth or SAML

- Apply consumption policies such as rate limiting or version control

- Prevent attacks such as denial-of-service attacks and script injection

- Provide better insights into how your API is used in terms of traffic and peak usage
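
To make one of these policies concrete, here is a minimal sketch of rate limiting in Python. It is not how the 3scale gateway implements it: the class name, the fixed-window strategy, and the injectable clock are all assumptions chosen for illustration.

```python
import time
from collections import defaultdict


class FixedWindowRateLimiter:
    """Allow at most `limit` calls per API key in each `window_seconds` window.

    Illustrative only: old counters are never cleaned up, and state lives
    in a single process.
    """

    def __init__(self, limit, window_seconds, clock=time.monotonic):
        self.limit = limit
        self.window_seconds = window_seconds
        self.clock = clock  # injectable so the limiter can be tested without sleeping
        self.counters = defaultdict(int)  # (api_key, window index) -> call count

    def allow(self, api_key):
        # All calls falling in the same time window share one counter.
        window = int(self.clock() // self.window_seconds)
        bucket = (api_key, window)
        if self.counters[bucket] >= self.limit:
            return False  # a gateway would reject this call
        self.counters[bucket] += 1
        return True
```

With `limit=2` and a 60-second window, the third call made with the same key inside one window is refused, while a different key still gets through. A fixed window is the simplest strategy to reason about; real gateways may use other algorithms.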

The management counterpart is a better place for:

- Distributing official documentation (contracts AND mocks AND tests)

- Onboarding developers, registering and accessing forums or FAQs for the API

- Building analytics reporting on usage

- Applying monetization policies and producing charge-back/show-back reports

API management solutions are not really new, but what we want to highlight here is that there’s a shift in their architecture. As stated in the latest ThoughtWorks Technology Radar, the overambitious API gateway is now on hold. We’re shifting to a world of micro-gateways that are deployed close to the protected back end and that focus on the core concerns of traffic management (authentication, access control, and service-level agreements).

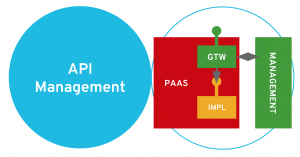

What we are trying to achieve is shown in the following diagram: a simple, lightweight gateway deployed close to our implementation and, thus, on the same cloud-ready PaaS platform.

The solution we introduce now is the 3scale API Management solution by Red Hat. 3scale is a hybrid architecture solution with a lightweight gateway that is easily deployable on OpenShift right next to your back end. So, we are going to deploy a 3scale gateway for our ACME Beer Catalog API.

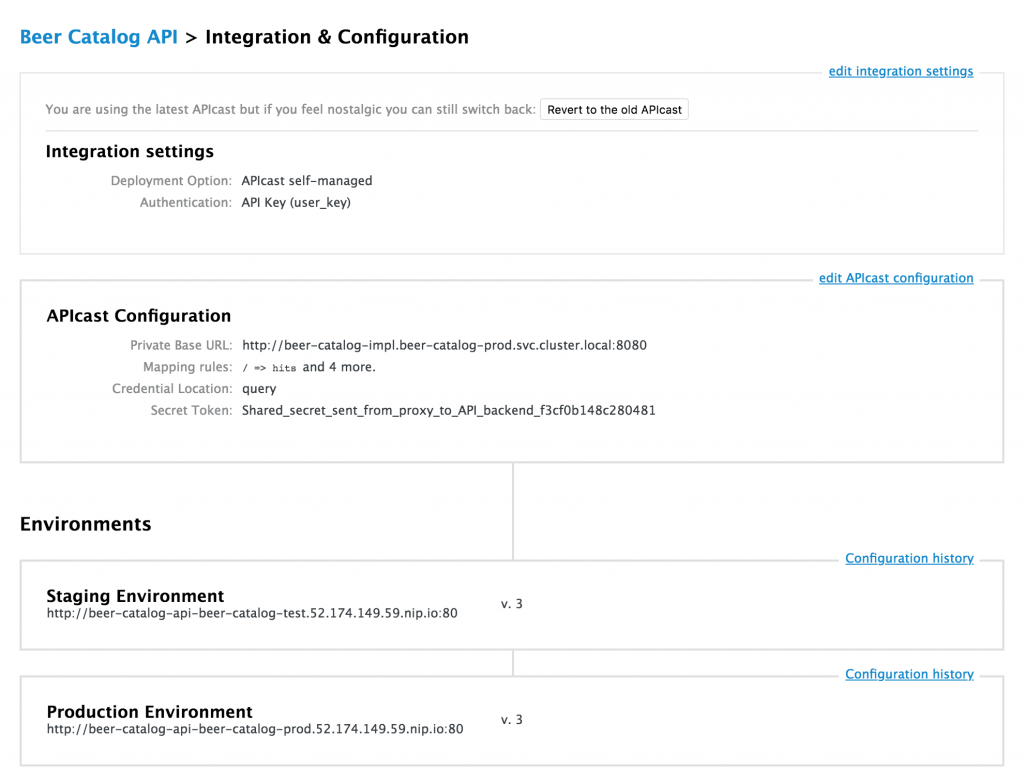

Before diving into gateway deployment, there’s a bunch of setup to do in the 3scale management console. Basically, we’ll have to declare this new API, its application plan and service plan, and its integration details.

You can see this in the screen capture below. Here, the gateway will just route incoming traffic to the http://beer-catalog-impl.beer-catalog-prod.svc.cluster.local:8080 URL that is the internal Kubernetes URL of our back end. With 3scale, there is no need to let the back end be reachable from the outer world.
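
Conceptually, this routing step keeps the path and query of the public request and swaps in the private host. The Python sketch below illustrates the idea only. It is not the gateway's actual code, and the public host name and the `/api/beer` path used in the usage note are hypothetical.

```python
from urllib.parse import urlsplit, urlunsplit

# Private base URL taken from the article; it only resolves inside the cluster.
PRIVATE_BASE_URL = "http://beer-catalog-impl.beer-catalog-prod.svc.cluster.local:8080"


def to_backend_url(public_url, private_base_url=PRIVATE_BASE_URL):
    """Keep the path and query of the public request, swap scheme and host."""
    public = urlsplit(public_url)
    private = urlsplit(private_base_url)
    # The fragment is dropped: it is never sent to a server anyway.
    return urlunsplit((private.scheme, private.netloc, public.path, public.query, ""))
```

For instance, `to_backend_url("https://beer-catalog.example.com/api/beer?page=0")` yields the same path and query on the internal `svc.cluster.local` host, which is why the back end never needs a public route of its own.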

As previously mentioned, we may then use a custom script to deploy the 3scale gateway on our beer-catalog-prod environment. The script is called deploy-3scale.sh and is located in the GitHub repository. You will have to replace the 3scale security token in the script with your own before running it.

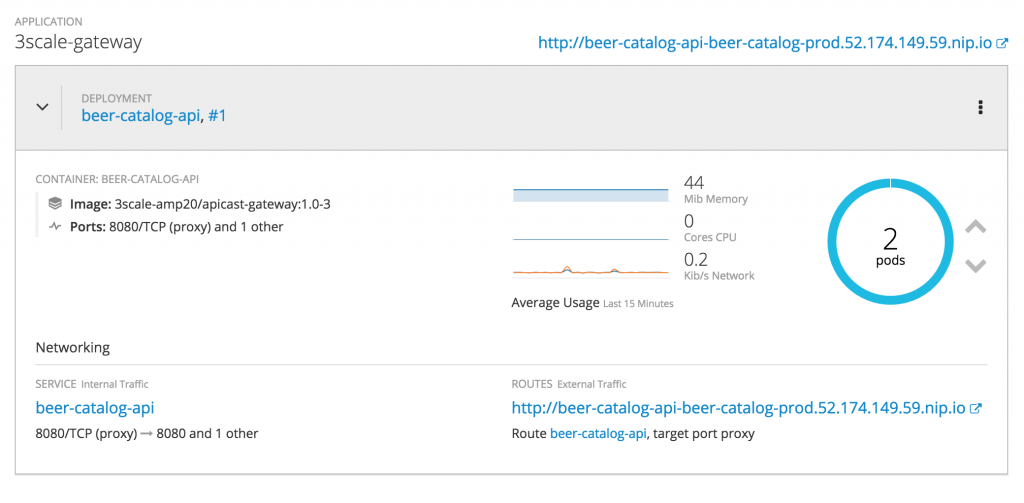

So, just execute this script once you are logged on to your OpenShift environment and you should get a new 3scale gateway appearing in your beer-catalog-prod project.

Now that we’ve got our implementation deployed and protected, it’s time for a final test. We will also review the insights and powerful features that the 3scale API gateway can bring us.

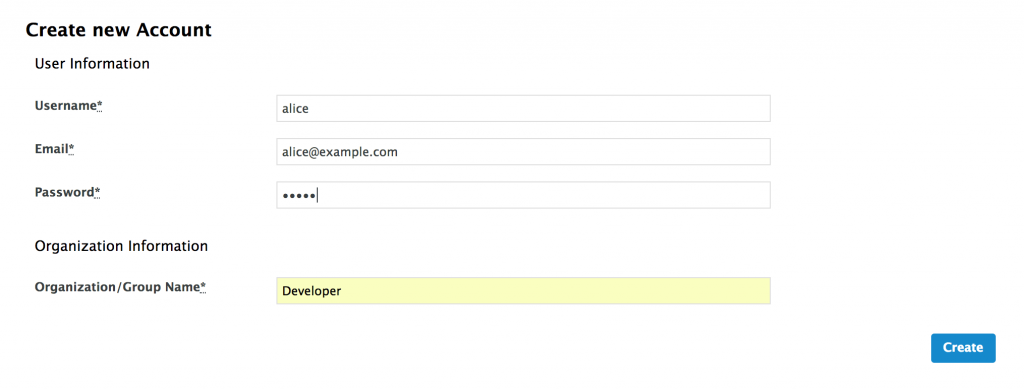

Suppose a reseller partner of ACME has just developed a new mobile application for its customers to browse the ACME brewery catalog. We are going to test it. Before that, we’ll simulate the registration of Alice, a new developer who wants to use our Beer Catalog API. Just create a new developer in the Developer group from the admin console.

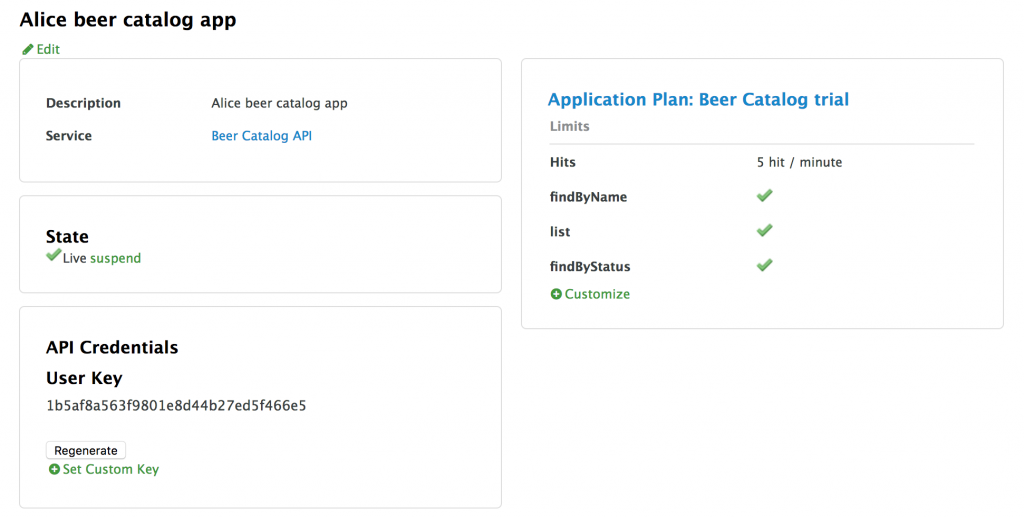

And then, create a new application within Alice's account. This application, which we’ll call the Alice beer catalog app, should use an Application Plan and a Service Plan you have attached to your API.

The goal of this registration is to retrieve the User Key we see in the screenshot above. This key is the access credential we must provide to identify ourselves to the 3scale gateway. Finally, we can set up the mobile app that is located in the /api-consumer directory of the GitHub repository. Copy the key and then edit the config.js file to paste in the key. Then, serve everything in this directory from a web server.

For example, you can simply run python -m SimpleHTTPServer 8888 (on Python 3, the equivalent is python3 -m http.server 8888) and open a new browser window on http://localhost:8888. You may adapt the display for a mobile device for a better perspective on future usage.
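
Behind the scenes, each call the app sends to the gateway carries the key. As a rough sketch, assuming the key travels as a `user_key` query parameter (a common 3scale setup, though your integration settings may place it in a header or rename it), building such a call could look like this in Python. The gateway host and the `/api/beer` path in the usage note are hypothetical placeholders.

```python
from urllib.parse import urlencode


def build_request_url(gateway_base_url, path, user_key, **params):
    """Return the full URL for an API call authenticated with a user key.

    Assumption: the gateway reads the key from a `user_key` query parameter.
    """
    query = urlencode({**params, "user_key": user_key})
    return f"{gateway_base_url.rstrip('/')}{path}?{query}"
```

Calling `build_request_url("https://gw.example.com", "/api/beer", "abc123", page=0)` produces a URL ending in `/api/beer?page=0&user_key=abc123`. A call without a valid key is expected to be rejected by the gateway before it ever reaches the back end.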

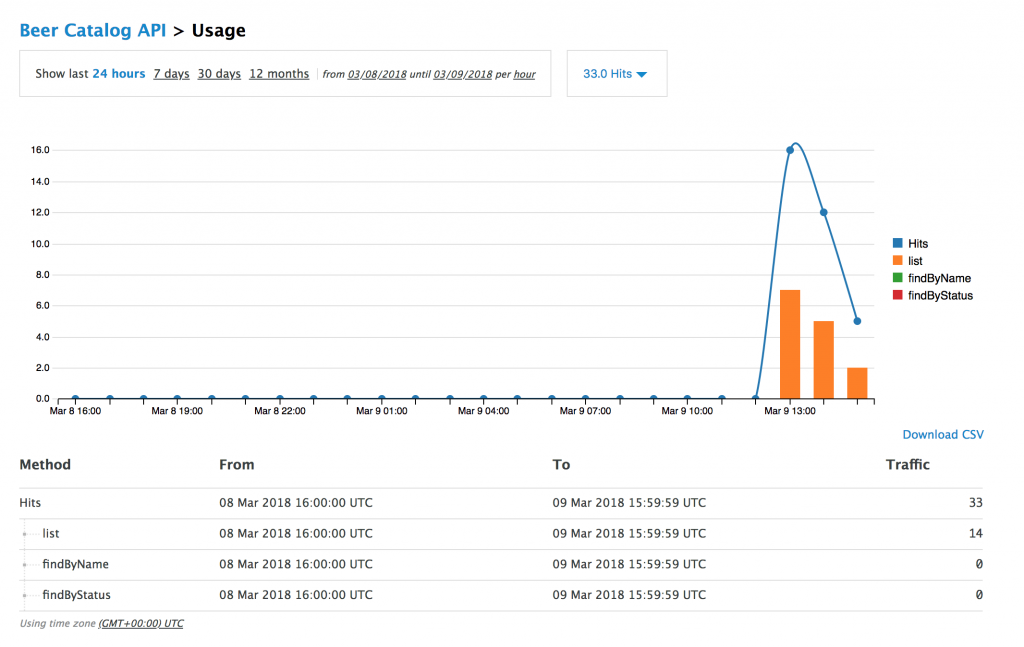

Click the refresh button a couple of times and, depending on your application plan rate limits, you should see some error messages when you hit the API too often. Now, let’s go back to the 3scale administration console and see the analytics of your API being updated, reflecting real-time traffic, such as what's shown below:

It is now time to play with some features such as rate limits and monetization policies to see the full benefits of the 3scale gateway.
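
On the consumer side, an application that hits a rate limit should usually wait and retry rather than fail outright. Here is a minimal Python sketch of that idea, assuming the gateway signals an exceeded limit with HTTP status 429 (this depends on your gateway configuration). The function and parameter names are illustrative.

```python
import time


def call_with_backoff(send, max_attempts=4, base_delay=1.0, sleep=time.sleep):
    """Call `send()` until it returns a status other than 429 or attempts run out.

    `send` is any zero-argument callable returning an HTTP status code.
    Assumption: 429 means "rate limit exceeded" for this gateway.
    """
    status = None
    for attempt in range(max_attempts):
        status = send()
        if status != 429:
            return status
        if attempt < max_attempts - 1:
            # Exponential backoff: wait base_delay, then twice that, and so on.
            sleep(base_delay * 2 ** attempt)
    return status
```

Passing the HTTP call in as `send` keeps the retry logic independent of any particular HTTP client, and the injectable `sleep` makes the function easy to test without waiting.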

The End-to-End Flow

Here we are at the end of our journey for the ACME Inc. API. This journey led us to deploy our API onto a production environment but also to use new methodologies such as mocking and continuous testing when delivering the API to the rest of the world. We have also tested a mobile application that uses our API, managed through the 3scale management solution.

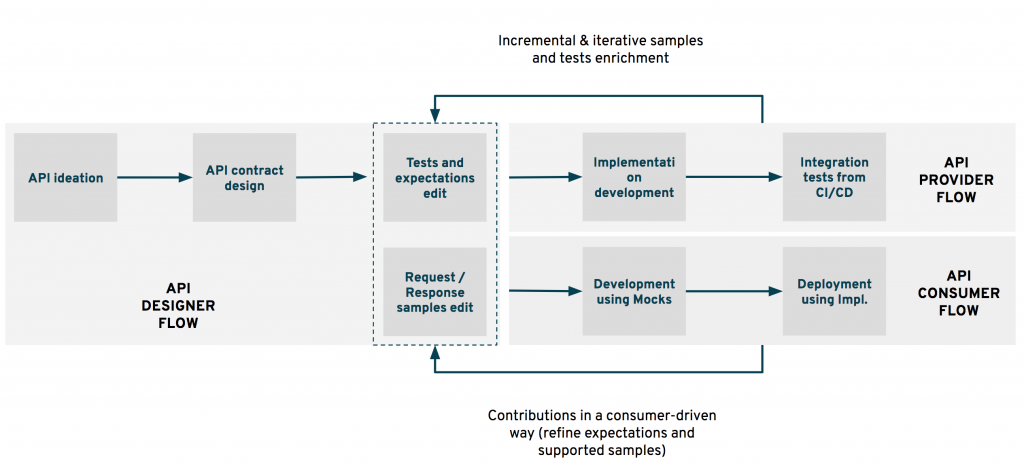

When looking in the rear-view mirror, you may ask whether all those stages can be carried out by a single person, one after the other. That’s precisely what we’d like to highlight in the next figure. The whole lifecycle indeed involves different flows and different actors. We can distinguish at least:

- The API Designer flow that encompasses the first milestones of our journey: from ideation to the mocks and tests ready-to-use milestone. We see these activities mainly as a single responsibility.

- The API Provider flow that takes care of the following milestones in our journey: from development to continuous tests to deployment.

- The API Consumer flow, which may be impacted when introducing the sampling and mocking step in your lifecycle. This flow can start much earlier in the timeline and move dramatically faster once samples and mocks are available.

Of course, these flows should interact, and feedback loops should be set up between the parties. Typically, the Provider may be able to enrich the set of samples to illustrate some edge cases that may have been left out during initialization. Its contributions are also very helpful for making samples clearer regarding business rules or expectations. API consumer feedback also has a great role to play when it comes to contract design. Consumer-driven expectations are gold for later non-regression tests run in the delivery pipeline of the API Provider.
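
To illustrate what a consumer-driven expectation can look like once turned into a non-regression check, here is a deliberately small Python sketch. In the series these checks are carried by the samples and tests managed in Microcks and Postman. The function below is a stand-in for illustration, and the field names in the usage note are hypothetical.

```python
def check_expectations(payload, expected_fields):
    """Compare a response payload with the fields a consumer relies on.

    `expected_fields` maps a field name to the Python type the consumer expects.
    Returns a list of violations; an empty list means the payload conforms.
    """
    problems = []
    for field, expected_type in expected_fields.items():
        if field not in payload:
            problems.append(f"missing field: {field}")
        elif not isinstance(payload[field], expected_type):
            problems.append(f"wrong type for {field}: expected {expected_type.__name__}")
    return problems
```

A consumer that relies on `name` being a string and `rating` being a number could publish `{"name": str, "rating": float}` as its expectation, and the provider's pipeline could fail the build whenever the returned list is not empty.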

All these feedback loops are really easy to set up and govern when using open and collaborative tools, as we did during the ACME Inc. use case. Have any doubts about the API operations? Have a look at the Microcks repository. Want to enrich the API by adding a new exception case? Edit the Postman collection and open a pull/merge request on the Git repository. Want to emphasize one of your particular usages of the API through its contract? You get the point...

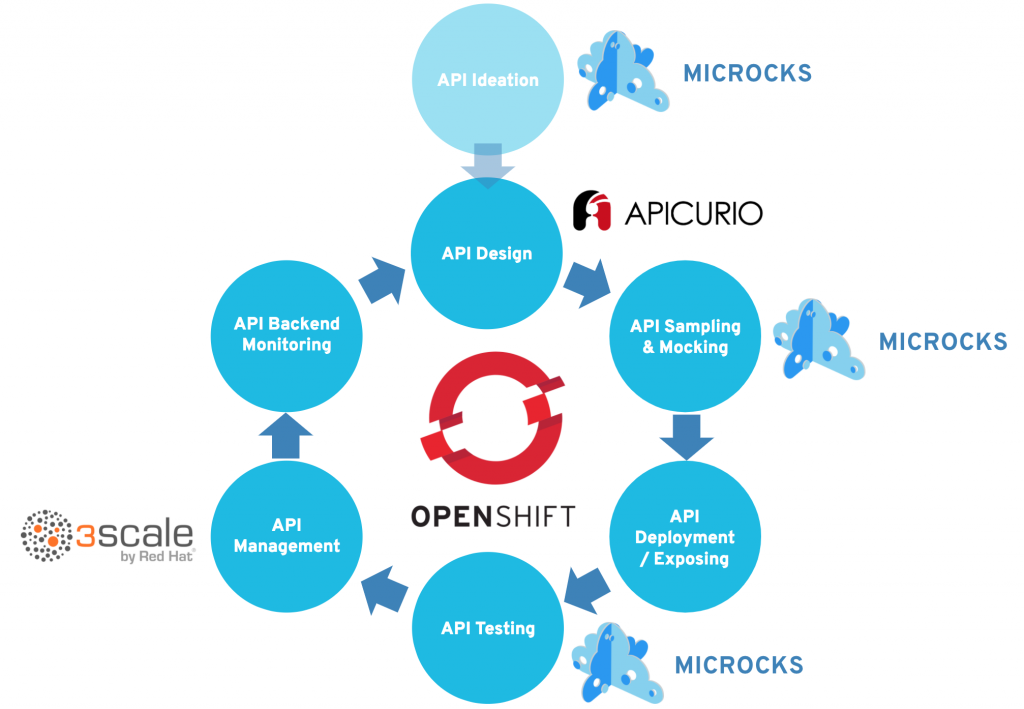

The API Lifecycle

Throughout our journey, we have gone through milestones that make up a cycle. The different phases—API ideation, design, sampling, deployment, and so on—build up a truly iterative and incremental cycle that allows you to be more efficient when deploying the API. Moreover, it allows consumers to start playing with the API earlier in the development cycle by using sampling and mocking techniques.

Remember each milestone of our API journey? Here is the full picture with the different tools and technologies we have used during the different phases.

Besides the API we developed in our use case, all the tools introduced in this series may also be deployed on the Red Hat OpenShift Container Platform. They may be deployed on-premises or in the cloud, depending on the hybrid cloud strategy of your enterprise.

What we want to highlight here is the unique positioning of OpenShift as the true enabler of an agile API Journey.

Using OpenShift, you'll be able to easily deploy the tools used during the first phases: Microcks and Apicurio can both be installed on OpenShift. The container platform also provides extensive support for all the languages and frameworks that can be used for modern application development. These can be microservices-oriented frameworks such as Spring Boot, Vert.x, Node.js, or WildFly Swarm as well as integration solutions such as JBoss Fuse. Continuous testing and delivery are made easy in OpenShift through the support and integration of Jenkins pipelines. Microcks comes to the rescue for testing contracts and expectations. The 3scale micro-gateway can be deployed in seconds for enforcing security and access policies. Finally, you get everything you need to run and monitor your back ends and these components with operational excellence, from metrics and log collection to distributed tracing and automatic resilience. It's definitely worth a try, isn’t it?

Key Takeaways

With API management solutions becoming mainstream, it's getting easy to securely expose APIs to the world. However, a true agile delivery process for your API is more than just an API management solution.

The API journey goes through many milestones including contract design, mocking, and testing. Your delivery process should encompass all these activities in order to be truly agile and speed up your development as well as to meet the needs of your customers and partners.

Our proposed approach is built on pragmatic usage of open source tools that allow real collaboration and communication among projects and consumers in an ecosystem. Modern development methods based on a container platform also help in setting up continuous deployment and improvement loops.

If you already use this methodology, if you went through the examples of this blog post series, or if you want to discuss this further, feel free to reach out to the authors: Laurent @lbroudoux and Nicolas @nmasse_itix.

Last updated: April 3, 2023