It's 2026, and the AI landscape feels pretty polarized. On one side, you have chatbots designed for open conversation. On the other, many people are talking about autonomous agents.

But in the rush toward autonomy, we often overlook the practical middle ground. Most enterprise applications don't actually need a model to be creative or self-directed; they just need it to be reliable. They need predictability, not improvisation.

To build better infrastructure, it helps to shift the perspective. Instead of viewing the model as a "digital employee," try treating it as a deterministic component: a semantic processor. At its heart, an LLM is just a text-prediction engine. By embracing that, we can move past the "bot" mindset and use the model for what it's best at: turning messy human language into clean system data.

To make this work, we use Apache Camel. We treat the LLM as a standard, stateless endpoint, no different from a REST API or a database.

- Camel handles the plumbing: It bridges the gap between the isolated model and your dynamic enterprise data, such as files, queues, and databases.

- You handle the logic: You retain full control over prompt construction.
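Treating the model as a stateless endpoint means each call carries its complete context: the instructions, the input, and the expected output contract, with no conversation history. As a rough illustration, the Python sketch below builds one such request body in the shape of OpenAI's Chat Completions API, using its structured-output `response_format` field. This is an assumption about the wire format, not a description of how `camel-openai` builds its requests. The model name and schema are placeholders.

```python
import json


def build_chat_request(model: str, system_prompt: str, user_text: str,
                       schema: dict, temperature: float = 0.15) -> dict:
    """Build one self-contained chat-completion request body.

    Nothing is carried over from earlier calls: the system prompt, the user
    input, and the output schema all travel in this single payload.
    """
    return {
        "model": model,
        "temperature": temperature,
        "messages": [
            {"role": "system", "content": system_prompt},
            {"role": "user", "content": user_text},
        ],
        # OpenAI-style structured output; other OpenAI-compatible servers
        # may support only part of this field, so check your provider.
        "response_format": {
            "type": "json_schema",
            "json_schema": {"name": "result", "schema": schema, "strict": True},
        },
    }


# Placeholder schema: a single nullable ID field, no extra keys allowed.
SCHEMA = {
    "type": "object",
    "properties": {"entityId": {"type": ["string", "null"]}},
    "required": ["entityId"],
    "additionalProperties": False,
}

body = build_chat_request("my-model", "You are a data normalization engine.",
                          "I am sending the payment to Orion Logs tomorrow.", SCHEMA)
print(json.dumps(body, indent=2))
```

Because the payload is plain data, you can log it, diff it, and replay it. That is part of what makes this style of integration traceable.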

This approach prioritizes mechanics over magic. By skipping heavyweight agent frameworks, you expose the inner workings of the machine: there are no hidden prompts and no runtime proxies. The integration is no longer a black box; it's just code. You can trace the data, verify the logic, and sleep at night knowing the system behaves reliably.

Here are three architectural patterns that turn LLMs into boring, effective semantic processors:

- Generative parsing for structuring data

- Semantic routing for directing flow

- Grounded pipelines for contextual integrity

Generative parsing (structuring data)

Regex is fragile. We all know it.

Generative parsing is the alternative. Instead of treating the LLM as a chatbot, we use it as a text-processing engine that converts unstructured input into rigid, type-safe JSON. A predefined schema constrains what the model can emit, so downstream processes receive clean data that can be validated.

Use cases: Entity resolution, data extraction, and PII redaction.

Canonical entity resolution

The goal: Raw text + an allowlist → ID.

Unlike a database lookup, this pattern excels at fuzzy semantic matching. It handles typos, abbreviations, and partial matches, such as mapping Orion Logs to Orion Logistics (a company name) without requiring a complex fuzzy-search algorithm.
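The "Never invent new IDs" rule lives in the prompt, but nothing stops you from also enforcing it in code after the model returns. The following is a minimal Python sketch of such a guard. It assumes the response shape from the example output below (`entityName`, `entityId`, `evidenceSpans`). The function name is hypothetical, and this check is not part of the route shown in this post.

```python
import json

# The same allowlist as in the route below.
WHITELIST = [
    {"id": "0482", "name": "Acme VAT Compliance"},
    {"id": "0991", "name": "Global Tax Services Ltd"},
    {"id": "1024", "name": "Orion Logistics"},
]


def check_resolution(raw_json: str, raw_text: str, whitelist: list) -> dict:
    """Accept the model's answer only if it is consistent with the allowlist.

    A null entityId is an explicit "no match" and is accepted as-is.
    Raises ValueError for an invented ID, a name that does not belong to the
    ID, or an evidence span that does not occur in the raw input text.
    """
    result = json.loads(raw_json)
    entity_id = result.get("entityId")
    if entity_id is None:
        return result
    names_by_id = {entry["id"]: entry["name"] for entry in whitelist}
    if entity_id not in names_by_id:
        raise ValueError(f"invented entity id: {entity_id!r}")
    if result.get("entityName") != names_by_id[entity_id]:
        raise ValueError(f"entityName does not match id {entity_id!r}")
    for span in result.get("evidenceSpans", []):
        if span not in raw_text:
            raise ValueError(f"evidence span not found in input: {span!r}")
    return result


text = "I am sending the payment to Orion Logs tomorrow."
answer = ('{"entityName": "Orion Logistics", "entityId": "1024", '
          '"evidenceSpans": ["Orion Logs"], "confidence": 0.95}')
print(check_resolution(answer, text, WHITELIST)["entityId"])  # prints 1024
```

The fuzzy matching stays with the model. The final yes or no on whether an ID is real stays with deterministic code.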

Example execution:

```shell
echo 'I am sending the payment to Orion Logs tomorrow.' | camel run --source-dir=./
```

Output:

```json
{
  "entityName": "Orion Logistics",
  "entityId": "1024",
  "evidenceSpans": [
    "Orion Logs"
  ],
  "confidence": 0.95
}
```

Route processing logic:

- Initialize data: Trigger the workflow and load the entity whitelist into a variable.

- Define persona: Configure the system instructions to make the AI behave as a data normalization engine.

- Construct prompt: Combine the raw user input and the loaded whitelist into a single text payload, with each part wrapped in explicit start and end markers.

- Invoke model: Call the `openai` component. The `jsonSchema` parameter guides the LLM to output JSON that matches the schema.

```yaml
- route:
    from:
      uri: "direct:entity-resolution"
      steps:
        - setVariable:
            name: "entityWhitelist"
            expression:
              constant: |
                [
                  {"id": "0482", "name": "Acme VAT Compliance"},
                  {"id": "0991", "name": "Global Tax Services Ltd"},
                  {"id": "1024", "name": "Orion Logistics"}
                ]
        - setHeader:
            name: "CamelOpenAISystemMessage"
            simple: |
              You are a data normalization engine.
              Match the input text to exactly one entity from the provided Whitelist
              - If the entity is found, return the ID and Name
              - If no match is found, return null values
              - Never invent new IDs
        - setBody:
            simple: |
              RAW_INPUT_TEXT_START
              ${body}
              RAW_INPUT_TEXT_END
              WHITELIST_JSON_START
              ${variable.entityWhitelist}
              WHITELIST_JSON_END
        - to:
            uri: "openai:chat-completion"
            parameters:
              temperature: 0.15
              jsonSchema: "resource:classpath:resolution.schema.json"
```

Leaf-node classification

The goal: Map content into a deep tree, not just loose keywords.

If you let them, LLMs are lazy: they will dump everything into a root category (for example, "banking"). The prompt and schema below enforce a "leaf node" selection, forcing the model to be specific.
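A leaf-only rule is easy to check mechanically once the taxonomy is loaded. The following is a minimal Python sketch that enumerates every root-to-leaf path and rejects any classification whose `path` is not one of them. It assumes the taxonomy is nested JSON objects where a leaf is either an empty object or a name in a list. The post does not show the real `banking-category-tree.json`, so the excerpt here is hypothetical.

```python
def leaf_paths(tree, prefix=()):
    """Yield every root-to-leaf path as an 'A > B > C' string.

    Assumed taxonomy shape: nested dicts, where a leaf is either an empty
    dict/list or a name inside a list of leaf names.
    """
    if isinstance(tree, list):
        for name in tree:
            yield " > ".join(prefix + (name,))
        return
    for name, children in tree.items():
        path = prefix + (name,)
        if children:  # non-empty dict or list: descend further
            yield from leaf_paths(children, path)
        else:
            yield " > ".join(path)


def check_leaf(result: dict, tree) -> dict:
    """Reject any classification whose path is not an exact leaf path."""
    allowed = set(leaf_paths(tree))
    if result.get("path") not in allowed:
        raise ValueError(f"not a leaf path: {result.get('path')!r}")
    return result


# Hypothetical excerpt of a banking taxonomy.
TAXONOMY = {
    "Security_and_Access": {
        "Fraud_and_Disputes": ["Unrecognized_Charge", "Dispute_Transaction"],
    },
    "Account_Management": {
        "General_Inquiries": ["Statement_Request"],
    },
}

for path in leaf_paths(TAXONOMY):
    print(path)
```

You could also write the same list of paths into the schema as an `enum` for the `path` field, so that a schema-aware provider never emits a root or mid-level category. The post does not say whether its `classification-result.schema.json` does this.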

Example execution:

```shell
echo "I noticed a charge on my account from a vendor in London that I never visited. I need this money back." | camel run --source-dir=./
```

Output:

```json
{
  "rationale": "The customer explicitly states they never visited London... indicating a transaction they did not recognize. This aligns with Unrecognized_Charge.",
  "path": "Security_and_Access > Fraud_and_Disputes > Unrecognized_Charge",
  "confidence": 0.99,
  "status": "ACCEPTED"
}
```

Route processing logic:

- Load resources: Read the `banking-category-tree.json` taxonomy and the few-shot examples (`few-shot-examples.txt`) from the classpath into variables.

- Inject context: Construct the system prompt by combining the rules, the loaded taxonomy, and the examples.

- Classify: Send the prompt to the AI with a low temperature setting (0.15) to keep results as consistent as possible. A low temperature reduces run-to-run variation but does not eliminate it.

- Validate contract: Verify the LLM response against the schema contract and reject any output that violates the structure.

```yaml
- route:
    from:
      uri: "direct:classify-leaf-node"
      steps:
        - setVariable:
            name: "bankingCategoryTree"
            simple: "resource:classpath:banking-category-tree.json"
        - setVariable:
            name: "fewShotExamples"
            simple: "resource:classpath:few-shot-examples.txt"
        - setHeader:
            name: "CamelOpenAiSystemMessage"
            # Literal block (|) keeps the prompt's headings and numbered rules on separate lines
            simple: |
              You are an expert Banking Taxonomy Classifier. Your goal is to map user inquiries to the single most specific leaf node in the provided JSON taxonomy.

              ### TAXONOMY
              ${variable.bankingCategoryTree}

              ### CLASSIFICATION RULES
              1. **Hierarchy of Urgency:** Prioritize "Security_and_Access" above all else. If a user mentions a lost card, theft, or inability to access their money, it must go to `Security_and_Access` regardless of the product type (Credit, Debit, or Loan).
              2. **Fraud vs. Dispute Distinction:**
                 - Classify as `Unrecognized_Charge` (Fraud) if the user claims they *never* made the transaction or were not present.
                 - Classify as `Dispute_Transaction` if the user *did* authorize a purchase but the amount is wrong, the service was not received, or the subscription was cancelled but charged anyway.
              3. **Intent Over Product:** Do not look for specific product names (e.g., "Visa", "Checking") to determine the top-level category. Look for the *action*.
                 - Example: A user asking for a "Checking Statement" and a "Credit Card Statement" should both route to `Account_Management > General_Inquiries > Statement_Request`.
              4. **Exact Match Only:** The output "path" must match a valid path in the taxonomy exactly. Do not invent new nodes or combine paths.
              5. **Distinguish Symptom from Solution:** Map generic symptoms to broad diagnostic categories, reserving specific resolution paths only when the user explicitly identifies the root cause.

              ### FEW-SHOT EXAMPLES
              ${variable.fewShotExamples}
        - to:
            uri: "openai:chat-completion"
            parameters:
              temperature: 0.15
              jsonSchema: "resource:classpath:classification-result.schema.json"
        - to:
            uri: "json-validator:classification-result.schema.json"
```

Semantic routing (directing flow)

Semantic routing is an intelligent traffic controller. Traditional routing relies on static headers or pattern matching against message content; this pattern directs traffic based on intent. It allows the system to make dynamic decisions based on a nuanced understanding of the text, such as blocking harmful content, prioritizing urgent requests, or flagging compliance risks.

Use cases: Content moderation, risk scoring, and triage.

Quantitative risk scoring

The goal: Turn feelings into integers.

This pattern converts subjective text into a hard number (`riskScore`). That enables deterministic routing: you can pass the LLM output into a standard switch statement (such as `if score > 90 then block`).
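Once the score is an integer, the routing decision is ordinary, testable code. The following is a minimal Python sketch that assumes a 0-100 scale. Only the `score > 90` rule comes from the text; the destination names and the 50 threshold are hypothetical. Inside a Camel route, you would typically express the same decision as a content-based `choice` step.

```python
import json


def route_for(risk_score) -> str:
    """Map a riskScore to a destination with plain comparisons.

    Assumes a 0-100 scale; thresholds other than > 90 are illustrative.
    """
    # Reject anything that is not a real integer (bool is a subclass of int).
    if isinstance(risk_score, bool) or not isinstance(risk_score, int):
        raise ValueError(f"riskScore must be an integer, got {risk_score!r}")
    if not 0 <= risk_score <= 100:
        raise ValueError(f"riskScore out of range: {risk_score}")
    if risk_score > 90:
        return "block"
    if risk_score > 50:
        return "escalate"
    return "standard-queue"


def route_ticket(raw_json: str) -> str:
    """Parse the model's JSON output and route on the riskScore field only."""
    return route_for(json.loads(raw_json)["riskScore"])


print(route_ticket('{"triageCategory": "SECURITY_INCIDENT", "riskScore": 100}'))  # prints block
```

The model's free-text `reasoning` never influences the branch taken. Only the validated integer does.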

Example execution:

```shell
echo 'Heads up: I was auditing your open-source SDK (com.company.client:v4.1.2) and noticed it depends on left-pad-utils version 0.9. That library was flagged yesterday as having a crypto-miner injection. You are distributing malware to all your clients who updated today. You need to yank this from Maven Central right now.' | camel run --source-dir=./
```

Output:

```json
{
  "reasoning": "...",
  "triageCategory": "SECURITY_INCIDENT",
  "interactionStyle": "PROFESSIONAL",
  "riskScore": 100
}
```

Route processing logic:

- Load logic: Fetch the `risk-rubric.txt` file (the scoring logic) from the project resources.

- Dynamic injection: Insert the rubric text dynamically into the system instructions.

- Analyze: Submit the user's ticket text to the AI model for evaluation.

- Structure output: Enforce the ticket-risk schema, ensuring the resulting `riskScore` is a machine-readable integer rather than a text description.

```yaml
- route:
    from:
      uri: "direct:triage-ticket"
      steps:
        - setVariable:
            name: "riskRubric"
            simple: "resource:classpath:risk-rubric.txt"
        - setHeader:
            name: "CamelOpenAiSystemMessage"
            expression:
              simple: |
                You are a Security Operations Center AI.
                Task: Analyze the user's intent and assign the score and category based strictly on the provided rubric.
                ${variable.riskRubric}
        - to:
            uri: "openai:chat-completion"
            parameters:
              temperature: 0.15
              jsonSchema: "resource:classpath:ticket-risk.schema.json"
```

Compliance gap analysis

The goal: Feed the system a contract and a checklist, and have it tell you exactly what is missing.

Asking an LLM to review a contract is usually a trap. The model wants to be helpful, so it tends to hallucinate compliance where none exists. To fix this, we use a pattern that forces the model to "prove a negative."

The mechanism here is strict: we demand an evidence field for every single check. If the model marks a section as COMPLIANT, it must copy-paste the exact sentence from the source text that proves it.

The logic is simple: if you cannot quote the text, you cannot mark it as compliant. This makes hallucinated compliance far easier to catch, because a fabricated quote will not appear anywhere in the source text, and a plain verbatim comparison exposes it.
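The `json-validator` step in the route below checks only the structure of the audit. It does not compare quotes against the policy. The following is a minimal Python sketch of that extra verbatim check. It assumes the `audit_results` shape from the example output below, and the policy text in the usage lines is hypothetical. Whitespace is normalized so that line wrapping in the source does not cause false alarms. A quote that the model shortened with "..." would still be flagged, which may or may not be what you want.

```python
import json
import re


def normalize(text: str) -> str:
    """Collapse runs of whitespace so line wrapping in the source does not matter."""
    return re.sub(r"\s+", " ", text).strip()


def unsupported_findings(audit_json: str, policy_text: str) -> list:
    """Return the keys of 'Compliant' findings whose quote is not in the policy.

    Assumes: {"audit_results": {"KEY": {"policy_evidence": "...", "status": "..."}}}
    """
    audit = json.loads(audit_json)
    haystack = normalize(policy_text)
    flagged = []
    for key, finding in audit["audit_results"].items():
        if finding.get("status") != "Compliant":
            continue  # only positive claims need proof
        quote = normalize(finding.get("policy_evidence", ""))
        if not quote or quote not in haystack:
            flagged.append(key)
    return flagged


policy = "Contact us.\nE-Mail:  vp-privacy@apache.org\n"
audit = json.dumps({"audit_results": {
    "DPO_CONTACT": {"policy_evidence": "E-Mail: vp-privacy@apache.org", "status": "Compliant"},
    "COOKIES": {"policy_evidence": "We never use cookies.", "status": "Compliant"},
    "USER_RIGHTS": {"policy_evidence": "No matching policy text found", "status": "Non-Compliant"},
}})
print(unsupported_findings(audit, policy))  # prints ['COOKIES']
```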

Example execution:

```shell
curl -s https://privacy.apache.org/policies/privacy-policy-public.html | camel run --source-dir=./
```

Output:

```json
{
  "audit_results": {
    "DPO_CONTACT": {
      "standard_evidence": "STANDARD 1 (DPO_CONTACT): Must explicitly provide an email address for a Data Privacy Officer.",
      "policy_evidence": "E-Mail: vp-privacy@apache.org",
      "status": "Compliant",
      "remediation": "N/A"
    },
    "DATA_RETENTION": {...},
    "COOKIES": {...},
    "THIRD_PARTY_SHARING": {...},
    "USER_RIGHTS": {
      "standard_evidence": "STANDARD 5 (USER_RIGHTS): Must mention 'Right to be Forgotten' or 'Erasure'.",
      "policy_evidence": "<li>in accordance with Art. 17 GDPR, to demand the deletion of your personal data stored by us...",
      "status": "Compliant",
      "remediation": "N/A"
    }
  }
}
```

Route processing logic:

- Load standard: Retrieve `corporate-standard.txt` to serve as the source of truth.

- Set auditor rules: Configure the system instructions with strict constraints (for example, "Quote exact excerpts" and "Prove the negative").

- Generate audit: Call the LLM to compare the input content against the standard.

- Log and verify: Output the raw result to the console for inspection, then validate the JSON structure before continuing.

```yaml
- route:
    from:
      uri: "direct:privacy-audit"
      steps:
        - setVariable:
            name: "corporateStandard"
            simple: "resource:classpath:corporate-standard.txt"
        - setHeader:
            name: "CamelOpenAISystemMessage"
            simple: |
              You are a compliance auditor.
              Task: Perform a strict Compliance Audit of the PRIVACY POLICY CONTENT against the CORPORATE STANDARDS provided.
              Rules:
              1. Use only the CORPORATE STANDARDS as the source of truth.
              2. Do not rely on external laws, assumptions, or best practices.
              3. Quote exact excerpts from both the standard and the policy for every finding.
              4. If no matching policy text exists, state: "No matching policy text found".
              5. Classify each requirement as: Compliant, Partially Compliant, Non-Compliant, Needs Clarification, or Not Applicable.
              6. For Partially Compliant or Non-Compliant items, provide a minimal, precise remediation aligned to the standard.
              7. Output a single JSON object that strictly conforms to the provided JSON Schema.
              8. Do not include markdown, code fences, or extra keys.
              9. Be concise, objective, and deterministic.
              CORPORATE STANDARDS:
              ${variable.corporateStandard}
        - to:
            uri: "openai:chat-completion"
            parameters:
              temperature: 0.15
              jsonSchema: "resource:classpath:privacy-audit.schema.json"
        - log:
            message: "${body}"
        - to:
            uri: "json-validator:privacy-audit.schema.json"
```

Grounded pipelines (contextual integrity)

One of the most dangerous trends of 2025–2026 is the "text-to-SQL" agent: giving a model direct access to your database schema and hoping it writes safe queries. It's a security nightmare waiting to happen.

Grounded pipelines take a stricter approach. The goal is for the model to define intent and parameters rather than execute actions.

Instead of asking an agent to figure it out, we decompose the task into three distinct phases. We treat the LLM not as a decision maker, but as two separate components in a standard Camel route: a parser at the start and a synthesizer at the end. The AI never touches the database directly. It never executes code. It sits safely on the perimeter, leaving the core integration logic strictly deterministic.

- The parser (AI): Converts unstructured user text into strict, validatable JSON. It identifies the intent and parameters, but performs no actions.

- The executor (system): The "air gap." Camel runs the actual logic (SQL, APIs, file I/O) using the trusted JSON parameters. The AI is completely removed from this step and it cannot improvise here.

- The synthesizer (AI): Receives the raw system data and transforms it into a clean natural language response. It reports only what the executor provides.
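The parser's output is useful only if it is checked before the executor trusts it. The following is a minimal Python sketch of that gate. It assumes the field names from the phase 1 output shown below (`category`, `brand`, `inStockOnly`); the category list and the required/optional split are hypothetical. In a Camel route, you could express the same contract as a JSON Schema with `additionalProperties: false` and enforce it with the `json-validator` component.

```python
import json

# Hypothetical phase 1 contract: field -> (expected type, required?).
FIELDS = {"category": (str, True), "brand": (str, False), "inStockOnly": (bool, True)}
ALLOWED_CATEGORIES = {"MONITOR", "LAPTOP", "KEYBOARD"}  # hypothetical


def parse_intent(raw_json: str) -> dict:
    """Phase 1 gate: turn model output into trusted parameters or fail loudly."""
    intent = json.loads(raw_json)
    if not isinstance(intent, dict):
        raise ValueError("intent must be a JSON object")
    unknown = set(intent) - set(FIELDS)
    if unknown:
        raise ValueError(f"unexpected keys: {sorted(unknown)}")
    for key, (expected_type, required) in FIELDS.items():
        value = intent.get(key)
        if value is None:
            if required:
                raise ValueError(f"missing field: {key}")
            continue
        # Exact type check on each value (JSON true/false must arrive as bool).
        if type(value) is not expected_type:
            raise ValueError(f"{key} must be {expected_type.__name__}")
    if intent["category"] not in ALLOWED_CATEGORIES:
        raise ValueError(f"unknown category: {intent['category']!r}")
    return intent


print(parse_intent('{"category": "MONITOR", "brand": "Dell", "inStockOnly": true}'))
```

Anything the model adds beyond the contract, such as an extra `sql` key, is rejected rather than passed along.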

Use cases: Zero-trust retrieval, "air-gapped" execution, and sanitized querying.

"Air-gapped" database querying

The goal: Turn conversation into database constraints.

Giving an LLM direct SQL access is a major security risk. This pattern separates intent from execution. The LLM defines what to search for (filters, limits, categories), but your trusted code handles the actual database connection, effectively "air-gapping" your sensitive data and logic from the model's unpredictability.
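The executor is where the "air gap" lives. Trusted code owns the SQL text, and the model's values are only ever bound as parameters. The following is a minimal Python sketch using the standard library's `sqlite3` module. The `items` table, the `brand` and `category` columns, and the default limit are hypothetical; the other columns are modeled on the sample row shown below. In the post's setup, Camel runs this step rather than Python.

```python
import sqlite3


def find_items(conn: sqlite3.Connection, intent: dict, limit: int = 50) -> list:
    """Phase 2: run a fixed, parameterized query built from validated intent.

    The SQL text is chosen by trusted code; the model's values are only
    bound as parameters, never concatenated into the statement.
    """
    sql = "SELECT item_id, name, price, stock_qty FROM items WHERE category = ?"
    params = [intent["category"]]
    if intent.get("brand"):
        sql += " AND brand = ?"
        params.append(intent["brand"])
    if intent.get("inStockOnly"):
        sql += " AND stock_qty > 0"
    sql += " ORDER BY price LIMIT ?"
    params.append(limit)
    return conn.execute(sql, params).fetchall()


# Hypothetical in-memory table for demonstration.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE items (item_id INTEGER PRIMARY KEY, name TEXT, "
             "brand TEXT, category TEXT, price REAL, stock_qty INTEGER)")
conn.executemany("INSERT INTO items VALUES (?, ?, ?, ?, ?, ?)", [
    (5, "Dell UltraSharp", "Dell", "MONITOR", 350.00, 8),
    (6, "Dell Legacy Monitor", "Dell", "MONITOR", 120.00, 0),
    (7, "Acme View", "Acme", "MONITOR", 200.00, 3),
])

rows = find_items(conn, {"category": "MONITOR", "brand": "Dell", "inStockOnly": True})
print(rows)  # prints [(5, 'Dell UltraSharp', 350.0, 8)]
```

Even a brand value such as `Dell' OR '1'='1` simply matches nothing, because it is treated as data rather than SQL.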

Example execution:

Input

echo 'Show me only available Dell monitors' | camel run --source-dir=./Phase 1 output

{

"category": "MONITOR",

"brand": "Dell",

"inStockOnly": true

}Phase 2 action: Camel safely executes the parameterized SQL query.

- Returns 1 row

[{PRICE=350.00, ITEM_ID=5, STOCK_QTY=8, NAME=Dell UltraSharp}]

Phase 3 output:

Here is the available Dell monitor:

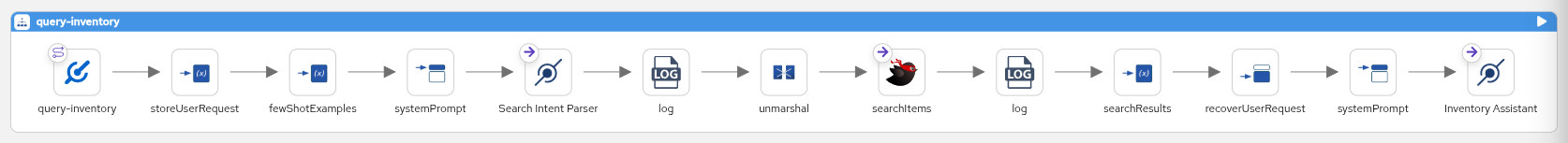

- **Dell UltraSharp** (Quantity: 8, Price: $350.00)You can use the Kaoto integration designer to visually build and manage these multi-phase routes (Figure 1).

Why this works

It turns "magic" into mechanics.

In a fully autonomous agent, if the user asks for a product that doesn't exist and the bot hallucinates one, you have no idea why. Did the SQL fail? Did the prompt fail?

With a grounded pipeline, the lines are drawn clearly.

- Data is wrong? Check phase 1 (the JSON parsing).

- Database is slow? Check phase 2 (the SQL query).

- Tone is rude? Check phase 3 (the final prompt).

By removing the autonomy, we gain total traceability. It might feel less exciting than a self-thinking robot, but it works.

Technical implementation

We use Apache Camel for a reason: It has over 15 years of production history connecting systems such as Salesforce, Kafka, and SAP.

Modern AI gateways are great for HTTP, but your enterprise data isn't just in APIs. It lives in databases, message queues, and legacy files. Camel bridges that gap. It lets you ingest a customer email via IMAP, enrich it with SQL data, and then pass it to the AI.

The runtime and configuration

You can start with the Camel Launcher command-line interface (CLI) for rapid prototyping, then export to Quarkus or Spring Boot Maven projects for production.

Crucially, this setup is vendor neutral. We use `camel-openai`, but that doesn't lock you into OpenAI. It supports any OpenAI-compatible provider, such as Red Hat AI Inference Server (vLLM), Red Hat OpenShift AI (KServe), Amazon Bedrock, Google Cloud Vertex AI, Mistral, Cloudflare AI Gateway, Groq, OpenRouter, NVIDIA NIM Microservices, NVIDIA NeMo Guardrails, local LLM inference servers (llama.cpp, Ollama, LM Studio, LocalAI), and more.

Configuration (`application.properties`):

```properties
camel.component.openai.apiKey={{env:OPENAI_API_KEY}}
camel.component.openai.baseUrl={{env:OPENAI_BASE_URL}}
camel.component.openai.model={{env:OPENAI_MODEL}}
```

Note

As of January 2026, for cost-effective local processing, consider models like Ministral-3-8B, Qwen3-VL-8B, or Granite-4.0-H-Tiny.

Developer tips

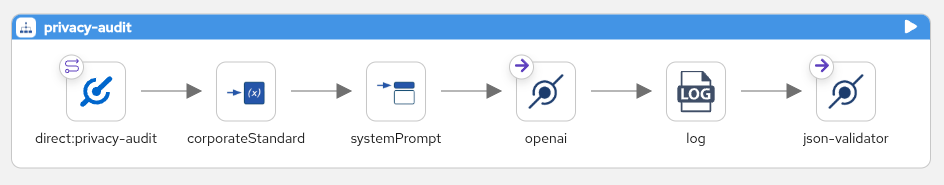

Don't just write YAML by hand. Use Kaoto to design your routes visually (Figure 2), or use an AI coding agent like Claude Code or Cursor.

Prompt tip:

Create a Camel 4.17 YAML route that acts as a 'folder watchdog' for summarization. It should monitor a directory for new text files. If a file is small (under 5KB), wrap its content in a user prompt and send it to the camel-openai component to generate a summary. Save the AI's response into an output folder. If the file is too big, just log a warning and ignore it.

Try it yourself

You can install and run all the patterns discussed in this post by following the instructions in the GitHub repository.

Conclusion

To succeed with enterprise AI, you need to stop treating the model like a colleague. As we've seen, the immediate value for the enterprise is in semantic processors that manage your data.

By decoupling the intelligence from the infrastructure using Apache Camel, we strip away the unpredictability. We replace the illusion of a digital employee with the reliability of a stateless function. Whether via generative parsing, semantic routing, or grounded pipelines, this approach transforms the LLM from a black box of high entropy into a standard, testable element of your pipeline.

Innovation doesn't always look like a futuristic agent performing magic. Often, innovation is a predictable, boring system that works every single time.